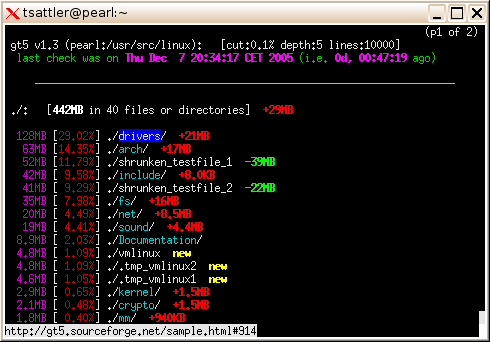

To find the 10 largest files (Linux/bash):

find . -type f -print0 | xargs -0 du | sort -n | tail -10 | cut -f2 | xargs -I{} du -sh {}

To find the 10 largest directories:

find . -type d -print0 | xargs -0 du | sort -n | tail -10 | cut -f2 | xargs -I{} du -sh {}

The only difference is the -type argument: f for files, d for directories.

Both commands handle file names that contain spaces and print human-readable sizes. The largest item is listed last. The argument to tail sets how many results you see (here, the 10 largest).
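Since the two commands differ only in the -type value and the tail count, they can be folded into one shell function. This is a minimal sketch, not a standard command: the function name largest and its three positional arguments are hypothetical and chosen for illustration. The pipeline inside is the same one shown above.

```shell
# largest TYPE [COUNT] [DIR]
#   TYPE  : f for files, d for directories (passed to find -type)
#   COUNT : how many results to show (default 10, passed to tail)
#   DIR   : where to start searching (default: current directory)
# The name "largest" and this argument order are assumptions for illustration.
largest() {
    local type="$1" count="${2:-10}" dir="${3:-.}"
    find "$dir" -type "$type" -print0 \
        | xargs -0 du \
        | sort -n \
        | tail -n "$count" \
        | cut -f2 \
        | xargs -I{} du -sh {}
}
```

For example, largest f 10 reproduces the first command, and largest d 5 /var would list the five largest directories under /var.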

Two techniques are used to handle spaces in file names. The find -print0 | xargs -0 pair separates names with NUL bytes instead of whitespace, and the second xargs, run with -I{}, treats each input line as one item instead of splitting on spaces.
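One caveat on the second technique: the final xargs -I{} stage reads newline-separated names, and GNU xargs still interprets quotes and backslashes in that mode, so a name containing an apostrophe or a newline can break it. A possible variant keeps NUL delimiters through the whole pipeline. This is only a sketch and it assumes GNU findutils and GNU coreutils 8.25 or later (for du -0, sort -z, tail -z and cut -z); it will not work with the BSD tools shipped on macOS. The function name largest_files_z is hypothetical.

```shell
# largest_files_z [COUNT] [DIR]
# NUL-delimited from start to finish, so names with spaces, quotes,
# tabs or newlines are passed through intact.
# Assumption: GNU find, xargs, du, sort, tail and cut (coreutils >= 8.25).
largest_files_z() {
    local count="${1:-10}" dir="${2:-.}"
    find "$dir" -type f -print0 \
        | xargs -0 du -0 \
        | sort -z -n \
        | tail -z -n "$count" \
        | cut -z -f2- \
        | xargs -0 du -sh --
}
```

Here cut uses -f2- rather than -f2 so that a tab inside a file name does not truncate it, and the last du receives all names in sorted order, so the largest file is still listed last.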

Example:

$ find . -type f -print0 | xargs -0 du | sort -n | tail -10 | cut -f2 | xargs -I{} du -sh {}

76M ./snapshots/projects/weekly.1/onthisday/onthisday.tar.gz

76M ./snapshots/projects/weekly.2/onthisday/onthisday.tar.gz

76M ./snapshots/projects/weekly.3/onthisday/onthisday.tar.gz

76M ./tmp/projects/onthisday/onthisday.tar.gz

114M ./Dropbox/snapshots/weekly.tgz

114M ./Dropbox/snapshots/daily.tgz

114M ./Dropbox/snapshots/monthly.tgz

117M ./Calibre Library/Robert Martin/cc.mobi

159M ./.local/share/Trash/files/funky chicken.mpg

346M ./Downloads/The Walking Dead S02E02 ... (dutch subs nl).avi

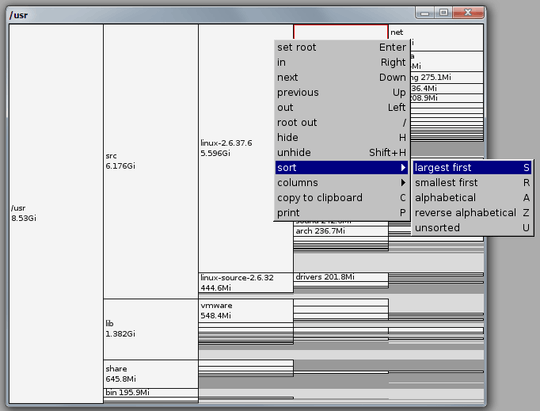

For Mac users, I just want to recommend a free program called Disk Inventory X. Download it here: http://www.derlien.com/ It's simple to use on Mac OS X.

– Nimitack – 2018-01-06T23:05:59.863