I'm having trouble mounting an HFS+ partition on Arch Linux.

When I run `sudo mount -t hfsplus /dev/sda2 /mnt/mac` I get this error:

mount: wrong fs type, bad option, bad superblock on /dev/sda2,

missing codepage or helper program, or other error

In some cases useful info is found in syslog - try

dmesg | tail or so.

Running `dmesg | tail` gives:

[ 6645.183965] cfg80211: Calling CRDA to update world regulatory domain

[ 6648.331525] cfg80211: Calling CRDA to update world regulatory domain

[ 6651.479107] cfg80211: Calling CRDA to update world regulatory domain

[ 6654.626663] cfg80211: Calling CRDA to update world regulatory domain

[ 6657.774207] cfg80211: Calling CRDA to update world regulatory domain

[ 6660.889864] cfg80211: Calling CRDA to update world regulatory domain

[ 6664.007521] cfg80211: Exceeded CRDA call max attempts. Not calling CRDA

[ 6857.870580] perf interrupt took too long (2503 > 2495), lowering kernel.perf_event_max_sample_rate to 50100

[11199.621246] hfsplus: invalid secondary volume header

[11199.621251] hfsplus: unable to find HFS+ superblock

Is there a way to mount this partition?

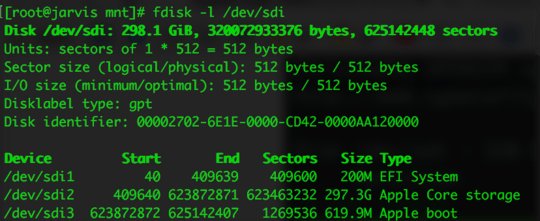

EDIT:

Using `sudo mount -t hfsplus -o ro,loop,offset=409640,sizelimit=879631488 /dev/sda2 /mnt/mac` gets rid of the `hfsplus: invalid secondary volume header` message in `dmesg | tail`.


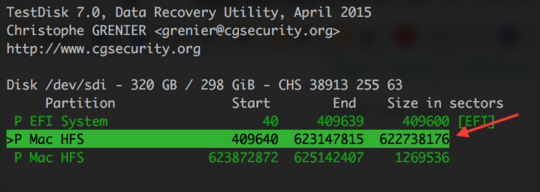

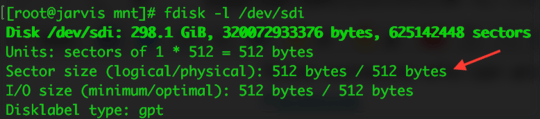

From user edmonde: This recipe worked great for me, but I had to tweak it using the logical sector size (the first of two numbers, in my case 512 versus 4096) as opposed to the physical sector size to calculate the total volume size. I'm not sure why but it worked great. – fixer1234 – 2016-07-06T18:51:44.007

This fixed my problem. Other resources suggested using an `offset` parameter, which didn't work when combined with this, but using only `sizelimit` set to the number of bytes (bytes * sectors) worked like a charm, even for non-CoreStorage partitions. – cdeszaq – 2016-10-16T16:58:35.970

This doesn't work for me. I get `mount failed: Unknown error -1` and nothing in `dmesg`. `hfsplus` is definitely loaded. – Dan – 2017-05-09T16:19:18.580

+1 fixed by using logical sector size – Jake – 2017-10-08T05:42:30.047

This solution was working fine for me until an OS X update stopped it from working. Has anyone else had this issue? Any advice? – Vik – 2019-04-15T00:05:48.750

Same problem as @Vik; not working here with partitions created under mac osx Mojave. The analysis performed by testdisk does not seem to make sense. – Freddo – 2019-11-18T21:30:47.640
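
The size calculation described in the comments above (sector count multiplied by the logical sector size) can be sketched as follows. This is only an illustration: the helper name `sizelimit_bytes` and the example sector count are made up, and the sector count is assumed to come from whatever tool you used to inspect the volume. The script prints the resulting `mount` command instead of running it.

```shell
#!/bin/sh
# Hypothetical helper: volume size in bytes = sector count * logical sector size.
sizelimit_bytes() {
    sectors=$1       # number of sectors in the volume (assumed known)
    sector_size=$2   # logical sector size in bytes, e.g. 512
    echo $(( sectors * sector_size ))
}

# Example values are invented for illustration only.
# The logical sector size of a disk can be read with:
#   sudo blockdev --getss /dev/sda
limit=$(sizelimit_bytes 1718030 512)

# Print the command for review rather than executing it.
echo "sudo mount -t hfsplus -o ro,sizelimit=$limit /dev/sda2 /mnt/mac"
```

If the disk reports 512 logical and 4096 physical, pass 512 here, as the comments from edmonde and Jake suggest.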