0

I have been recently going through the hyper threading technology of Pentium 4. The number of pipeline stages is high in P4 and is said that it will increase the speed of clock rate. How is that possible?

0

I have been recently going through the hyper threading technology of Pentium 4. The number of pipeline stages is high in P4 and is said that it will increase the speed of clock rate. How is that possible?

4

To answer this question we need to understand a few things about basic digital electronics.

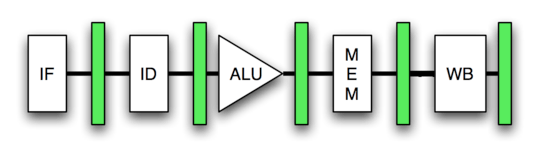

Lets start by taking a look at a typical pipeline.

As we can see that each pipeline stage is followed by a storage register (the green rectangles) which holds the output of each stage. Now each stage of a pipeline consists of a combinational circuit. Combinational circuits are basically combination of various logic gates like NAND, NOR, etc. Each of these logic gates has some latency, i.e.- when you provide some input it takes some time to produce the output (few nanoseconds to picoseconds). So the maximum latency of each stage depends on the longest sequence of this logic gates present in that stage.

Now for a stage to produce a valid output on providing some input we need to provide it with sufficient clock period. So for a stage with large complex combinational circuit the latency is high and hence it will require a long clock period and hence slower clock rate. Similarly for a stage with short and simple combinational circuit the latency is low and hence it will require a shorter clock period and hence higher clock rate.

This is the reason behind, longer the pipeline, higher the processor clock rate. As in a longer pipeline, the stages are divided into more and hence smaller sub-stages, which makes each stage in the pipeline simpler and combinational circuit shorter hence reducing the latency of each stage. This in turn makes room for higher clock rate.

0

its little brief to write up about it ,To understand why different CPUs at different clock speeds perform in different ways, let me brief you how the CPU processes instructions.

From tech Website

A CPU processes instructions in an assembly-line manner, with different instructions existing in different stages of completion as they move down the line. For instance, each instruction on the original Pentium passes through the following, five-stage pipeline:

Prefetch/Fetch: Instructions are fetched from the instruction cache and aligned for decoding. Decode1: Instructions are decoded into the Pentium's internal instruction format. Branch prediction also takes place at this stage. Decode2: Same as above. Also, address computations take place at this stage. Execute: The integer hardware executes the instruction. Write-back: The results of the computation are written back to the register file. An instruction enters the pipeline at stage 1, and leaves it at stage 5. Since the instruction stream that flows into the CPU's front-end is an ordered sequence of instructions that are to be executed one after the other, it makes sense to feed them into the pipeline one after the other. When the pipeline is full, there is an instruction at each stage.

Each pipeline stage takes one clock cycle to complete, so the smaller the clock cycle, the more instructions per second the CPU can push through its pipeline. This is why, in general, a faster clockspeed means more instructions per second and therefore higher performance.

Most modern processors, however, divide their pipelines up into many more, smaller stages than the Pentium. The later iterations of the Pentium 4 had some 21 stages in their pipelines. This 21-stage pipeline accomplished the same basic steps (with some important additions for instruction reordering) as the Pentium pipeline above, but it sliced each stage into many small stages. Because each pipeline stage was smaller and took less time, the Pentium 4's clock cycles were much shorter and its clockspeed much higher.

In a nutshell, the Pentium 4 took many more clock cycles to do the same amount of work as the original Pentium, so its clockspeed was much higher for the equivalent amount of work. This is one core reason why there's little point in comparing clockspeeds across different processor architectures and families—the amount of work done per clock cycle is different for each architecture, so the relationship between clockspeed and performance (measured in instructions per second) is different.

An real world example from quantum thread:

Lets take A VERY simple processor. It is just a programmable calculator - instructions available are add a, b, c and subtract a, b, c. (a, b, c are numbers in memory. no way to load these numbers from constants ). One way to do it would be to do the following all in one clock cycle:

- read the instruction and figure out what we're going to do

- read memory location a

- read memory location b

- perform the add or subtract

- write the result to location c

With this setup, the IPC is exactly 1, because one instruction takes one (VERY long) clock cycle. Now, let's improve this design. We're going to have 5 clock cycles per instruction, and each doing one of the 5 things above. So, on cycle 1, we decide what to do, on cycle 2, we read a, on cycle 3, we read b, and so on. Note that the IPC will be 1/5th. The thing you have to remember is, ideally each of those steps takes 1/5th of the time, so the end result is the SAME performance.

A more advanced implementation is a pipelined processor - multicycle like the one described, but we do more than one thing at a time: 1. read instruction i 2. read a (for instruction i), and read instruction ii 3. read b (for instruction i), a (for instruction ii), and instruction iii 4. do the op for instruction i, read b for instruction ii, read a for instruction iii, and read instruction iv 5. write c for instruction i, operate for ii, read b for iii, read a for iv, and read the instruction v 6. store c for ii, operate for iii, read b for iv, read a for v, and read vi

(note that this requires the ability to do 3 or 4 memory accesses in a cycle, which I didn't have in the other 2, but for the sake of understanding the concepts this can be ignored)

A picture would really help, but I don't have one offhand. To see how this performs, note that a given instruction takes 5 cycles from start to finish, but at any time, multiple instructions are being processed. Also, every single cycle, one instruction is completed (well, from the 5th cycle forward). So, the IPC is 1, even though each individual instruction takes a bunch of cycles, and the actual performance of the machine is 5 times the performance of the original, since the clock is 5 times faster.

Now, a modern processor is MUCH more advanced than this - there are multiple pipelines working on multiple instructions, instructions are executed out of order, etc., so you can't just do a simple analysis like this to see how an Athlon will perform vs. a P4. In general, a longer pipeline lets you do less in each stage, so you can clock the design faster. The P4's 20 stage pipeline lets it run at up to 3ghz currently, whereas the shorter pipeline of the Athlon results in more work per clock, and therefore a slower max clock speed

If you were looking for hardware information,have a reason over here

but if I have the typical 5 stages which last several ps (let's say the highest of them is 300ps) and I divide this stages till the point each one last 1ps, why does the clock rate increase? Because I need more clock cycles to execute the same thing? – Angelixus – 2018-10-28T12:49:16.153