2

Why is it generally considered that RAID 5 systems are not suitable for larger disk sizes? Is the same true for RAID 6?

Reference: http://www.zdnet.com/article/why-raid-5-stops-working-in-2009/

4

The reason why RAID 5 might not be reliable for large disk sizes is that, statistically, storage devices (even when they are working normally) are not immune to errors. This is termed UBE (sometimes URE), for Unrecoverable Bit Error (or Unrecoverable Read Error) rate. It is quoted per number of bits read, but each such error makes a whole sector unreadable. For consumer rotational hard disk drives, this metric is normally specified at 10^-14, meaning that you can expect one failed sector read per 10^14 bits (about 12.5 TB) read. (Because of how exponents work, 10^-14 is the same thing as one per 10^14.)

10^14 bits might sound like a big number, but it is only two or three full read passes over a modern large (say 4-6 TB) drive. With RAID 5, once one drive has failed there is no redundancy left, so any error is uncorrectable: if anything cannot be read from any of the other drives, the controller (whether hardware or software) has no way to reconstruct it. At that point, your array breaks down.
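To put rough numbers on the "two or three full read passes" claim, here is a small Python sketch. It assumes the datasheet figure really is 1 URE per 10^14 bits read and treats errors as independent; both are idealizations, and real drives are often better than their quoted worst case.

```python
import math

# Assumption: datasheet URE spec of 1 unrecoverable error per 10^14 bits read,
# with errors treated as independent, evenly spread events.
URE_PER_BIT = 1e-14
bytes_per_ure = 1 / URE_PER_BIT / 8      # expected bytes read per URE (~12.5 TB)

for tb in (4, 6):
    drive_bytes = tb * 1e12              # vendors use decimal terabytes
    passes = bytes_per_ure / drive_bytes # full-drive reads per expected URE
    # P(one full read of the drive hits no URE) = (1 - r)^bits
    p_clean = math.exp(drive_bytes * 8 * math.log1p(-URE_PER_BIT))
    print(f"{tb} TB: ~{passes:.1f} full passes per expected URE, "
          f"P(clean full read) = {p_clean:.0%}")
```

`log1p` is used because 1 - 10^-14 is too close to 1 for naive floating-point exponentiation to be trustworthy.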

What RAID 6 does is add a second redundancy disk to the equation. This means that even if one drive fails entirely, RAID 6 can tolerate a read error on one of the other drives at the same time and still reconstruct your data. This dramatically reduces the probability of a single problem making your data unavailable, although it doesn't eliminate it: with one drive failed, instead of one additional drive needing to develop a problem for data to become unrecoverable, two additional drives now need to develop a problem in the same stripe.
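The gap between "any single error on a surviving drive is fatal" and "two errors are needed at the same place on two surviving drives" can be estimated with a rough Python sketch. Everything here is a hypothetical setup, not something from the answer: six 6 TB drives with one already failed, 4096-byte sectors, 1 URE per 10^14 bits, fully independent errors, and a stripe modelled as one sector per drive.

```python
import math

# Hypothetical setup: six 6 TB drives, one failed (5 survivors), 4096-byte
# sectors, 1 URE per 10^14 bits read, independent errors.
# A "stripe" is modelled as one sector from each surviving drive.
SECTOR_BITS = 4096 * 8
URE_PER_BIT = 1e-14
SURVIVORS = 5
DRIVE_BYTES = 6e12

q = -math.expm1(SECTOR_BITS * math.log1p(-URE_PER_BIT))  # P(a sector read fails)
stripes = DRIVE_BYTES * 8 / SECTOR_BITS

def p_any(events: float, p: float) -> float:
    """P(at least one success in `events` independent trials of probability p)."""
    return -math.expm1(events * math.log1p(-p))

# Same six drives as RAID 5: any unreadable sector on any survivor is fatal.
raid5 = p_any(stripes * SURVIVORS, q)

# As RAID 6: a stripe is lost only if 2+ survivors fail in that stripe.
# Sum the binomial tail directly; 1 - P(0) - P(1) would cancel to noise here.
p_stripe = sum(math.comb(SURVIVORS, k) * q**k * (1 - q) ** (SURVIVORS - k)
               for k in range(2, SURVIVORS + 1))
raid6 = p_any(stripes, p_stripe)

print(f"RAID 5: {raid5:.1%}   RAID 6: {raid6:.1e}")
```

Under these assumptions the RAID 5 rebuild almost certainly hits an error while the RAID 6 rebuild almost never loses a stripe. The independence assumption is exactly what the next paragraph calls into question, so treat the RAID 6 figure as a best case.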

Of course, that 10^-14 figure is statistical, in the same way that rotational hard drives commonly have a quoted statistical AFR (Annual Failure Rate) on the order of 2.5%. Taken at face value, that would mean the average drive lasts around 40 years, which is clearly not the case. Errors tend to happen in batches: you might be able to read 10^16 or 10^17 bytes without any sign of a problem, and then get dozens or hundreds of read errors in short order.
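The "40 years" figure comes from reading the AFR naively, as if drives failed at a constant rate forever. A tiny Python sketch of that naive reading, using the 2.5% figure quoted above:

```python
# Naive constant-rate reading of a quoted AFR. Real drives do not behave
# like this (failures cluster early and late in life), which is the point.
afr = 0.025                          # 2.5% per year, as quoted above
naive_mean_lifetime = 1 / afr        # years, if failures were memoryless
p_survive_5y = (1 - afr) ** 5        # chance one drive survives 5 years
print(f"naive mean lifetime: {naive_mean_lifetime:.0f} years")
print(f"P(one drive survives 5 years): {p_survive_5y:.1%}")
```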

RAID actually makes that latter problem worse by exposing the drives to very similar workloads and environments (temperature, vibration, power impurities, etc.). The situation is worsened further by the fact that many RAID arrays are commissioned and set up as a group, which means that by the time the first failure happens, all of the drives in the array will have been active for very nearly the same amount of time. All this makes correlated failures far more likely: when one drive fails, it is quite likely that other drives are marginal and may fail soon. The stress of the full read pass, combined with normal user activity, may alone be enough to push another drive into failure. As we saw, with RAID 5 and one drive nonfunctional, any read error anywhere else causes a permanent error and is highly likely to simply bring your array to a halt. With RAID 6, you at least have some margin for further errors during the resilvering (rebuild) process.

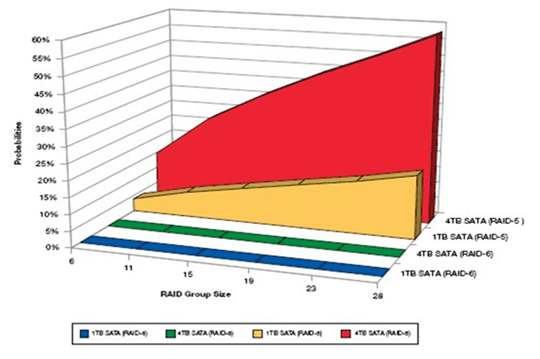

Because the UBE is stated per amount of data read, and the amount of data read tends to correlate fairly well with how much can be stored, what used to be a fine setup with a set of 100 MB drives might be marginal with a set of 1 TB drives and completely unrealistic with a set of 4-6 TB drives, even if the physical number of drives remains the same. (In other words, ten 100 MB drives vs. ten 6 TB drives.)
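The ten-drive comparison can be sketched in Python. This assumes a single RAID 5 of ten drives, one of which has failed, a rebuild that must read every bit of the nine survivors, and the same idealized 1 URE per 10^14 bits with independent errors:

```python
import math

URE_PER_BIT = 1e-14  # assumed datasheet rate: 1 URE per 10^14 bits read

def p_rebuild_hits_ure(drives: int, drive_bytes: float) -> float:
    """RAID 5, one drive failed: P(at least one URE while reading all survivors)."""
    bits_read = (drives - 1) * drive_bytes * 8
    return -math.expm1(bits_read * math.log1p(-URE_PER_BIT))

# Same drive count, very different capacities.
small = p_rebuild_hits_ure(10, 100e6)   # ten 100 MB drives
large = p_rebuild_hits_ure(10, 6e12)    # ten 6 TB drives
print(f"10 x 100 MB: {small:.4%}   10 x 6 TB: {large:.1%}")
```

The drive count is identical in both cases; only the amount of data the rebuild has to read changes.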

That is why RAID 5 is generally considered not adequate for arrays of common sizes today, and depending on specific needs RAID 6 or 1+0 is usually encouraged.

And that's not even touching on the detail that RAID is not a backup.

2

See "Disk RAID and IOPS calculator" and "An explanation of IOPS and latency".

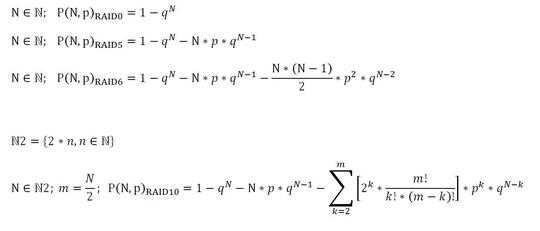

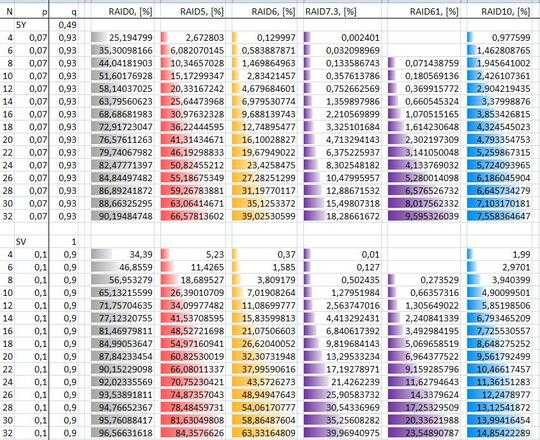

You can calculate the probability of RAID failure using formulas.

This assumes that every HDD has the same probability of failure.

For clarity, the table shows the probability of failure of different RAID levels over 5 years of operation and beyond.

RAID DP (Synology) has the same probability of failure as RAID 6.

Here p is the drive reliability, taken from Google's data-center study.
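The formulas themselves are only in the images, but the usual model behind "every HDD has the same probability of failure" is binomial: an array that tolerates k failed drives is lost when more than k of its n drives fail. A minimal Python sketch, assuming independent drives, a per-drive failure probability p for the period (one minus the reliability), and no drive replaced during that period. The value p = 0.15 is a placeholder, not a figure from Google's study.

```python
from math import comb

def p_array_lost(n: int, tolerated: int, p: float) -> float:
    """P(more than `tolerated` of `n` independent drives fail), each with probability p."""
    survive = sum(comb(n, k) * p**k * (1 - p) ** (n - k) for k in range(tolerated + 1))
    return 1 - survive

# Hypothetical inputs: 6 drives, 15% failure probability per drive over the period.
n, p = 6, 0.15
for level, tolerated in [("RAID 0", 0), ("RAID 5", 1), ("RAID 6", 2)]:
    print(f"{level}: {p_array_lost(n, tolerated, p):.2%}")
```

Because failed drives are never replaced in this model, it overstates the risk for arrays that are rebuilt promptly, and because drives are independent, it understates correlated failures.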

The probability that a RAID 5 recovery (rebuild) fails, depending on capacity.

1

Answer to your first question: URE, Unrecoverable Read Error. The disk may be OK, but if data on it cannot be read, the rebuild cannot complete, which in the end is the same as a failed disk as far as the rebuild is concerned. I thought the article gave the proper insight on a basic level.

Answer to your second question: the same is true for RAID 6, but for larger arrays. I think the point was that if you are concerned about a URE in a 12TB array because a spec says you will get 1 URE for every 12TB read, then you need an extra redundant disk for every additional 12TB of size to handle all the UREs you should expect to encounter.

That is, a RAID 5 rebuild of 12TB has the same chance of failure (at a 10^14 URE rate) as a rebuild of a 24TB RAID 6 array. Again, this is extrapolating from the article.

0

The reason is recovery time. Starting from about 2TB per disk, recovery can take a very long time, and the probability of another failure during the recovery period increases a lot. With RAID 6 you can recover from the failure of two disks, but as disk sizes grow, RAID 6 runs into the same problem.
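A rough lower bound on recovery time is the time needed to write the whole replacement disk. The Python sketch below assumes the rebuild is limited by a sustained write rate; the 150 MB/s and 50 MB/s figures are hypothetical stand-ins for an idle array and one still serving users, not measurements.

```python
def rebuild_hours(drive_tb: float, mb_per_s: float) -> float:
    """Hours to write one replacement drive at a sustained rate (decimal units)."""
    return drive_tb * 1e12 / (mb_per_s * 1e6) / 3600

for rate in (150, 50):  # hypothetical rates: idle array vs. array under load
    print(f"{rate} MB/s: 2 TB in {rebuild_hours(2, rate):.1f} h, "
          f"8 TB in {rebuild_hours(8, rate):.1f} h")
```

Real rebuilds are often slower than this, since parity has to be computed from all the other disks and the array may throttle the rebuild to keep serving requests.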

0

The UBE reasoning outlined in the other answers is fine enough, but a greater concern is the risk of a second drive failure during the rebuild.

Remember that while the array is being rebuilt the disks are operating at 100% load, and given the size of modern disks the rebuild can take days. Unless the disks are enterprise grade, they're not really going to like this. This is the primary reason RAID5 is not suitable for larger disk sizes.
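As a baseline, here is a Python sketch of the chance that another drive fails during a multi-day rebuild. It assumes independent drives failing at a constant rate derived from a 2.5% AFR and a hypothetical 8-drive RAID 5 (7 survivors). It deliberately ignores the extra stress of the rebuild and same-batch effects, so the real risk should be expected to be higher than this.

```python
import math

def p_second_failure(survivors: int, afr: float, rebuild_days: float) -> float:
    """P(at least one surviving drive fails during the rebuild window).

    Assumes independent drives with a constant failure rate implied by the AFR;
    this ignores rebuild stress and correlated (same-batch) failures.
    """
    rate_per_day = -math.log1p(-afr) / 365       # hazard implied by the AFR
    return -math.expm1(-rate_per_day * rebuild_days * survivors)

for days in (1, 3, 7):
    print(f"{days} day(s): {p_second_failure(7, 0.025, days):.3%}")
```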

You must also consider that when people assemble disk arrays, they usually order the disks from a single vendor. This means that all the disks in the array will be from the same manufacturing batch. If it's a bad batch, this can mean reduced lifespans, reduced reliability, or even multiple drives failing within a short time period. Even if it's not a bad batch, if the drives begin reaching the end of their lifespan, there's an increased chance that multiple drives will fail within a short time of each other. It's a recommended practice when building an array to split the order up over several vendors, or to ask a single vendor to send you disks from different batches if possible. This way the drives are more likely to die at different times, and you're unlikely to get multiple drives from a bad batch. Recalls do happen.

Look into RAIDZ. It's great. Specifically, look at RAIDZ3 and nested RAIDZ. Synology has something called Synology Hybrid RAID (SHR), which has some really nice benefits. You can upgrade the drive sizes in your array just by replacing one drive at a time and waiting for the rebuilds to complete, for example.

RAIDZ is nice, but it's 100% tied to ZFS and thus only available on platforms where ZFS is available, which limits you to some flavors of *nix. Particularly, Windows is completely out. While you can run ZFS without using RAIDZ, you cannot use RAIDZ without ZFS. (Also, did you read my answer? I mention most of the things you discuss in yours.) – a CVn – 2015-05-09T17:02:48.513

When do you think that article was written if it was predicting problems were going to occur in 2009? – Jason Aller – 2015-05-09T15:24:35.213

@JasonAller The article was written in mid-2007, which it says right along with the author's byline. While the headline is slightly on the dramatic side (RAID 5 hardly "stopped working"), the article does make a valid point. – a CVn – 2015-05-09T17:05:08.377