Overview

Jeff Shattock's answer is correct that this is equivalent (or isomorphic, as mathematicians write) to a combinatorial optimization problem, but it's equivalent to the 1-dimensional bin packing problem, not the knapsack problem.

Lucky for you, I have some code to share that will solve this problem for you, or anyone else, with access to a Windows computer with at least version 3.5 of the .NET Framework installed.

A Rough Solution

First, download and install LINQPad.

Second, download the LINQPad query I just wrote – here's the linq (ha) to the raw file. Save it as a .linq file and open it in LINQPad.

Change the parameters:

Here's the part in the LINQPad query code you should change:

int binSizeMb = 4476; // This is the (floor of the) total size of a DVD+R reported by CDBurnerXP.

string rootFileFolderPath = @"F:\2006 - Polyester Pimpstrap Intergalactic Extravaganza multicam";

Change binSizeMb to the size of your 'bin', e.g. CD, DVD, ex. int binSizeMb = 650; for a CD.

Note – the binSizeMb value is interpreted as what's sometimes referred to as a mebibyte. Contrary to my childhood, when all byte multiples were 'binary', sometimes 'MB' now refers to a 'decimal megabyte' or exactly 1,000,000 bytes, as opposed to the 1,048,576 bytes of a mebibyte (MiB), which is used in my code. If you wish to change this, change the line const int bytesPerMb = 1048576; in the code to const int bytesPerMb = 1000000;.

Change rootFileFolderPath to the full path of the folder containing the files you wish to 'pack into bins', ex. string rootFileFolderPath = @"C:\MySecretBinFilesFolder";.

Run the query by either hitting F5 or clicking the Execute button at the top left of the query tab.

Results

The query code will enumerate all of the files in the rootFileFolderPath folder, recursively, meaning it will include files in all of the subfolders too.

Then it will create 'bins' for the files such that the total size of all the files in each bin is less than or equal to the bin size specified.

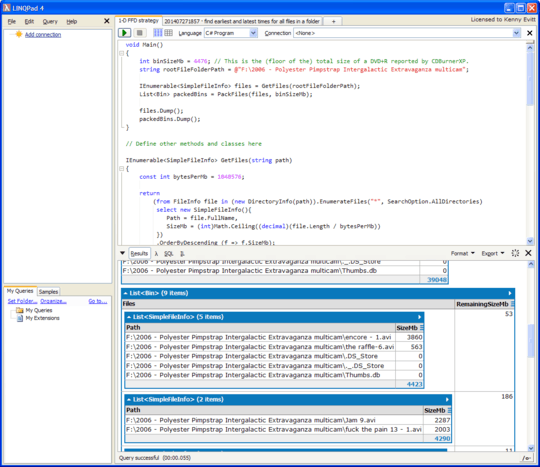

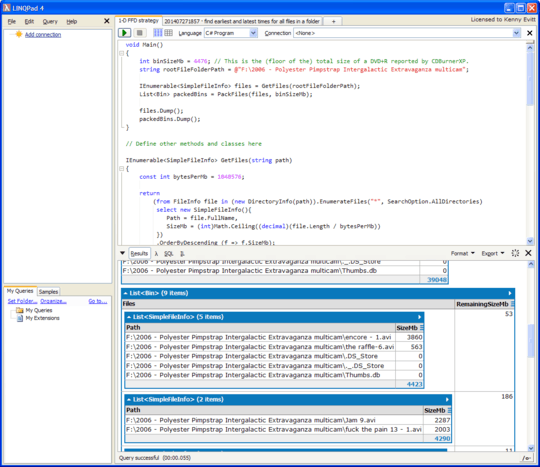

In the LINQPad results pane you'll see two lists.

The first list is of all the files it found, listed in decreasing order by size.

The second list is the bins created by 'packing the files', with a list of the files and their sizes, as well as the remaining size of the bin.

Here's a screenshot showing the second list and the first two bins created:

Cursory Analysis

According to Wikipedia, the algorithm I used – the First Fit Decreasing (FFD) strategy – shouldn't be too bad; Wikipedia states:

In 2007, it was proven that the bound 11/9 OPT + 6/9 for FFD is tight.

'OPT' refers to the optimal strategy (as something potentially unreachable, not any particular actual strategy).

Based on my somewhat fuzzy memories of the mathematical terms involved, this should mean that the FFD strategy should, at worst, pack items into ~1.22 times the number of bins that an optimal strategy would. So, this strategy might pack items into 5 bins instead of 4. I suspect that it's performance is likely to be very close to optimal except for specific 'pathological' item sizes.

The same Wikipedia article also states that there is an "exact algorithm". I may decide to implement that too. I'll have to read the paper that describes the algorithm first.

Just felt that this was a good page for anyone searching: http://www.howtogeek.com/76264/how-to-burn-data-across-multiple-dvd-or-cd-discs/

– Nav – 2013-08-28T04:48:41.9631No, I'm not looking for compression & splitting. I want to distribute the files natively (filesystem) so that each disk may be used directly. – Alex R – 2009-12-19T21:01:30.620