A primary motivation for the PNG format was to create a replacement for GIF that was not only free but also an improvement over it in essentially all respects. As a result, PNG compression is completely lossless - that is, the original image data can be reconstructed exactly, bit for bit - just as in GIF and most forms of TIFF.

PNG uses a 2-stage compression process:

- Pre-compression: filtering (prediction)

- Compression: DEFLATE (see Wikipedia)

The precompression step is called filtering, which is a method of reversibly transforming the image data so that the main compression engine can operate more efficiently.

As a simple example, consider a sequence of bytes increasing uniformly from 1 to 255:

1, 2, 3, 4, 5, ..., 255

Since there is no repetition in the sequence, it compresses either very poorly or not at all. But a trivial modification of the sequence - namely, leaving the first byte alone but replacing each subsequent byte by the difference between it and its predecessor - transforms the sequence into an extremely compressible one:

1, 1, 1, 1, 1, ..., 1

The above transformation is lossless, since no bytes were omitted, and is entirely reversible.

The compressed size of this series will be much reduced, but the original series can still be perfectly reconstituted.
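To make this concrete, here is a minimal Python sketch of the example above. It uses the standard `zlib` module as a stand-in for PNG's DEFLATE stage. The helper names `delta_encode` and `delta_decode` are made up for illustration, and real PNG filters work row by row rather than on one flat byte string.

```python
import zlib

def delta_encode(data: bytes) -> bytes:
    """Keep the first byte; replace each later byte by its difference
    from its predecessor (modulo 256, so the result is still a byte)."""
    out = bytearray(data[:1])
    for i in range(1, len(data)):
        out.append((data[i] - data[i - 1]) % 256)
    return bytes(out)

def delta_decode(data: bytes) -> bytes:
    """Reverse delta_encode by adding each difference to the byte rebuilt before it."""
    out = bytearray(data[:1])
    for i in range(1, len(data)):
        out.append((out[i - 1] + data[i]) % 256)
    return bytes(out)

original = bytes(range(1, 256))      # 1, 2, 3, ..., 255
filtered = delta_encode(original)    # 1, 1, 1, ..., 1

# Compare compressed sizes before and after the transformation.
print(len(zlib.compress(original, 9)), len(zlib.compress(filtered, 9)))

# The transformation is reversible: nothing was lost.
assert delta_decode(filtered) == original
```

The exact sizes printed depend on the zlib build, but the filtered sequence should come out far smaller than the unfiltered one.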

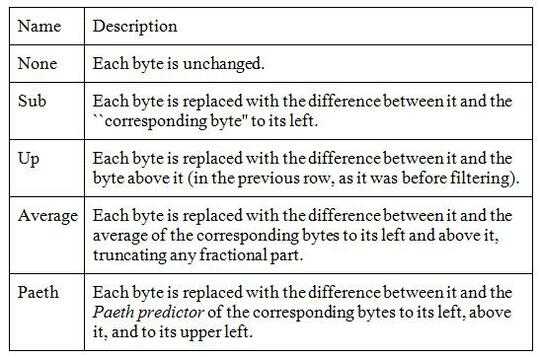

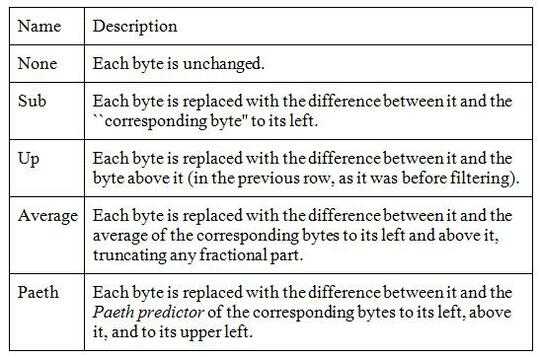

Actual image data is rarely that perfect, but filtering does improve compression in grayscale and truecolor images, and it can help on some palette images as well. PNG supports five types of filters (None, Sub, Up, Average, and Paeth), and an encoder may choose to use a different filter for each row of pixels in the image.

The algorithm works on bytes, but for multi-byte pixels (e.g., 24-bit RGB or 64-bit RGBA) only corresponding bytes are compared, meaning the red components of the pixel colors are handled separately from the green and blue components.
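As an illustration of "corresponding bytes", here is a sketch of PNG's Sub filter applied to one row of 24-bit RGB pixels. Each byte is predicted from the byte `bpp` positions to its left, so red is compared with red, green with green, and blue with blue. The function names and the sample row are assumptions for this example, not taken from any library.

```python
def sub_filter(row: bytes, bpp: int) -> bytes:
    """Subtract from each byte the corresponding byte of the pixel to its left.
    bpp is the number of bytes per pixel (3 for 24-bit RGB)."""
    return bytes(
        (row[i] - (row[i - bpp] if i >= bpp else 0)) % 256
        for i in range(len(row))
    )

def sub_unfilter(filtered: bytes, bpp: int) -> bytes:
    """Reverse sub_filter, rebuilding the row from left to right."""
    out = bytearray(len(filtered))
    for i, value in enumerate(filtered):
        left = out[i - bpp] if i >= bpp else 0
        out[i] = (value + left) % 256
    return bytes(out)

# Three RGB pixels: (10, 200, 50), (12, 198, 50), (14, 196, 50)
row = bytes([10, 200, 50, 12, 198, 50, 14, 196, 50])
filtered_row = sub_filter(row, bpp=3)

print(list(filtered_row))  # [10, 200, 50, 2, 254, 0, 2, 254, 0]
assert sub_unfilter(filtered_row, bpp=3) == row
```

The first pixel is stored unchanged, and the differences wrap around modulo 256, which is why a step of -2 in the green channel shows up as 254.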

To choose the best filter for each row, an encoder would need to test all possible combinations.

This is clearly impractical, as even a 20-row image would require testing over 95 trillion combinations (5^20, with five filter choices per row), where "testing" would involve filtering and compressing the entire image.
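The figure follows from having five independent filter choices for every row. A quick check in Python (the function name is just for illustration):

```python
FILTER_TYPES = 5  # PNG defines five row filters

def filter_combinations(rows: int) -> int:
    """Number of distinct per-row filter assignments for an image with this many rows."""
    return FILTER_TYPES ** rows

print(f"{filter_combinations(20):,}")  # 95,367,431,640,625
```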

Compression levels are normally defined as numbers between 0 (none) and 9 (best). These refer to tradeoffs between speed and size, and relate to how many combinations of row filters are to be tried. There is no standard for these compression levels, so every image editor may have its own algorithm for deciding how many filters to try when optimizing the image size.

Compression level 0 means that filters are not used at all, which is fast but wasteful. Higher levels mean that more and more combinations are tried on image rows and only the best ones are retained.

I would guess that the simplest approach to the best compression is to incrementally test-compress each row with each filter, save the smallest result, and repeat for the next row. This amounts to filtering and compressing the entire image five times, which may be a reasonable trade-off for an image that will be transmitted and decoded many times. Lower compression values will do less, at the discretion of the tool's developer.
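The row-by-row approach guessed at above could look roughly like the following Python sketch. It is not how libpng or any particular editor works. The names `filter_row` and `greedy_compress` are hypothetical, and `zlib` stands in for the DEFLATE stage. The predictors follow the five PNG filter types, and each candidate row is trial-compressed on a copy of the running compressor state.

```python
import zlib

def paeth(a: int, b: int, c: int) -> int:
    """Paeth predictor: pick whichever of left (a), above (b), upper-left (c)
    is closest to a + b - c."""
    p = a + b - c
    pa, pb, pc = abs(p - a), abs(p - b), abs(p - c)
    if pa <= pb and pa <= pc:
        return a
    return b if pb <= pc else c

def filter_row(ftype: int, row: bytes, prev: bytes, bpp: int) -> bytes:
    """Apply filter type 0-4 (None, Sub, Up, Average, Paeth) to one row.
    The result starts with the filter-type byte, as in a PNG data stream."""
    out = bytearray([ftype])
    for i, x in enumerate(row):
        a = row[i - bpp] if i >= bpp else 0    # corresponding byte to the left
        b = prev[i]                            # byte directly above
        c = prev[i - bpp] if i >= bpp else 0   # byte above and to the left
        predicted = (0, a, b, (a + b) // 2, paeth(a, b, c))[ftype]
        out.append((x - predicted) % 256)
    return bytes(out)

def greedy_compress(rows, bpp, level=9):
    """For each row, keep the filter whose trial compression is smallest."""
    comp = zlib.compressobj(level)
    prev = bytes(len(rows[0]))                 # the row above the first row is all zeros
    chosen, chunks = [], []
    for row in rows:
        best_type, best_size, best_data = 0, None, b""
        for ftype in range(5):
            candidate = filter_row(ftype, row, prev, bpp)
            trial = comp.copy()                # try it without disturbing the real stream
            size = len(trial.compress(candidate)) + len(trial.flush())
            if best_size is None or size < best_size:
                best_type, best_size, best_data = ftype, size, candidate
        chosen.append(best_type)
        chunks.append(comp.compress(best_data))
        prev = row
    chunks.append(comp.flush())
    return chosen, b"".join(chunks)

# A small synthetic 8-row grayscale gradient (one byte per pixel).
rows = [bytes((x + y) % 256 for x in range(64)) for y in range(8)]
chosen, compressed = greedy_compress(rows, bpp=1)
print(chosen, len(compressed))
```

This is a greedy heuristic: it picks the locally best filter for each row and never revisits earlier rows, so it does not guarantee the globally smallest file.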

In addition to filters, the compression level might also affect the zlib compression level, which is a number between 0 (no Deflate) and 9 (maximum Deflate). How the specified 0-9 levels affect the usage of filters, which are the main optimization feature of PNG, is still dependent on the tool's developer.
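The zlib side of this is easy to try directly. The sketch below compresses the same bytes at every zlib level and checks that each result decompresses to exactly the original. The input is a synthetic, repetitive byte pattern invented for the demo, not a real image.

```python
import zlib

# Synthetic "image" data: 64 rows of 256 bytes with a lot of repetition (an assumption).
data = bytes((x // 4 + y) % 256 for y in range(64) for x in range(256))

sizes = {}
for level in range(10):                        # zlib levels 0 (store only) to 9 (maximum effort)
    packed = zlib.compress(data, level)
    assert zlib.decompress(packed) == data     # every level is lossless
    sizes[level] = len(packed)

print(len(data), sizes)
```

Level 0 only stores the data, so its output is slightly larger than the input. The higher levels trade CPU time for smaller output, yet every level restores identical bytes.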

The conclusion is that PNG has a compression parameter that can reduce the file-size very significantly, all without the loss of even a single pixel.

Sources:

Wikipedia Portable Network Graphics

libpng documentation Chapter 9 - Compression and Filtering

Most lossless compression algorithms have tunables (like dictionary size) which are generalized in a “how much effort should be made in minimizing the output size” slider. This is valid for ZIP, GZip, BZip2, LZMA, ... – Daniel B – 2014-11-27T10:12:43.480

The question could be stated differently. If no quality is lost from the compression, then why not always use the compression producing the smallest size? The answer then would be, because it requires more RAM and more CPU time to compress and decompress. Sometimes you want faster compression and don't care as much about compression ratio. – kasperd – 2014-11-27T12:12:50.770

PNG compression is almost identical to ZIPping files. You can compress them more or less but you get the exact file back when it decompresses -- that's what makes it lossless. – mikebabcock – 2014-11-27T14:31:30.723

Most compression software such as Zip and Rar allow you to enter "compression level" which allow you to choose between smaller file <--> shorter time. It does not mean these software discard data during compression. This setting (in GIMP, pngcrush, etc) is similar. – Salman A – 2014-11-27T17:50:36.873

Also, from an htg article about this post, I've remembered of a great slightly related video from veritasium + vsauce talking about randomness, entropy, and how they related to data compression: https://www.youtube.com/watch?v=sMb00lz-IfE – cregox – 2014-11-30T20:54:34.560

you should probably mind that there are some caveats to how "lossless" png really is : https://hsivonen.fi/png-gamma/ – n611x007 – 2014-12-02T16:33:19.980

recommend reading a book like Managing Gigabytes for those who want to learn more about compression (it is, or was, required reading for Google engineers, I believe) – nothingisnecessary – 2014-12-06T05:56:12.777

@naxa: There are no caveats to how lossless png really is. It is always 100% lossless. The article only warns you about bugs that some old browsers had in their PNG implementation for handling gamma correction. And that is only meaningful if you need to match the color with CSS colors (which are not gamma corrected). – Pauli L – 2014-12-06T15:58:00.113

@PauliL thanks! I've read it a long time ago I think I remembered the culprit wrong. Your comment brings the relevant information onsite! – n611x007 – 2014-12-08T09:04:21.593

There is a difference between lossless compression (like zip) and lossy compression (like mp3). You can recreate the source from lossless, but not from lossy. – chiliNUT – 2014-12-12T03:13:29.027