A PCIe lane is a pair of high-speed differential serial connections, one in each direction. A link between devices can be, and often is, made up of multiple lanes for higher data rates. The data rate of an individual lane also varies by generation: roughly speaking, one lane of Gen x provides about the same data rate as two lanes of Gen x-1.
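
To see where the "one Gen x lane is about two Gen x-1 lanes" rule of thumb comes from, here is a rough Python sketch that derives per-lane bandwidth from each generation's transfer rate and line encoding (8b/10b for 1.0 and 2.0, 128b/130b from 3.0 on). The figures are theoretical, per lane and per direction; packet and protocol overhead is ignored, so real throughput is lower. Gen 3 falls slightly short of doubling Gen 2 because its transfer rate only rose to 8 GT/s, with the more efficient encoding making up most of the gap.

```python
# Transfer rate per lane (GT/s) and line-encoding efficiency by PCIe generation.
# Theoretical figures only: TLP/DLLP packet overhead is not modelled.
GENERATIONS = {
    1: (2.5, 8 / 10),      # 8b/10b
    2: (5.0, 8 / 10),      # 8b/10b
    3: (8.0, 128 / 130),   # 128b/130b
    4: (16.0, 128 / 130),  # 128b/130b
    5: (32.0, 128 / 130),  # 128b/130b
}


def lane_mb_per_s(gen: int) -> float:
    """Usable bandwidth of one lane in one direction, in MB/s (10**6 bytes/s)."""
    gt_per_s, efficiency = GENERATIONS[gen]
    # Each transfer moves one bit on the wire; keep the payload share, then
    # convert Gbit/s to MB/s (x1000 for Mbit, /8 for bytes).
    return gt_per_s * efficiency * 1000 / 8


def link_mb_per_s(gen: int, lanes: int) -> float:
    """Bandwidth of a multi-lane link (lane bandwidths simply add up)."""
    return lane_mb_per_s(gen) * lanes


if __name__ == "__main__":
    for gen in GENERATIONS:
        ratio = ""
        if gen > 1:
            # Compare one lane of this generation with two lanes of the previous one.
            ratio = f"  (x1 vs. previous-gen x2: {lane_mb_per_s(gen) / link_mb_per_s(gen - 1, 2):.2f})"
        print(f"Gen {gen}: x1 = {lane_mb_per_s(gen):7.1f} MB/s, "
              f"x16 = {link_mb_per_s(gen, 16) / 1000:5.2f} GB/s{ratio}")
```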

On modern Intel systems, some PCIe lanes are provided by the CPU directly, while others are provided by the PCH in the chipset. The link from the CPU to the chipset (which Intel calls DMI) is similar to PCIe, but there are differences in the details.

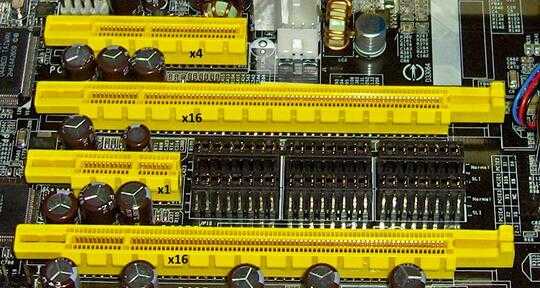

Motherboard vendors have to decide how to allocate the lanes provided by the CPU and PCH to the onboard devices and slots. They can, and often do, include signal switches to give the user some options, but there is a limit to how much signal switching can be implemented affordably.

Intel's "mainstream desktop" platforms currently have 16 lanes from the CPU plus up to 24 from the chipset (depending on which chipset is selected). However, the lanes from the chipset are limited by the total bandwidth available between the CPU and the chipset (roughly equivalent to PCIe 3.0 x4, IIRC).
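
As a back-of-the-envelope illustration of that bottleneck, the Python sketch below adds up the peak bandwidth of some devices hanging off the chipset and compares it with an uplink taken to be roughly PCIe 3.0 x4, as stated above. The device list and its figures are hypothetical examples, not measurements, and a ratio above 1 only means contention when the devices are busy at the same time.

```python
# Per-lane PCIe 3.0 bandwidth: 8 GT/s with 128b/130b encoding, in MB/s.
PCIE3_LANE_MB_S = 8.0 * (128 / 130) * 1000 / 8   # ~984.6 MB/s per direction

# Assumption from the answer: the CPU-chipset link is roughly PCIe 3.0 x4.
UPLINK_MB_S = 4 * PCIE3_LANE_MB_S                 # ~3938 MB/s per direction

# Hypothetical chipset-attached devices and the peak bandwidth (MB/s) each
# could demand. Illustrative values only.
CHIPSET_DEVICES = {
    "NVMe SSD #1 (PCIe 3.0 x4)": 4 * PCIE3_LANE_MB_S,
    "NVMe SSD #2 (PCIe 3.0 x4)": 4 * PCIE3_LANE_MB_S,
    "10 Gbit/s NIC": 10_000 / 8,
    "SATA SSD": 550.0,
}


def oversubscription(devices: dict[str, float], uplink_mb_s: float) -> float:
    """Total peak demand divided by uplink capacity; > 1 means the devices
    cannot all run flat out at once."""
    return sum(devices.values()) / uplink_mb_s


if __name__ == "__main__":
    demand = sum(CHIPSET_DEVICES.values())
    print(f"Uplink ~{UPLINK_MB_S:.0f} MB/s, peak demand ~{demand:.0f} MB/s")
    print(f"Oversubscription: {oversubscription(CHIPSET_DEVICES, UPLINK_MB_S):.2f}x")
```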

16 lanes from the CPU and 24 from the chipset is more than enough for a normal desktop or small server. You can put your graphics card on the 16 lanes from the CPU, and the lanes from the chipset, plus the controllers integrated into the chipset, are generally enough for storage, networking, etc. Even with two GPUs, 8 lanes per GPU is enough most of the time.

However, when building a high-end system with three or more GPUs (or possibly two top-of-the-line GPUs), lots of fast storage and/or very fast network interfaces, more lanes are desirable. If you want to give each device its maximum possible bandwidth, you are looking at 16 lanes per GPU.

So for those with higher-end needs, Intel has a high-end desktop socket, currently LGA2066. This socket also covers single-socket workstation/server systems, though it seems that, officially at least, you can't use the workstation/server processors in most desktop boards.

Unfortunately, while the number of PCIe lanes and RAM channels was fixed on previous generations of high-end desktop, with LGA2066 it varies by the processor you select. A desktop LGA2066 CPU can have 16, 28 or 44 PCIe lanes.
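
Putting the "16 lanes per GPU" goal next to those CPU lane counts shows the squeeze. In this rough Python sketch the build list is hypothetical, every device is assumed to want its full link width directly from the CPU, and chipset lanes are left out (they would share the chipset uplink anyway).

```python
# Hypothetical high-end build; each entry is (device, desired lane width).
BUILD = [
    ("GPU 1", 16),
    ("GPU 2", 16),
    ("GPU 3", 16),
    ("NVMe SSD 1", 4),
    ("NVMe SSD 2", 4),
]

# Desktop LGA2066 CPU lane counts, as listed in the answer.
CPU_LANE_OPTIONS = (16, 28, 44)


def lanes_needed(devices: list[tuple[str, int]]) -> int:
    """Total CPU lanes required if every device gets its full width."""
    return sum(width for _, width in devices)


def shortfall(devices: list[tuple[str, int]], cpu_lanes: int) -> int:
    """How many lanes are missing (0 if the CPU has enough)."""
    return max(0, lanes_needed(devices) - cpu_lanes)


if __name__ == "__main__":
    need = lanes_needed(BUILD)
    for cpu_lanes in CPU_LANE_OPTIONS:
        print(f"{cpu_lanes}-lane CPU: need {need}, short by {shortfall(BUILD, cpu_lanes)}")
```

Even the three GPUs alone (48 lanes) exceed a 44-lane part, which is one reason boards run some slots at x8.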

This puts motherboard vendors in a tricky position: they have to decide how to give true high-end customers the full functionality of their CPU, while deciding what to disable or throttle for those with lower-end CPUs. System builders, in turn, must read the motherboard manuals carefully to find out what the limitations are before buying.

Grabbing the manual for one of the cheaper X299 boards (https://dlcdnets.asus.com/pub/ASUS/mb/LGA2066/TUF_X299_MARK2/E12906_TUF_X299_MARK2_UM_WEB.pdf) shows that the main limitation is the x16 slots. On a 44-lane CPU all three slots are usable, with two running in x16 mode and one in x8 mode. On a 28-lane CPU you get one x16, one x8 and one unusable slot, and on a 16-lane CPU you only get either one x16 or two x8.
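
The slot limits described for that board can be written down as a small lookup table, which makes it easy to ask how many cards each CPU tier supports. This Python sketch encodes only what the answer summarises from the manual; it does not model which physical slot loses lanes, and the names are made up for illustration.

```python
# Usable widths of the three x16-size slots on the ASUS TUF X299 MARK 2 for each
# CPU lane count, as summarised from the board manual in the answer. Each CPU
# tier maps to a list of alternative configurations. Which physical slot drops
# out is deliberately not modelled.
TUF_X299_MARK2_SLOTS: dict[int, list[list[int]]] = {
    44: [[16, 16, 8]],   # all three slots usable
    28: [[16, 8]],       # third slot unusable
    16: [[16], [8, 8]],  # either one x16 or two x8
}


def max_cards(cpu_lanes: int, min_width: int = 8) -> int:
    """Most cards that can each get at least `min_width` lanes on this board."""
    configs = TUF_X299_MARK2_SLOTS[cpu_lanes]
    return max(sum(1 for width in cfg if width >= min_width) for cfg in configs)


if __name__ == "__main__":
    for lanes in sorted(TUF_X299_MARK2_SLOTS, reverse=True):
        print(f"{lanes}-lane CPU: up to {max_cards(lanes)} cards at x8 or better, "
              f"{max_cards(lanes, 16)} at x16")
```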

Grabbing the manual for a high-end X299 board (https://dlcdnets.asus.com/pub/ASUS/mb/LGA2066/ROG_RAMPAGE_VI_EXTREME_OMEGA/E15119_ROG_RAMPAGE_VI_EXTREME_OMEGA_UM_V2_WEB.pdf), it seems they have decided not to support the 16-lane parts at all. This board does let you use three GPUs on a 28-lane CPU, but the second M.2 slot and the U.2 connector are only available with 44-lane CPUs.

Yours is an excellent answer. With it, I am assured that I can buy a PC with the cheaper 5820K, since I would have only one graphics card, one SSD and one HD, and I am not using it for games but for composing animated movies and rendering, as well as for Java/Eclipse programming. – Blessed Geek – 2014-11-21T20:26:55.143

Two graphics cards could probably help a lot with rendering speed, but I don't know much about rendering animated movies. For Java/Eclipse it will be more than enough. Eclipse is a very slow IDE (I think NetBeans is faster and better for Java), but I'm using Eclipse with the Java/Android SDK, and on my old laptop (Core 2 Duo T9300, SSD) it doesn't work too badly. – Kamil – 2014-11-21T20:42:25.670

Storyboard animation and anime are not rendering-intensive. They are intensive in calculating/extrapolating the joints and movement of a character when the character is made to move. NetBeans is based on Swing/AWT. Eclipse is based on SWT. IBM invented SWT as a way to allow somewhat native access to graphics I/O, which they claim is faster than AWT. – Blessed Geek – 2014-11-21T20:57:09.517

Single-port gigabit networking cards don't need multiple lanes. Even a PCIe 1.0 lane offers 250MBps = 2000Gbps of bandwidth, which is enough to allow for 50% overhead losses while still being able to keep a gigabit port saturated. Multiport gigabit cards can need more than one lane, but if that's what you were referring to, you should be more specific, since they're generally not something seen outside of a server room. – Dan is Fiddling by Firelight – 2014-11-21T21:38:33.277
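
The lane arithmetic in that comment can be checked with a few lines of Python. PCIe 1.0 runs at 2.5 GT/s with 8b/10b encoding, giving 250 MB/s per lane per direction, while a gigabit port needs 125 MB/s. The `lanes_for_ports` helper is a hypothetical illustration for multiport cards, where `usable_pct` is an assumed share of lane bandwidth left after overhead.

```python
# PCIe 1.0: 2.5 GT/s per lane with 8b/10b encoding, per direction.
# Integer arithmetic in Mbit/s and MB/s keeps the results exact.
GEN1_RAW_MBIT_S = 2500                        # 2.5 GT/s -> 2500 Mbit/s on the wire
GEN1_LANE_MBIT_S = GEN1_RAW_MBIT_S * 8 // 10  # 2000 Mbit/s of data (2 Gbit/s)
GEN1_LANE_MB_S = GEN1_LANE_MBIT_S // 8        # 250 MB/s
GIGABIT_MB_S = 1000 // 8                      # 1 Gbit/s port -> 125 MB/s


def headroom_pct(lane_mb_s: int, port_mb_s: int) -> int:
    """Percentage of the lane's bandwidth that could be lost to overhead
    while still saturating the port."""
    return 100 - 100 * port_mb_s // lane_mb_s


def lanes_for_ports(ports: int, usable_pct: int = 100) -> int:
    """Hypothetical helper: Gen 1 lanes needed to saturate `ports` gigabit
    ports if only `usable_pct` percent of each lane is usable."""
    demand = ports * GIGABIT_MB_S * 100
    per_lane = GEN1_LANE_MB_S * usable_pct
    return -(-demand // per_lane)  # ceiling division on integers


if __name__ == "__main__":
    print(f"One PCIe 1.0 lane: {GEN1_LANE_MB_S} MB/s = {GEN1_LANE_MBIT_S / 1000:g} Gbit/s")
    print(f"Headroom for one gigabit port: {headroom_pct(GEN1_LANE_MB_S, GIGABIT_MB_S)}%")
    print(f"Quad-port card at 80% usable: {lanes_for_ports(4, 80)} lanes")
```

Real links come in fixed widths (x1, x2, x4 and so on), so a result of 3 lanes would in practice mean an x4 link.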

@Kamil You should maybe write this up on the Super User blog! – Canadian Luke – 2014-11-21T23:20:03.440

Slight issue with the lane concept: PCI-e lanes are point-to-point. 28 of those lanes can end at the Core i7. However, some expensive motherboards have a PCI-e switch that allows two graphics cards to communicate directly, bypassing the CPU. This means you don't have 2x16 lanes ending at the CPU. – MSalters – 2014-11-22T01:09:43.310

@CanadianLuke You mean sharing my experience with Eclipse? Sorry about the off-topic comment. Or do you mean this text about PCIe? – Kamil – 2014-11-22T14:49:15.917

The answer itself, explaining the lanes. – Canadian Luke – 2014-11-22T15:29:22.913

@MSalters ... Is that, then, how you can have multiple cards? I've seen reports of four (4) SLI cards in one machine ... that would make sense. – Pᴀᴜʟsᴛᴇʀ2 – 2014-11-22T17:06:45.760

@Paulster2: That's the usual solution for current high-end systems. The old solution used to be a dedicated cable between the cards. – MSalters – 2014-11-22T19:49:29.917

@MSalters With a switch, the cards are still sharing bandwidth with each other when you need to transfer models/textures/etc. to or from the cards to the CPU or main memory. – reirab – 2014-11-23T03:49:37.380

@DanNeely I think you mean 2 Gbps (2000 Mbps), not 2000 Gbps. – reirab – 2014-11-23T04:00:45.813

IntelliJ is much faster and less buggy than Eclipse, which is surprising for a Java application, but it's still a memory hog. – simonzack – 2014-11-23T04:43:36.907

@simonzack You can change JVM parameters and reduce memory for IntelliJ (or Eclipse). Article about... increasing memory :D https://www.jetbrains.com/idea/help/increasing-memory-heap.html – Kamil – 2014-11-23T07:19:50.010

Great answer, but I just want to point out that LinusTechTips compared PCIe 3.0 SLI with a 28-lane CPU vs. a 40-lane CPU, and 16x/8x vs. 16x/16x didn't seem to make much of a difference (https://www.youtube.com/watch?v=rctaLgK5stA). So whether every device getting full lane bandwidth matters really depends on the hardware and use case. – Abe Voelker – 2015-08-09T19:34:01.540