Theory

Differences in the video probably won't be noticeable to an untrained eye. The 1080p video has to be downscaled to fit the screen anyway. It won't be exactly the same, though, because compression and scaling are applied in a different order.

Let's assume that the original video was 1080p. In this case the 720p video was first scaled, then compressed. The 1080p clip, on the other hand, was first compressed server-side, then scaled on your machine. The 1080p file will obviously be bigger (otherwise it would offer higher resolution but at lower quality, ruining the visual experience and defeating the point of using a higher resolution; see note 1).

Lossy compression usually causes visual artifacts that appear as square blocks with noticeable edges when the video is paused, but that aren't visible when you play it at normal framerate. The 1080p file will contain more of these square blocks than the 720p video, but the blocks will be approximately the same size in both videos.

Doing simple math, we can calculate that the 1080p video will contain 2.25 times as many such blocks, so after scaling it down to 720p those blocks will be 2.25 times smaller in area (1.5 times smaller along each edge) than in the actual 720p video. The smaller those blocks are, the better the quality of the final video, so the 1080p video will look better than the 720p video, even on a 720p screen. The resized 1080p video will appear slightly sharper than the actual 720p clip.
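The arithmetic behind that 2.25 figure can be sketched in a few lines of Python. The 16-pixel block side is only an assumed example value (the text doesn't state a block size), and the variable names are made up for illustration.

```python
# Frame sizes discussed in the text.
FULL_HD = (1920, 1080)
HD = (1280, 720)


def pixel_count(size):
    """Number of pixels in a frame given as (width, height)."""
    width, height = size
    return width * height


# Ratio of pixel counts. If blocks are the same size in both videos,
# this is also the ratio of block counts.
area_ratio = pixel_count(FULL_HD) / pixel_count(HD)

# Downscaling 1080p to 720p shrinks each dimension by this linear factor.
linear_ratio = FULL_HD[0] / HD[0]

# Assumed example: a 16x16 block in the 1080p frame, after downscaling.
BLOCK_SIDE = 16
scaled_side = BLOCK_SIDE / linear_ratio

print(f"area ratio: {area_ratio}, linear ratio: {linear_ratio}")
print(f"a {BLOCK_SIDE}px block side becomes about {scaled_side:.1f}px")
```

So the area ratio is 2.25 while each block edge shrinks by a factor of 1.5.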

Things get a bit more complicated if the source material was bigger than 1080p. The 1080p clip is first scaled to 1080p and compressed before you play it, and then scaled once again during playback. The 720p clip is scaled only once and then compressed. The intermediate scaling step in the 1080p case will make its quality slightly worse (see note 2). Compression will make the 720p clip even worse, though, so 1080p wins anyway.

One more thing: it's not only video that is compressed, but audio too. When people decide to use a higher bitrate (see note 1) for video compression, they often do the same with audio. The 1080p version of the same video may offer better sound quality than the 720p version.

1: Bitrate is the factor that decides how good the compressed video is, at the cost of file size. It's specified manually when the video is compressed, and it sets how much disk space can be used for each time unit (and so, on average, for each frame) of compressed video. Higher bitrate = better quality and a bigger file. Using the same bitrate for the same clip at the same framerate will produce files of (approximately) the same size, no matter what the video resolution is, but the higher the resolution, the less disk space can be spent on a single pixel, so increasing the output resolution without increasing the bitrate can make the compressed video look worse than it would at a lower output resolution.
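To make the "less disk space per pixel" point concrete, here is a minimal sketch of the average bit budget per pixel. The 500 kbps and 24 fps values are borrowed from the test described further down; the function name is hypothetical.

```python
def bits_per_pixel(bitrate_kbps, width, height, fps):
    """Average number of bits available per pixel per frame."""
    bits_per_second = bitrate_kbps * 1000
    pixels_per_second = width * height * fps
    return bits_per_second / pixels_per_second


# Same bitrate and framerate, two resolutions.
bpp_720 = bits_per_pixel(500, 1280, 720, 24)
bpp_1080 = bits_per_pixel(500, 1920, 1080, 24)

print(f"720p:  {bpp_720:.4f} bits per pixel")
print(f"1080p: {bpp_1080:.4f} bits per pixel")
print(f"720p gets {bpp_720 / bpp_1080:.2f}x the budget per pixel")
```

This is only an average: a real encoder distributes bits unevenly between frames and regions.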

2: Try it yourself: open a photo in any editor and scale it to a slightly smaller size, then again and again, and save it as PNG. Then open the original photo again and scale it to the same final size in one step. The second attempt will give better results.

Test

@Raestloz asked in his comment for actual videos for comparison. I couldn't find 1080p and 720p versions of the same video, so I made my own.

I used uncompressed frames from the "Elephants Dream" movie (http://www.elephantsdream.org/), which are available under CC-BY 2.5. I downloaded frames 1-6000 and converted them into videos using ffmpeg and the following batch file:

```
ffmpeg -i %%05d.png -c:v libx264 -framerate 24 -b:v 500k -an -s 1280x720 -f mp4 _720p_500k.mp4
ffmpeg -i %%05d.png -c:v libx264 -framerate 24 -b:v 700k -an -s 1280x720 -f mp4 _720p_700k.mp4
ffmpeg -i %%05d.png -c:v libx264 -framerate 24 -b:v 1125k -an -s 1280x720 -f mp4 _720p_1125k.mp4
ffmpeg -i %%05d.png -c:v libx264 -framerate 24 -b:v 4000k -an -s 1280x720 -f mp4 _720p_4000k.mp4
ffmpeg -i %%05d.png -c:v libx264 -framerate 24 -b:v 500k -an -f mp4 _1080p_500k.mp4
ffmpeg -i %%05d.png -c:v libx264 -framerate 24 -b:v 700k -an -f mp4 _1080p_700k.mp4
ffmpeg -i %%05d.png -c:v libx264 -framerate 24 -b:v 1125k -an -f mp4 _1080p_1125k.mp4
ffmpeg -i %%05d.png -c:v libx264 -framerate 24 -b:v 4000k -an -f mp4 _1080p_4000k.mp4
```

This produces clips with the following parameters:

- 24 fps

- 1080p and 720p

- four constant bitrates for each resolution:

  - 500 kbps

  - 700 kbps

  - 1125 kbps

  - 4000 kbps

500 kbps is low enough for compression artifacts and distortions to appear in the 720p video. 1125 kbps is the bitrate that gives 1080p the same number of bits per pixel (500 × 2.25 = 1125, where 2.25 = (1920×1080) / (1280×720)). 700 kbps is an intermediate bitrate, to check whether using a bitrate much lower than proportional makes sense for 1080p. 4000 kbps is high enough to create nearly lossless-looking video at both resolutions, for comparing resized 1080p to actual 720p.
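As a quick sanity check on how the four bitrates relate to each other, the sketch below expresses each one as a share of the pixel-proportional 1080p bitrate. The helper name is hypothetical; the numbers are the ones listed above.

```python
PIXELS_720P = 1280 * 720
PIXELS_1080P = 1920 * 1080


def proportional_bitrate(base_kbps):
    """Bitrate giving 1080p the same bits per pixel as 720p at base_kbps."""
    return base_kbps * PIXELS_1080P / PIXELS_720P


target = proportional_bitrate(500)  # pixel-proportional bitrate for 1080p

for kbps in (500, 700, 1125, 4000):
    share = kbps / target
    print(f"{kbps:>4} kbps is {share:.0%} of the proportional 1080p bitrate")
```

Seen this way, 700 kbps gives 1080p a bit under two thirds of the per-pixel budget that 720p has at 500 kbps.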

Then I split the videos back into single frames. Extracting all frames is slow and takes a lot of space (true story), so I recommend using ffmpeg's -r switch to extract every 8th frame (i.e. -r 3 for a 24 fps video).
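The -r value follows directly from the source framerate and how many frames you want to skip. Here is a small sketch that computes it and assembles a possible extraction command without running it. The output pattern `frame_%05d.png` and the optional `-s 1280x720` scaling step are assumptions for illustration; the exact command used for this test isn't given.

```python
def extraction_rate(source_fps, keep_every_nth):
    """Output frame rate that keeps one frame out of every keep_every_nth."""
    return source_fps / keep_every_nth


def build_extract_command(video_path, source_fps=24, keep_every_nth=8, size=None):
    """Assemble (but do not run) an ffmpeg frame-extraction command."""
    rate = extraction_rate(source_fps, keep_every_nth)
    # -r as an output option makes ffmpeg drop frames to reach this rate.
    command = ["ffmpeg", "-i", video_path, "-r", f"{rate:g}"]
    if size is not None:
        # Assumed extra step: scale extracted frames, e.g. to "1280x720".
        command += ["-s", size]
    # Hypothetical output pattern: numbered PNG frames.
    command.append("frame_%05d.png")
    return command


print(" ".join(build_extract_command("_1080p_500k.mp4", size="1280x720")))
```

Pass the resulting list to `subprocess.run` if you want to execute it.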

I can't provide future-proof download links for the videos, but these steps can easily be replicated to create clips like mine. For the record, here are the output file sizes (they should be roughly identical for both resolutions, because the bitrate is fixed per second of video):

- 500 kbps: 13.6 MB / 13.7 MB

- 700 kbps: 18.8 MB / 19 MB

- 1125 kbps: 29.8 MB / 30.2 MB

- 4000 kbps: 105 MB / 105 MB
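A rough cross-check of why both resolutions end up at nearly the same size: at a fixed bitrate the size budget depends only on bitrate and duration, not on frame dimensions. The sketch assumes all 6000 frames play back at 24 fps (250 seconds) and uses decimal megabytes. The sizes listed above come out somewhat lower than this nominal budget, so treat it as a ceiling-style estimate, not a prediction.

```python
def nominal_size_mb(bitrate_kbps, frame_count, fps):
    """Nominal stream size in decimal megabytes; resolution plays no part."""
    duration_s = frame_count / fps
    total_bits = bitrate_kbps * 1000 * duration_s
    return total_bits / 8 / 1_000_000


FRAME_COUNT = 6000  # frames 1-6000, as used in the test
FPS = 24            # assumed playback rate

for kbps in (500, 700, 1125, 4000):
    size = nominal_size_mb(kbps, FRAME_COUNT, FPS)
    print(f"{kbps:>4} kbps: about {size:.1f} MB nominal")
```

Note that the function takes no width or height at all, which is exactly why the two columns match so closely.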

Downloads for samples of extracted frames are available at the end of this post.

Increasing bitrate and resolution

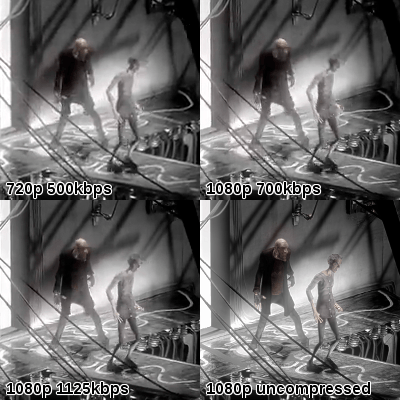

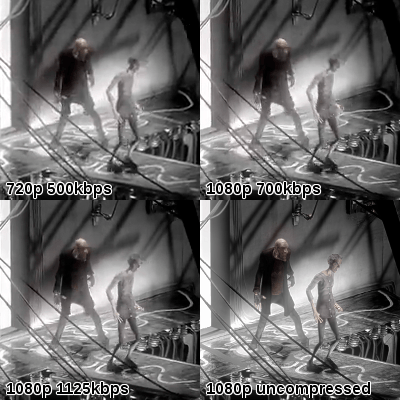

Here's a comparison of the same region cropped from both frames after scaling to 720p (frame 2097). Look at the fingers, heads and the piece of equipment hanging from the ceiling: even going from 500 to 700 kbps makes a noticeable difference. Note that both images are already scaled to 720p.

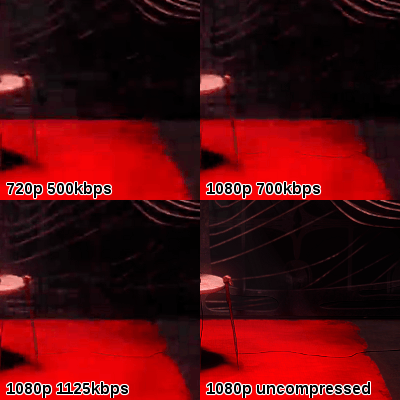

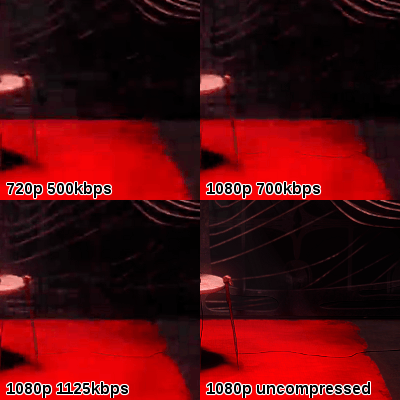

Frame 3705. Notice the rug's edge and the cable:

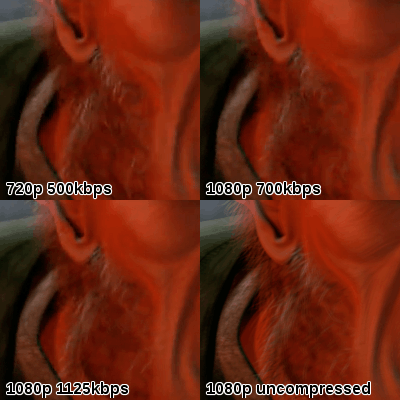

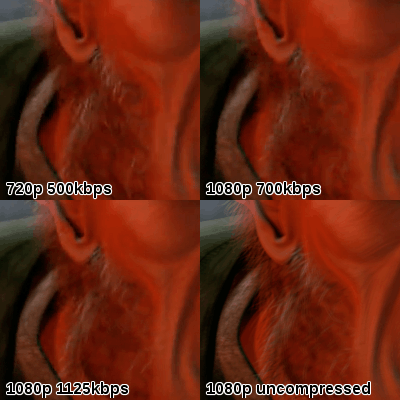

Frame 5697. This is an example of a frame that doesn't compress very well. The 1080p 700 kbps video is less detailed than the 720p 500 kbps clip (see the ear's edge). Skin details are lost in all compressed frames.

GIFs of all three frames, with increasing bitrate. (Unfortunately I had to use dithering because GIMP doesn't support more than 255 colors in GIF, so some pixels are a bit off.)

Constant bitrate, different resolutions

Inspired by @TimS.'s comment, here is the same region from frame 2097 with 720p and 1080p side by side.

For 500 kbps, 720p is a bit better than 1080p. 1080p appears sharper, but these details aren't actually present in the uncompressed image (see the left guy's trousers). With 700 kbps I'd call it a draw. Finally, 1080p wins for 1125 kbps: both stills look mostly identical, but the picture on the right has more pronounced shadows (pipes on the back wall and in the lower right part).

Very high bitrate

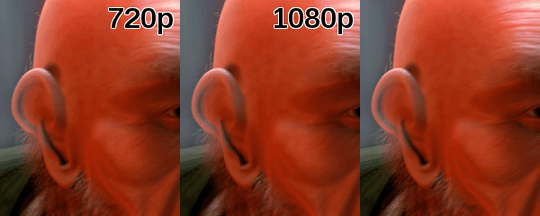

@Noah asked a good question in the comments: will both images look identical with high enough bitrate? Here's 720p 4000 kbps vs. 1080p 4000 kbps vs. uncompressed frame 5697:

Now this is pretty subjective, but here's what I can see:

- Left edge of the ear is pixelated in 720p, but smooth in 1080p, despite identical bitrate.

- 720p preserves cheek skin details better than 1080p.

- Hair looks a bit sharper in 1080p.

It's scaling that starts to play a role here. One could intuitively answer that 1080p will look worse than 720p on a 720p screen, because scaling always affects quality. That's not exactly true in this case, because the codec I used (H.264, but the same goes for other codecs) has some imperfections: it creates little boxes that are visible on contrasting edges. They appear on the 1080p snapshot too (see links at the bottom), but resizing to 720p causes some detail to be lost; in particular, it smooths out these boxes and improves quality.

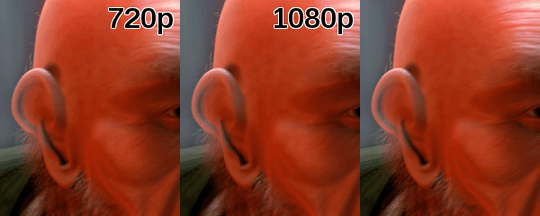

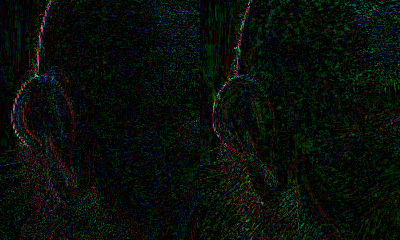

Okay, so let's calculate the difference between 720p (left) and 1080p (right) vs. the original frame and stretch the contrast so it's clearly visible:

This image gives us an even clearer view of what is going on. Black pixels are represented perfectly in the compressed (and resized to 720p) frames; colored pixels are off in proportion to their intensity.

- The cheek is way closer to the original on the 720p half, because scaling smoothed out skin details on the right half.

- The ear's edge isn't that close to the uncompressed pixels, but it's better in 1080p. Again, artifacts are visible on the 720p half. They would appear on unresized 1080p too, but scaling smoothed them out with quite good results.

- Hair seems to be better on 720p because it's closer to black, but in reality it looks like random noise. 1080p, on the other hand, has its distortions lining up with hair edges, so it actually emphasizes hair lines. It's probably the magic of scaling again: the "noise" increases when scaling, but it also starts to make sense.
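A difference image like the one described above could be computed along these lines. This is only a sketch of one plausible approach (absolute per-pixel difference followed by a linear contrast stretch). The tool and exact operation actually used aren't stated, and the tiny grayscale patches below are made-up values.

```python
def stretched_difference(reference, candidate, max_value=255):
    """Absolute per-pixel difference, rescaled so the largest error hits max_value."""
    diff = [
        [abs(r - c) for r, c in zip(ref_row, cand_row)]
        for ref_row, cand_row in zip(reference, candidate)
    ]
    peak = max(max(row) for row in diff)
    if peak == 0:
        return diff  # identical images: everything stays black
    # Linear contrast stretch using integer arithmetic.
    return [[value * max_value // peak for value in row] for row in diff]


# Made-up 2x2 grayscale patches (0-255), purely for illustration.
original = [[10, 200], [50, 120]]
decoded = [[10, 196], [52, 120]]

print(stretched_difference(original, decoded))
```

Pixels that match the original exactly stay at 0 (black), and the worst mismatch is pushed to full brightness, which is what makes small errors visible.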

Disclaimer

This test is purely synthetic and doesn't prove that real-life 1080p video looks better than 720p when played on a smaller screen. However, it shows a strong relationship between video bitrate and the quality of video resized to screen size. We can safely assume that a 1080p video will have a higher bitrate than its 720p counterpart, so it will offer more detailed frames, most of the time enhancing the viewer's experience. It's not the resolution that plays the most important part, but the video bitrate, which is higher in 1080p videos.

Using an insanely high bitrate for 720p video won't make it look better than 1080p. Post-compression downscaling can be beneficial for 1080p, because it shapes compression noise and smooths out artifacts. Increasing the bitrate doesn't compensate for the lack of extra pixels to work with, because lossy codecs aren't perfect.

In rare cases (very detailed scenes) higher resolution, higher bitrate videos may actually look worse.

What's the difference between this artificial test and real-life video?

- I have assumed a bitrate at least 40% higher for 1080p than for 720p. Looking at the results, I guess 20% would be enough to notice a quality improvement, but I haven't tested it. A proportional increase in bitrate will provide much better results, even if the lower resolution matches what the screen uses, but it's unlikely to be used in real life. (Still, it's proportional, @JamesRyan.)

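- Real-life videos usually use variable bitrate (VBR). I went with 1-pass encoding at a fixed target bitrate, hoping that it would make all unpleasant compression side effects more obvious. (Strictly speaking, -b:v with libx264 and no -maxrate/-bufsize is average-bitrate rate control rather than true CBR.)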

- Different codecs can react in different ways. This test was conducted using the popular H.264 codec.

Once again: I'm not saying that this post proves anything. My test is based on an artificially made video. YMMV for realistic examples. Still, the theory is probably true; nothing suggests it's wrong (except for the scaling issue, but the test deals with that).

In conclusion, in most cases 1080p video will look better than 720p video, no matter what the screen resolution is.

Downloads:

- Frame 2097:

- Frame 3705:

- Frame 5697:

I'd agree on the potentially higher audio bitrate, but I'm not sure about the image quality. Sharper image requires more pixels to actually make it sharper, displaying an HD image on an SD display will not make any difference since the pixels needed to actually make the image sharper is just not there – Raestloz – 2014-10-02T04:58:41.417

@Raestloz For uncompressed video or lossless codecs that's true, but lossy compression creates those square blocks I talk about, which actually act like big pixels. Scaling 1080p video down to 720p will make them smaller than in actual 720p video. (assuming that bitrate per pixel is approximately identical in both files) – gronostaj – 2014-10-02T06:28:38.943

That'd depend on the way the 720p video was produced I guess, .mkv is merely a container after all. Is there a short video where this phenomenon (blocks visibility on actual 720p vs 1080p scaled down) can be seen? My monitor is actually 1280x1024 so I can test it out (btw I don't have 1080p videos, I figured it'd be just useless for my monitor) – Raestloz – 2014-10-02T07:45:28.677

@Raestloz I've added synthetic tests with my interpretation of results. – gronostaj – 2014-10-02T21:21:33.383

I see a lot of wrong when it comes to answering questions like this, but your answer is excellent. Of some note is that lossy compression does not always create "blocks" -- the exact artifacting depends on many factors, but based on the knowledge shown in your answer, I figure this was an intentional simplification for clarity. – Charles Burns – 2014-10-02T22:17:05.303

Is the scaling down of 1080p media more-CPU intensive than playing 720p natively? – AStopher – 2014-10-03T07:25:52.227

@zyboxenterprises Surely it will be more _PU-intensive, the question is which processor will handle it. Playing 1080p video alone is already more demanding and scaling to 720p adds even more computational overhead. I believe it's handled by GPU, because video decoding is offloaded to GPU (on Windows it's usually done with DXVA, I think). I'm not absolutely sure about that, though. – gronostaj – 2014-10-03T08:02:18.480

Does this imply that a 720p video with a "high enough" bitrate will be virtually indistinguishable from a 1080p video with a comparably scaled bitrate? (e.g. is there some sort of bitrate "butter zone" that equalizes both formats?) – Noah – 2014-10-03T18:01:54.397

Would also be interesting to see what a 720p 2250 bitrate version of that same frame looks like. I'd expect it to be better than either of the current ones. – Tim S. – 2014-10-03T19:10:03.090

Your bitrates are not even remotely proportional or realistic. Basically you have fabricated evidence adding an artificial ceiling on the 720p quality to support your original false assumption. Eg. Youtube bitrate limits allow 24% extra for 1080p, not over double! – JamesRyan – 2014-10-03T22:51:51.790

@Noah I have updated the answer with better tests. Tl;dr: no, high bitrate will not compensate for lacking pixels and codec imperfections. – gronostaj – 2014-10-04T23:41:45.163

@TimS. Interesting idea, I've checked if you're right. In my opinion 1080p looks a bit better with high bitrate, but it's quite subjective. I have added test frames, check what you'll see. – gronostaj – 2014-10-04T23:43:50.990

@JamesRyan If you have a reliable way of downloading YouTube videos to compare bitrates, I'll be happy to try it (downloading 1080p is impossible since DASH streaming has been disabled). Note that OP's not asking about streaming video, but regular files. I don't call my results evidence and I have explicitly said that it's not a proof, but I have emphasized the disclaimer even more to avoid confusion. – gronostaj – 2014-10-04T23:46:37.923

You can use APNG for the animations. That'll provide much better quality than the old GIFs – phuclv – 2014-10-05T16:26:27.123

@LưuVĩnhPhúc APNG doesn't seem to work in Chrome. GIFs aren't really as bad as I expected, I think I'll stick to them for now unless somebody knows some widely-supported alternative. – gronostaj – 2014-10-05T20:26:19.507

I've recently seen a talk at a conference which showed that on 4K displays, in some sequences (especially lower bit rates) users actually preferred the 1080p version over the 4K one. (Kongfeng Berger, Yao Koudota, Marcus Barkowsky and Patrick Le Callet. Subjective quality assessment comparing UHD and HD resolution in HEVC transmission chains, QoMEX 2015) – slhck – 2015-06-03T10:49:28.327