
Problem explanation

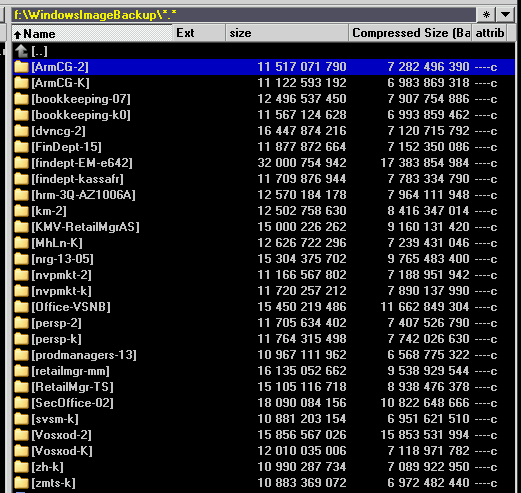

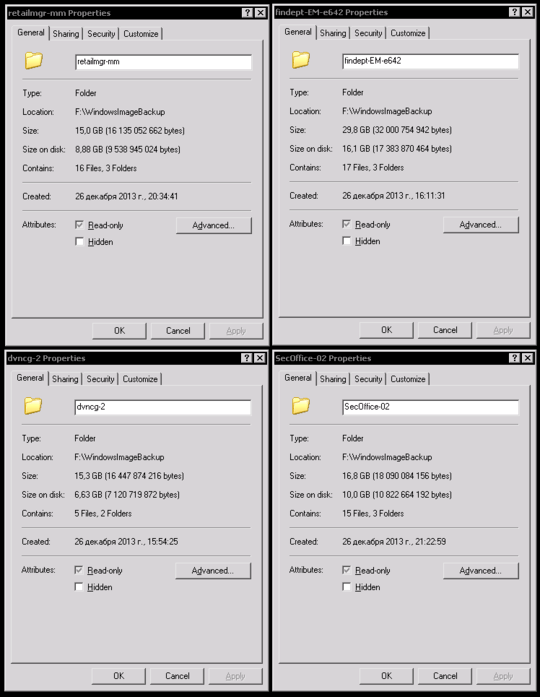

I'm storing Windows disk images created with wbadmin on an NTFS drive, and I found that compressing them with NTFS compression saves 1.5-2× space while keeping them fully available for restoring.

But during compression the file gets extremely fragmented, usually above 100,000 fragments for a system disk image.

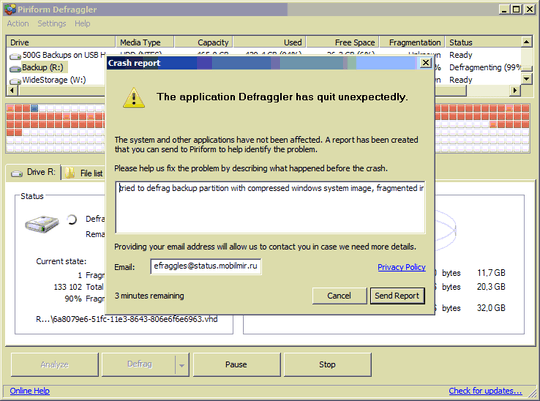

With such fragmentation, defragmenting takes very long (multiple hours per image). Some defragmenters can't handle it at all: they just skip the file or crash.

I think the source of the problem is that the file is compressed in chunks, each of which is stored separately.
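A rough way to see why the fragment count lands in the hundreds of thousands: NTFS compresses data in compression units of 16 clusters (64 KB with 4 KB clusters). The Python sketch below is a simplified model, not NTFS's actual allocator: it assumes compact.exe compresses each unit in place and frees the clusters the unit no longer needs, so every unit that shrinks leaves a gap before the next one. The 4 KB cluster size is an assumption, and the helper names (`clusters_used`, `in_place_extents`, `packed_extents`, `count_fragments`) are hypothetical.

```python
# Simplified model of in-place NTFS compression (an assumption, not the real
# NTFS allocator). Extents are (start_cluster, length_in_clusters) pairs.
from math import ceil

CLUSTER = 4096        # assumed cluster size in bytes
UNIT_CLUSTERS = 16    # clusters per NTFS compression unit


def clusters_used(compressed_size):
    """Clusters one unit occupies after compression.

    A unit that would not save at least one cluster stays uncompressed
    (all 16 clusters); a unit with compressed size 0 occupies no clusters.
    """
    needed = ceil(compressed_size / CLUSTER)
    return min(needed, UNIT_CLUSTERS)


def in_place_extents(compressed_sizes):
    """Unit i originally sat at clusters 16*i .. 16*i+15 and keeps its start."""
    extents = []
    for i, size in enumerate(compressed_sizes):
        used = clusters_used(size)
        if used:  # zero-length units become holes, not extents
            extents.append((i * UNIT_CLUSTERS, used))
    return extents


def packed_extents(compressed_sizes):
    """Ideal layout: each compressed unit written right after the previous one."""
    extents, next_cluster = [], 0
    for size in compressed_sizes:
        used = clusters_used(size)
        if used:
            extents.append((next_cluster, used))
            next_cluster += used
    return extents


def count_fragments(extents):
    """Count runs of physically contiguous clusters, as a defragmenter would."""
    fragments, prev_end = 0, None
    for start, length in extents:
        if start != prev_end:
            fragments += 1
        prev_end = start + length
    return fragments


sizes = [65536, 65536, 30000, 65536]  # one 64 KB unit compresses to ~30 KB
print(count_fragments(in_place_extents(sizes)))  # 2
print(count_fragments(packed_extents(sizes)))    # 1
```

Under this model, a 20 GiB image in which every 64 KB unit compresses to about 60% ends up with one fragment per unit, i.e. 327,680 fragments, while the same compressed data packed back to back (what a defragmenter, or a tool writing a compressed copy contiguously, would aim for) is a single fragment.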

The question

Is there a good (fast) way to get the image file defragmented while keeping it compressed (or to compress it without causing extreme fragmentation)? It might be some utility that quickly defragments a file into contiguous free space, or some utility (or method) that creates a non-fragmented compressed file from an existing uncompressed one.

Remarks based on comments/answers:

- External (to the Windows kernel) compression tools are not an option in my case. They can't decompress a file on the fly (to decompress a 10 GB file I need 10 GB free, which isn't always at hand, and it also takes a lot of time), and they're not accessible when the system is booted from DVD for recovery (which is exactly when I need the image available). Please stop suggesting them unless they create a transparently compressed file on NTFS, like compact.exe.

- NTFS compression is not that bad for system images. It's rather good except for the fragmentation. Decompression does not take much CPU time and still reduces the I/O bottleneck, which gives a performance boost in appropriate cases (a non-fragmented compressed file with a significant ratio).

- Defragmentation utilities defragment files regardless of whether they are compressed. The only problem is the number of fragments, which causes defragmentation to fail whether or not the fragmented file is compressed. If the number of fragments isn't high (about 10,000 is already OK), a compressed file will be defragmented and will stay compressed and intact.

The NTFS compression ratio can be good, depending on the files. System images are usually compressed to at most 70% of their original size.
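The two figures given in this question agree with each other. Writing r for the compressed size divided by the original size (a symbol introduced here only for the arithmetic), the space-saving factor f is its reciprocal:

$$
f = \frac{1}{r}, \qquad r \le 0.70 \;\Rightarrow\; f \ge \frac{1}{0.70} \approx 1.43, \qquad f \in [1.5,\,2] \;\Leftrightarrow\; r \in [0.50,\,0.67]
$$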

Here is a pair of screenshots for those who don't believe it, but of course you can run your own tests.

I have actually restored from NTFS-compressed images, both fragmented and non-fragmented, and it works; please either trust me or check it yourself. Note: as I found about a year ago, it does not work in Windows 8.1. It still works in Windows 7, 8, and 10.

Expected answer:

A working method or program for Windows to either:

- compress a file (with NTFS compression, keeping it accessible to Windows Recovery) without creating a lot of fragments (maybe to another partition, or by making a compressed copy); it must be at least 3x faster on HDD than compact + defrag, or
- quickly (at least 3x faster than Windows defrag on HDD) defragment a heavily fragmented file, such as one containing 100K+ fragments (it must stay compressed after defragmentation).

@DoktoroReichard it depends on the content of the files. Text files and sparse files will have a very good compression ratio. Typically I avoid files that are already compressed, like zip files, images, audio/video files... and after compressing I often find a 10-20% decrease in size – phuclv – 2016-11-28T14:34:49.767

I find it quite odd for NTFS to compress that much (as real-world tests show only a 2 to 5% decrease). Also, NTFS has some safeguards regarding file fragmentation (such as journaling). How big are the files (before and after)? Also, from the picture, it seems Defraggler can't defragment compressed files. – Doktoro Reichard – 2013-12-26T13:54:42.740


Also, the compression ratio is off-topic, but here are real images of freshly installed Windows 7 Professional systems (mostly 32-bit, 3 or 4 of them 64-bit) with a standard set of software: http://i.imgur.com/C4XnUUl.png – LogicDaemon – 2013-12-26T20:47:22.743