Previous answers are wrong by an order of magnitude!

The best compression algorithm that I have personal experience with is paq8o10t (see zpaq page and PDF).

Hint: the command to compress files_or_folders looks like this:

paq8o10t -5 archive files_or_folders

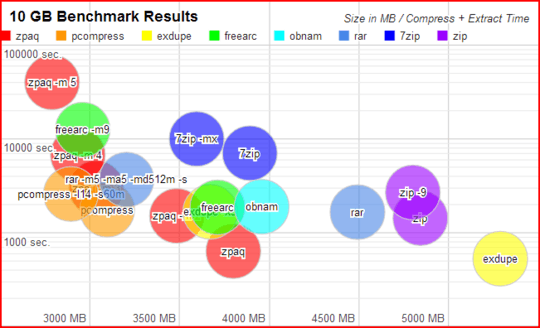

Source: Incremental Journaling Backup Utility and Archiver

You can find a mirror of the source code on GitHub.

A slightly better compression algorithm, and winner of the Hutter Prize, is decomp8 (see link on prize page). However, there is no compressor program that you can actually use.

For really large files lrzip can achieve compression ratios that are simply comical.

An example from README.benchmarks:

Let's take six kernel trees one version apart as a tarball, linux-2.6.31 to linux-2.6.36. These will show lots of redundant information, but hundreds of megabytes apart, which lrzip will be very good at compressing. For simplicity, only 7z will be compared since that's by far the best general purpose compressor at the moment:

These are benchmarks performed on a 2.53Ghz dual core Intel Core2 with 4GB ram using lrzip v0.5.1. Note that it was running with a 32 bit userspace so only 2GB addressing was possible. However the benchmark was run with the -U option allowing the whole file to be treated as one large compression window.

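Tarball of 6 consecutive kernel trees.

| Compression | Size (bytes) | Percentage | Compress | Decompress |
|-------------|--------------|------------|----------|------------|
| None        | 2373713920   | 100        | [n/a]    | [n/a]      |
| 7z          | 344088002    | 14.5       | 17m26s   | 1m22s      |
| lrzip       | 104874109    | 4.4        | 11m37s   | 56s        |
| lrzip -l    | 223130711    | 9.4        | 05m21s   | 1m01s      |
| lrzip -U    | 73356070     | 3.1        | 08m53s   | 43s        |
| lrzip -Ul   | 158851141    | 6.7        | 04m31s   | 35s        |
| lrzip -Uz   | 62614573     | 2.6        | 24m42s   | 25m30s     |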
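To make the Percentage column easier to check, here is a minimal Python sketch that recomputes it from the sizes in the table and also prints the equivalent compression ratio. The sizes are copied from the benchmark; the helper names `percent_of_original` and `ratio` are my own and are not part of lrzip.

```python
# Sizes in bytes, copied from the benchmark table above.
ORIGINAL_SIZE = 2373713920

compressed_sizes = {
    "7z": 344088002,
    "lrzip": 104874109,
    "lrzip -l": 223130711,
    "lrzip -U": 73356070,
    "lrzip -Ul": 158851141,
    "lrzip -Uz": 62614573,
}


def percent_of_original(size, original=ORIGINAL_SIZE):
    """Compressed size as a percentage of the original, to one decimal place."""
    return round(100 * size / original, 1)


def ratio(size, original=ORIGINAL_SIZE):
    """Compression ratio expressed as original size divided by compressed size."""
    return original / size


for name, size in compressed_sizes.items():
    print(f"{name:10s} {percent_of_original(size):5.1f}%  {ratio(size):5.1f}:1")
```

Read as ratios, lrzip -Uz comes out at roughly 38:1 on this tarball, against roughly 7:1 for 7z.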

igrimpe: Many compression algorithms index patterns. A billion A's is an A a billion times. You can compress that to [A]{1, 1000000000}. If you have a billion random numbers, it becomes difficult to do pattern matching since each consecutive number in a given subset decreases the probability of a matching subset exponentially. – AaronF – 2016-09-20T22:46:25.017

Nice idea ... but where do you get such files from anyways? – Robinicks – 2009-08-22T11:42:14.583

I've seen 7zip compress server log files (mainly text) down to about 1% of their original size. – Umber Ferrule – 2009-10-20T13:07:31.137

Open Notepad. Type 1 Billion times "A". Save, then compress. WOW! Create an app that writes 1 Billion (true) random numbers to a file. Compress that. HUH? – igrimpe – 2012-12-28T08:51:00.357
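The experiment igrimpe describes can be tried at a smaller scale with a few lines of Python. This is only a sketch under my own assumptions: it uses one million bytes instead of a billion so it runs quickly, the standard-library `zlib` module instead of any of the compressors discussed above, and `os.urandom` as a stand-in for "true" random numbers.

```python
import os
import zlib

N = 1_000_000  # scaled down from a billion so the experiment runs quickly

repetitive = b"A" * N          # the "type A a million times" file
random_bytes = os.urandom(N)   # assumption: OS randomness stands in for "true" random data


def compressed_size(data):
    """Size in bytes after DEFLATE compression at the highest zlib level."""
    return len(zlib.compress(data, 9))


repetitive_size = compressed_size(repetitive)
random_size = compressed_size(random_bytes)

print(f"repetitive: {N} -> {repetitive_size} bytes")
print(f"random:     {N} -> {random_size} bytes")
```

The repetitive input should shrink to a tiny fraction of its size, while the random input should stay at about its original size, since there are no repeated patterns for the compressor to exploit.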