Short Answer:

YES. You'll always pay for the USB power with at least that much extra power from the wall. Not only is this required by the laws of thermodynamics, it's also inherent in the way power supplies work.

Longer Answer:

We'll treat the whole computer system (its internal power supply, its operating circuits and the USB port circuitry) as one big black box called the Supply. For the purposes of this illustration, the whole computer is one oversized USB charger with two outputs: the computer operating power, which we will call Pc, and the USB output power, which we will call Pu.

Converting power from one form (voltage, current, frequency) to another, and conducting power from one part of a circuit to another, are physical processes that are less than perfect. Even in an ideal world, with superconductors and yet-to-be-invented components, the circuit can be no better than perfect. (The importance of this subtle point will turn out to be the key to this answer.) If you want 1W out of a circuit, you must put in at least 1W, and in all practical cases a bit more than 1W. That bit more is the power lost in the conversion, and is called loss. We will call the loss power Pl; it depends on, and grows with, the amount of power delivered by the supply. Loss almost always shows up as heat, which is why electronic circuits that carry large power levels must be ventilated.

There is some mathematical function (an equation) that describes how the loss varies with output power. It will involve the square of output voltage or current where power is lost in resistance, and a frequency multiplied by output voltage or current where power is lost in switching. But we don't need to dwell on that; we can wrap all that irrelevant detail into one symbol, f(Po), where Po is the total output power. Since Po = Pc + Pu, the loss is related to the output power by the equation Pl = f(Pc+Pu).
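As an illustration only, here is one way such an f(Po) might look. This sketch assumes a fixed output voltage, so output current is Po divided by that voltage; the resistive term then goes with the square of the current and the switching term with switching frequency times output power. The parameter names `v_out`, `r_series`, `f_sw` and `k_sw` and their values are hypothetical, not measurements of any real supply.

```python
def loss(po, v_out=12.0, r_series=0.01, f_sw=100e3, k_sw=1e-7):
    """Hypothetical loss f(Po) in watts for a total output power po in watts.

    Assumes a fixed output voltage v_out (V), so the output current is po / v_out.
    r_series (ohms), f_sw (Hz) and k_sw (s) are illustrative values only.
    """
    current = po / v_out
    resistive = r_series * current ** 2   # conduction loss: I^2 * R
    switching = k_sw * f_sw * po          # switching loss: frequency x (V * I = po)
    return resistive + switching


for po in (0.0, 100.0, 200.0, 400.0):
    print(f"Po = {po:5.1f} W  ->  Pl = {loss(po):.3f} W")
```

Written this way, f(Po) reduces to the A*Po^2 + B*Po form used later in this answer, with A = r_series / v_out^2 and B = k_sw * f_sw. The only property the argument actually needs is that the loss does not decrease as output power increases.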

A power supply is a circuit which requires power to operate, even if it is delivering no output power at all. Electronics engineers call this the quiescent power, and we'll refer to it as Pq. Quiescent power is constant, and is absolutely unaffected by how hard the power supply is working to deliver the output power. In this example, where the computer is performing other functions besides powering the USB charger, we include the operating power of the other computer functions in Pq.

All this power comes from the wall outlet, and we will call this input power Pw (Pi looks confusingly like Pl, so I switched to Pw for wall power).

So now we are ready to put all of the above together and describe how these power contributions are related. First, we know that every microwatt of output power, or loss, comes from the wall. So:

Pw = Pq + Pl + Pc + Pu

And we know that Pl = f(Pc+Pu), so:

Pw = Pq + f(Pc+Pu) + Pc + Pu
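A minimal Python sketch of this model, with the loss function passed in as a parameter so that any f can be plugged in. The example loss (A*Po^2 + B*Po with A = 10^-4 and B = 10^-2, the form used later in this answer) and the operating point (Pc = 200 W, Pu = 5 W, Pq = 30 W) are assumptions chosen for illustration.

```python
def wall_power(pc, pu, pq, loss):
    """Wall power Pw = Pq + f(Pc + Pu) + Pc + Pu for the black-box Supply.

    pc: computer operating power (W), pu: USB output power (W),
    pq: quiescent power (W), loss: function mapping total output power to loss (W).
    """
    return pq + loss(pc + pu) + pc + pu


def example_loss(po):
    # Illustrative loss model Pl = A*Po^2 + B*Po (coefficients assumed)
    return 1e-4 * po ** 2 + 1e-2 * po


pw = wall_power(pc=200.0, pu=5.0, pq=30.0, loss=example_loss)
print(f"Pw = {pw:.4f} W")
```

Comparing `wall_power(pc, pu, ...)` with `wall_power(pc, 0.0, ...)` gives the extra wall power drawn by the USB load, which is exactly the comparison made in the hypothesis test.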

Now we can test the hypothesis that taking power from the USB output increases the wall power by less than the USB power. We can formalise this hypothesis, see where it leads, and see whether it predicts something absurd (in which case the hypothesis is false) or something realistic (in which case the hypothesis remains plausible).

We can write the hypothesis first as:

(Wall power with USB load) - (Wall power without USB load) < (USB power)

and mathematically as:

[ Pq + f(Pc+Pu) + Pc + Pu ] - [ Pq + f(Pc) + Pc ] < Pu

Now we can simplify this by eliminating the same terms on both sides of the minus sign and removing the brackets:

f(Pc+Pu) + Pu - f(Pc) < Pu

then by subtracting Pu from both sides of the inequality (< sign):

f(Pc+Pu) - f(Pc) < 0
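The cancellation can also be checked numerically. This sketch assumes the illustrative loss A*Po^2 + B*Po (A = 10^-4, B = 10^-2) and Pq = 30 W, both taken from the example later in this answer; the grid of Pc and Pu values is arbitrary. For every combination, the extra wall power minus the USB power equals f(Pc+Pu) - f(Pc), and because this loss grows with output power, it is never negative.

```python
def example_loss(po, a=1e-4, b=1e-2):
    # Illustrative loss model Pl = A*Po^2 + B*Po (increasing for Po >= 0)
    return a * po ** 2 + b * po


def wall_power(pc, pu, pq=30.0):
    # Pw = Pq + f(Pc + Pu) + Pc + Pu
    return pq + example_loss(pc + pu) + pc + pu


def extra_over_usb(pc, pu):
    """(Wall power with USB load) - (wall power without USB load) - (USB power)."""
    return wall_power(pc, pu) - wall_power(pc, 0.0) - pu


for pc in (50.0, 200.0, 600.0):
    for pu in (0.5, 2.5, 10.0):
        excess = extra_over_usb(pc, pu)
        # Pq and Pc cancel, leaving only the extra loss f(Pc+Pu) - f(Pc)
        assert abs(excess - (example_loss(pc + pu) - example_loss(pc))) < 1e-9
        # For a loss that grows with output power, the excess is never negative
        assert excess >= 0
        print(f"Pc={pc:6.1f} W  Pu={pu:5.1f} W  extra loss={excess:.4f} W")
```

For the hypothesis to hold, `extra_over_usb` would have to return a negative number, which needs a loss function that falls as output power rises.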

Here is our absurdity. What this result means in plain English is:

The extra loss involved in taking more power from the supply is negative

This means negative resistors, negative voltages dropped across semiconductor junctions, or power magically appearing from the cores of inductors. All of this is nonsense, fairy tales, wishful thinking of perpetual-motion machines, and is absolutely impossible.

Conclusion:

It is not physically possible, theoretically or otherwise, to get power out of a computer's USB port with less than the same amount of extra power coming from the wall outlet.

What did @zakinster miss?

With the greatest respect to @zakinster, he has misunderstood the nature of efficiency. Efficiency is a consequence of the relationship between input power, loss and output power, and not a physical quantity for which input power, loss and output power are consequences.

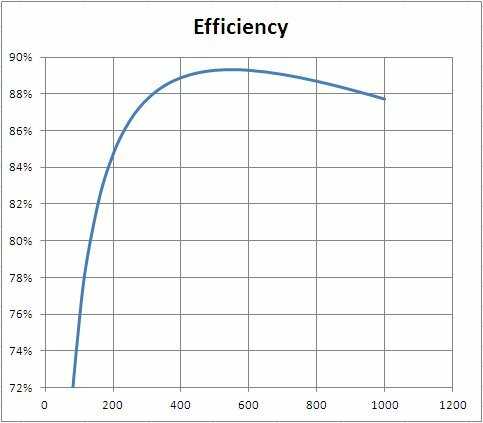

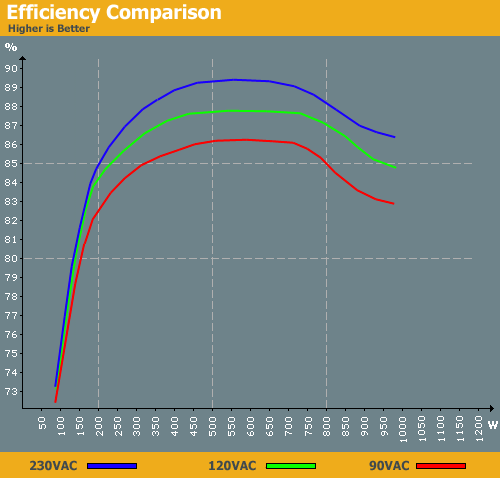

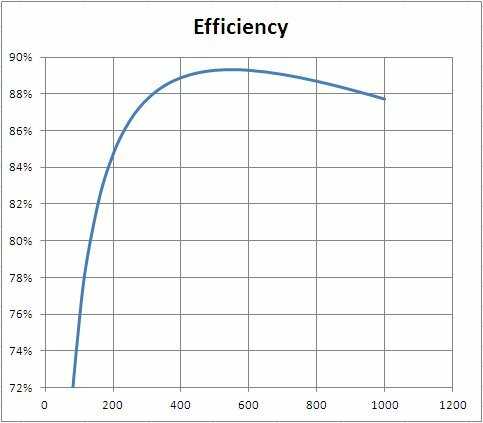

To illustrate, let's take the case of a power supply with a maximum output power of 900W, losses given by Pl = APo² + BPo where A = 10^-4 and B = 10^-2, and Pq = 30W. Modelling the efficiency (Po/Pw) of such a power supply in Excel and graphing it on a scale similar to the Anand Tech curve gives:
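The same efficiency curve can be tabulated without a spreadsheet. This sketch uses the parameters stated above (Pq = 30 W, A = 10^-4, B = 10^-2, outputs up to 900 W) and computes efficiency as Po/Pw with Pw = Pq + Pl + Po; the sample output powers and the function name `efficiency` are arbitrary choices for illustration.

```python
PQ = 30.0       # quiescent power, W
A = 1e-4        # coefficient of Po^2 in the loss model, 1/W
B = 1e-2        # coefficient of Po in the loss model, dimensionless
PO_MAX = 900.0  # maximum output power, W


def efficiency(po):
    """Efficiency Po / Pw, where Pw = Pq + Pl + Po and Pl = A*Po^2 + B*Po."""
    pl = A * po ** 2 + B * po
    return po / (PQ + pl + po)


for po in (10, 25, 50, 100, 200, 400, 600, 900):
    print(f"{po:4d} W  {100 * efficiency(po):5.1f} %")

# Peak efficiency where d(eta)/dPo = 0, which reduces to A*Po^2 = Pq
po_peak = (PQ / A) ** 0.5
print(f"peak: {100 * efficiency(po_peak):.1f} % at {po_peak:.0f} W (max {PO_MAX:.0f} W)")
```

With these parameters the efficiency climbs from roughly 25% at 10 W to roughly 76% at 100 W, then peaks near 89% at about 550 W: a steep initial rise produced by Pq alone, with no free power anywhere in the model.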

This model has a very steep initial curve, like the Anand Tech supply, but it is built entirely on the analysis above, which shows free power to be absurd.

Let's take this model and look at the examples @zakinster gives in Case 2 and Case 3. If we change Pq to 50W and make the supply perfect, with zero loss, then we get 80% efficiency at a 200W load. But even in this perfect situation, the best we can get at 205W is 80.39% efficiency. Reaching the 80.5% that @zakinster suggests is a practical possibility would require a negative loss function, which is impossible, and achieving 82% efficiency would require an even larger negative loss.
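The lossless figures can be checked with a few lines of arithmetic. This sketch assumes, as in the paragraph above, Pq = 50 W and zero loss, so the efficiency is Po/(Po + Pq). For a target efficiency at a given output, the loss the supply would need is Pw - Po - Pq with Pw = Po/efficiency; a negative result means the target requires negative loss. The function names are illustrative.

```python
PQ = 50.0  # quiescent power, W (otherwise lossless supply)


def lossless_efficiency(po, pq=PQ):
    """Best possible efficiency when the only overhead is quiescent power."""
    return po / (po + pq)


def required_loss(po, target_eff, pq=PQ):
    """Loss (W) a supply would need to reach target_eff at output po: Pw - Po - Pq."""
    pw = po / target_eff
    return pw - po - pq


print(f"200 W: {100 * lossless_efficiency(200):.2f} %")
print(f"205 W: {100 * lossless_efficiency(205):.2f} %")
for target in (0.805, 0.82):
    print(f"{100 * target:.1f} % at 205 W needs a loss of {required_loss(205, target):+.2f} W")
```

At 205 W the 80.5% target needs about -0.34 W of loss and the 82% target about -5 W; both are negative.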

In summary, please refer to the Short Answer above.

Probably not enough to measure without lab-quality meters. – Daniel R Hicks – 2013-06-06T10:34:33.353

@DanielRHicks If he plugs in five devices at 0.5A each, that makes 16W (at 80% efficiency). That may not be relevant for the electricity bill, but it's easily measurable with a $15 watt-meter. – zakinster – 2013-06-06T11:52:43.510

@zakinster: However, the additional current drawn from the mains by just one charging USB device is about 10 mA. That could easily be within the error of an inexpensive multimeter measuring up to 2 A. Moreover, the power consumption of a PC could very well vary by 16 W in a few seconds, regardless of whether USB devices are plugged in. – Marcks Thomas – 2013-06-06T13:31:37.010

I can measure the difference with an EUR 25 (roughly US $30) 'kill-a-watt' meter. At least some of these things are both cheap and sensitive enough. (Power usage is stable at 154 W when my host is idle, with no applications running; I monitored for a few minutes to make sure I got a good reading rather than spikes.) – Hennes – 2013-06-06T14:03:25.930

Randall Munroe briefly discusses your question here: http://what-if.xkcd.com/35/ – Eric Lippert – 2013-06-06T15:19:04.953

No. You can start making profit by pumping energy from USB sockets. – Val – 2013-06-06T16:29:03.763

My UPS has a power meter, and when I suspend my computer with no USB devices plugged in, the power usage measures 0 watts. If I plug in a tablet and two phones for charging (the USB ports are always powered while the computer is suspended), the power usage reads 7 watts. I don't know how accurate the UPS power meter is, but there's definitely measurable power used. I haven't checked USB power usage while the computer is powered on, but the computer hovers around 80W while idle, so I'm assuming that USB charging would push it to around 87W. – Johnny – 2013-06-06T17:18:13.670

Good question. ~Is putting an extra item in your fridge making it use more electricity? – tymtam – 2013-06-07T07:39:48.310

@Tymek, the fridge is obviously heated when you put a warm thing into it, and it must pump out more heat to cool that thing down. So, the right question is: does your microwave oven reduce consumption when there is no food in it? When there is a metal solid in it, which becomes white-hot instantly? – Val – 2013-06-09T11:28:04.273

@Val What if the inside of the fridge just gets warmer and the fridge cooler doesn't start because it's still below a threshold? I think you're too quick to dismiss my question, but I like your microwave example. – tymtam – 2013-06-09T23:50:02.000