58

10

Can I run Windows' defrag on an SSD?

Well, I believe the short answer is yes, but I've heard that SSDs require specially tailored defrag programs.

Is it true and, if so, where can I get it?

111

Since there seems to be some controversy about this, I thought it would be helpful to give a detailed explanation:

You should never defrag an SSD. Don't even think about it. The reason is that physical data placement on an SSD is handled solely by the SSD's firmware, and what it reports to Windows is NOT how the data is actually stored on the SSD.

This means that the physical data placement a defragger shows in its fancy sector chart has nothing to do with reality. The data is NOT where Windows thinks it is, and Windows has no control over where the data is actually placed.

To even out wear on its internal memory chips, the SSD firmware intentionally splits data up across all of the SSD's memory chips, and it also moves data around on these chips when it isn't busy reading or writing.

Windows never sees any of this, so if you run a defrag, Windows will simply cause a whole bunch of needless I/O to the SSD, and this will do nothing except decrease the useful life of the SSD.
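To make the mapping point concrete, here is a toy Python model of the translation layer inside SSD firmware (commonly called a flash translation layer, or FTL). It is a hypothetical sketch, not how any real drive works: the class name, the 16-page size and the "next free page" allocation policy are all assumptions. It shows that the OS only ever chooses logical block numbers, and that "defragmenting" a file merely spends extra page writes.

```python
class ToyFTL:
    """Hypothetical, heavily simplified flash translation layer (FTL).

    The OS only names logical block addresses (LBAs); the firmware alone
    decides which physical flash page holds each one.
    """

    def __init__(self, num_pages: int) -> None:
        self.free_pages = list(range(num_pages))  # erased pages, in allocation order
        self.mapping = {}                         # LBA -> physical page
        self.stale = set()                        # old copies awaiting garbage collection
        self.page_writes = 0                      # total flash page programs (wear)

    def write(self, lba: int) -> int:
        """Write one logical block out of place and return the page chosen for it."""
        old_page = self.mapping.get(lba)
        if old_page is not None:
            self.stale.add(old_page)  # flash can't overwrite in place; old copy is garbage
        page = self.free_pages.pop(0)  # the allocation policy is the firmware's, not Windows'
        self.mapping[lba] = page
        self.page_writes += 1
        return page


ftl = ToyFTL(num_pages=16)
for lba in (5, 9, 2):  # a file the OS sees as fragmented: LBAs 5, 9, 2
    ftl.write(lba)
for lba in (0, 1, 2):  # a "defrag" copies it to LBAs 0, 1, 2
    ftl.write(lba)

print(ftl.page_writes)  # 6: the defrag doubled the wear for this file
# LBAs 5 and 9 still occupy pages: the firmware can't know the file system freed them.
print(ftl.mapping)
```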

Do needless reads and writes (billions and billions of them) hurt the SSD's lifetime? (As opposed to an HDD, which obviously will lose lifetime)... – Royi Namir – 2013-05-29T06:35:03.583

@Royi Actually, reading doesn't hurt an SSD; writing does. – Little Helper – 2013-05-29T06:37:53.670

Furthermore, SSDs have no seek time, so there would be no performance benefit to laying out your files in consecutive blocks. – 200_success – 2013-05-29T09:04:14.473

@RoyiNamir: actually, the write lifetime of an SSD is far, far below billions: it is usually said to be around 50,000 to 100,000 write cycles, but this means you can write 100,000 common documents (not 100,000 times the whole disk capacity). I heard (e.g. http://www.storagesearch.com/ssdmyths-endurance.html) that individual cells can only accept up to 2,000 - 3,000 cycles on the cheap SSD versions... So be very careful what you place on those: no tmp files, disable boot optimization, disable defrag, etc. – Olivier Dulac – 2013-05-29T09:44:31.220

@OlivierDulac - Those limits have increased significantly; your source link is not current. – Ramhound – 2013-05-29T11:16:41.957

@OlivierDulac: while your data is correct, your conclusions are completely wrong. It's around 3K cycles per cell for MLC, and SSDs have advanced controller chips that distribute writes evenly over cells and prevent hotspotting. With TLC it's even just 1,000 cycles per cell. That means a 256GB TLC SSD, with 10GB a day of writes and 3x write amplification, will last about 23 years. A 256GB MLC SSD under the same conditions will last 70 years. And even those are very conservative estimates; in real life they seem to last much, much longer: http://www.anandtech.com/show/6459/samsung-ssd-840-testing-the-endurance-of-tlc-nand – vartec – 2013-05-29T11:34:10.133

@OlivierDulac: even if you write a lot of data (at least 7GB) per day to an SSD, the flash memory should last for tens, if not hundreds, of years. It's far more likely that the controller hardware or software will fail, given the number of failures that have been reported by manufacturers and users. – Little Helper – 2013-05-29T11:42:17.243
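The endurance figures in these comments come from simple arithmetic: total writable data (capacity × P/E cycles, assuming perfect wear leveling) divided by the data actually written to flash per day (host writes × write amplification). A minimal sketch using vartec's inputs; the function name is hypothetical, and it ignores the GB/GiB difference, over-provisioning and cell aging.

```python
def ssd_lifetime_years(capacity_gb: float, pe_cycles: int,
                       host_gb_per_day: float, write_amplification: float) -> float:
    """Idealised NAND endurance estimate, assuming perfect wear leveling."""
    total_writable_gb = capacity_gb * pe_cycles            # every cell worn out evenly
    nand_gb_per_day = host_gb_per_day * write_amplification  # what the flash really absorbs
    return total_writable_gb / nand_gb_per_day / 365


tlc = ssd_lifetime_years(256, 1000, 10, 3)  # TLC: ~1,000 cycles per cell
mlc = ssd_lifetime_years(256, 3000, 10, 3)  # MLC: ~3,000 cycles per cell
print(f"TLC: {tlc:.1f} years, MLC: {mlc:.1f} years")  # TLC: 23.4 years, MLC: 70.1 years
```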

@vartec: I already specified "individual cells can only accept up to 2,000"... and before that I mentioned 100,000 for the whole disk (as, indeed, it will try very hard to spread writes across multiple cells). But it can't do magic if a person regularly updates the whole disk contents, which can happen (video editors, etc.). So I still want people to be aware of this and to "be very careful what you place on those". – Olivier Dulac – 2013-05-29T12:27:52.923

@Roberts: thanks for those numbers, which give a hint of what is possible with those drives under 'everyday usage'. I was more pointing out that they are not the same as HDDs: on HDDs you have less incentive to reduce writes (even though HDDs are less reliable overall, they can support more writes per "section"). Which is safer to use, and for what, all depends on the usage pattern. – Olivier Dulac – 2013-05-29T12:37:36.417

An interesting thing to keep in mind that can help both the performance AND the lifetime of every kind of disk: disabling (in each Windows installation you use) the "update Last Access" setting in NTFS: http://msdn.microsoft.com/en-us/library/ms940846%28v=winembedded.5%29.aspx (of course this is only useful if you use NTFS on those disks; and beware of possible differences between recent and older Windows versions: this trick works on XP, and probably on many other flavors). Another (easier) way is fsutil: http://www.techrepublic.com/blog/window-on-windows/improve-windows-xp-pros-ntfs-performance-by-disabling-the-accessed-timestamp/564 – Olivier Dulac – 2013-05-29T12:41:03.790

@OlivierDulac: the source you quote says "100,000 in 1997" and "The typical range today for flash SSDs is from 1 to 5 million. The technology trend has been for this to get better. When this article was published, in March 2007 [...]" – vartec – 2013-05-29T12:58:19.633

New reliability poll shows surprising SSD failure rate (2012-09-12) – Gary S. Weaver – 2013-05-29T14:05:30.437

However, you can significantly lower your SSD failure risk by purchasing wisely and taking precautions against power failure, etc. See this answer on ServerFault, though I'm not sure I'd follow its advice on manufacturers. – Gary S. Weaver – 2013-05-29T14:17:46.417

I read somewhere (IIRC in one of the many AnandTech articles from a few years ago) that the flash cells in an SSD will die of old age within a decade even if you don't hit the write limit sooner. – Dan is Fiddling by Firelight – 2013-05-29T15:10:55.920

@200_success Actually, SSDs do have seek time. More specifically, SSDs read and write faster when you access data sequentially. This person explains it much better than I can: http://www.dpreview.com/forums/post/40353067 I don't know if this speed increase is due to some sort of "cache miss", to the time it takes to change the address, or to something I can't imagine, but SSDs do have a delay for non-sequential access. And that, to me, means they have a seek time. – Patrick M – 2013-05-29T15:15:21.697

Microsoft's defragmentation doesn't defrag; instead it clears unused space on an SSD so that wear leveling (done by the SSD firmware) works better. – Sylwester – 2013-05-29T15:23:31.010

@PatrickM - Agreed. It's worth pointing out that SSD seek time can almost always be ignored, unlike on an HDD, where seek/rotation time is the dominating factor (and reading sequentially is almost "for free"). With a typical queue depth (QD=32) for an IO-intensive application, reading 4K blocks randomly takes roughly double the time of a sequential read on an SSD. On an HDD, seek time is ~10 ms (and reading 1 MB sequentially takes ~20 ms), but reading 1 MB randomly in 4K blocks would take ~2560 ms (over 100 times slower than reading sequentially). – dr jimbob – 2013-05-29T20:04:48.780
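dr jimbob's HDD numbers can be reproduced with a back-of-the-envelope calculation. This sketch uses only the figures from the comment (~10 ms per random access, ~20 ms for a 1 MB sequential read) and, like the comment, ignores transfer time in the random case.

```python
BLOCK_KB = 4
READ_KB = 1024        # a 1 MB read
HDD_SEEK_MS = 10      # ~10 ms per random access (figure from the comment)
HDD_SEQ_1MB_MS = 20   # ~20 ms to read 1 MB sequentially (figure from the comment)

blocks = READ_KB // BLOCK_KB        # 256 separate 4K reads
random_ms = blocks * HDD_SEEK_MS    # one seek per block; transfer time ignored
ratio = random_ms / HDD_SEQ_1MB_MS  # how much slower random is than sequential
print(blocks, random_ms, ratio)     # 256 2560 128.0
```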

"what it reports to Windows is NOT how the data is actually stored on the SSD." True, but the physical layout is irrelevant unless you are using a SEM to access the data. In normal, real-life situations, what matters is the layout it reports to the OS, and since files do get fragmented, SSDs suffer from the same drawback of fragmentation as all storage when it comes to data recovery: fragmented files are much harder, and often impossible, to recover. – Synetech – 2013-08-06T01:21:02.570

26

Someone (200_success) made a comment that is much more relevant to the question, and it deserves a more detailed explanation.

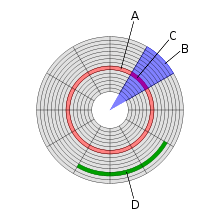

First of all: HDD means hard disk drive, and it really has a disk inside it. This disk is divided is small pieces, called sectors, where information is stored. Take a look at this picture:

A sector is indicated by the letter C.

Now, this sector is very small: generally only 512 bytes. So, to store a common 10 KB file, you'll need many sectors (20 of them).

Imagine that those sectors follow one after another, like the green representation, letter D, in the picture. When you need to read the file, the hard-drive head will be positioned at the beginning of the first sector and will read all of them while the disk is spinning.

That's how things should work.

Now, the file might instead be spread across many sectors, each in a different part of the disk. What does that mean? To read your file again, the hard-disk head will be positioned at the beginning of the first sector and read it, then it will have to move to the beginning of the second sector (which is somewhere else on the disk), read it, and so on...

This will take a long time. We're talking about a physical move of the head. The more the head moves, the longer it takes.

So, you defrag the disk: the program tries to move all pieces of the file so that they end up in sequence, making the file easier and faster to read, since the head has to move less to read everything.
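A rough model of why defragmenting helps a spinning disk: every gap between consecutive sectors of a file costs another head movement. The seek and per-sector timings below are hypothetical round numbers (about 10 ms per seek and ~100 MB/s transfer), not measurements of any real drive.

```python
import math

SECTOR_BYTES = 512
SEEK_MS = 10.0          # hypothetical average seek + rotational latency
SECTOR_READ_MS = 0.005  # hypothetical transfer time per sector (~100 MB/s)


def sectors_needed(file_bytes: int) -> int:
    """Whole sectors required to hold a file of the given size."""
    return math.ceil(file_bytes / SECTOR_BYTES)


def read_time_ms(sectors: list[int]) -> float:
    """One seek for the first sector, plus one more at every discontinuity."""
    seeks = 1 + sum(1 for prev, cur in zip(sectors, sectors[1:]) if cur != prev + 1)
    return seeks * SEEK_MS + len(sectors) * SECTOR_READ_MS


n = sectors_needed(10 * 1024)                   # a 10 KB file needs 20 sectors
contiguous = list(range(1000, 1000 + n))        # defragmented: one run
fragmented = [1000 + 37 * i for i in range(n)]  # every sector in a different place
print(n, f"{read_time_ms(contiguous):.1f}", f"{read_time_ms(fragmented):.1f}")  # 20 10.1 200.1
```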

OK so far? Now let's talk about SSDs: they are a bunch of memory chips on a board. When you save or read something, the controller chip just needs to activate some bits and, voilà, the correct location is read from memory. It doesn't matter where the data is stored: accessing a memory chip is much faster than physically moving an HDD's head. So, roughly speaking, you wouldn't notice the extra time for a fragmented file on an SSD.

And, to be more detailed and precise, the controller chip spreads your file across many chips to take advantage of parallel reads and so on. It knows how to place your files in the best way (optimized for speed and for wear on those memory chips), far better than Windows could.
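A toy illustration of that striping, under loudly simplified assumptions: a hypothetical controller with 4 chips that places each newly written page on the next chip in turn. Because placement follows write order rather than logical address, even a file whose logical pages are scattered ends up evenly spread, and the chips can serve it in parallel. Real controllers use far more channels and smarter policies.

```python
from collections import Counter

NUM_CHIPS = 4  # hypothetical; real drives have many more channels/dies


class StripingController:
    """Toy controller that spreads consecutive writes round-robin over chips."""

    def __init__(self) -> None:
        self.next_chip = 0
        self.chip_of = {}  # logical page -> chip holding it

    def write(self, logical_page: int) -> None:
        self.chip_of[logical_page] = self.next_chip
        self.next_chip = (self.next_chip + 1) % NUM_CHIPS

    def read_rounds(self, pages) -> int:
        """Chips are read in parallel, so the busiest chip sets the read time."""
        load = Counter(self.chip_of[p] for p in pages)
        return max(load.values())


ctrl = StripingController()
scattered_file = [7 * i + 3 for i in range(20)]  # 20 pages, "fragmented" logically
for page in scattered_file:
    ctrl.write(page)
print(ctrl.read_rounds(scattered_file))  # 5: 20 pages over 4 chips in parallel
```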

Fragmentation is not only a problem of the physical disk, but also of the file system managing your data. Highly fragmented files have a lot of extents/runs stored in their NTFS record, which need to be processed when you read the entire file from disk. That's no different on an SSD. – Gene – 2013-05-29T14:04:06.007

Defragmentation solving some minor NTFS organization issue is a different class of thing from defragmentation compensating for the performance problems of spinning disks with moving heads. The only reason NTFS even has these extents is for the sake of spinning disks with moving heads, right? – Kaz – 2013-05-29T15:59:31.740
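Gene's and Kaz's point about extents can be illustrated by collapsing a file's cluster list into (start, length) runs, which is conceptually what the data runs in an NTFS record describe. This sketch does not reproduce NTFS's actual compressed on-disk encoding; `to_extents` is a hypothetical helper that only shows the number of runs growing with fragmentation, whether the volume sits on an HDD or an SSD.

```python
def to_extents(clusters):
    """Collapse a file's ordered cluster numbers into (start, length) runs."""
    extents = []
    for c in clusters:
        if extents and c == extents[-1][0] + extents[-1][1]:
            start, length = extents[-1]
            extents[-1] = (start, length + 1)  # c continues the current run
        else:
            extents.append((c, 1))             # a gap: start a new run
    return extents


print(to_extents(range(100, 106)))               # [(100, 6)]
print(to_extents([100, 101, 102, 500, 501, 7]))  # [(100, 3), (500, 2), (7, 1)]
```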

@Gene: He didn't say he's using NTFS... Yes, defrag helps performance if you're a power user :-). But not with the astonishing results someone got when defragging a disk in the Win95 era. And running defrag often just to gain those .01 microseconds isn't worth the wear. If we start worrying about defrag on SSDs, in a few days we'll be discussing whether changing the sector interleave on an SSD is worthwhile, as it was on those floppies. ;-) – woliveirajr – 2013-05-29T16:23:25.803

@woliveirajr - I remember changing the interleave on my HDD back in the late 1980s, and my PC's speed more than doubled. I occasionally wonder if interleave would make any difference on today's PCs. – Paddy Landau – 2013-05-29T16:51:13.983

@PaddyLandau :) As far as I know, current HDDs have no need for interleave (all sectors are read in a row, so there's no need to set an interleave). – woliveirajr – 2013-05-29T16:57:29.460

14

The built-in defragmentation tool in Windows 8 will not defragment your SSD; instead, it sends a bunch of TRIM commands to the device. For more details on this, see this question. As Roberts already pointed out, you don't want to defragment your SSD at all.
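Why TRIM helps, in a toy garbage-collection model (an assumption-laden sketch, not any vendor's algorithm): before the SSD can erase a block, it must copy out every page it believes still holds live data. Without TRIM, pages belonging to files the OS has already deleted still look live, so they get copied too. The 8-page block and the `gc_copies` helper are hypothetical.

```python
def gc_copies(block_pages, deleted_lbas, trimmed):
    """Pages garbage collection must relocate before erasing one flash block.

    block_pages: the LBA stored in each page of the block, or None if the page
                 is already known to be stale (e.g. overwritten earlier).
    deleted_lbas: LBAs belonging to files the OS has deleted.
    trimmed: whether the OS reported those deletions with TRIM.
    """
    copies = 0
    for lba in block_pages:
        if lba is None:
            continue  # already stale: nothing worth saving
        if trimmed and lba in deleted_lbas:
            continue  # TRIM told the SSD this data is garbage
        copies += 1   # otherwise the SSD must assume the data is still needed
    return copies


block = [10, 11, None, 12, 13, None, 14, 15]  # hypothetical 8-page erase block
deleted = {12, 13, 14}                        # files the OS has deleted
print(gc_copies(block, deleted, trimmed=False))  # 6 pages copied (extra writes)
print(gc_copies(block, deleted, trimmed=True))   # 3 pages copied
```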

That's cool, I wasn't aware that Windows 8 could optimize SSDs. For my Intel drive on Windows 7, you can use a program called SSD Toolbox from Intel to optimize the drive's performance. I have never noticed that it really makes any difference, though. – Tim B – 2013-05-29T12:44:49.720

And it gets much, much worse with "cheap" flash storage like USB sticks and SD cards: https://www.google.com/search?q=how%20to%20damage%20flash%20storage – MarcH – 2015-02-23T22:12:42.270

In reality, you don't need (or want) to defrag an SSD... it wouldn't make your access faster, etc. – woliveirajr – 2013-05-29T12:49:51.070

Possible duplicate of Why can't you defragment Solid State Drives? and Do I need to run defrag on an SSD? – Breakthrough – 2013-05-29T15:49:03.677

I think just about all of these Super User SSD fragmentation answers are wrong... at least as far as fragmented writes go. Yes, wear leveling intentionally fragments files. However, that happens in increments of the erase block size. If the minimum filesystem chunk is smaller than the erase block (almost always the case, and by a large factor), then writing a fragmented file will result in many more erase blocks being rewritten. It can actually be a pretty substantial write-performance hit if the chunks/clusters/inodes/etc. in various erase blocks are heavily fragmented. – darron – 2013-05-29T19:55:28.060
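A toy version of darron's argument, under a loud, hypothetical assumption that clusters sit in flash in LBA order: counting how many erase blocks hold pieces of a file shows how a fragmented file can touch far more erase blocks than a contiguous one of the same size. The 512 KB erase-block size is an assumption (real sizes vary by drive); 4 KB is a common NTFS cluster size.

```python
CLUSTER_KB = 4        # common NTFS cluster size
ERASE_BLOCK_KB = 512  # hypothetical; real erase-block sizes vary by drive
CLUSTERS_PER_BLOCK = ERASE_BLOCK_KB // CLUSTER_KB  # 128 clusters share one erase block


def erase_blocks_touched(clusters) -> int:
    """Count distinct erase blocks holding the given clusters.

    Assumes (hypothetically) that clusters were laid out in flash in LBA order,
    so cluster c lives in erase block c // CLUSTERS_PER_BLOCK.
    """
    return len({c // CLUSTERS_PER_BLOCK for c in clusters})


contiguous = range(64)                     # a 256 KB file in one run
fragmented = [200 * i for i in range(64)]  # the same file scattered across the volume
print(erase_blocks_touched(contiguous), erase_blocks_touched(fragmented))  # 1 64
```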