Unlike FTP, HTTP has no concept of a directory listing. Thus, wget can only look for links in the pages it fetches and follow them according to the rules the user defines.

That being said, if you absolutely want it, you can abuse wget's debug mode to gather a list of the links it encounters when analyzing the HTML pages. It sure ain't no beauty, but here goes:

    wget -d -r -np -N --spider -e robots=off --no-check-certificate \
      https://tcga-data.nci.nih.gov/tcgafiles/ftp_auth/distro_ftpusers/anonymous/tumor/ \
      2>&1 | grep " -> " | grep -Ev "\/\?C=" | sed "s/.* -> //"
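To make the filtering part of that pipeline easier to follow, here is a small sketch that applies the same three stages to a few made-up lines. The helper name extract_links, the example.com URLs and the sample lines are assumptions for illustration only; the sample merely imitates the " -> " shape that the filter relies on and is not real wget output.

```shell
#!/bin/sh
# extract_links is a hypothetical helper: it reads wget debug output on stdin
# and prints only the URLs that follow " -> ".
extract_links() {
    grep ' -> ' |          # keep only lines that record a resolved link
    grep -Ev '/\?C=' |     # drop the "sort-by" links of the directory index
    sed 's/.* -> //'       # strip everything up to and including the arrow
}

# Made-up sample input (assumption, not captured from a real run).
sample='x: merge(a, b) -> http://example.com/tumor/b/
x: merge(a, ?C=N;O=D) -> http://example.com/tumor/?C=N;O=D
some unrelated debug line
x: merge(a, a) -> http://example.com/tumor/a/'

printf '%s\n' "$sample" | extract_links
```

Only the two directory URLs survive: the unrelated line has no arrow, and the ?C= link is removed by the second grep.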

Some side notes:

- This will produce a list which still contains duplicates (of directories), so you need to redirect the output to a file and use

uniq for a pruned list.

--spider causes wget not to download anything, but it still will do a HTTP HEAD request on each of the files it deems to enqueue. This will cause a lot more traffic than is actually needed/intended and cause the whole thing to be quite slow.-e robots=off is needed to ignore a robots.txt file which may cause wget to not start searching (which is the case for the server you gave in your question).- If you have

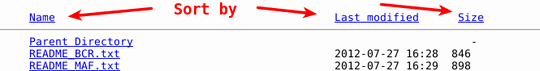

wget 1.14 or newer, you can use --reject-regex="\?C=" to reduce the number of needless requests (for those "sort-by" links already mentioned by @slm). This also eliminates the need for the grep -Ev "\/\?C=" step afterwards.
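Putting the side notes together, a variant for wget 1.14 or newer might look like the sketch below. The function name list_links and the output file name links.txt are assumptions for illustration. The grep -Ev stage is kept only as a cheap safety net in case sort-by links still show up in the debug output, and sort -u takes care of the duplicates.

```shell
#!/bin/sh
# list_links is a hypothetical helper; it assumes wget 1.14+ (--reject-regex).
# $1 is the start URL of the crawl.
list_links() {
    wget -d -r -np -N --spider -e robots=off --no-check-certificate \
        --reject-regex='\?C=' "$1" 2>&1 |
        grep ' -> ' |
        grep -Ev '/\?C=' |   # safety net; may be redundant with --reject-regex
        sed 's/.* -> //' |
        sort -u              # drop duplicates, adjacent or not
}

# Usage (left commented out so that sourcing this file does not hit the network):
# list_links https://tcga-data.nci.nih.gov/tcgafiles/ftp_auth/distro_ftpusers/anonymous/tumor/ > links.txt
```

Wrapping the command in a function keeps the long option list in one place and lets you reuse it for other start URLs.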