The answer depends on what you mean by "full disk encryption".

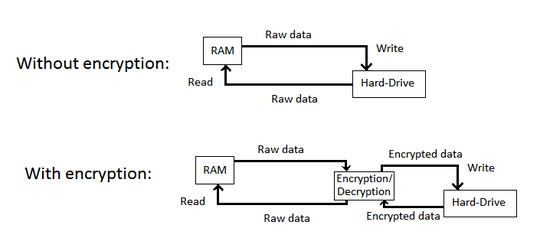

If you simply mean that all files and filesystem metadata are encrypted on the disk, then no, it should have no impact on SSD lifespan.

However, if you mean the more traditional "the entire contents of the disk, including unused space, are encrypted", then yes, it will reduce the lifespan, perhaps significantly.

SSDs use "wear levelling" to spread writes across the device so that a few sections do not wear out prematurely. This works best when the drive knows which sectors are free: modern filesystem drivers tell the SSD when the data in a particular sector is no longer in use (it has been "discard"ed, via the TRIM command), so the SSD can erase that sector in the background and send the next write to whichever free sector has seen the least use.
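As a rough illustration of that idea, here is a toy model in Python. It is only a sketch: the `ToySSD` class and its one-sector-per-block layout are made up for this example, and real controllers are far more complicated (erase blocks span many pages, and there is garbage collection on top).

```python
class ToySSD:
    """Toy model: each logical sector lives in exactly one physical block."""

    def __init__(self, num_blocks):
        self.erase_counts = [0] * num_blocks
        self.free = set(range(num_blocks))  # blocks the drive knows are unused
        self.mapping = {}                   # logical sector -> physical block

    def write(self, sector):
        # The previous copy of this sector (if any) becomes stale and reusable.
        old = self.mapping.pop(sector, None)
        if old is not None:
            self.free.add(old)
        # Wear levelling: pick the least-worn free block (lowest index on ties).
        target = min(self.free, key=lambda b: (self.erase_counts[b], b))
        self.free.remove(target)
        self.erase_counts[target] += 1
        self.mapping[sector] = target

    def discard(self, sector):
        # The filesystem says this sector is no longer in use.
        block = self.mapping.pop(sector, None)
        if block is not None:
            self.free.add(block)


ssd = ToySSD(num_blocks=4)
for _ in range(8):
    ssd.write(0)          # rewrite the same logical sector over and over
print(ssd.erase_counts)   # the writes are spread over all four blocks
ssd.discard(0)
print(len(ssd.free))      # every block is free again
```

Even though only one logical sector is ever written, the wear ends up spread evenly, because the drive is free to put each new copy wherever it likes.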

With a traditional, full-disk encryption scheme, none of the sectors are unused. The ones that do not contain your data are still encrypted. That way an attacker doesn't know what part of your disk has your data, and what part is just random noise, thereby making decryption much more difficult.

To use such a system on an SSD, you have two options:

- Allow the filesystem to continue performing discards, at which point the sectors that don't have your data will be empty and an attacker will be able to focus his efforts on just your data.

- Forbid the filesystem from performing discards, in which case your encryption is still strong, but now the SSD can't do significant wear levelling, and so the most-used sections of your disk will wear out, potentially significantly ahead of the rest of it.
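The trade-off between those two options can be sketched with a small simulation. This is a toy model under loud assumptions, not a description of any real drive: the `simulate` function, the 8-block device, the single spare block and the "one sector per block" mapping are all invented for illustration, and the model ignores static wear levelling and garbage collection, so a real SSD should behave less dramatically.

```python
def simulate(discards_enabled, num_blocks=8, live_sectors=2, hot_writes=12):
    """Return per-block wear after repeatedly rewriting logical sector 0."""
    num_sectors = num_blocks - 1  # one spare block (hypothetical over-provisioning)
    # Encrypting the whole volume writes every logical sector once.
    wear = [1] * num_sectors + [0]
    mapping = {s: s for s in range(num_sectors)}  # logical sector -> physical block
    free = {num_blocks - 1}                       # only the spare block is free

    if discards_enabled:
        # The filesystem tells the drive which sectors hold no real data.
        for sector in range(live_sectors, num_sectors):
            free.add(mapping.pop(sector))

    for _ in range(hot_writes):
        free.add(mapping.pop(0))  # the old copy of sector 0 becomes stale
        # Wear levelling: least-worn free block, lowest index on ties.
        target = min(free, key=lambda b: (wear[b], b))
        free.remove(target)
        wear[target] += 1
        mapping[0] = target

    return wear


without_discards = simulate(discards_enabled=False)
with_discards = simulate(discards_enabled=True)
print(without_discards, max(without_discards))
print(with_discards, max(with_discards))
```

With these made-up parameters, the most-worn block is written 7 times when discards are forbidden (the hot sector just bounces between two blocks) and 3 times when they are allowed, because the drive then has six more free blocks to rotate through.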

this is an exact duplicate of http://superuser.com/questions/57573/does-full-volume-encryption-put-an-ssd-into-a-fully-used-state?rq=1

– None – 2012-07-15T19:59:48.8631

@JarrodRoberson you mean this question? http://superuser.com/questions/39719/what-is-the-lifespan-of-an-ssd-drive Either way, the related questions all have inferior answers, so I wouldn't close them as dupes of those

– Ivo Flipse – 2012-07-15T20:10:54.840

@IvoFlipse the crux of the question I flagged as a duplicate states *"...Will this effectively put the drive into a fully used state and how will this effect the wear leveling and performance of the drive?..."* that is exactly this same question.

– None – 2012-07-15T20:16:42.460