38

5

I'm wondering what the theoretical speedup is from hyper threaded CPUs. Assuming 100% parallelization, and 0 communication - two CPUs would give a speedup of 2. What about hyper threaded CPU?

38

5

I'm wondering what the theoretical speedup is from hyper threaded CPUs. Assuming 100% parallelization, and 0 communication - two CPUs would give a speedup of 2. What about hyper threaded CPU?

59

As others have said, this depends entirely on the task.

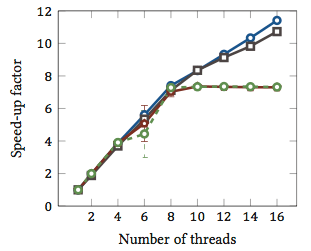

To illustrate this, let’s look at an actual benchmark:

This was taken from my master thesis (not currently available online).

This shows the relative speed-up1 of string matching algorithms (every colour is a different algorithm). The algorithms were executed on two Intel Xeon X5550 quad-core processors with hyperthreading. In other words: there were a total of 8 cores, each of which can execute two hardware threads (= “hyperthreads”). Therefore, the benchmark tests the speed-up with up to 16 threads (which is the maximum number of concurrent threads that this configuration can execute).

Two of the four algorithms (blue and grey) scale more or less linearly over the whole range. That is, it benefits from hyperthreading.

Two other algorithms (in red and green; unfortunate choice for colour blind people) scale linearly for up to 8 threads. After that, they stagnate. This clearly indicates that these algorithms don’t benefit from hyperthreading.

The reason? In this particular case it’s memory load; the first two algorithms need more memory for the calculation, and are constrained by the performance of the main memory bus. This means that while one hardware thread is waiting for memory, the other can continue execution; a prime use-case for hardware threads.

The other algorithms require less memory and don’t need to wait for the bus. They are almost entirely compute bound and use only integer arithmetic (bit operations, in fact). Therefore, there is no potential for parallel execution and no benefit from parallel instruction pipelines.

1 I.e. a speed-up factor of 4 means that the algorithm runs four times as fast as if it were executed with only one thread. By definition, then, every algorithm executed on one thread has a relative speed-up factor of 1.

20

The problem is, it depends on the task.

The notion behind hyperthreading is basically that all modern CPU's have more than one execution issue. Usually closer to a dozen or so now. Divided between Integer, floating point, SSE/MMX/Streaming (whatever it is called today).

Additionally, each unit has different speeds. I.e. It might take an integer math unit 3 cycle to process something, but a 64 bit floating point division might take 7 cycles. (These are mythical numbers not based on anything).

Out of order execution helps alot in keeping the various units as full as possible.

However any single task will not use every single execution unit every moment. Not even splitting threads can help entirely.

Thus the theory becomes by pretending there is a second CPU, another thread could run on it, using the available execution units not in use by say your Audio transcoding, which is 98% SSE/MMX stuff, and the int and float units are totally idle except for some stuff.

To me, this makes more sense in a single CPU world, there faking out a second CPU allows for threads to more easily cross that threshold with little (if any) extra coding to handle this fake second CPU.

In the 3/4/6/8 core world, having 6/8/12/16 CPU's, does it help? Dunno. As much? Depends on the tasks at hand.

So to actually answer your questions, it would depend on the tasks in your process, which execution units it is using, and in your CPU, which execution units are idle/underused and available for that second fake CPU.

Some 'classes' of computational stuff are said to benefit (vaguely generically). But there is no hard and fast rule, and for some classes, it slows things down.

2Although I was looking for something like "1.7 time speedup" this answer is very nice as it doesn't slap a black and white look on this problem. – Mikhail – 2011-05-05T16:00:24.037

@Mikhail: The point is that there is no simple factor - it depends, like often in life :-). – sleske – 2011-05-05T16:28:49.380

4The gist is right. One quibble, though: there is no a priori reason why a single core should benefit more from hyperthreading than multiple cores. For the wrong task, neither profit. For the right task, both profit by the same factor. – Konrad Rudolph – 2011-05-05T20:45:30.800

@Konrad: I think the point I was getting at, is the difference between one core and two cores might be more valuable than the difference between 4 and 8 or 2 and 4. I.e. Having a second core, for a poorly threaded app might help a bit more. – geoffc – 2011-05-06T01:17:45.450

“for a poorly threaded app” – that’s the important bit. But realistically, most applications’ threading support is poor so you have a point. – Konrad Rudolph – 2011-05-06T07:00:27.280

5

I have some anecdotal evidence to add to geoffc’s answer in that I actually have a Core i7 CPU (4-core) with hyperthreading and have played a bit with video transcoding, which is a task that requires an amount of communication and synchronisation but has enough parallelism that you can effectively fully load up a system.

My experience with playing with how many CPUs are assigned to the task generally using the 4 hyperthreaded "extra" cores equated to an equivalent of approximately 1 extra CPU worth of processing power. The extra 4 "hyperthreaded" cores added about the same amount of usable processing power as going from 3 to 4 "real" cores.

Granted this is not strictly a fair test as all the encoding threads would likely be competing for the same resources in the CPUs but to me it did show at least a minor boost in overall processing power.

The only real way to show whether or not it truly helps would be to run a few different Integer/Floating Point/SSE type tests at the same time on a system with hyperthreading enabled and disabled and see how much processing power is available in a controlled environment.

1Well a clear point - it's application dependent. I'm sure high communication computing could be sped up since core 0 and core 0-h would communicate through the same cache, without using slow RAM. – Mikhail – 2011-05-05T16:37:20.573

1

@Mikhail, then the problem is that if both threads require a large amount of processing power then they will both be competing for the same resources and would be much better off communicating through either the CPUs shared L3 cache (the i7 has L1 & L2 cache per core and a shared L3 cache) or even system memory and doing their tasks separately. It's all a massive swings-and-roundabouts exercise...

– Mokubai – 2011-05-05T16:43:45.1773

It depends a lot on the CPU and workload as others have said.

Measured performance on the Intel® Xeon® processor MP with Hyper-Threading Technology shows performance gains of up to 30% on common server application benchmarks for this technology

(This seems a bit conservative to me.)

And there's another longer paper (that I've not read all of yet) with more numbers here. One interesting take-away from that paper is that hyperthreading can make thins slower for some tasks.

AMD's Bulldozer architecture could be interesting. They describe each core as effectively 1.5 cores. It's kind of extreme hyperthreading or sub-standard multi-core depending on how confident you are of its likely performance. The numbers in that piece suggest a comment speed-up of between 0.5x and 1.5x.

Finally, performance is also dependent on the operating system. The OS will, hopefully, send processes to real CPUs in preference to the hyperthreads that are merely masquerading as CPUs. Otherwise in a dual-core system, you may have one idle CPU and one very busy core with two threads thrashing. I seem to recall that this happened with Windows 2000 though, of course, all modern OSes are suitably capable.

1The OS has to make sure that the threads don't clock-block each other :) – Mikhail – 2011-05-06T15:28:42.087

Very late to this party, but still: Very nice answer, I finally understood what's the deal with this hyperthreading! Thanks! – sebhofer – 2017-09-12T15:51:23.933

Late to the party as well, but my understanding is that AMD CPUs handle hyper-threading in a different manner from Intel CPUs. I would be interested to see how this test looks when run on AMD hardware. Disclaimer: I do not work for or represent AMD, I'm just very curious. – dgnuff – 2018-12-14T00:10:08.390

Best answer :-) – Sklivvz – 2011-05-05T22:18:19.907

1What are the actual speeds of the algorithms, plotted against number of cores? I.e. what is the speed gain for the fastest algorithm in these tests? Just wondering :). – crazy2be – 2011-05-05T23:23:18.323

@crazy2be For the blue line (Horspool’s algorithm), the running time goes from 4.16 seconds down to 0.35 seconds with 16 threads. So the speed-up is 11.74. However, that’s with hyper-threading. When plotted against the number of cores, the speed-up of this algorithm is 7.17 on 8 cores.

– Konrad Rudolph – 2011-05-06T06:58:31.370@Konrad - That's an interesting paper, but this graph doesn't help us without the corresponding graph of run time against number of threads. Comparing algorithms by speedup factor only really works if they are of comparable speed in the first place. If the

Naivealgorithm takes twice as long to run as theShift-oralgorithm (for instance) then the hyper-threading might just be recouping some of the loss from using a less efficient algorithm. – Mark Booth – 2011-05-06T10:06:02.963@Mark I’m showcasing relative speed-up. The actual speed of these algorithms is really irrelevant for comparability. In particular, the input data is big enough so that there is guaranteed to be no “recouping some of the loss from using a less efficient algorithm” for multi-threading in general. The naive algorithm cannot magically recoup loss either, there has to be some mechanism for this, and in this case it’s reduced memory load. – Konrad Rudolph – 2011-05-06T10:30:55.690

@Mark But I get what you’re saying. I should mention that for the naive and Horspool’s algorithm the memory load fills the bus so the bandwidth is completely exploited. For the bit parallel algorithms this is no longer the case. – Konrad Rudolph – 2011-05-06T10:38:45.870

5the only problem with this answer is i can only upvote it once. Its a staggeringly objective answer for a subjective question ;) – Journeyman Geek – 2011-05-06T10:43:01.373

@Konrad - I understand that, but relative speed up is just one factor. If one algorithm runs 12x as fast with 16 hyper-threads, but still takes twice as long to run on one core as another which only scales up to the number of physical cores (7x), then the latter is still the better one to use, as it gets you your results 17% faster. I know my scientists would rather have data analysis complete in an hour than in 70 minutes. *8') – Mark Booth – 2011-05-06T10:50:20.320

@Mark “then the latter is still the better one to use” – of course. But that wasn’t the question (neither here nor in my thesis). ;-) – Konrad Rudolph – 2011-05-06T11:09:23.690

@Konrad - The question was "How much speedup does a hyper-thread give?" and your (IMHO correct) answer is "This depends entirely on the task". The problem is, you then go on to justify this with only half of the data needed. It is possible that for some algorithms, hyper-threading will result in a slow down (i.e. it will be slower using 16 hyper-threads than just using 8 real threads), which is why many people turn off hyper-threading in their BIOS, as they have benchmarked and found hyper-threading to be a disadvantage for their application. – Mark Booth – 2011-05-06T12:09:53.077

@Konrad- great answer! Could you please update your post with some useful information from the comments? On the side note - I think the timesdomatter. Bringing the speed down below 1 second makes the overhead comparable. If you could use the same algorithm but with heavier load such that max speed up resulted in 10 seconds - that would be, IMO, a better fraction to look at. – Mikhail – 2011-05-06T15:27:12.1202

@Konrad, could I interest you in writing a blog post about this answer?

– Ivo Flipse – 2011-05-12T14:02:12.160Spectacular answer @KonradRudolph! And interesting read for a thesis. I second @Ivo's request for a blog post regarding this. – James Mertz – 2011-05-12T18:41:08.060

@Ivo Angry Birds for Chrome just came out! But sure, I’ll see whether I can find some free time on the weekend. ;-) – Konrad Rudolph – 2011-05-13T07:13:21.893

The link to the thesis take 'forever' to load, and Firefox eventually gives up. – tshepang – 2013-11-25T20:16:23.450

@Tshepang Yes, unfortunately the website has been dead for months because my host’s technical contact doesn’t reply to requests and I’m too busy to take care of it, I currently haven’t got a replacement space for it. – Konrad Rudolph – 2013-11-25T20:20:09.723