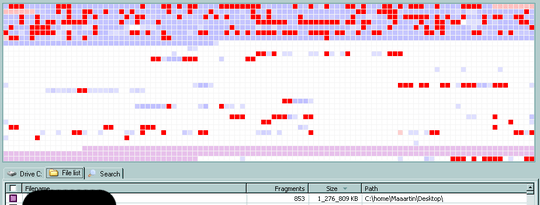

On Windows XP x64, I downloaded a 1.2 GB file, and it ended up fragmented, as the image shows. Unfortunately, I defragmented the other files before taking this snapshot in Piriform Defraggler, so you can't see the exact state at the time the file was written. However, the disk was about as empty as it is now (25% used) the whole time, and it was hardly fragmented.

What block allocation algorithm does NTFS use? It looks random, or maybe it puts the data wherever the disk head currently is.

UPDATE:

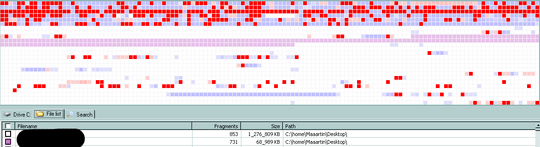

This is what happened today after writing 67 MiB of a new file: it got split into 731 fragments, with an average fragment size of only 95 KiB. The file was used to fill some of the gaps, but not all of them, and it doesn't use the huge contiguous free space either. Strange, isn't it?

UPDATE 2:

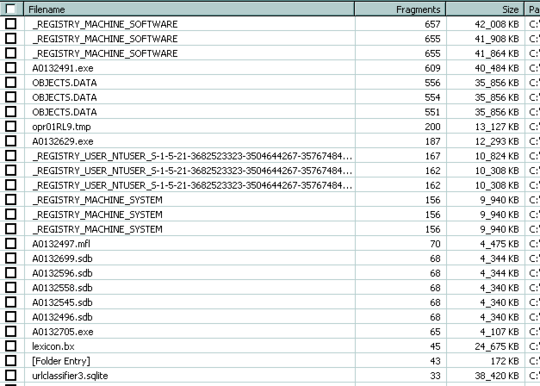

Unlike PC Guru, I really don't think Opera is the culprit. I think it (as opposed to Google Chrome) doesn't tell Windows the expected size; however, there are many cases where that isn't possible, and it's the responsibility of the OS to handle them sanely. The picture above shows what happened after a couple of days in which I did nearly nothing on this partition; the TEMP directory and all my data (except what Windows itself manages) are located elsewhere. Windows itself seems not to use SetEndOfFile and fragments its own files terribly (600 fragments for a couple of small files of about 40 MB). NTFS doesn't seem to use the first available sector either, as there are again files in the middle and near the end of the fairly empty disk (23% used), so the exact algorithm is still unknown.

But it looks like NTFS filled all the gaps and spread the rest more or less uniformly over the whole disk. As I said, the disk was never much fuller than it is now, yet parts of the file were written to some of the last sectors (i.e., to the worst locations). Other parts were squeezed into small areas between two occupied sectors, although there was plenty of free space elsewhere. – maaartinus – 2011-04-25T15:10:06.203

Did you call SetEndOfFile before writing the file to the disk? If you didn't, NTFS has no way of knowing the actual size of the file, so it will grow the file using the available storage. – ReinstateMonica Larry Osterman – 2011-04-25T17:00:22.753
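Larry Osterman's suggestion boils down to announcing the final size before any data is written. Below is a minimal C sketch, assuming the caller already knows the total length (which, as the question notes, a downloading browser often can't). The function name `write_with_known_size` is hypothetical. On Windows it moves the file pointer to the final size, calls `SetEndOfFile`, rewinds, and then writes. On other systems it substitutes `posix_fallocate`, the closest POSIX analogue; this is a swap-in, not something discussed in the thread. The size announcement is treated as a best-effort hint, and where NTFS then places the clusters is still entirely up to NTFS.

```c
/* Sketch: tell the file system the final size before writing the data. */
#if !defined(_WIN32) && !defined(_POSIX_C_SOURCE)
#define _POSIX_C_SOURCE 200112L /* needed for posix_fallocate */
#endif

#include <stddef.h>
#include <stdio.h>

#ifdef _WIN32
#include <windows.h>

/* Hypothetical helper. Windows: set end of file first, then rewind and write. */
int write_with_known_size(const char *path, const void *data, size_t len)
{
    HANDLE h = CreateFileA(path, GENERIC_WRITE, 0, NULL, CREATE_ALWAYS,
                           FILE_ATTRIBUTE_NORMAL, NULL);
    if (h == INVALID_HANDLE_VALUE)
        return -1;

    LARGE_INTEGER pos;
    pos.QuadPart = (LONGLONG)len;
    /* Best effort: move to the final size and mark it as the end of file. */
    if (SetFilePointerEx(h, pos, NULL, FILE_BEGIN))
        SetEndOfFile(h);

    pos.QuadPart = 0; /* rewind before writing the payload */
    if (!SetFilePointerEx(h, pos, NULL, FILE_BEGIN)) {
        CloseHandle(h);
        return -1;
    }

    const char *p = data;
    while (len > 0) {
        DWORD chunk = len > 0x40000000u ? 0x40000000u : (DWORD)len;
        DWORD written = 0;
        if (!WriteFile(h, p, chunk, &written, NULL) || written == 0) {
            CloseHandle(h);
            return -1;
        }
        p += written;
        len -= written;
    }
    return CloseHandle(h) ? 0 : -1;
}
#else
#include <errno.h>
#include <fcntl.h>
#include <sys/types.h>
#include <unistd.h>

/* Hypothetical helper. Non-Windows swap-in: reserve space with posix_fallocate. */
int write_with_known_size(const char *path, const void *data, size_t len)
{
    int fd = open(path, O_WRONLY | O_CREAT | O_TRUNC, 0644);
    if (fd < 0)
        return -1;

    /* Best effort: reserve len bytes up front (len == 0 would be EINVAL). */
    if (len > 0)
        (void)posix_fallocate(fd, 0, (off_t)len);

    const char *p = data;
    while (len > 0) {
        ssize_t n = write(fd, p, len);
        if (n < 0) {
            if (errno == EINTR)
                continue; /* interrupted by a signal: retry */
            close(fd);
            return -1;
        }
        p += n;
        len -= (size_t)n;
    }
    return close(fd) == 0 ? 0 : -1;
}
#endif
```

A call such as `write_with_known_size("download.part", buf, buf_len)` returns 0 on success and -1 on failure.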

It wasn't me, it was Opera, and most probably it didn't. Nonetheless, that's no reason to do anything that strange. – maaartinus – 2011-04-25T19:12:38.553

What do you mean "that strange"? If NTFS knows the size of the file you're writing, it will do smart things about the file. If it doesn't know the file size, it can't do nearly as good a job of storage allocation. – ReinstateMonica Larry Osterman – 2011-04-26T00:53:23.430
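To illustrate why knowing the size helps, here is a deliberately tiny toy model. It is not NTFS's allocator; the thread never establishes what that is. The disk is a string of clusters (`'.'` free, `'#'` used by other files, `'F'` our file). The hypothetical `alloc_growing` places a file of unknown size one cluster at a time in the first free slot it finds. The hypothetical `alloc_known_size` first looks for a single free run long enough for the whole file and only falls back to the growing strategy if none exists.

```c
#include <stddef.h>
#include <string.h>

/* Toy disk: '.' = free cluster, '#' = used by other files, 'F' = our file. */

/* Size unknown: take free clusters one by one from the start (first fit). */
size_t alloc_growing(char *disk, size_t need)
{
    size_t n = strlen(disk), got = 0;
    for (size_t i = 0; i < n && got < need; i++)
        if (disk[i] == '.') {
            disk[i] = 'F';
            got++;
        }
    return got;
}

/* Size known: look for one contiguous free run that fits the whole file. */
size_t alloc_known_size(char *disk, size_t need)
{
    size_t n = strlen(disk), run = 0;
    for (size_t i = 0; i < n; i++) {
        run = (disk[i] == '.') ? run + 1 : 0;
        if (run == need) {
            memset(disk + i + 1 - need, 'F', need);
            return need;
        }
    }
    return alloc_growing(disk, need); /* no single run is big enough */
}

/* Count maximal runs of 'F', i.e. the file's fragments. */
size_t count_fragments(const char *disk)
{
    size_t frags = 0;
    for (size_t i = 0; disk[i] != '\0'; i++)
        if (disk[i] == 'F' && (i == 0 || disk[i - 1] != 'F'))
            frags++;
    return frags;
}
```

With the disk `"#.#..#...#.........."` and an 8-cluster file, the growing version ends up in 4 fragments that plug the small gaps, while the known-size version lands in one contiguous run inside the large free area.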

@Larry Osterman: Sure, without knowing the file size it's hard to do it right. But doing it that badly is hard, too. – maaartinus – 2011-04-27T19:01:41.353