I'm going to premise-challenge a bit here. I understand the frustration of a slow copy, but interrupting the copy is not how you check copy progress.

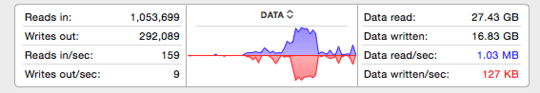

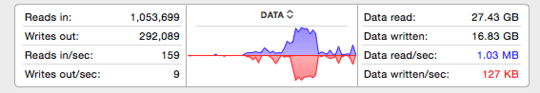

Look at the disk I/O history chart

Fire up Performance Monitor and look at the disk I/O activity history. Do this with the system otherwise quiescent; if it's busy with a lot of other work, you could be looking at that disk activity instead. Other activity will be spiky, though; you're looking for the "baseline" activity that runs all the way across the chart. Mine on the Mac splits it into reads and writes; obviously you're looking at writes (most other activity will be reads).

Older Mac version. Windows' is more robust. The first lump is rsync planning the copy, the second lump is three fat MP4 video files, and the last lump is a bazillion little files.

In my experience, this bar will jump up and down quite a bit. When copying large single files (videos and TIFF files, say), it pegs at whatever the hardware maximum throughput is.*

But when copying bazillions of little files, MBps throughput tanks, because the drives must read (and write) a directory entry for each file. You don't have head seek times and wait-for-sector-to-come-around delays on Flash memory keyfobs, but you do on physical hard drives, big time. Hard drives and robust OSes have some strategies to ease the pain, but there's still big pain.

- This is why I turn all my collections of little files into ZIP packages, tarballs, or disk images.
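As a rough illustration of that packaging step, here is a minimal Python sketch that rolls a directory of small files into one uncompressed tarball. The function name `pack_directory` and its arguments are my own placeholders, not part of any tool mentioned here, and it assumes the archive is written somewhere outside the source directory.

```python
import tarfile
from pathlib import Path


def pack_directory(src_dir: str, archive_path: str) -> int:
    """Pack every regular file under src_dir into one uncompressed tar archive.

    Returns the number of files added. archive_path is assumed to live
    outside src_dir, so the archive never tries to include itself.
    """
    src = Path(src_dir)
    count = 0
    # Mode "w" writes a plain tar with no compression: one sequential stream.
    with tarfile.open(archive_path, "w") as tar:
        for path in sorted(src.rglob("*")):
            if path.is_file():
                # Store paths relative to src_dir so the archive is relocatable.
                tar.add(path, arcname=path.relative_to(src).as_posix())
                count += 1
    return count
```

Reading the little files is still slow on a hard drive; the payoff is that every later copy of the archive is one big sequential transfer.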

Look at the files themselves arriving on the destination drive

Use your windows (or a command prompt) to browse the destination directories. Find the "leading edge" of the copy, i.e. the directories being added into right now. Watch the activity and see if it's normal.
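If you'd rather have numbers than eyeball a folder window, something like the following sketch can sample how fast files and bytes are arriving. The names `dir_stats` and `watch` are hypothetical helpers of mine, and it assumes you point it at a reasonably small "leading edge" directory, because walking millions of files is itself slow and adds reads to the destination drive.

```python
import os
import time


def dir_stats(root: str) -> tuple[int, int]:
    """Return (file_count, total_bytes) for everything under root."""
    count = 0
    total = 0
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            try:
                total += os.path.getsize(os.path.join(dirpath, name))
                count += 1
            except OSError:
                # A file can vanish or be locked mid-copy; skip it.
                pass
    return count, total


def watch(root: str, interval_s: float = 5.0, samples: int = 12) -> None:
    """Print files/s and MB/s arriving under root, once per interval."""
    prev_count, prev_bytes = dir_stats(root)
    for _ in range(samples):
        time.sleep(interval_s)
        count, total = dir_stats(root)
        files_per_s = (count - prev_count) / interval_s
        mb_per_s = (total - prev_bytes) / interval_s / 1e6
        print(f"{files_per_s:8.1f} files/s  {mb_per_s:8.2f} MB/s")
        prev_count, prev_bytes = count, total
```

Treat the MB/s figure as approximate: the size reported for a file that is still being written may not match the bytes actually on disk yet.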

Listen to the hard drive

If your drive is going

tick... tick... tick...

That's a copy going well. The drive is doing big block transfers, using its formatted sector arrangement to best advantage, etc. If on the other hand you hear

shikkita shikkita shikkita

That's disk thrash. Something has gone wrong with your backup, and it's killing throughput. That sound means the drive is seeking the head a lot - and when the head's moving, it's not copying. Worse, when it arrives, it must stabilize and then wait an average of 0.5 disk platter revolutions for the desired sector to come around. Drives spin at 5400-10,000 RPM, so that's 3-6 ms every seek.
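The 3-6 ms figure is just half a revolution at those spindle speeds. A quick check of the arithmetic (the function name is mine; this covers rotational wait only, not the head movement or settling time):

```python
def avg_rotational_latency_ms(rpm: float) -> float:
    """Average wait for a sector: half of one platter revolution, in ms."""
    ms_per_revolution = 60_000.0 / rpm
    return 0.5 * ms_per_revolution


for rpm in (5400, 7200, 10_000):
    print(f"{rpm:>6} RPM: {avg_rotational_latency_ms(rpm):.2f} ms")
# Prints 5.56 ms, 4.17 ms and 3.00 ms respectively.
```

As a back-of-envelope assumption, if each of 5.5 million files cost just one such wait at 5400 RPM, that alone would be about 8.5 hours, before counting any head movement or extra directory updates.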

In the earlier chart, indeed, the drive was quiet as a mouse during the fat section, and in the later section it went shikkita shikkita.

For instance, once I had a nest of 5.5 million tiny (30-500 byte) files in the middle of a big backup. When the drive started sounding like a World War I melee, I checked the graph and saw the bad news. At that rate, the copy would've taken days.

So I quit apps, created an ample RAMdisk, and copied the 5.5M files to the RAMdisk. Reading from HDD, this benefited from disk cache; writing was instant of course. This took a half hour. Then I wrote it back to hard drive as a ZIP file, which wrote as one continuous data stream, so "tick...tick...tick...". That was even faster.

Deleting the 5.5M files took an age, but then, the ZIP file backed up in less than a minute. Big improvement over a day!

As far as when to interrupt the copy, you do that when the above is telling you the copy is not working properly, and you have a plan or want to experiment to fix it.

Never, ever, ever multi-thread

The meters show you'll get best throughput when streaming a single large file like a video. The drive's sector optimization is working at peak performance: the next needed sector appears under the disk head just as it's needed.

But suppose you copy 2 large videos at the same time. The disk is trying to do both at once: it seeks to video 1, writes a block, seeks to video 2, writes a block, seeks to video 1, writes a block, ad nauseam. Suddenly we're seeking instead of writing, and throughput does the obvious thing.

So don't do that. And the disk I/O history chart will tell you why.

For instance, I rsync from my internal HDD to External 2. I also rsync from External 1 to External 2. These are all set up in scripts. I don't run both at once, because they'll fight over External 2, and it'll slow both copies down.

Now what you did, with /MT:32, is tell it to thread 32 copies at once, which is the very thing I'm here telling you not to do. Maybe multi-threading helps on hybrid drives, RAM disks, RAIDs, or with good elevator-seeking algorithms that play nice with the particular data set. That doesn't sound like what you have here. But regardless, don't take my word for it; you should be using the graphs to experiment and find the happy number for your hardware (which I fully expect will be "1").
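If you want to run that experiment with numbers alongside the graphs, here is one hedged way to sketch it in Python. This is a stand-in for the idea, not a model of what robocopy's /MT does internally; `timed_copy` is a hypothetical helper, and it assumes the source files all have distinct names.

```python
import shutil
import time
from concurrent.futures import ThreadPoolExecutor
from pathlib import Path


def timed_copy(files: list[Path], dest_dir: Path, workers: int) -> float:
    """Copy files into dest_dir using `workers` threads; return MB/s."""
    dest_dir.mkdir(parents=True, exist_ok=True)
    total_bytes = sum(f.stat().st_size for f in files)
    start = time.perf_counter()
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # list() forces completion and re-raises any copy error.
        list(pool.map(lambda f: shutil.copyfile(f, dest_dir / f.name), files))
    elapsed = time.perf_counter() - start
    return total_bytes / elapsed / 1e6
```

You would call it once per candidate thread count (1, 2, 4, 8, 32, say), each time into a fresh destination folder on the target drive, and compare the MB/s. Use a data set much larger than RAM, or different source files for each run, because otherwise the OS cache will flatter the later runs.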

* The lowest of a) computer USB, b) device USB, c) read drive throughput, and d) write drive throughput.

You're not really asking "is it OK to stop robocopy", but "will robocopy resume after being aborted". I suggest modifying the title to that effect. – Lightness Races with Monica – 2020-01-08T17:24:33.017

At first, I read it as "is it OK to stop Robocop?" – Criggie – 2020-01-09T02:43:26.203