
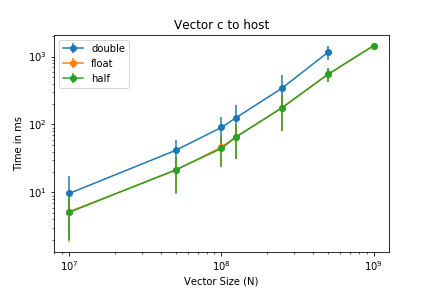

I have a simple CUDA kernel (adding two vectors of size N), pretty similar to the one in this CUDA blog post. I only changed a few things, e.g. running the measurement over many samples: I let it run, say, 1000 times and afterwards write the measurements to a .txt file. If I now plot the measurements for transferring a vector to the device, I get the following:

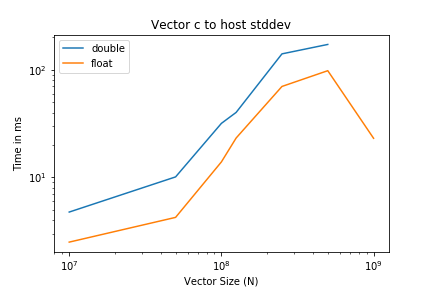
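For reference, a minimal C++ sketch of the per-size summary step (mean, sample standard deviation and the bandwidth derived from the mean time) could look like this. The names `Summary` and `summarize` are hypothetical, the timings are assumed to be in milliseconds (the unit returned by `cudaEventElapsedTime`), and the GB/s formula `bytes * 1e-6 / ms` assumes 1 GB = 10^9 bytes.

```cpp
#include <cmath>
#include <cstddef>
#include <numeric>
#include <vector>

// Summary statistics for one transfer size (hypothetical helper type).
struct Summary {
    double mean_ms;    // mean transfer time in milliseconds
    double stddev_ms;  // sample standard deviation in milliseconds
    double mean_gbps;  // bandwidth computed from the mean time, in GB/s
};

// Summarize repeated timings (in ms) of transferring `bytes` bytes.
// Assumes samples.size() >= 2 so the sample standard deviation is defined.
Summary summarize(const std::vector<double>& samples, std::size_t bytes) {
    const double n = static_cast<double>(samples.size());
    const double mean = std::accumulate(samples.begin(), samples.end(), 0.0) / n;

    double sq = 0.0;
    for (double t : samples) {
        sq += (t - mean) * (t - mean);
    }
    const double stddev = std::sqrt(sq / (n - 1.0));  // Bessel's correction

    // bytes / (ms * 1e-3 s) / 1e9 = bytes * 1e-6 / ms  -> GB/s
    const double gbps = static_cast<double>(bytes) * 1e-6 / mean;
    return {mean, stddev, gbps};
}
```

For example, `summarize({0.40, 0.42, 0.38, 0.40}, 4000000)` gives a mean of 0.4 ms, a standard deviation of about 0.0163 ms and a bandwidth of 10 GB/s.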

Now, if we look at the standard deviation (stddev), drawn as vertical error bars, it should be clear that for some reason the fluctuations in the data movement scale with the size, because the error bars look roughly constant in a log-log plot. This can be confirmed by plotting only the stddev.
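Why constant-looking error bars on a log-log plot imply size-dependent fluctuations can be made explicit. Assuming the bars span $\mu \pm \sigma$ (mean $\mu$, standard deviation $\sigma$, with $\sigma < \mu$ so the lower end is positive), their length on a logarithmic axis is:

```latex
\log_{10}(\mu + \sigma) - \log_{10}(\mu - \sigma)
  = \log_{10}\frac{\mu + \sigma}{\mu - \sigma}
  = \log_{10}\frac{1 + \sigma/\mu}{1 - \sigma/\mu}
```

This depends only on the ratio $\sigma/\mu$, so bars of equal length mean a constant relative standard deviation, i.e. $\sigma \propto \mu$: the absolute fluctuation grows with the transfer time and therefore with the vector size.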

If I take the very same program from the CUDA blog, I also get bandwidth fluctuations roughly every 10th run. Where does this come from? I observed the same behaviour on two different GPUs, a V100 and an RTX 2080. Sorry for the inconvenience regarding the images, but I don't have enough reputation points to embed them.
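To quantify the "every 10th run or so" observation, the number of slow runs can be counted directly from the recorded timings. The following C++ sketch is one possible way, assuming a run counts as slow when its time exceeds a hypothetical threshold `factor` times the median time for that size; the function name `count_slow_runs` and the threshold are illustrative choices, not part of the original program.

```cpp
#include <algorithm>
#include <cstddef>
#include <vector>

// Count runs whose transfer time exceeds `factor` times the median time.
// `factor` (e.g. 1.5) is a hypothetical threshold chosen for illustration.
std::size_t count_slow_runs(const std::vector<double>& times_ms, double factor) {
    if (times_ms.empty()) {
        return 0;
    }
    // Copy because std::nth_element reorders its input.
    std::vector<double> tmp(times_ms);
    auto mid = tmp.begin() + static_cast<std::ptrdiff_t>(tmp.size() / 2);
    std::nth_element(tmp.begin(), mid, tmp.end());
    const double median = *mid;  // upper median for even sizes

    return static_cast<std::size_t>(std::count_if(
        times_ms.begin(), times_ms.end(),
        [&](double t) { return t > factor * median; }));
}
```

For example, with nine runs at 1.0 ms and one at 2.0 ms, `count_slow_runs(times, 1.5)` returns 1, i.e. one slow run in ten. Using the median rather than the mean keeps the threshold itself from being pulled up by the slow runs.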