0

1

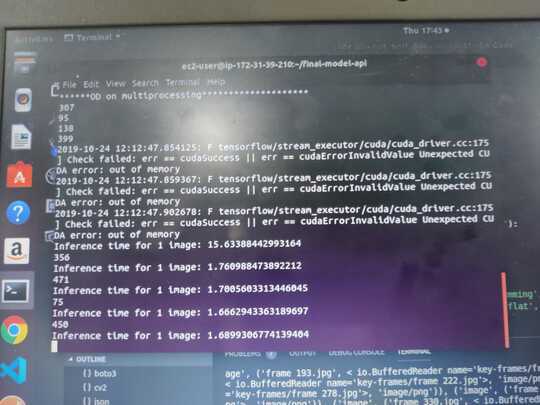

I am trying to run a complete inference pipeline which takes a number of images and first runs Object Detection (multiprocessing) and further runs a Classifier (multiprocessing). I have tested the code on my local machine (CPU with 8 cores - No GPU) and it works perfectly. But when I tried to run the same code on EC2 (Deep Learning AMI (Amazon Linux) Version 24.1 - Instance type: p2.xlarge - 4 vCPUs (2 cores - 2 threads per core) - 1 GPU core - CUDA enabled), It throws CUDA - out of memory error.

import multiprocessing as mp

pool_od=mp.Pool()

results_OD=pool_od.map_async(localize_it, TEST_IMAGE_PATHS)

# TEST_IMAGES_PATH is the list of path of all images

pool_od.close()

pool_od.join()

final_results.append(results_OD.get())

In the starting, I was specifying the number of cores in mp.Pool() but then I removed that so that the machine can decide for itself. As well as I removed the chunksize argument too. But still, I am getting the same error. Can you put some insight into it? Why this error is coming and what could be done?