
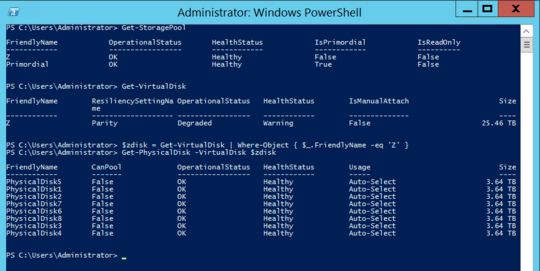

On a machine running Windows Server 2012 R2, I have a virtual disk consisting of 8 physical disks in a parity configuration. One drive died, so I replaced the dead drive and followed these instructions for repairing the virtual disk: Replacing a Failed Disk in Windows Server 2012 R2 Storage Spaces with PowerShell.

Everything seemed to be working great, with one discrepancy: despite completing the whole repair process with no errors, the virtual disk is still marked as "Degraded", even though it once again has 8 healthy drives. What gives?

The file system on the virtual drive (which is backed up) is still accessible, but I'd like to know whether I once again have resiliency against a drive failure. I can't find any information about why the virtual disk is still degraded, how to determine whether it really is degraded, or how to fix it.
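A minimal sketch of a first check, assuming the Storage module cmdlets `Get-VirtualDisk` and `Get-StorageJob` (the latter assumed to be available on Windows Server 2012 R2) and the virtual disk name `Z` from the output below. The function name `Get-VirtualDiskRepairStatus` is hypothetical. It reports the virtual disk's status next to any storage jobs, since an unfinished repair job is one possible reason for a lingering Degraded status.

```powershell
# Hypothetical helper: report a virtual disk's status together with the
# storage jobs (for example a repair/regeneration) that Storage Spaces knows about.
function Get-VirtualDiskRepairStatus {
    param(
        [Parameter(Mandatory)]
        [string]$FriendlyName
    )

    $vdisk = Get-VirtualDisk -FriendlyName $FriendlyName

    # Status values are passed through unchanged rather than compared,
    # so this does not depend on how the Storage module types them.
    [pscustomobject]@{
        FriendlyName      = $vdisk.FriendlyName
        OperationalStatus = $vdisk.OperationalStatus
        HealthStatus      = $vdisk.HealthStatus
        # Assumes Get-StorageJob is available (Storage module, 2012 R2 assumed).
        StorageJobs       = @(Get-StorageJob)
    }
}
```

Usage might look like `Get-VirtualDiskRepairStatus -FriendlyName 'Z'`. If no job is listed and the disk is still degraded, `Repair-VirtualDisk -FriendlyName 'Z'` asks Storage Spaces to start a repair explicitly.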

Here is some PowerShell output that I hope will be useful. Thank you!

And again as text:

```
PS C:\Users\Administrator> Get-StoragePool

FriendlyName OperationalStatus HealthStatus IsPrimordial IsReadOnly
------------ ----------------- ------------ ------------ ----------
Z            OK                Healthy      False        False
Primordial   OK                Healthy      True         False

PS C:\Users\Administrator> Get-VirtualDisk

FriendlyName ResiliencySettingName OperationalStatus HealthStatus IsManualAttach Size
------------ --------------------- ----------------- ------------ -------------- --------
Z            Parity                Degraded          Warning      False          25.46 TB

PS C:\Users\Administrator> $zdisk = Get-VirtualDisk | Where-Object { $_.FriendlyName -eq 'Z' }
PS C:\Users\Administrator> Get-PhysicalDisk -VirtualDisk $zdisk

FriendlyName  CanPool OperationalStatus HealthStatus Usage       Size
------------  ------- ----------------- ------------ -----       -------
PhysicalDisk5 False   OK                Healthy      Auto-Select 3.64 TB
PhysicalDisk1 False   OK                Healthy      Auto-Select 3.64 TB
PhysicalDisk2 False   OK                Healthy      Auto-Select 3.64 TB
PhysicalDisk7 False   OK                Healthy      Auto-Select 3.64 TB
PhysicalDisk6 False   OK                Healthy      Auto-Select 3.64 TB
PhysicalDisk8 False   OK                Healthy      Auto-Select 3.64 TB
PhysicalDisk3 False   OK                Healthy      Auto-Select 3.64 TB
PhysicalDisk4 False   OK                Healthy      Auto-Select 3.64 TB

PS C:\Users\Administrator>
```
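The `Get-PhysicalDisk -VirtualDisk $zdisk` output above only shows how Storage Spaces sees each disk; it does not include per-disk error counters, so every disk can read as Healthy while one of them has unreadable sectors. A minimal sketch that pairs each disk with its reliability counters, assuming `Get-StorageReliabilityCounter` (the cmdlet suggested in the comments) accepts physical disks from the pipeline. The function name `Get-DiskErrorSummary` is hypothetical, and counter values may be empty when a drive or controller does not report them.

```powershell
# Hypothetical helper: list each physical disk behind a virtual disk with its
# reliability counters, which Get-PhysicalDisk does not show on its own.
function Get-DiskErrorSummary {
    param(
        [Parameter(Mandatory)]
        [string]$VirtualDiskName
    )

    $vdisk = Get-VirtualDisk -FriendlyName $VirtualDiskName
    $disks = Get-PhysicalDisk -VirtualDisk $vdisk

    foreach ($disk in $disks) {
        # Counters can be empty if the drive or controller does not report them.
        $counter = $disk | Get-StorageReliabilityCounter
        [pscustomobject]@{
            FriendlyName           = $disk.FriendlyName
            ReadErrorsTotal        = $counter.ReadErrorsTotal
            ReadErrorsUncorrected  = $counter.ReadErrorsUncorrected
            WriteErrorsUncorrected = $counter.WriteErrorsUncorrected
        }
    }
}
```

Something like `Get-DiskErrorSummary -VirtualDiskName 'Z' | Format-Table` would then make a disk with nonzero uncorrected errors stand out from the rest.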

In case it matters, PhysicalDisk4 is the new disk that I added to replace the dead one.

Maybe this would be a better question for Server Fault? – Brionius – 2019-09-15T17:57:12.173

The explanation might be that another disk is truly faulty and its status has not been updated. I suggest first studying the event logs for warnings and errors. This PowerShell command, run as Administrator, might help:

`Get-WinEvent -ProviderName *Disk*,*Ntfs*,*Spaces*,*Chk*,*Defrag* | ?{$_.Level -eq 2 -or $_.Level -eq 3}`

Look also at the SMART data of the individual disks, via a utility or perhaps via `Get-PhysicalDisk | Get-StorageReliabilityCounter | Format-List`. – harrymc – 2019-09-17T15:40:22.063

@harrymc Wow, that was really helpful. One of the other disks in the array has a bad sector; I guess that's what is causing the continuing degraded message. Thanks! Make that comment an answer, and I'd be willing to award the bounty. – Brionius – 2019-09-18T13:04:35.500

Done as requested. – harrymc – 2019-09-18T13:13:57.340
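The event-log query from the comments can be wrapped in a small function that keeps only the most useful columns. A minimal sketch: the name `Get-DiskProblemEvent` is hypothetical, the provider wildcards are the ones from the comment, and `?{...}` in the original command is simply the alias for `Where-Object`. Levels 2 and 3 correspond to Error and Warning.

```powershell
# Hypothetical wrapper around the Get-WinEvent query from the comments.
# Event levels: 2 = Error, 3 = Warning.
function Get-DiskProblemEvent {
    param(
        # Same provider wildcards as in the comment; adjust as needed.
        [string[]]$ProviderName = @('*Disk*', '*Ntfs*', '*Spaces*', '*Chk*', '*Defrag*')
    )

    # Suppress non-terminating errors (for example when no matching events exist).
    Get-WinEvent -ProviderName $ProviderName -ErrorAction SilentlyContinue |
        Where-Object { $_.Level -eq 2 -or $_.Level -eq 3 } |
        Select-Object TimeCreated, ProviderName, Id, LevelDisplayName, Message
}
```

Run as Administrator, `Get-DiskProblemEvent | Sort-Object TimeCreated -Descending | Format-List` would show the matching events with the most recent first.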