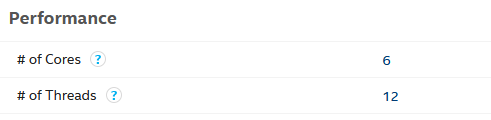

I am running a TensorFlow/Keras machine learning Python script on a computer that has 12 CPUs.

When I execute `taskset -c cpu_list main.py` in my Ubuntu terminal with different CPU lists, I find that the optimal number of CPUs for the script is 5.

The difference is fairly significant: around a 200% decrease in runtime when changing from 12 CPUs to 5.

Moreover, running on 1 CPU gives a runtime similar to running on all 12.

I am confused as to why this is the case. Why doesn't using all 12 give the fastest runtime, given that more CPUs are available for computation?
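For reference, the kind of comparison described above could also be run from inside Python rather than through `taskset`. This is only a minimal sketch, assuming Linux (where `os.sched_setaffinity` is available); `time_with_cpus` and `dummy_workload` are hypothetical names, and the dummy workload is a single-threaded stand-in for the real training step, so it will not reproduce the scaling behaviour on its own.

```python
import os
import time


def time_with_cpus(n_cpus, workload):
    """Run workload() pinned to n_cpus CPUs and return the elapsed seconds."""
    original = os.sched_getaffinity(0)        # CPUs this process may currently use
    chosen = set(sorted(original)[:n_cpus])   # first n_cpus of the allowed set
    os.sched_setaffinity(0, chosen)
    try:
        start = time.perf_counter()
        workload()
        return time.perf_counter() - start
    finally:
        os.sched_setaffinity(0, original)     # always restore the original mask


def dummy_workload():
    # Hypothetical placeholder for the real work done in main.py.
    sum(i * i for i in range(200_000))


if __name__ == "__main__":
    available = len(os.sched_getaffinity(0))
    for n in range(1, available + 1):
        elapsed = time_with_cpus(n, dummy_workload)
        print(f"{n} CPU(s): {elapsed:.3f} s")
```

Threads started before the affinity mask changes keep their old mask, so in practice the pinning would need to happen before TensorFlow creates its thread pools, which is what launching the whole script under `taskset` achieves.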

Could be hitting a thermal limit and throttling. What CPU? – Mokubai – 2019-08-02T11:19:53.080

@Mokubai 12 of Intel Xeon CPU E5-2620 at 2.4GHz – Matthew – 2019-08-02T11:45:35.690