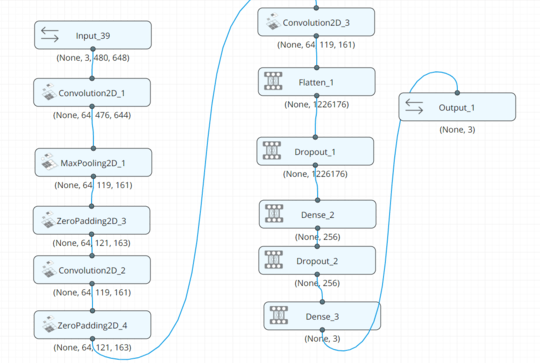

I have a simple neural net running on Keras, with Deep Learning Studio as the interface. The problem I'm seeing is that about 80% of the time, the Python script uses only one core (and maxes it out). You can see this in the screenshot.

All settings are at their defaults, and Keras is not using my GPU. I suspect that some operations can only run on a single core. My network is fairly typical, if relatively simple.
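
To pin down what "default" means here, one thing that could be checked is whether any thread-limit environment variables are set. This is a minimal sketch: the variables listed are the ones OpenMP, MKL, and OpenBLAS builds commonly read, but whether the installed Keras backend honors each of them is an assumption, and `thread_settings` is only an illustrative helper name.

```python
import os

# Environment variables commonly read by OpenMP / MKL / OpenBLAS builds.
# Whether the installed backend honors each one is an assumption.
THREAD_VARS = ("OMP_NUM_THREADS", "MKL_NUM_THREADS", "OPENBLAS_NUM_THREADS")


def thread_settings(environ=None):
    """Return the logical core count and any thread-limit variables that are set."""
    environ = os.environ if environ is None else environ
    return {
        "logical_cores": os.cpu_count(),
        "limits": {name: environ[name] for name in THREAD_VARS if name in environ},
    }


if __name__ == "__main__":
    print(thread_settings())
```

An empty `limits` dictionary would mean none of these variables is capping the thread count, so the single-core behavior would have to come from somewhere else.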

The Keras translation for this is as follows:

    import keras
    from keras.layers.convolutional import Convolution2D
    from keras.layers.pooling import MaxPooling2D
    from keras.layers.convolutional import ZeroPadding2D
    from keras.layers.core import Flatten
    from keras.layers.core import Dropout
    from keras.layers.core import Dense
    from keras.layers import Input
    from keras.models import Model
    from keras.regularizers import *

    def get_model():
        aliases = {}
        Input_39 = Input(shape=(3, 480, 648), name='Input_39')
        Convolution2D_1 = Convolution2D(name='Convolution2D_1', nb_col=5, nb_row=5, nb_filter=64)(Input_39)
        MaxPooling2D_1 = MaxPooling2D(name='MaxPooling2D_1', pool_size=(4, 4))(Convolution2D_1)
        ZeroPadding2D_3 = ZeroPadding2D(name='ZeroPadding2D_3')(MaxPooling2D_1)
        Convolution2D_2 = Convolution2D(name='Convolution2D_2', nb_col=3, nb_row=3, nb_filter=64)(ZeroPadding2D_3)
        ZeroPadding2D_4 = ZeroPadding2D(name='ZeroPadding2D_4')(Convolution2D_2)
        Convolution2D_3 = Convolution2D(name='Convolution2D_3', nb_col=3, nb_row=3, nb_filter=64)(ZeroPadding2D_4)
        Flatten_1 = Flatten(name='Flatten_1')(Convolution2D_3)
        Dropout_1 = Dropout(name='Dropout_1', p=.25)(Flatten_1)
        Dense_2 = Dense(name='Dense_2', output_dim=256, activation='relu')(Dropout_1)
        Dropout_2 = Dropout(name='Dropout_2', p=0.25)(Dense_2)
        Dense_3 = Dense(name='Dense_3', output_dim=3)(Dropout_2)
        model = Model([Input_39], [Dense_3])
        return aliases, model

    from keras.optimizers import *

    def get_optimizer():
        return Adadelta()

    def is_custom_loss_function():
        return False

    def get_loss_function():
        return 'categorical_crossentropy'

    def get_batch_size():
        return 256

    def get_num_epoch():
        return 12

    def get_data_config():
        return '{"kfold": 1, "datasetLoadOption": "full", "shuffle": false, "numPorts": 1, "mapping": {"Category": {"port": "OutputPort0", "options": {}, "type": "Categorical", "shape": ""}, "Image": {"port": "InputPort0", "options": {"Width": "240", "shear_range": 0, "width_shift_range": 0, "Height": "120", "Normalization": false, "vertical_flip": true, "horizontal_flip": true, "pretrained": "None", "rotation_range": "180", "Resize": false, "Scaling": 1, "height_shift_range": 0, "Augmentation": true}, "type": "Image", "shape": ""}}, "samples": {"validation": 97, "split": 3, "test": 97, "training": 457}, "dataset": {"name": "Training_greyscale", "type": "private", "samples": 653}}'
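
To put the configuration in perspective, the batch size, epoch count, and training-sample count above imply very few weight updates per epoch. The sketch below works that out; the config string is abbreviated to the one field used, and counting a final partial batch is an assumption about how the batches are formed.

```python
import json
import math

# Values copied from the generated script; the config is abbreviated
# to the single field used here.
BATCH_SIZE = 256
NUM_EPOCHS = 12
DATA_CONFIG = '{"samples": {"validation": 97, "split": 3, "test": 97, "training": 457}}'


def batches_per_epoch(n_training, batch_size):
    """Number of batches per epoch, counting a final partial batch (assumed)."""
    return math.ceil(n_training / batch_size)


n_training = json.loads(DATA_CONFIG)["samples"]["training"]
per_epoch = batches_per_epoch(n_training, BATCH_SIZE)
total_updates = per_epoch * NUM_EPOCHS

print(per_epoch, total_updates)  # 2 24
```

So with 457 training samples and a batch size of 256, each epoch is only two batches, and the full run is 24 updates.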

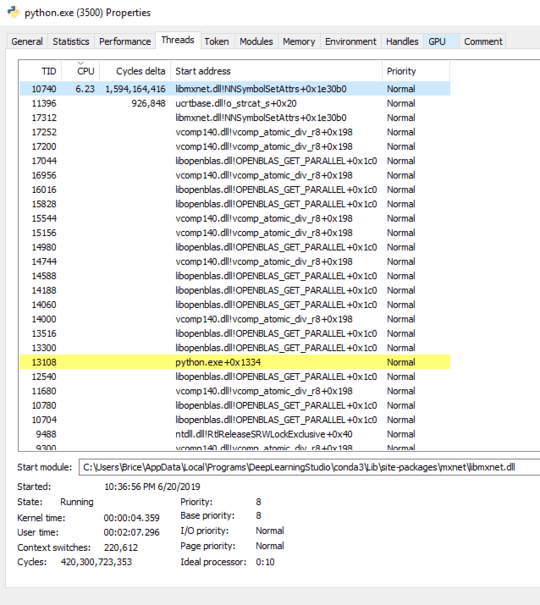

Given this, I analyzed the threads and found the one using most of the compute time. It seems to be spending that time in a function that only sets attributes. Here is the analysis:
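
For anyone who wants to reproduce this kind of per-thread analysis without an external tool, a rough sampling approach is possible with the standard library alone. This is a sketch, not the tool used for the analysis above: `sample_threads` and `busy` are hypothetical names, `busy` merely stands in for the training thread, and the sampler only sees Python frames, so time spent inside native code is attributed to the Python function that called it.

```python
import collections
import sys
import threading
import time


def sample_threads(duration=0.5, interval=0.01):
    """Count, per thread name, which Python function is running at each sample."""
    counts = collections.defaultdict(collections.Counter)
    me = threading.get_ident()
    deadline = time.monotonic() + duration
    while time.monotonic() < deadline:
        names = {t.ident: t.name for t in threading.enumerate()}
        for ident, frame in sys._current_frames().items():
            if ident == me:
                continue  # skip the sampling thread itself
            counts[names.get(ident, str(ident))][frame.f_code.co_name] += 1
        time.sleep(interval)
    return counts


def busy(stop):
    """Hypothetical stand-in for the training thread."""
    while not stop.is_set():
        x = 0
        for i in range(1000):
            x += i * i


if __name__ == "__main__":
    stop = threading.Event()
    worker = threading.Thread(target=busy, args=(stop,), name="worker")
    worker.start()
    try:
        for name, counter in sample_threads().items():
            print(name, counter.most_common(3))
    finally:
        stop.set()
        worker.join()
```

The function that shows up most often for a thread is where that thread spends most of its sampled time.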

Things I've ruled out:

- R/W stalls. The data is on an NVMe disk and only takes up 90 MB, so reads and writes should not be a concern.

- Anything pertaining to memory. The model has more than enough memory to operate as it stands.

With this setup, training averages about 5 samples per second, and the program makes very poor use of the available cores. What can I do to speed this up, aside from using additional hardware?
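
To make "core use ratio" and the throughput figure concrete, here is a small sketch of how I would quantify them. The helper names are illustrative, and the 8-core machine in the example is hypothetical. The epoch estimate only uses the 457 training samples and the roughly 5 samples per second quoted above, and ignores validation passes.

```python
import os
import time


def core_utilization(cpu_seconds, wall_seconds, n_cores):
    """Fraction of the available core time that the process actually used."""
    return cpu_seconds / (wall_seconds * n_cores)


def epoch_seconds(n_samples, samples_per_second):
    """Time for one pass over the training set at a given throughput."""
    return n_samples / samples_per_second


def measure(fn, n_cores=None):
    """Run fn() and return (wall seconds, core utilization) for this process."""
    n_cores = n_cores or os.cpu_count() or 1
    wall0, cpu0 = time.perf_counter(), time.process_time()
    fn()
    wall = time.perf_counter() - wall0
    cpu = time.process_time() - cpu0  # CPU time summed over all threads
    return wall, core_utilization(cpu, wall, n_cores)


if __name__ == "__main__":
    # 457 training samples at ~5 samples/s, 12 epochs (figures from this post).
    per_epoch = epoch_seconds(457, 5)
    print(round(per_epoch, 1), round(12 * per_epoch / 60, 1))  # 91.4 18.3
    # Hypothetical machine: one saturated core out of 8.
    print(core_utilization(cpu_seconds=10.0, wall_seconds=10.0, n_cores=8))  # 0.125
```

At this rate one epoch takes about 91 seconds and the 12-epoch run about 18 minutes, and a single saturated core on a multi-core machine leaves most of the available CPU time unused.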

If additional details are needed, please do not downvote; I'm happy to provide them in a timely manner.