It depends entirely on whether you're doing sequential or random I/O, and how often you want / need to flush to disk...

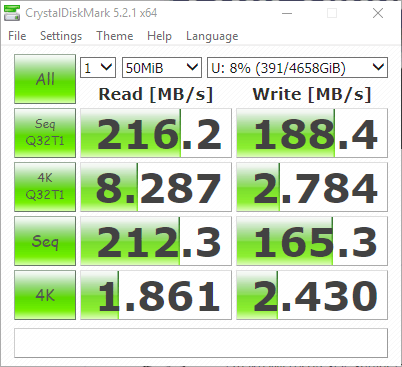

Both 20 KB/s and 100 KB/s are negligible with today's hardware. Given the CrystalDiskMark screenshot and your concern, I suspect you're dealing with a spinning disk... why not use an SSD?

max simultaneous writes before the drive throttles and becomes slower

It's not a matter of the drive throttling; the physical movement of the head simply takes time to complete. With random I/O this gets worse as the size of each written block shrinks and the seek time between writes increases.

let's assume the OS, Filesystem or the Application is not a part of the bottleneck

Without knowing the state of the filesystem in terms of fragmentation and free space, you cannot assume this, and you certainly can't assume it over the life of a product or installation.

If you're suffering from performance issues, then you'll want to make use of buffered I/O, i.e. writes to a file are first collected in a buffer, which is then written to disk as one larger block.
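As a minimal sketch of what buffered I/O looks like in practice (Python is used purely for illustration; the `write_records` helper, the 64 KB buffer size and the file name are assumptions, not anything from the question):

```python
import os
import tempfile

def write_records(path, records, buffer_size=64 * 1024):
    """Write many small records through a user-space buffer of buffer_size bytes."""
    # In binary mode, buffering > 1 makes open() return a buffered writer:
    # small write() calls are collected and handed to the OS in larger chunks.
    with open(path, "wb", buffering=buffer_size) as f:
        for record in records:
            f.write(record)
    # Leaving the with-block flushes whatever is still buffered and closes the file.

path = os.path.join(tempfile.mkdtemp(), "log.bin")  # throwaway location
records = [b"x" * 100 for _ in range(1000)]         # 1,000 small 100-byte writes
write_records(path, records)
written = os.path.getsize(path)
print(written)
```

The application still issues 1,000 small writes, but the storage sees far fewer, larger ones.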

Writing 100 KB/s for a period of 10 seconds can be presented to the storage as any of the following (or wider):

- a block of 1 KB every 10 ms

- a block of 10 KB every 100 ms

- a block of 100 KB every 1 second

- a block of 1,000 KB every 10 seconds

Are we discussing the regular (red), or infrequent (green)?

Each of the colors will "write" the same amount of data over the same timeframe.
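The arithmetic behind those options can be checked with a short sketch (Python for illustration; the constant names are my own):

```python
RATE_KB_PER_S = 100  # sustained write rate from the question
DURATION_S = 10      # observation window

schedule = []
for block_kb in (1, 10, 100, 1000):
    interval_ms = block_kb * 1000 // RATE_KB_PER_S      # time between writes
    writes = RATE_KB_PER_S * DURATION_S // block_kb     # writes in the window
    schedule.append((block_kb, interval_ms, writes))
    print(f"{block_kb:>5} KB every {interval_ms:>6} ms -> {writes:>4} writes, "
          f"{block_kb * writes} KB total")
```

Every row totals 1,000 KB; only the number of individual writes the disk has to service changes, from 1,000 down to 1.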

Writing larger blocks at once will help with throughput and filesystem fragmentation, though there is a trade-off to consider.

- Writing larger blocks less frequently will improve throughput, but requires more RAM, and a larger portion of data will be lost in the event of power loss or a crash.

- Writing smaller blocks more frequently will degrade throughput, but requires less RAM, and less data is held in volatile memory at any one time.

The filesystem or OS may impose rules about how frequently the file cache is written to disk, so you may need to manage this caching within the application... Start with using buffered I/O, and if that doesn't cut it, review the situation.
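If you do end up managing the caching yourself, one possible shape is a writer that flushes when either a size or an age threshold is reached. This is only a sketch: `BatchedWriter`, its thresholds and the file name are hypothetical, and the age check only runs when `write()` is called.

```python
import os
import tempfile
import time

class BatchedWriter:
    """Hypothetical helper: hold writes in RAM, push them to disk in larger blocks."""

    def __init__(self, path, max_bytes=100 * 1024, max_age_s=1.0):
        self._file = open(path, "ab")
        self._max_bytes = max_bytes    # upper bound on data held in RAM
        self._max_age_s = max_age_s    # upper bound on how stale unflushed data may get
        self._pending = bytearray()
        self._last_flush = time.monotonic()
        self.flush_count = 0

    def write(self, data):
        self._pending += data
        too_big = len(self._pending) >= self._max_bytes
        too_old = time.monotonic() - self._last_flush >= self._max_age_s
        if too_big or too_old:
            self.flush()

    def flush(self):
        if self._pending:
            self._file.write(self._pending)
            self._file.flush()               # Python's buffer -> OS cache
            os.fsync(self._file.fileno())    # ask the OS to push its cache to the device
            self._pending.clear()
            self.flush_count += 1
        self._last_flush = time.monotonic()

    def close(self):
        self.flush()
        self._file.close()

path = os.path.join(tempfile.mkdtemp(), "upload.part")  # throwaway location
writer = BatchedWriter(path, max_bytes=10 * 1024, max_age_s=60.0)
for _ in range(100):
    writer.write(b"x" * 1024)  # 100 application-level writes of 1 KB each
writer.close()
flushes = writer.flush_count
size = os.path.getsize(path)
print(flushes, size)
```

Raising `max_bytes` or `max_age_s` moves you towards the first trade-off above (fewer, larger writes and more data at risk); lowering them moves you towards the second.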

let's pretend 1,000 users are uploading 1GB file at 20KB/sec

You're comfortable with users uploading a 1 GB file over ~14.5 hours, with all of the issues that failures incur (e.g. re-uploading from the beginning)?
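For reference, the ~14.5 hour figure works out as follows, assuming binary units (1 GB = 1024³ bytes, 1 KB = 1024 bytes); with decimal units it comes to roughly 13.9 hours instead. The variable names are mine:

```python
FILE_BYTES = 1024 ** 3        # 1 GB per upload (binary units assumed)
RATE_BYTES_PER_S = 20 * 1024  # 20 KB/s per user
USERS = 1000

seconds = FILE_BYTES / RATE_BYTES_PER_S
hours = seconds / 3600
aggregate_mb_per_s = USERS * RATE_BYTES_PER_S / 1024 ** 2  # combined write load

print(f"{seconds:.1f} s per upload = {hours:.2f} hours")
print(f"{aggregate_mb_per_s:.2f} MB/s across {USERS} users")
```

The combined load from all 1,000 users is a little under 20 MB/s, so the access pattern matters far more than the raw volume.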

Why not throw in an SSD and bypass the problem? SSDs don't suffer significantly from differences between sequential and random reads, and are much faster all round. (Meaning the answer becomes much closer to speed/users) – davidgo – 2019-01-30T18:30:11.607