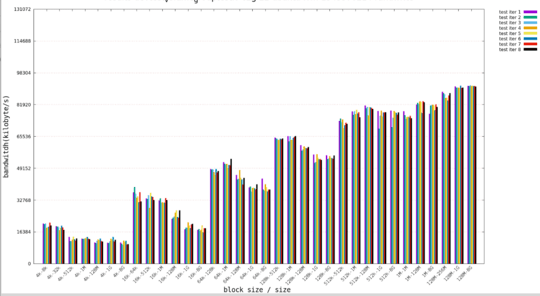

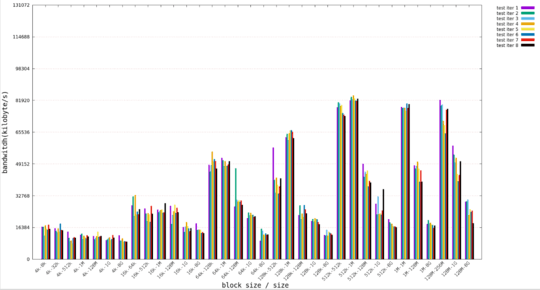

I'm measuring random write speeds for an eMMC device with fio.

I'm testing multiple block sizes with multiple io sizes.

After multiple iterations of all test cases on the raw eMMC device, I create a logical volume that uses the entire eMMC device and run the same tests on that volume (such as /dev/new_vol_group/new_logical_volume).

I was expecting a slight performance overhead with LVM but the strangest thing happened.

For small I/O sizes, random write speeds are quite similar between raw and LVM. When the I/O size is increased (especially when it is double the amount of RAM), random write speed on the raw device drops considerably, but that's not the case with LVM: there is no decrease in random write speed with LVM when the file size is increased.

So LVM is much faster than the raw device for random writes, especially for large file sizes. This is only true for random writes. I didn't see this behaviour in sequential read/write or random read tests.

This is my fio file

ioengine=libaio

direct=1

buffered=0

iodepth=1

numjobs=1

ramp_time=5

startdelay=5

runtime=90

time_based

refill_buffers

randrepeat=1
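The job file above has no `rw`, `bs`, `size` or `filename`; those are passed on the command line. Below is a minimal sketch of how the raw-versus-LVM test matrix could be generated. The block sizes, I/O sizes and the raw device path `/dev/mmcblk0` are hypothetical placeholders, not the values from the actual runs. `buffered=0` is left out because it is the inverse of `direct=1`.

```python
import itertools
import shlex

# Hypothetical values: the real block sizes, I/O sizes and raw device path
# are not stated in the post.
BLOCK_SIZES = ["4k", "64k", "1m"]
IO_SIZES = ["512m", "4g", "16g"]
TARGETS = {
    "raw": "/dev/mmcblk0",  # assumed raw eMMC device node
    "lvm": "/dev/new_vol_group/new_logical_volume",
}

# Options copied from the job file in the post (buffered=0 omitted as redundant).
COMMON_OPTIONS = [
    "--ioengine=libaio",
    "--direct=1",
    "--iodepth=1",
    "--numjobs=1",
    "--ramp_time=5",
    "--startdelay=5",
    "--runtime=90",
    "--time_based",
    "--refill_buffers",
    "--randrepeat=1",
]


def build_fio_commands():
    """Return {(target, bs, size): argv} for every raw/LVM test combination."""
    commands = {}
    for (label, path), bs, size in itertools.product(
        TARGETS.items(), BLOCK_SIZES, IO_SIZES
    ):
        commands[(label, bs, size)] = [
            "fio",
            f"--name=randwrite-{label}-{bs}-{size}",
            "--rw=randwrite",
            f"--bs={bs}",
            f"--size={size}",
            f"--filename={path}",
        ] + COMMON_OPTIONS
    return commands


if __name__ == "__main__":
    # Only print the commands; running them overwrites data on the targets.
    for argv in build_fio_commands().values():
        print(" ".join(shlex.quote(arg) for arg in argv))
```

Generating both sets of commands from one list keeps the raw and LVM runs identical apart from `--filename`, so any difference in the results cannot come from a mismatched option.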

The only reason I could think of was caching, but caching can't affect the tests this much when the I/O size is double the amount of RAM on the host.

Note: I use fio-3.1 for benchmarking, with Ubuntu 18.04 LTS as the host.

I detected this behaviour on multiple devices.

Edit: Added graphs representing the speed differences.

If you're still caching half the data, its speed could be 2-6GB/s; averaging that with the disk's real speed would still increase the result a lot – Xen2050 – 2018-12-26T22:09:18.707

Then same caching should apply to non-lvm partitions, right? – Can – 2018-12-30T16:16:02.147

I think it depends on the testing method: just using files would probably get cached anywhere, but devices probably don't. A decent testing program probably avoids caching anyway, but I'm not sure of all the details of LVM. What were the actual speed results with/without LVM anyway? Did repeated testing give the same differences, or is it maybe just "random noise"? – Xen2050 – 2018-12-30T20:54:35.323

Hmm, are you using fio against a filesystem or are you using fio against the raw device mapper devices directly? Additionally, your fio job file looks incomplete - are you specifying things on the command line too? Finally, how does the fio output differ between the runs (e.g. I can't see latency stats from raw partition vs LVM in your current question)? – Anon – 2019-01-01T12:20:44.887

Hi @Anon,

I added histogram graphs for speed comparisons between the raw device and the LVM mapper device. You're right, I'm specifying things on the command line too; I didn't think they mattered. – Can – 2019-01-03T05:45:56.510

Ah, I didn't realise you were doing a spread of runs. The only things that initially come to mind are: perhaps alignment is better with LVM (if you put an aligned partition down on the raw disk do things get better?), or there's more optimal I/O submission going on (check that the I/O scheduler being used is the same). On the runs where the difference between physical and LVM is largest, what does the raw I/O look like on the physical disk itself from an iostat perspective? PS: direct and buffered are antonyms of each other - you don't need to specify both :-) – Anon – 2019-01-03T07:03:23.737
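The I/O scheduler check suggested in the last comment could be sketched roughly as follows. The sketch reads `/sys/block/<name>/queue/scheduler` for each device and picks out the bracketed (active) entry. The kernel names `mmcblk0` and `dm-0` are assumptions; the actual names on the test host may differ.

```python
from pathlib import Path


def active_scheduler(scheduler_line):
    """Return the active scheduler from a sysfs line such as 'noop deadline [cfq]'."""
    for token in scheduler_line.split():
        if token.startswith("[") and token.endswith("]"):
            return token[1:-1]
    # Some devices report a single bare word (for example 'none').
    return scheduler_line.strip() or None


def read_scheduler(block_name, sysfs_root="/sys/block"):
    """Read and parse the scheduler file for one block device."""
    path = Path(sysfs_root) / block_name / "queue" / "scheduler"
    return active_scheduler(path.read_text())


if __name__ == "__main__":
    # Assumed kernel names for the raw eMMC device and the LVM mapper device.
    for name in ("mmcblk0", "dm-0"):
        try:
            print(name, read_scheduler(name))
        except OSError as exc:
            print(name, "unavailable:", exc)
```

If the two devices report different schedulers, that would be one variable to equalise before comparing the random write numbers again.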