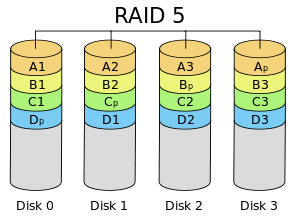

Two days ago, one of the Barracudas in my Synology (3 disks as RAID-5) triggered some "bad sector" warnings. No data was lost. There weren't many (62 over 24 hours, then no more), and according to the SMART info the drive is "just fine". But still, it is enough for me to replace the disk. Your mileage may vary, but for me, any non-zero number of bad sectors is not acceptable.

So... thanks to a large online bookstore which also sells hard drives, I got same-size replacement disks (Ironwolf) literally overnight.

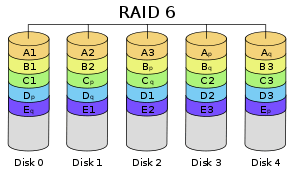

First I plugged one into the 4th slot and changed the array type to RAID-6 while the old disk was still alive and kicking, to add some extra redundancy. Better to be on the safe side, just in case. Once that's done, the next step will be replacing the old disks one by one.

So... it's currently resyncing.

I've changed the setting from "less impact" to "resync faster", which apparently meddles with IO priorities. The impact is very noticeable: accessing a share is very, very slow now (but of course still works). That's fine; after all, we want the resync to finish soon, before something more drastic happens. Still, disk usage is only 60-56% on each disk in the resource monitor. Well, that's not so bad, I guess.

The new disk is able to sustain sequential writes of 150 MB/s, and the old disks shouldn't have any trouble delivering that while reading sequentially (even more so as there are three of them, which cuts down the bandwidth needed from each). 60% of that is something around 90 MB/s. They're 4TB disks.

Let's be pessimistic and assume we only get 50 MB/s throughput in total. That is 4*(1024*1024)/50 seconds to perform the resync, or a little more than 23 hours.
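For reference, the back-of-the-envelope estimate above can be written out as a small sketch. The function name `resync_hours` is made up for illustration, and the calculation assumes a plain sequential pass over one 4TB disk at a steady rate, which may well not be what the NAS actually does:

```python
def resync_hours(capacity_tb: float, throughput_mb_s: float) -> float:
    """Hours needed to stream one full disk at a steady throughput."""
    # Same binary conversion as in the estimate: 1 TB = 1024 * 1024 MB.
    capacity_mb = capacity_tb * 1024 * 1024
    return capacity_mb / throughput_mb_s / 3600


pessimistic = resync_hours(4, 50)  # the pessimistic 50 MB/s guess
expected = resync_hours(4, 90)     # roughly 60% of 150 MB/s

print(f"{pessimistic:.1f} h at 50 MB/s, {expected:.1f} h at 90 MB/s")
```

At 90 MB/s the same pass would take roughly 13 hours, so 23 hours really is the pessimistic end of this estimate.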

I've left the thing alone to do its work overnight, and it has now been running for 26 hours. Looking at the status window, it shows 11% complete.

Not like there's anything I could do about it anyway, but seriously... what's wrong? 11% after 26 hours means it's going to take about ten days in total. What the?
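To put a number on it, here is a rough extrapolation sketch. It assumes progress is linear and that the percentage refers to one 4TB pass per disk; both are guesses on my part, and `projected_total_hours` is just an illustrative helper:

```python
def projected_total_hours(elapsed_hours: float, fraction_done: float) -> float:
    """Linear extrapolation of total run time from the progress so far."""
    if not 0 < fraction_done <= 1:
        raise ValueError("fraction_done must be in (0, 1]")
    return elapsed_hours / fraction_done


elapsed = 26.0
done = 0.11

total = projected_total_hours(elapsed, done)
remaining = total - elapsed

# Effective rate, ASSUMING 11% means 11% of a 4TB disk has been processed.
implied_mb_s = done * 4 * 1024 * 1024 / (elapsed * 3600)

print(f"total {total / 24:.1f} days, remaining {remaining / 24:.1f} days")
print(f"implied rate {implied_mb_s:.1f} MB/s")
```

Under those assumptions the effective rate comes out at around 5 MB/s, about a tenth of even the pessimistic 50 MB/s figure.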

This is beyond my comprehension. Is there any technical reason why it would take that long?

You really shouldn't have changed it to RAID6, as now it'll be resyncing all the disks. Instead you should have added the extra disk as a hot spare, then failed the bad disk, causing the hot spare to take over and allowing the whole thing to sync nicely. – djsmiley2k TMW – 2018-09-19T15:02:07.397

And in fact the reason for the slowness may be that you're trying to resync while re-arranging the entire drive. If you've got an offline backup of this (you should, RAID is not a backup), I'd nuke it and restore from backup. – djsmiley2k TMW – 2018-09-19T15:03:17.330

@djsmiley2k: Sure, but restoring from backup takes forever, too. Plus, I'd have to set up all the shares again, etc. Because, well, the only way to do this without a resync happening anyway is by low-level formatting the disks, which of course also kills the whole setup. The plan (and the obvious, straightforward way) was to just plug in a new disk, wait a day, preferably only a few hours, and be done. That's why we have the "R" in RAID and why such a thing as hot-plug was invented. We have these so that things just work and keep running. – Damon – 2018-09-19T17:16:03.480

"If you've got a offline backup of this (you should do, raid is not a backup)" - Couldn't agree more with @djsmiley2k – That Brazilian Guy – 2018-09-19T17:23:35.730

Restoring from backup is not a solution because copying the data will take just as long (probably much longer). If you just toss in 3 new disks (or 4 of them, doesn't matter) you need to reinstall the firmware (which is mirrored on every disk in the array), then restore all settings, and then copy over all data. All of that happens while the system is performing a background resync (no way to avoid it), so it is excruciatingly slow. To top it off, during those, whatever, 2 days, the thing is "unusable" because the data is incomplete. Letting the RAID subsystem do the lifting "just works". – Damon – 2018-09-19T18:40:34.197

The question is not about finding an alternative, but about why the resync is so darn slow when it doesn't seem like it should be (strictly sequential operation). – Damon – 2018-09-19T18:42:29.090

I think it's a combination of a resync and building a new array format. However, I'm not confident enough to answer :O – djsmiley2k TMW – 2018-09-19T19:59:43.470

If you'd added the drive as a hot spare and then failed the bad disk, it would strictly be a resync and would likely be much faster. – djsmiley2k TMW – 2018-09-19T20:01:32.060