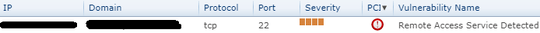

This is an item that I am failing in my PCI compliance scan report:

I use port 22 for SFTP connections to transfer files from my local computer to the server and vice versa. I tried to dispute this failure by sending this email:

Our username is [username]. Our latest scan, run today for IP [IP address], shows that we failed the following item:

Remote Access Service Detected

It doesn't appear this one is actually a failure. If it is, please provide any relevant information as to why it is.

We are using port 22 to connect remotely using SFTP and that is normal.

The reply from the PCI Compliance support team was:

Thank you for contacting PCI compliance support.

PCI compliance requires that all remote access services be turned off when not in use, however, if you require them to be on at all times for the business to be ran, you can file a dispute.

Does this mean that I need to enable port 22 only when I have an SFTP connection request and immediately disable port 22 when that connection is done transferring files? I thought it was a binary behaviour where I either had port 22 enabled on the server, or disabled, but not dynamically enabled/disabled based on the SFTP connection requests. Is that how it works?
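To make the question concrete, this is roughly what I imagine "on only while in use" would mean in practice. It is only a sketch under assumptions: the helper name `service_window` is hypothetical, the server is assumed to use systemd, and the unit name `sshd` is a guess (some distributions call it `ssh`). It would also have to be run on the server itself, for example from a console, since the SSH daemon is the thing being switched.

```python
import subprocess
from contextlib import contextmanager


@contextmanager
def service_window(unit="sshd", run=subprocess.run):
    """Start a systemd unit, yield, then stop it again.

    `unit` is an assumption: the SSH daemon is named differently
    across distributions. `run` is injectable so the logic can be
    exercised without touching a real service.
    """
    run(["systemctl", "start", unit], check=True)
    try:
        yield
    finally:
        # Runs even if the body raises, so the service is not left on.
        run(["systemctl", "stop", unit], check=True)


# Usage (needs root on the server):
#
#     with service_window("sshd"):
#         input("Transfer the files over SFTP, then press Enter...")
```

Whether a window like this would satisfy the scan is exactly what I am unsure about, because the scan would still see port 22 if it happened to run while the service was up.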

UPDATE 1: I found the following information in "Appendix D: ASV Scan Report Summary Example" of the document at https://www.pcisecuritystandards.org/documents/ASV_Program_Guide_v3.0.pdf:

VPN Detected

Note to scan customer:

Due to increased risk to the cardholder data environment when remote access software is present, please 1) justify the business need for this software to the ASV and 2) confirm it is either implemented securely per Appendix C or disabled/removed. Please consult your ASV if you have questions about this Special Note.

Does port 22 need to be enabled and disabled dynamically, for example only while an SFTP connection is being requested, or is it a binary configuration on the server where it is either enabled or disabled?

UPDATE 2: I was reading the following at https://medium.com/viithiisys/10-steps-to-secure-linux-server-for-production-environment-a135109a57c5:

Change the Port

We can change the default SSH Port to add a layer of opacity to keep your server safe.

Open the /etc/ssh/sshd_config file

replace default Port 22 with different port number say 1110

save & exit from the file

service sshd restart

Now to login define the port No.

ssh username@IP -p 1110

That seems to be "security through obscurity". Is it an acceptable solution or a workaround? Would the PCI Compliance scan pass just by changing the port from 22 to a different random number?
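Part of why I doubt the port change helps: an SSH server announces itself with an identification string beginning with `SSH-` as soon as a client connects (RFC 4253), whatever port it listens on. The sketch below shows how little it takes to spot that. The function names are hypothetical, and I am only assuming the ASV scanner does something similar; I do not know how it actually detects remote access services.

```python
import socket


def read_greeting(host, port, timeout=3.0):
    """Return the first bytes a TCP service sends after connect, or b'' on failure."""
    try:
        with socket.create_connection((host, port), timeout=timeout) as sock:
            return sock.recv(256)
    except OSError:
        # Covers refused connections, unreachable hosts, and timeouts.
        return b""


def looks_like_ssh(greeting):
    """True if any received line starts with the SSH identification prefix.

    The server may send other lines before its version string, so every
    line is checked rather than only the first.
    """
    return any(line.startswith(b"SSH-") for line in greeting.splitlines())


# Usage (host and port are placeholders):
#
#     print(looks_like_ssh(read_greeting("203.0.113.10", 1110)))
```

If the scanner fingerprints services this way, moving SSH from 22 to 1110 would change only where it finds the service, not whether it finds it.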

The way I'm reading it, they don't think you should have RAS software at all. The scan will always fail if you do. The "turned off when not in use" part sounds like a compromise they didn't want to make, so the bar for justification may be high. I'd guess it involves physically accessing the server to enable the service, using it, and then disabling it again, rather than any kind of automated response, as those are vulnerable to attack as well (so it's just security through obscurity). – Frank Thomas – 2018-07-23T20:02:07.990

@FrankThomas The option of running it on an alternate port is security by obscurity. The requirement to actually physically access the server to get files onto it is not, it's a simple requirement for use of physical access control over digital access control (and it's a lot easier to secure physical access to a system than digital access). – Austin Hemmelgarn – 2018-07-23T20:35:40.233

@AustinHemmelgarn, I was referring to technologies like port-knocking or other firewall magic that might start a service or open a port on some kind of demand. In that case you are obscuring the method of requesting that the port become active, which is an improvement, but not secure when under scrutiny. – Frank Thomas – 2018-07-23T20:40:30.133

@FrankThomas How would I transfer files using SFTP? Does this mean, do not use SFTP? Is there an alternative way to transfer files? I do it with FileZilla or with SSH and then SFTP commands from the terminal. – Jaime Montoya – 2018-07-23T21:20:47.730

@AustinHemmelgarn Without using SFTP (no digital access, only physical access), how could I transfer files from the server to my local computer or upload files from my local computer to the server? – Jaime Montoya – 2018-07-23T21:22:52.960