
Summary: Random disks on one datanode of a Hadoop cluster keep going read-only. Jobs fail, but there is no hardware alert on the server.

Hello,

I am administering a Hadoop cluster that runs on CentOS 7 (7.4.1708).

For a long time, the data science team had been getting failed jobs. Over the same period, the storage disks on one specific datanode kept going read-only.

Because the initial exception we were receiving was misleading, we couldn't connect the two problems (more precisely, we couldn't find proof that they were related). Every time a disk goes read-only I run fsck with the -a flag (automatic repair), but it only fixes logical, filesystem-level errors and does not find any hardware errors.
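Rather than waiting for jobs to fail, a disk that has gone read-only can be spotted from its mount options. The following is a minimal sketch, assuming the data disks are ext4 mounts listed in `/proc/mounts` (fields: device, mount point, type, options, dump, pass); the function name `read_only_mounts` is hypothetical.

```python
def read_only_mounts(mounts_text, fstypes=("ext4",)):
    """Return (device, mount_point) pairs that are currently mounted read-only.

    `mounts_text` is the content of /proc/mounts. Only filesystems whose type
    is in `fstypes` are considered.
    """
    result = []
    for line in mounts_text.splitlines():
        fields = line.split()
        if len(fields) < 4:
            continue  # skip blank or malformed lines
        device, mount_point, fstype, options = fields[:4]
        # Match the exact "ro" option; "errors=remount-ro" is only a policy,
        # not the current read-only state.
        if fstype in fstypes and "ro" in options.split(","):
            result.append((device, mount_point))
    return result
```

On the datanode itself, the input would be `open("/proc/mounts").read()`; running it from cron or a monitoring agent would flag the disk as soon as the kernel remounts it, rather than when the next job fails.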

We established the relation between the two problems when we found out that all failed jobs were using that specific node for their Application Master.
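The correlation above can be checked mechanically. A minimal sketch, assuming a list of `(app_id, am_host, final_status)` tuples has already been collected, for example from the ResourceManager REST API (`/ws/v1/cluster/apps`), whose application records include `finalStatus` and `amHostHttpAddress`; the function name and tuple layout are assumptions for illustration.

```python
from collections import Counter


def failure_rate_by_am_host(apps):
    """Map each Application Master host to (failed, total, failure_rate).

    `apps` is an iterable of (app_id, am_host, final_status) tuples; the
    tuple layout is an assumption made for this sketch.
    """
    total = Counter()
    failed = Counter()
    for _app_id, am_host, final_status in apps:
        total[am_host] += 1
        if final_status == "FAILED":
            failed[am_host] += 1
    # Every host in `total` has at least one application, so no division by zero.
    return {
        host: (failed[host], total[host], failed[host] / total[host])
        for host in total
    }
```

A host whose failure rate is far above the others is the first one to inspect.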

Although there are a lot of disk errors at the OS level, no hardware errors or alerts are reported on the servers (LED signals / hardware interface). Is such a hardware report necessary for a problem to be called a hardware issue?

Thanks in advance.

OS: CentOS 7.4.1708

Hardware: HPE Apollo 4530

Hard Disk: HPE MB6000GEFNB 765251-002 (6TB 6G hot-plug SATA 7.2K 3.5in 512e MDL LP HDD) (SMART is reported as not supported)

Application and system logs are included below for detail.

We found the exceptions below in the YARN NodeManager logs of the problematic node:

2018-06-04 06:54:27,390 ERROR yarn.YarnUncaughtExceptionHandler (YarnUncaughtExceptionHandler.java:uncaughtException(68)) - Thread Thread[LocalizerRunner for container_e77_1527963665893_4250_01_000009,5,main] threw an Exception.
org.apache.hadoop.yarn.exceptions.YarnRuntimeException: java.lang.InterruptedException
    at org.apache.hadoop.yarn.event.AsyncDispatcher$GenericEventHandler.handle(AsyncDispatcher.java:259)
    at org.apache.hadoop.yarn.server.nodemanager.containermanager.localizer.ResourceLocalizationService$LocalizerRunner.run(ResourceLocalizationService.java:1138)
Caused by: java.lang.InterruptedException
    at java.util.concurrent.locks.AbstractQueuedSynchronizer.acquireInterruptibly(AbstractQueuedSynchronizer.java:1220)
    at java.util.concurrent.locks.ReentrantLock.lockInterruptibly(ReentrantLock.java:335)
    at java.util.concurrent.LinkedBlockingQueue.put(LinkedBlockingQueue.java:339)
    at org.apache.hadoop.yarn.event.AsyncDispatcher$GenericEventHandler.handle(AsyncDispatcher.java:251)
    ... 1 more

2018-06-04 06:54:27,394 INFO localizer.ResourceLocalizationService (ResourceLocalizationService.java:run(1134)) - Localizer failed
java.lang.RuntimeException: Error while running command to get file permissions : java.io.InterruptedIOException: java.lang.InterruptedException
    at org.apache.hadoop.util.Shell.runCommand(Shell.java:947)
    at org.apache.hadoop.util.Shell.run(Shell.java:848)
    at org.apache.hadoop.util.Shell$ShellCommandExecutor.execute(Shell.java:1142)
    at org.apache.hadoop.util.Shell.execCommand(Shell.java:1236)
    at org.apache.hadoop.util.Shell.execCommand(Shell.java:1218)
    at org.apache.hadoop.fs.FileUtil.execCommand(FileUtil.java:1077)
    at org.apache.hadoop.fs.RawLocalFileSystem$DeprecatedRawLocalFileStatus.loadPermissionInfo(RawLocalFileSystem.java:686)
    at org.apache.hadoop.fs.RawLocalFileSystem$DeprecatedRawLocalFileStatus.getPermission(RawLocalFileSystem.java:661)
    at org.apache.hadoop.yarn.server.nodemanager.containermanager.localizer.ResourceLocalizationService.checkLocalDir(ResourceLocalizationService.java:1440)
    at org.apache.hadoop.yarn.server.nodemanager.containermanager.localizer.ResourceLocalizationService.getInitializedLocalDirs(ResourceLocalizationService.java:1404)
    at org.apache.hadoop.yarn.server.nodemanager.containermanager.localizer.ResourceLocalizationService.access$800(ResourceLocalizationService.java:141)
    at org.apache.hadoop.yarn.server.nodemanager.containermanager.localizer.ResourceLocalizationService$LocalizerRunner.run(ResourceLocalizationService.java:1111)
Caused by: java.lang.InterruptedException
    at java.lang.Object.wait(Native Method)
    at java.lang.Object.wait(Object.java:502)
    at java.lang.UNIXProcess.waitFor(UNIXProcess.java:396)
    at org.apache.hadoop.util.Shell.runCommand(Shell.java:937)
    ... 11 more
    at org.apache.hadoop.fs.RawLocalFileSystem$DeprecatedRawLocalFileStatus.loadPermissionInfo(RawLocalFileSystem.java:726)
    at org.apache.hadoop.fs.RawLocalFileSystem$DeprecatedRawLocalFileStatus.getPermission(RawLocalFileSystem.java:661)
    at org.apache.hadoop.yarn.server.nodemanager.containermanager.localizer.ResourceLocalizationService.checkLocalDir(ResourceLocalizationService.java:1440)
    at org.apache.hadoop.yarn.server.nodemanager.containermanager.localizer.ResourceLocalizationService.getInitializedLocalDirs(ResourceLocalizationService.java:1404)
    at org.apache.hadoop.yarn.server.nodemanager.containermanager.localizer.ResourceLocalizationService.access$800(ResourceLocalizationService.java:141)
    at org.apache.hadoop.yarn.server.nodemanager.containermanager.localizer.ResourceLocalizationService$LocalizerRunner.run(ResourceLocalizationService.java:1111)
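Both NodeManager traces bottom out in `java.lang.InterruptedException`. In the second one, the localizer thread was interrupted while waiting (`UNIXProcess.waitFor`) for an external command that Hadoop runs to read the permissions of a local directory (`loadPermissionInfo`). The logs alone do not say why that command was slow; one possibility consistent with the rest of this post is that it was blocked on I/O to a failing disk. To pull the exception chain out of long traces like these, here is a small sketch; it assumes one trace per input and the standard `Caused by:` layout, and `exception_chain` is a hypothetical name.

```python
import re

# An exception header: optional "Caused by: ", a class name ending in
# Exception or Error, then an optional ": message".
_HEADER = re.compile(r"^(?:Caused by: )?([\w$.]+(?:Exception|Error))(?::\s*(.*))?$")


def exception_chain(trace):
    """Return [(exception_class, message), ...] for one Java stack trace.

    The first entry is the top-level exception; each later entry comes from a
    "Caused by:" line, so the last entry is the root cause.
    """
    chain = []
    for raw in trace.splitlines():
        line = raw.strip()
        # Skip frames ("at ...") and elided-frame markers ("... N more").
        if not line or line.startswith("at ") or line.startswith("..."):
            continue
        match = _HEADER.match(line)
        if match and (not chain or line.startswith("Caused by:")):
            chain.append((match.group(1), match.group(2) or ""))
    return chain
```

Applied to the traces above, the last element of the chain is `java.lang.InterruptedException` in both cases, which points at the interrupted wait rather than at the wrapping `RuntimeException` message.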

There are also some rare exceptions like the one below in the HDFS logs of the node:

2018-06-10 06:55:27,280 ERROR datanode.DataNode (DataXceiver.java:run(278)) - dnode003.mycompany.local:50010:DataXceiver error processing WRITE_BLOCK operation src: /10.0.0.17:50095 dst: /10.0.0.13:50010
java.io.IOException: Premature EOF from inputStream
    at org.apache.hadoop.io.IOUtils.readFully(IOUtils.java:203)
    at org.apache.hadoop.hdfs.protocol.datatransfer.PacketReceiver.doReadFully(PacketReceiver.java:213)
    at org.apache.hadoop.hdfs.protocol.datatransfer.PacketReceiver.doRead(PacketReceiver.java:134)
    at org.apache.hadoop.hdfs.protocol.datatransfer.PacketReceiver.receiveNextPacket(PacketReceiver.java:109)
    at org.apache.hadoop.hdfs.server.datanode.BlockReceiver.receivePacket(BlockReceiver.java:500)
    at org.apache.hadoop.hdfs.server.datanode.BlockReceiver.receiveBlock(BlockReceiver.java:929)
    at org.apache.hadoop.hdfs.server.datanode.DataXceiver.writeBlock(DataXceiver.java:817)
    at org.apache.hadoop.hdfs.protocol.datatransfer.Receiver.opWriteBlock(Receiver.java:137)
    at org.apache.hadoop.hdfs.protocol.datatransfer.Receiver.processOp(Receiver.java:74)
    at org.apache.hadoop.hdfs.server.datanode.DataXceiver.run(DataXceiver.java:251)
    at java.lang.Thread.run(Thread.java:745)

Linux system (dmesg) logs:

[ +0.000108] Buffer I/O error on device sdn1, logical block 174931199
[ +0.756448] JBD2: Detected IO errors while flushing file data on sdn1-8
[Jun11 14:57] hpsa 0000:07:00.0: scsi 1:0:0:2: resetting Direct-Access HP LOGICAL VOLUME RAID-0 SSDSmartPathCap- En- Exp=3
[Jun11 14:58] hpsa 0000:07:00.0: scsi 1:0:0:2: reset completed successfully Direct-Access HP LOGICAL VOLUME RAID-0 SSDSmartPathCap- En- Exp=3
[ +0.000176] hpsa 0000:07:00.0: scsi 1:0:0:4: resetting Direct-Access HP LOGICAL VOLUME RAID-0 SSDSmartPathCap- En- Exp=3
[ +0.000424] hpsa 0000:07:00.0: scsi 1:0:0:4: reset completed successfully Direct-Access HP LOGICAL VOLUME RAID-0 SSDSmartPathCap- En- Exp=3
[Jun11 15:24] EXT4-fs error (device sdo1): ext4_mb_generate_buddy:757: group 32577, block bitmap and bg descriptor inconsistent: 31238 vs 31241 free clusters
[ +0.013631] JBD2: Spotted dirty metadata buffer (dev = sdo1, blocknr = 0). There's a risk of filesystem corruption in case of system crash.
...
...
[Jun12 04:56] sd 1:0:0:11: [sdm] tag#163 FAILED Result: hostbyte=DID_OK driverbyte=DRIVER_SENSE
[ +0.000016] sd 1:0:0:11: [sdm] tag#163 Sense Key : Medium Error [current]
[ +0.000019] sd 1:0:0:11: [sdm] tag#163 Add. Sense: Unrecovered read error
[ +0.000004] sd 1:0:0:11: [sdm] tag#163 CDB: Write(16) 8a 00 00 00 00 00 44 1f a4 00 00 00 04 00 00 00
[ +0.000002] blk_update_request: critical medium error, dev sdm, sector 1142924288
[ +0.000459] EXT4-fs warning (device sdm1): ext4_end_bio:332: I/O error -61 writing to inode 61451821 (offset 0 size 0 starting block 142865537)
[ +0.000004] Buffer I/O error on device sdm1, logical block 142865280
[ +0.000216] EXT4-fs warning (device sdm1): ext4_end_bio:332: I/O error -61 writing to inode 61451821 (offset 0 size 0 starting block 142865538)
[ +0.000003] Buffer I/O error on device sdm1, logical block 142865281
[ +0.000228] EXT4-fs warning (device sdm1): ext4_end_bio:332: I/O error -61 writing to inode 61451821 (offset 0 size 0 starting block 142865539)
[ +0.000002] Buffer I/O error on device sdm1, logical block 142865282
[ +0.000247] EXT4-fs warning (device sdm1): ext4_end_bio:332: I/O error -61 writing to inode 61451821 (offset 0 size 0 starting block 142865540)
[ +0.000002] Buffer I/O error on device sdm1, logical block 142865283
[ +0.000297] EXT4-fs warning (device sdm1): ext4_end_bio:332: I/O error -61 writing to inode 61451821 (offset 0 size 0 starting block 142865541)
[ +0.000003] Buffer I/O error on device sdm1, logical block 142865284
[ +0.000235] EXT4-fs warning (device sdm1): ext4_end_bio:332: I/O error -61 writing to inode 61451821 (offset 0 size 0 starting block 142865542)
[ +0.000003] Buffer I/O error on device sdm1, logical block 142865285
[ +0.000241] EXT4-fs warning (device sdm1): ext4_end_bio:332: I/O error -61 writing to inode 61451821 (offset 0 size 0 starting block 142865543)
[ +0.000002] Buffer I/O error on device sdm1, logical block 142865286
[ +0.000223] EXT4-fs warning (device sdm1): ext4_end_bio:332: I/O error -61 writing to inode 61451821 (offset 0 size 0 starting block 142865544)
[ +0.000002] Buffer I/O error on device sdm1, logical block 142865287
[ +0.000210] EXT4-fs warning (device sdm1): ext4_end_bio:332: I/O error -61 writing to inode 61451821 (offset 0 size 0 starting block 142865545)
[ +0.000003] Buffer I/O error on device sdm1, logical block 142865288
[ +0.000227] EXT4-fs warning (device sdm1): ext4_end_bio:332: I/O error -61 writing to inode 61451821 (offset 0 size 0 starting block 142865546)
[ +0.000002] Buffer I/O error on device sdm1, logical block 142865289
[ +0.000192] Buffer I/O error on device sdm1, logical block 142865290
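The medium error on `sdm` and the ext4 errors on `sdm1` appear to be the same event. The sketch below decodes the WRITE(16) CDB from the log (in a 16-byte READ/WRITE CDB, bytes 2-9 hold the big-endian LBA and bytes 10-13 the transfer length) and maps that LBA to a filesystem block. It assumes that `sdm1` starts at sector 2048, that logical sectors are 512 bytes (the disk is listed as 512e) and that ext4 uses 4 KiB blocks; the partition offset and block size are assumptions rather than values taken from the post, and both function names are hypothetical.

```python
def decode_write16_cdb(hex_bytes):
    """Return (lba, transfer_length) from a SCSI WRITE(16) CDB given as hex text."""
    cdb = bytes.fromhex(hex_bytes)  # whitespace between hex pairs is ignored
    if len(cdb) != 16 or cdb[0] != 0x8A:  # 0x8A is the WRITE(16) opcode
        raise ValueError("not a WRITE(16) CDB")
    lba = int.from_bytes(cdb[2:10], "big")     # bytes 2-9: logical block address
    length = int.from_bytes(cdb[10:14], "big")  # bytes 10-13: number of sectors
    return lba, length


def fs_block_for_sector(sector, partition_start=2048, sector_size=512, block_size=4096):
    """Map an absolute disk sector to a block number inside the partition.

    partition_start, sector_size and block_size are assumed defaults.
    """
    return (sector - partition_start) * sector_size // block_size
```

With these inputs, the CDB decodes to LBA 1142924288 (the `sector` in the `blk_update_request` line) and a length of 1024 sectors, and that LBA maps to block 142865280, the first `Buffer I/O error` block on `sdm1`. The real partition offset can be read from `/sys/block/sdm/sdm1/start` to confirm the 2048 assumption.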

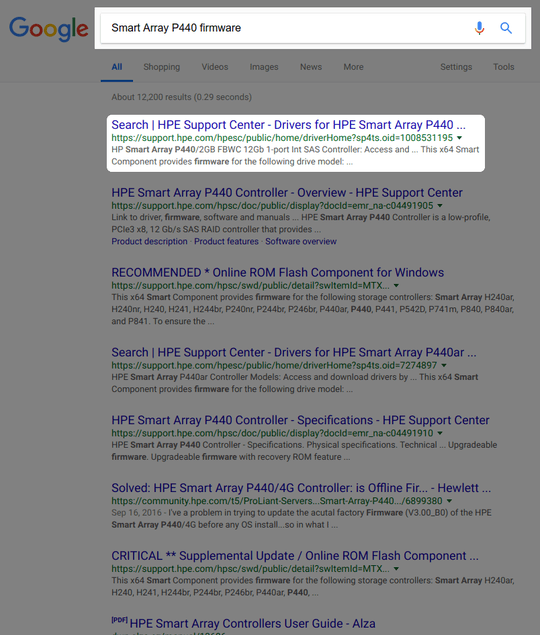
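To see which devices are affected and how often, the dmesg output can be tallied per device. A minimal sketch, assuming the message formats shown in the dmesg log above; the category labels are hypothetical, and controller resets are keyed by SCSI address (for example `1:0:0:2`) rather than by `sd` name, while partition errors (`sdm1`) and whole-disk errors (`sdm`) are counted under separate keys.

```python
import re
from collections import Counter

# Kernel message patterns taken from the log above; group 1 is the device.
_PATTERNS = {
    "buffer_io_error": re.compile(r"Buffer I/O error on device (\w+)"),
    "medium_error": re.compile(r"critical medium error, dev (\w+)"),
    "ext4_error": re.compile(r"EXT4-fs error \(device (\w+)\)"),
    "jbd2_io_error": re.compile(r"JBD2: Detected IO errors while flushing file data on (\w+)-\d+"),
    "controller_reset": re.compile(r"hpsa \S+: scsi (\S+): resetting"),
}


def summarize_dmesg(text):
    """Count (device, category) occurrences in dmesg text."""
    counts = Counter()
    for line in text.splitlines():
        for category, pattern in _PATTERNS.items():
            match = pattern.search(line)
            if match:
                counts[(match.group(1), category)] += 1
    return counts
```

Feeding it the output of `dmesg` at regular intervals shows whether the errors stay on one set of disks or move around the node.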

Thanks, @Deltik. I have checked our firmware version: it is 4.52. Good to know, though. – Sedat Kestepe – 2018-07-03T14:22:03.367

@SedatKestepe: There have actually been some important updates since the advisory, including fixes for more lockups, so it may still be worth trying a firmware update. – Deltik – 2018-07-03T15:48:28.077