Does anyone know of a way to brute-force the values at a particular offset in a file? Four consecutive bytes need to be brute-forced. I know the correct SHA-1 of the file as it was before it was corrupted, so I'd like to recompute the SHA-1 of the complete file each time the byte values change and compare it against that known hash.

I know exactly which 4 bytes were changed, because the file was given to me by a data recovery expert as a recovery challenge. For those who are interested, it is a RAR file in which 4 bytes were intentionally changed. I was told the offsets of the 4 changed bytes and the original SHA-1. The person said it's IMPOSSIBLE to recover the exact file in the archive once the 4 bytes were changed, even though only a few bytes are affected and the location of the corruption is known, because the archive doesn't have a recovery record. I'm trying to see if there is a way to fill in those particular 4 bytes correctly so the file will decompress without error. The file size is around 5 MB.

Example:

I uploaded screenshots to show exactly what I'm trying to do. I believe someone with more rep can post them here for me.

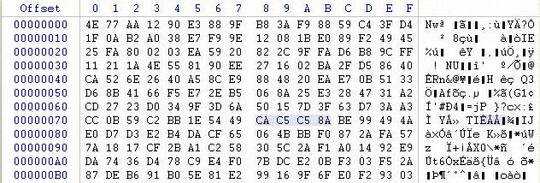

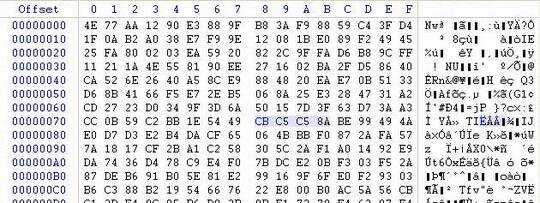

The example offset I'm focusing on is 0x78, where the first picture shows the value as CA.

I want the script to increase the value by 1 so it becomes CB, as shown in the second picture. I want it to keep increasing the value by 1 and compare the SHA-1 of the whole file each time, changing only those 4 bytes at the specified offset.

It would try CAC5C58A and compare the SHA-1. If it doesn't match, it would try CBC5C58A. Once the first byte reaches FF, it would roll over to 00C6C58A, and so on. Basically, I would like it to be able to go from 00000000 to FFFFFFFF, but also to have the option to choose where it starts and ends. I know it could take some time, but I would still like to try it. Keep in mind that I know the exact offset of the corrupt bytes. I just need the correct values.
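A minimal sketch of the search described above, in Python. The function name `brute_force_bytes` and its parameters are hypothetical and not taken from any existing tool. It assumes the whole file fits in memory and that the first of the 4 bytes is the one that increments fastest, matching the CAC5C58A, CBC5C58A, ... order. The unchanged part of the file before the offset is hashed once and that state is copied for each candidate.

```python
import hashlib


def brute_force_bytes(data, offset, target_sha1, start=0, end=0xFFFFFFFF, width=4):
    """Try every counter value in [start, end] at data[offset:offset + width].

    Returns the first candidate bytes for which the SHA-1 of the whole file
    equals target_sha1, or None if nothing in the range matches.
    """
    target = target_sha1.lower()
    # Hash the untouched prefix once; each candidate starts from a copy of it.
    prefix = hashlib.sha1(data[:offset])
    suffix = data[offset + width:]
    for counter in range(start, end + 1):
        # Little-endian: the first byte changes fastest, so CA C5 C5 8A is
        # followed by CB C5 C5 8A, and FF C5 C5 8A by 00 C6 C5 8A.
        candidate = counter.to_bytes(width, "little")
        h = prefix.copy()
        h.update(candidate)
        h.update(suffix)
        if h.hexdigest() == target:
            return candidate
    return None
```

With a hypothetical file name, a call could look like `brute_force_bytes(open("corrupt.rar", "rb").read(), 0x78, known_sha1)`, where `known_sha1` is the hex digest that came with the challenge. The `start` and `end` arguments are plain integers in the same little-endian sense, so the range can be split into chunks and run in several processes.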

If you search Google for "How to fix a corrupted file by brute force", you'll find that someone wrote a Linux program for this. However, it only works against the files included with the program. I'm looking for some way to use the same process with my file.

Welcome to Super User! I have edited your question to remove the request for a program, which would be off-topic. Can you edit your question to include (some of) the examples you saw? It's good that you have done research, but showing us exactly what research that is would be helpful :) – bertieb – 2018-04-19T10:21:51.117

Thanks for the heads-up, bertieb! I added some more details. – Sbt19 – 2018-04-19T10:48:58.570

Could I ask how you ended up with this file, and how you can be sure that those are the only 4 corrupt bytes? – Edoardo – 2018-04-19T12:38:22.867

Do you know the file format? If you do, you might be able to work out the correct values or limit the ranges, rather than trying to brute-force them. In general, however, I'd suggest any corrupted file should be dumped for security reasons. – StephenG – 2018-04-19T14:59:40.867

@eddyce I'm really interested in the second part of your question - why those 4 bytes? – Craig Otis – 2018-04-19T15:26:55.160

I guess the blog post you are alluding to is https://conorpp.com/how-to-fix-a-corrupted-file-by-brute-force – tripleee – 2018-04-19T15:40:03.050

Out of curiosity, how did the file get corrupted? And how do you know it was those four bytes? – JohnEye – 2018-04-19T16:49:17.650

@CraigOtis I never asked why those 4 bytes; “how can you be sure those are the only 4 corrupt ones” is the question. – Edoardo – 2018-04-19T19:27:43.507

The program "ghex" is useful for things like this. – Lee Daniel Crocker – 2018-04-19T19:28:04.020

@LeeDanielCrocker Could you elaborate on how it's useful? Are you going to manually save 4 billion files in ghex, run sha on them and see if it matches? A bit tedious. – pipe – 2018-04-19T21:46:37.577

The question was about patching a few bytes in a single file. – Lee Daniel Crocker – 2018-04-19T22:55:58.137

@LeeDanielCrocker No, the question is about patching it until the checksum gets the expected value, and just like pipe already asked, we now wonder if you didn't read the question properly, or if `ghex` can actually do this. – tripleee – 2018-04-20T03:26:30.327

I added more details about the file in question. It's only a data recovery test file. – Sbt19 – 2018-04-20T05:12:00.813

@eddyce: It's pretty easy to get into this situation if you accidentally save an edit in your hex editor and then it discards its undo buffer when it saves. (I've used ones that do that.) – user541686 – 2018-04-20T07:37:31.187

Note that, due to the pigeonhole principle, there might be more than one sequence of bytes that makes the hash match. One of those sequences might be more "valid" for whatever type of file this is. – Roger Lipscombe – 2018-04-20T08:34:13.467

It looks like you are searching for a hex editor. https://softwarerecs.stackexchange.com/ is the correct place to ask. – Mawg says reinstate Monica – 2018-04-20T09:58:58.340

@mehrdad OK, it's some kind of challenge then :) Advice: make sure you are checking the file against the SHA-1 that was given to you and not only by unpacking the RAR archive, because - maybe - the changed 4 bytes are part of the RAR CRC records... – Edoardo – 2018-04-20T15:00:00.627

You might time how long it takes to sha1 the current file and multiply that by 2^32 for the worst case search time. If each sha1 evaluation takes 0.01 seconds you’re looking at worst case 1.36 years unless you parallelize the search. On average, half of that. – rrauenza – 2018-04-20T16:51:42.720
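The estimate in the comment above can be reproduced with a few lines of Python. This is only a sketch of the arithmetic: the helper name `worst_case_seconds` is hypothetical, and the 0.01 seconds per SHA-1 is the assumed figure from the comment, not a measurement.

```python
SECONDS_PER_YEAR = 365 * 24 * 60 * 60


def worst_case_seconds(seconds_per_hash, num_bytes=4):
    """Time to hash every possible value of num_bytes unknown bytes."""
    return seconds_per_hash * 256 ** num_bytes


# Assumed cost of one full-file SHA-1, as in the comment above.
worst_years = worst_case_seconds(0.01) / SECONDS_PER_YEAR
average_years = worst_years / 2  # on average the match is found halfway through

print(f"worst case: {worst_years:.2f} years, average: {average_years:.2f} years")
```

Replacing 0.01 with a measured time for one SHA-1 of the actual file gives a more realistic figure, and splitting the range across N workers divides the wall-clock time by roughly N.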

Related: https://math.stackexchange.com/questions/1410509/probability-number-of-guesses-to-get-the-correct-item-from-a-set-after-repet – rrauenza – 2018-04-20T16:53:25.953

Let’s Enhance! How we found @rogerkver’s $1,000 wallet obfuscated private key – Vlastimil Ovčáčík – 2018-04-25T09:33:05.893