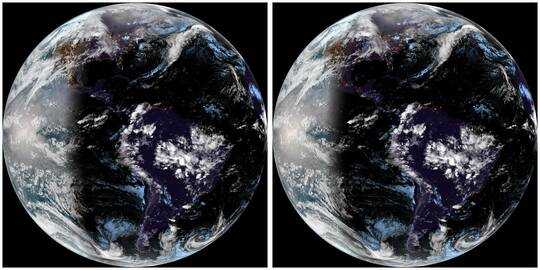

I'm dealing with a large archive of satellite images of the Earth, each taken 15 minutes apart over the same area, so they are quite similar to each other. Two consecutive ones look like this:

Video algorithms do very well at compressing multiple similar images. However, these images are too large for video (10848x10848), and using video encoders would strip the images' metadata, so extracting the frames and restoring the metadata would be cumbersome even if I got a video encoder to work with such large images.

To run some tests, I reduced the 96 images of one day to 1080x1080 pixels, totaling 40.1 MB, and tried different compression tools, with the following results:

- zip: 39.8 MB

- rar: 39.8 MB

- 7z: 39.6 MB

- tar.bz2: 39.7 MB

- zpaq v7.14: 38.3 MB

- fp8 v2: 32.5 MB

- paq8pxd v45: 30.9 MB

The last three are supposed to take much better advantage of context, and indeed they work better than traditional compression, but the compression ratio is still pretty poor compared with mp4 video, which can take the set down to 15 MB or even less while preserving the image quality.

However, none of the algorithms used by those compression utilities seems to take advantage of the similarity between images the way video compression does. In fact, packJPG, which compresses each image separately, gets the whole set down to 32.9 MB, quite close to fp8 and paq8pxd, without taking any advantage of the similarities between images (because each image is compressed individually).
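To put the figures above on one scale, here is a small sketch that expresses each result as a fraction of the original 40.1 MB. The sizes are the ones quoted in this question; the mp4 entry uses the approximate 15 MB figure and is lossy, so it is only a rough reference point.

```python
# Sizes in MB as reported above; the original set is 40.1 MB.
ORIGINAL_MB = 40.1

results_mb = {
    "zip": 39.8,
    "rar": 39.8,
    "7z": 39.6,
    "tar.bz2": 39.7,
    "zpaq v7.14": 38.3,
    "fp8 v2": 32.5,
    "paq8pxd v45": 30.9,
    "packJPG": 32.9,
    "mp4 (approx., lossy)": 15.0,
}

# Fraction of the original size that remains after compression.
ratios = {name: size / ORIGINAL_MB for name, size in results_mb.items()}

# Print from worst to best.
for name, ratio in sorted(ratios.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{name:<22}{ratio:6.1%}")
```

The gap between the best general-purpose result and the video figure is what the question is about.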

In another experiment, I calculated in Matlab the difference of the two images above, and it looks like this:

Compressing both original images (219.5 + 217.0 = 436.5 kB total) with fp8 gets them down to 350.0 kB (80%), but compressing one of them plus the difference image (saved as a jpg of the same quality, 122.5 kB) results in a file of 270.8 kB (62%). So again (as the mp4 and packJPG comparisons suggested), fp8 doesn't seem to take much advantage of the similarities. Even with rar, one image plus the difference does better than fp8 on the original images: rar gets it down to 333.6 kB (76%).
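A minimal sketch of the "one image plus the difference" idea, under several assumptions: it is not the Matlab code used above, it works on raw pixel bytes rather than JPEG files, it stores the difference losslessly modulo 256 instead of as a lossy jpg, and it uses Python's `lzma` as a stand-in for the compressors tested here. The two frames are synthetic placeholders, not the satellite images.

```python
import lzma
import random


def delta_encode(prev: bytes, cur: bytes) -> bytes:
    """Per-byte difference cur - prev, wrapped modulo 256 so it is reversible."""
    return bytes((c - p) & 0xFF for p, c in zip(prev, cur))


def delta_decode(prev: bytes, diff: bytes) -> bytes:
    """Rebuild the second frame from the first frame and the stored difference."""
    return bytes((p + d) & 0xFF for p, d in zip(prev, diff))


# Synthetic stand-ins for two consecutive 64x64 grayscale frames (hypothetical data).
rng = random.Random(0)
frame_a = bytes(rng.randrange(256) for _ in range(64 * 64))
changed = bytearray(frame_a)
for _ in range(200):  # alter a small number of pixels between frames
    changed[rng.randrange(len(changed))] = rng.randrange(256)
frame_b = bytes(changed)

diff = delta_encode(frame_a, frame_b)

# Compare compressing both frames independently with frame A plus the difference.
separate = len(lzma.compress(frame_a)) + len(lzma.compress(frame_b))
with_delta = len(lzma.compress(frame_a)) + len(lzma.compress(diff))
print("separate:", separate, "bytes; frame + diff:", with_delta, "bytes")
```

On this toy data the difference is mostly zeros, so it compresses far better than the second frame on its own. Real frames differ much more than this, so the gain would be smaller.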

I guess there must be a good compression solution for this problem, as I can envision many applications. Besides my particular case, I guess many professional photographers have many similar shots from sequential shooting, time-lapse series, etc., all of which would benefit from such compression.

Also, I don't require lossless compression, at least not for the image data (the metadata must be preserved).

So... is there a compression method that exploits the similarities between the images being compressed?

The two images of the above test can be downloaded here, and the 96 images of the first test here.

More feedback from the people who put the question on hold would be appreciated. I feel the question is general enough and can be answered without pointing to a specific product, but to a method, algorithm or technique. – Camilo Rada – 2018-04-09T17:09:11.893

Peanut gallery (I didn't vote to close), but "Is there a compression utility that take advantage of the similarities between images better than zpaq and fp8?" and "Is there a updated/maintained version of the fp8 utility?" are likely the offending lines. Contrast that with e.g. "Is there a compression *method, algorithm or technique* that take advantage of the similarities between images better than zpaq and fp8?" The focus is arguably much different. Asking for software is probably redundant anyway, since specific software (if applicable) will almost certainly be mentioned in any answer given. – Anaksunaman – 2018-04-09T22:45:22.420

@Anaksunaman OK, I updated and edited the question. So, if you consider it appropriate, please vote to reopen it. (I have to say that after it was closed I posted a similar one in Software Recommendations SE, but I still feel this is a better place for it.) – Camilo Rada – 2018-04-10T00:14:07.897

I agree. And done. Good luck. =) – Anaksunaman – 2018-04-10T00:35:01.727

"Too big for video"? Not sure I agree with this. Some codecs have very high or unlimited max resolutions. You're not trying to build a watchable video, just compress some static images. Could you encode the metadata as subtitles or other data? – benshepherd – 2018-04-11T10:07:49.113

@benshepherd Yes, I'll explore that more. I've tried it without success, but that could be just the way I'm trying it, or a limitation of the software I've used and not of the codecs. Thanks – Camilo Rada – 2018-04-12T00:24:34.907

To add to the list of applications, I would need this to store the original frames of a time-lapse project that will get additional parts in the future. The current 10 000 x 4K JPG images take 25 GB of space, whereas an MP4 composed of them takes only 85 MB. – Akseli Palén – 2019-10-15T12:39:05.530