-1

1

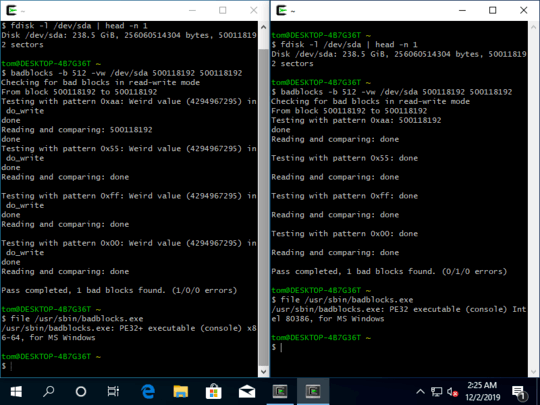

This is the second time that I'm receiving this error running badblocks, approximately 2 years apart from the last time, and the vast majority of factors from hardware (cables, etc.) to software (the installation of the operating system itself) have changed since, with the only relevant common factors being Cygwin and the badblocks program itself, making it highly likely that the issue is between those.

When running badblocks in destructive mode (i.e. with the -w switch), I get the error:

Weird value (4294967295) in do_writerrors

...at each stage of writing the patterns to the drive.

As far as I can tell, I seem to get this error only when running the command with the specified last block reported by fdisk -l:

$ fdisk -l /dev/sda

Disk /dev/sda: 931.5 GiB, 1000204886016 bytes, 1953525168 sectors

Units: sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

$ badblocks -b 512 -vws /dev/sda 1953525168 1953525168

Checking for bad blocks in read-write mode

From block 1953525168 to 1953525168

Testing with pattern 0xaa: Weird value (4294967295) in do_writerrors)

done

Reading and comparing: 1953525168ne, 0:00 elapsed. (0/0/0 errors)

done

Testing with pattern 0x55: Weird value (4294967295) in do_writerrors)

done

Reading and comparing: done

Testing with pattern 0xff: Weird value (4294967295) in do_writerrors)

done

Reading and comparing: done

Testing with pattern 0x00: Weird value (4294967295) in do_writerrors)

done

Reading and comparing: done

Pass completed, 1 bad blocks found. (1/0/0 errors)

$ badblocks -b 512 -vws /dev/sda 1953525168 1950000000

Checking for bad blocks in read-write mode

From block 1950000000 to 1953525168

Testing with pattern 0xaa: Weird value (4294967295) in do_writerrors)

done

Reading and comparing: 1953525168ne, 0:49 elapsed. (0/0/0 errors)

done

Testing with pattern 0x55: Weird value (4294967295) in do_writerrors)

done

Reading and comparing: done

Testing with pattern 0xff: Weird value (4294967295) in do_writerrors)

done

Reading and comparing: done

Testing with pattern 0x00: Weird value (4294967295) in do_writerrors)

done

Reading and comparing: done

Pass completed, 1 bad blocks found. (1/0/0 errors)

As can be seen, this also results in a false positive of a bad block, whereas this supposed bad block is nowhere to be found via CrystalDiskInfo:

At this point the drive has been zeroed multiple times and had badblocks write to its last few blocks tens of times, so there's been plenty of opportunity for the SMART values to have picked up a bad sector in block 1953525168 if one existed.

I believe 4294967295 is the partition size limitation (FF FF FF FF) on Windows. On Windows, there is a 2 TB limitation. It is in the number of sectors. Please see https://blogs.technet.microsoft.com/askcore/2010/02/18/understanding-the-2-tb-limit-in-windows-storage/ Given these values, you could try writing 0s (current Windows formatting system does this) to the disk and remap, but I recommend you to back it up before you try out anything. C6 indicates there is a problem on disk surface or perhaps a mechanical issue. This disk seems not recoverable.

– Epoxy – 2018-04-03T01:46:39.070When looking at the power on hours, it does not seem old (probably less than 2 years) and in that case, you may still have the warranty as well. – Epoxy – 2018-04-03T01:46:41.800

@Epoxy Doesn't that partition size limitation apply only to NTFS? It's not NTFS, but a proprietary filesystem, and I've had no previous issues with using it or using the machine I'm currently using to run

badblockson it. – Hashim – 2018-04-03T01:52:57.277@Epoxy The drive itself is a DiamondMax 10, released in 2005, the low power on hours are due to the fact that the machine it's in simply isn't used that often. – Hashim – 2018-04-03T01:54:14.873

@Epoxy Also, what do you mean by "remap" here? – Hashim – 2018-04-03T01:58:29.603

1If there is a compatible with the diagnostic utility with respect to the file system it will tell something different. In case if there is a firmware bug and/or if the software is unable to determine the file system there is a chance that it reports false statistics. I have seen it happen sometimes (rare). The proprietary file system may also have certain disk standard and limitations similar to that which I am not aware of. However, with these stats. it is still difficult to determine what caused it. – Epoxy – 2018-04-03T02:23:52.383

1If a read error occurs, it might mark the sector as bad but something else can cause read errors as well. Could be the moving heads or the circuitry issues. You could further test with something like Maxtor PowerMax. This will help you to get more valid results. (remap: mapping workable sectors and mark the bad blocks to prevent further use) – Epoxy – 2018-04-03T02:24:48.293

Recently ran into this error again on a completely different drive, this time a SATA one. Circumstances are completely different except that I'm using

badblocksover Cygwin again, so I'm pretty sure this is a problem withbadblocksat this point - maybe the Cygwin repo version of it since it seems to be such a rare error. Adding a bounty to see if someone can figure it out. – Hashim – 2019-11-28T22:38:25.770I suggest you change the cable between the board and the disk before you keep testing the disk. (or test the disk on another computer) – Eduardo Trápani – 2019-11-29T12:10:04.897

I thought this was clear enough from my last comment and from the note on the bounty itself, but I have now drastically overhauled the question to make it clear that this is not an issue with any one particular drive nor is it very likely to be a hardware issue. I am only looking to award the bounty to questions that actually answer the larger question here of what the error is, what it's caused by and how it can be fixed. – Hashim – 2019-12-01T22:12:18.793

1Have you tried to boot a live Linux distro and run

badblockson it? – dirdi – 2019-12-01T23:23:41.867I'll try that as soon as I can turn off my machine, but I'm pretty confident I won't be able to reproduce it, as this problem seems limited to the Cygwin repo's version of

badblocksbased on what I've seen of others running the same commands on their machines. Will report back once I have. – Hashim – 2019-12-01T23:27:14.477For what is worth, you should be testing with

1953525167.1953525168is "last block +1" in your case. – Tom Yan – 2019-12-02T10:47:20.650