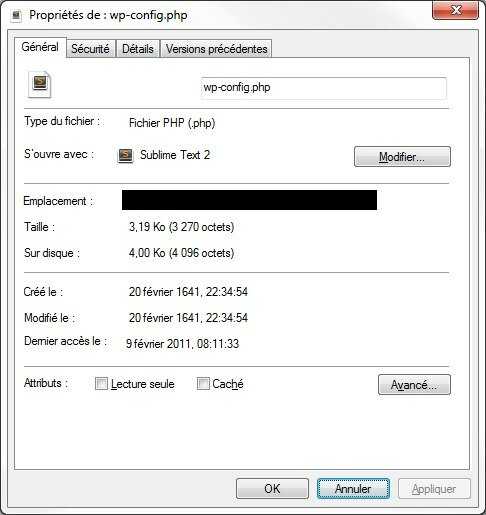

A few years ago, I stumbled on this file on our fileserver.

I wonder how a file can say it was created in 1641. As far as I know, time on pc is defined by the number of seconds since Jan 1st, 1970. If that index glitches out, you can get Dec 31, 1969 (the index probably says -1), but I'm stumped by this seemingly random date, which predates even the founding of the United States of America.

So how could a file be dated in 1641?

PS: Dates are in French. Février is February.

"As far as I know, time on pc is defined by the number of seconds since Jan 1st, 1970." - Even for systems where it is, dates before 1970 are easily represented by making that number (known as a Unix timestamp) negative. For example, Unix time -1000000000 corresponds to 1938-04-24 22:33:20. – marcelm – 2018-03-27T15:50:08.213

@marcelm Yes, but the minimum possible date there is in 1901 due to the limited range of 32-bit integers. – slhck – 2018-03-27T15:53:58.663
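The two points above (negative timestamps and the 32-bit floor) can be checked with a short sketch. It assumes a signed 32-bit counter of seconds and renders everything in UTC, so the time of day may differ from a value printed in a local time zone. The helper name `unix_to_utc` is made up for illustration.

```python
from datetime import datetime, timedelta, timezone

UNIX_EPOCH = datetime(1970, 1, 1, tzinfo=timezone.utc)

def unix_to_utc(seconds):
    """Convert a (possibly negative) Unix timestamp to a UTC datetime.

    Hypothetical helper: adding a timedelta to the epoch sidesteps
    platform limits that datetime.fromtimestamp can hit for negative values.
    """
    return UNIX_EPOCH + timedelta(seconds=seconds)

# Assumption: a signed 32-bit seconds counter, as in the comment above.
INT32_MIN = -2**31
INT32_MAX = 2**31 - 1

print(unix_to_utc(-1))              # one second before the epoch
print(unix_to_utc(-1_000_000_000))  # the negative example from the comment
print(unix_to_utc(INT32_MIN))       # earliest date a signed 32-bit counter can hold
print(unix_to_utc(INT32_MAX))       # latest one (the year 2038 limit)
```

Under that assumption the earliest representable date falls in December 1901, so a signed 32-bit Unix timestamp alone cannot produce a date in 1641.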

@slhck: I think marcelm was assuming a 64-bit timestamp, because that's what current Unix / Linux filesystems, kernels, and user-space software use. See the `clock_gettime(2)` man page for a definition of `struct timespec`, which is what `stat` and other system calls use to pass timestamps between user-space and the kernel. It's a struct with a `time_t` in seconds, and a `long tv_nsec` in nanoseconds. On 64-bit systems, both are 64-bit, so the whole timestamp is 128 bits (16 bytes). (Sorry for too much detail, I got carried away.) – Peter Cordes – 2018-03-27T16:03:21.127

@PeterCordes I actually appreciate the level of detail! I know 64-bit systems use a different `time_t` now; in hindsight I probably shouldn't even have brought up the year 2038 issue. – slhck – 2018-03-27T16:14:33.053

You got a good answer to how a date in the 1600s can be stamped to the file, now it is time to ponder how it happened. I'd look at the contents of that wp file very closely to see what might have been added, as that might shed light on how it happened. I'd look at the installed plugins and validate none are shady. I am thinking something modified that file and tried to manually stamp modified/created dates to hide that the file was modified but specified a Unix time instead of a Windows time. – Thomas Carlisle – 2018-03-28T12:35:38.440
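One way a Unix time mistaken for a Windows time could land in 1641 is sketched below. It relies on the fact that Windows `FILETIME` values count 100-nanosecond ticks since 1601-01-01 UTC. Everything else is an assumption for illustration: the 2010 date, the helper name `filetime_ticks_to_datetime`, and the idea that some tool scaled Unix seconds to ticks without adding the 1601-to-1970 offset. Nothing in the thread confirms this is what happened to the file in question.

```python
from datetime import datetime, timedelta, timezone

UNIX_EPOCH = datetime(1970, 1, 1, tzinfo=timezone.utc)
FILETIME_EPOCH = datetime(1601, 1, 1, tzinfo=timezone.utc)
TICKS_PER_SECOND = 10_000_000  # FILETIME ticks are 100 ns each

def filetime_ticks_to_datetime(ticks):
    """Interpret a tick count as a Windows FILETIME (hypothetical helper)."""
    return FILETIME_EPOCH + timedelta(microseconds=ticks // 10)

# Illustrative date only: noon UTC on 20 Feb 2010, expressed as Unix seconds.
real_time = datetime(2010, 2, 20, 12, 0, tzinfo=timezone.utc)
unix_seconds = int((real_time - UNIX_EPOCH).total_seconds())

# Assumed bug: scale seconds to ticks but forget the epoch offset.
buggy_ticks = unix_seconds * TICKS_PER_SECOND
# Correct conversion: add the seconds between 1601-01-01 and 1970-01-01.
epoch_gap = int((UNIX_EPOCH - FILETIME_EPOCH).total_seconds())
correct_ticks = (unix_seconds + epoch_gap) * TICKS_PER_SECOND

print(filetime_ticks_to_datetime(buggy_ticks))    # lands 369 years early
print(filetime_ticks_to_datetime(correct_ticks))  # the intended date
```

The two epochs are 369 years apart, so under this assumed bug any timestamp from 2010 would show up as the same calendar day in 1641.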

FYI, in Linux you can backdate something with `touch -d "20 Feb 1641" file`. It's occasionally useful when testing code like build systems or source repos which use timestamps to determine some behavior. – Karl Bielefeldt – 2018-03-29T14:04:07.327

King Louis XIII the Just was demonstrating the royal line's commitment to PHP? – Jesse Slicer – 2018-03-29T14:29:04.673

It may have involved a DeLorean. Just saying. – phyrfox – 2018-03-31T00:59:57.983