Before Windows 10, RDP had its own graphics driver to convert the rendered screen into network packets to send to the client, and it used the CPU exclusively. Windows 8 was the first to start using the GPU.

Since Windows 10 build 1511 and Windows Server 2016, RDP uses the AVC/H.264 codec in order to support screens larger than full HD. This codec uses the GPU, but only under certain conditions and only for full desktop sessions; otherwise it falls back to using the CPU as before.

Using AVC/H.264 is now the default, but you can disable it by opening the Group Policy Editor (gpedit.msc) and drilling down to:

Computer Configuration -> Administrative Templates -> Windows Components -> Remote Desktop Services -> Remote Desktop Session Host -> Remote Session Environment

To disable the use of the AVC/H.264 codec, set the following policies to Disabled:

- Configure H.264/AVC hardware encoding for Remote Desktop connections
- Prioritize H.264/AVC 444 Graphics mode for Remote Desktop connections
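If you prefer to script this rather than click through gpedit.msc, the two policies can be mirrored as registry values. The following is only a sketch: the key path and the value names `AVCHardwareEncodePreferred` and `AVC444ModePreferred` are my assumption of what these policies write, and `build_reg_file` is a hypothetical helper, so check the result against a machine where you set the policies by hand before importing anything. The script only generates the text of a .reg file; it does not touch the registry itself.

```python
# Sketch: generate a .reg file that mirrors setting both policies to Disabled.
# ASSUMPTION: the key path and value names below are what these two policies
# are believed to map to; verify them on your own system first.
POLICY_KEY = (
    r"HKEY_LOCAL_MACHINE\SOFTWARE\Policies\Microsoft"
    r"\Windows NT\Terminal Services"
)

# 0 is assumed to correspond to "Disabled" for both policies.
POLICY_VALUES = {
    "AVCHardwareEncodePreferred": 0,
    "AVC444ModePreferred": 0,
}


def build_reg_file(key=POLICY_KEY, values=POLICY_VALUES):
    """Return the text of a .reg file setting each value as a DWORD."""
    lines = ["Windows Registry Editor Version 5.00", "", f"[{key}]"]
    for name, data in values.items():
        # DWORDs in .reg files are written as 8 hexadecimal digits.
        lines.append(f'"{name}"=dword:{data:08x}')
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    print(build_reg_file(), end="")
```

Redirect the output to a file such as `disable-avc.reg` (a name chosen here for illustration), review it, and import it from an elevated prompt. A new RDP session is probably needed before the change is visible.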

In any case, sessions that are not full desktop sessions should not currently use the GPU (but this could change without notice).

The description of the policy for H.264/AVC hardware encoding contains this text :

This policy setting lets you enable H.264/AVC hardware encoding support for Remote Desktop Connections. When you enable hardware encoding, if an error occurs, we will attempt to use software encoding. If you disable or do not configure this policy, we will always use software encoding.

RDP uses its own video driver to render remote sessions. How did you confirm the GPU use is due to RDP? – I say Reinstate Monica – 2018-03-25T12:07:43.987

@TwistyImpersonator, I have 2 GPUs installed and only the first one's resources are used when remoting into the host. When I connect via terminal no resources are used. So, my current RDP session definitely consumes GPU resources on the host machine. – Matthias Wolf – 2018-03-25T12:09:55.920

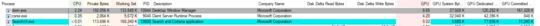

You should use Process Explorer to confirm the TermSrv (RDP session host service) is consuming GPU. Do this by opening the process's details then look on the GPU tab. – I say Reinstate Monica – 2018-03-25T12:14:42.790

@TwistyImpersonator, I added a screenshot to show which apps consume GPU resources. Those apps are not consuming GPU resources when connecting via terminal, only. – Matthias Wolf – 2018-03-25T12:25:05.723

that's not remote desktop, that's the desktop window manager. Perhaps the correct question to ask is why it's using GPU in the RDP session, though even then I'm not sure what's abnormal about that. – I say Reinstate Monica – 2018-03-25T13:44:48.320

That may be true but clearly rdp uses gpu resources. When logging gpu resources then during each rdp session a significant uptake of gpu resources is noticeable. – Matthias Wolf – 2018-03-26T16:20:02.123