So here's what's happening.

I started a backup of a drive on my server through a Linux live USB. I started copying the first drive with plain vanilla dd, just `sudo dd if=/dev/sda of=/dev/sdc1`, and then I remembered that this leaves the console blank until it finishes.

I needed to run a different backup to the same drive anyway, so I started that one as well with `sudo dd if=/dev/sdb of=/dev/sdc3 status=progress`, which prints a line of text showing the current transfer rate and the progress in bytes.

I was hoping for a method that shows the progress of the backup as a percentage, instead of me working out how many bytes have been copied out of 1.8 TB. Is there an easier way to do this than `status=progress`?
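The arithmetic itself is simple once you know the total size of the source. A minimal sketch, assuming a hypothetical helper `percent_done` that takes the byte count printed by `status=progress` and the total size in bytes (on Linux, `sudo blockdev --getsize64 /dev/sdb` reports a block device's size in bytes):

```bash
#!/usr/bin/env bash
# Hypothetical helper: whole-number percentage of COPIED bytes out of TOTAL bytes.
# Bash arithmetic is 64-bit, so multi-terabyte sizes multiplied by 100 still fit.
percent_done() {
  local copied=$1 total=$2
  if (( total <= 0 )); then
    echo "percent_done: total must be positive" >&2
    return 1
  fi
  echo $(( copied * 100 / total ))
}

# Example: 900 GB copied out of a 1.8 TB (1,800,000,000,000-byte) drive.
percent_done 900000000000 1800000000000   # prints 50
```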

Thank you for the help! This will definitely help with the whole process. I will try the `pv < /dev/sda > /dev/sdc3` method and hope that it's faster, as reported. I had to cancel the last run and turn the server back on today because everyone in my office had been complaining, but having a definite percentage to fall back on will help when I'm not sure how much time is left to tell them. I'm interested to see the ETA when I get it going again this Friday! Hahaha. – None – 2018-01-30T23:14:16.663
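Whether pv can show a percentage while reading from standard input depends on whether it can determine the input's size, so passing the size explicitly with pv's `-s` option is the safe route. A minimal sketch, assuming GNU coreutils and util-linux on the live USB; `source_size` and `copy_with_progress` are hypothetical helper names, and the function falls back to `dd ... status=progress` (bytes only, no percentage) when pv isn't installed:

```bash
#!/usr/bin/env bash
# Hypothetical helper: size of SRC in bytes.
source_size() {
  if [ -b "$1" ]; then
    blockdev --getsize64 "$1"   # block device (util-linux); needs root
  else
    stat -c %s "$1"             # regular file (GNU stat)
  fi
}

# Hypothetical helper: copy SRC to DST, showing a percentage when pv is available.
copy_with_progress() {
  local src=$1 dst=$2
  if command -v pv >/dev/null 2>&1; then
    # -s tells pv the total size, so it can show a percentage and an ETA.
    pv -s "$(source_size "$src")" < "$src" > "$dst"
  else
    # Fallback: GNU dd only reports bytes copied and the rate.
    dd if="$src" of="$dst" bs=1M status=progress
  fi
}
```

From a root shell, the OP's copy would then be `copy_with_progress /dev/sda /dev/sdc3`.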

You'll almost certainly find that using `bs` on the dd command hugely speeds it up, e.g. `dd if=/dev/blah of=/tmp/blah bs=100M` to transfer 100M blocks at a time. – Sirex – 2018-01-31T01:49:15.330

@Sirex Of course you have to set `bs` to optimize the transfer rate for your hardware... The answer just repeats the OP's command line. :-) – Hastur – 2018-01-31T08:05:27.830
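Rather than guessing a block size, you can time a few candidates on your own hardware. A rough sketch, assuming GNU dd and GNU date (for `%N` nanoseconds); `bench_bs` is a hypothetical helper. Point DST at a scratch file on the destination disk rather than at the device itself, since writing to the device overwrites it. `conv=fsync` makes each timing include flushing the data to storage, but after the first pass the source will be served from the page cache, so treat the numbers as indicative only:

```bash
#!/usr/bin/env bash
# Hypothetical helper: copy SRC to DST once per block size and print the time taken.
# Assumes GNU dd (conv=fsync, status=none) and GNU date (%N).
bench_bs() {
  local src=$1 dst=$2
  shift 2
  local bs start end
  for bs in "$@"; do
    start=$(date +%s%N)
    # conv=fsync: flush DST to storage before dd exits, so the time includes the writes.
    dd if="$src" of="$dst" bs="$bs" conv=fsync status=none || return 1
    end=$(date +%s%N)
    printf '%8s %8d ms\n' "$bs" $(( (end - start) / 1000000 ))
  done
}

# Example (the 256 MiB size and the paths are arbitrary choices):
# scratch=$(mktemp); head -c 268435456 /dev/urandom > "$scratch"
# bench_bs "$scratch" /mnt/target/bench.tmp 4k 64k 128k 1M 100M
```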

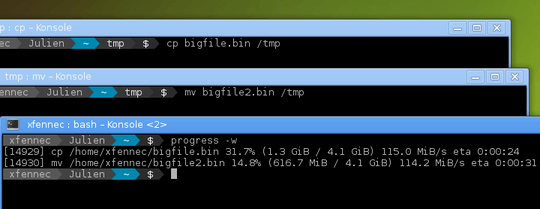

Note that you can also just do `pv /dev/sda` directly if you want. Also, on the output, you may want to add `oflag=sync`; otherwise the command completes really quickly and then sits there silently flushing for ages. The sync flag makes it wait for the data to actually be written to disk. – MathematicalOrchid – 2018-01-31T09:15:13.173

Excellent answer. Do note that signalling USR1 to dd can take a while to process. I've done it while writing to a USB drive, and the answer only appeared after the writes had finished. – Criggie – 2018-02-01T01:25:12.923
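Combining MathematicalOrchid's two suggestions might look like the sketch below: pv reads the source directly, so it can usually determine the size and show a percentage and ETA, while dd writes with `oflag=sync` so the progress reflects data actually reaching the destination. `iflag=fullblock` (a GNU dd flag) is an addition not mentioned in the comment: reads from a pipe return small chunks, and without it every small chunk would become its own synchronous write. `pv_sync_copy` is a hypothetical name:

```bash
#!/usr/bin/env bash
# Hypothetical helper: pv shows progress/ETA for SRC, dd writes DST synchronously.
# Assumes pv and GNU dd are installed. The ( ) body keeps pipefail local.
pv_sync_copy() (
  set -o pipefail
  # oflag=sync: each write() returns only once the data is on the device.
  # iflag=fullblock: gather full 1M blocks from the pipe before each write.
  pv "$1" | dd of="$2" bs=1M iflag=fullblock oflag=sync status=none
)

# Usage (as root, with the OP's devices): pv_sync_copy /dev/sda /dev/sdc3
```

If per-write syncing turns out too slow, GNU dd's `conv=fsync` instead flushes once before exiting, though the final flush then happens after pv's bar has already reached 100%.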

@Hastur bs doesn't matter in the OP's case; it only matters if, e.g., he is piping his dd B(uffer)S(ize) to something data-mangling, like gz, xz, gpg, foo... bs is internally 64k and there'll be no extra love for making bs bigger when just writing to a similar drive; if anything, there will be a delay between reads and writes. – user2497 – 2018-02-01T06:03:17.670
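The case user2497 describes, where `bs` does matter, is a pipeline through a compressor. A minimal sketch, assuming gzip (xz or gpg would slot in the same way); `image_to_gz` and `gz_to_image` are hypothetical names, the 1M block size is an arbitrary choice, and `/mnt/backup` in the usage line is a hypothetical path. For reference, GNU dd's documented default for `ibs` and `obs` is 512 bytes:

```bash
#!/usr/bin/env bash
# Hypothetical helpers: save a device or file as a gzip-compressed image, and restore it.
# The ( ) bodies keep pipefail local to each call.
image_to_gz() (
  set -o pipefail
  # Read the source in 1M blocks and let gzip compress the stream.
  dd if="$1" bs=1M status=none | gzip -c > "$2"
)

gz_to_image() (
  set -o pipefail
  # iflag=fullblock makes dd assemble full 1M blocks from the pipe before writing.
  gzip -dc "$1" | dd of="$2" bs=1M iflag=fullblock status=none
)

# Usage (as root): image_to_gz /dev/sdb /mnt/backup/sdb.img.gz
```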

@Sirex: `100M` is way too large, especially if writing to a pipe. Pipe buffers are much smaller than 100MB, so there's no point making a `write()` system call with that size; it will return early. `bs=1M` is OK. I often use `bs=128k`, which is half the L2 cache size on my CPU; it's a tradeoff between more system calls and reading memory that's still hot in cache from being written. – Peter Cordes – 2018-02-01T10:53:18.697

@Criggie: that's maybe because `dd` had already finished all the `write()` system calls, and `fsync` or `close` was blocked waiting for the writes to reach disk. With a slow USB stick, the default Linux I/O buffer thresholds for how large dirty write buffers can be lead to qualitatively different behaviour than with big files on fast disks, because the buffers are as big as what you're copying and it still takes noticeable time. – Peter Cordes – 2018-02-01T11:00:42.013

Great answer. However, I do want to note that in OpenBSD the right kill signal is SIGINFO, not SIGUSR1. Using -USR1 in OpenBSD will just kill dd. So before you try this out in a new environment, on a transfer that you don't want to interrupt, you may want to familiarize yourself with how the environment acts (on a safer test). – TOOGAM – 2018-02-02T05:17:06.217
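Peter Cordes's point about pipe buffers can be observed directly: when dd reads from a pipe, each `read()` returns at most what the pipe currently holds (64 KiB by default on Linux), far less than a multi-megabyte `bs`, so GNU dd counts every read as a partial record (`0+N records in`). GNU dd's `iflag=fullblock` makes it keep reading until each block is full. A small sketch, assuming GNU dd; `pipe_read_demo` is a hypothetical name and 4M × 2 is an arbitrary size:

```bash
#!/usr/bin/env bash
# Hypothetical demo: compare how GNU dd counts reads from a pipe with and
# without iflag=fullblock. Assumes GNU dd (status=none, iflag=fullblock).
pipe_read_demo() {
  local bs=$1 count=$2
  echo "default reads:"
  # Big blocks pass through a small pipe buffer, so each read() by the
  # second dd returns only part of a block: "0+N records in".
  dd if=/dev/zero bs="$bs" count="$count" status=none |
    dd of=/dev/null bs="$bs" 2>&1 | grep 'records in'
  echo "with iflag=fullblock:"
  # fullblock keeps reading until each block is complete: "COUNT+0 records in".
  dd if=/dev/zero bs="$bs" count="$count" status=none |
    dd of=/dev/null bs="$bs" iflag=fullblock 2>&1 | grep 'records in'
}

pipe_read_demo 4M 2
```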

The signals advice for `dd` is really great info, especially for servers where you can't/don't want to install `pv`. – mike – 2018-02-03T11:48:04.767
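For servers without pv, the signal approach can be automated. A minimal sketch, assuming bash; `dd_poll` and `progress_signal` are hypothetical helper names. GNU dd on Linux prints its statistics on SIGUSR1, while, per TOOGAM's comment, OpenBSD's dd expects SIGINFO and is killed by SIGUSR1; treating every non-Linux system as a SIGINFO system is an assumption. The one-second pause before the first signal matters: SIGUSR1's default action terminates a process, so signalling dd before it has installed its handler would kill it.

```bash
#!/usr/bin/env bash
# Hypothetical helpers: run dd in the background and ask it for a status report
# every INTERVAL seconds until it exits.

progress_signal() {
  # Assumption: Linux means GNU dd (SIGUSR1); anything else is treated as
  # BSD-style dd (SIGINFO), as TOOGAM describes for OpenBSD.
  if [ "$(uname -s)" = "Linux" ]; then
    echo USR1
  else
    echo INFO
  fi
}

dd_poll() {
  local interval=$1
  shift
  local sig pid
  sig=$(progress_signal)
  # Pass if= explicitly: with job control off (as in scripts), a background
  # job's standard input is /dev/null.
  dd "$@" &
  pid=$!
  # Let dd install its signal handler first; an early SIGUSR1 would kill it.
  sleep 1
  while kill -0 "$pid" 2>/dev/null; do
    kill -s "$sig" "$pid" 2>/dev/null
    sleep "$interval"
  done
  # Return dd's own exit status.
  wait "$pid"
}

# Usage (as root, with the OP's devices): dd_poll 10 if=/dev/sda of=/dev/sdc1 bs=1M
```

As Criggie and Peter Cordes note above, on a slow target the reports may lag or only appear once the writes have been flushed.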