On a (digital) TV, sharpness controls a peaking filter that enhances edges. That is not very useful when the display is used as a computer monitor.

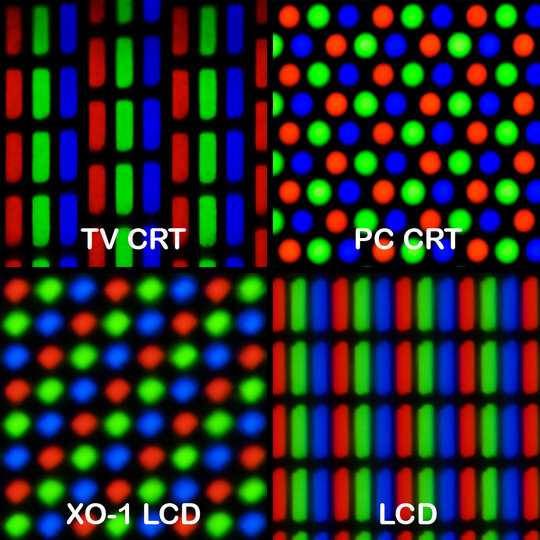

In the previous century, on a high-end analog CRT monitor, sharpness may have controlled the focus voltage of the electron gun. This affects the spot size with which the picture is drawn. Set the spot size too small (too sharp) and the line structure becomes too visible. Also there may be annoying "Moiré" interference with the structure of the shadow mask. The optimum setting depends on the resolution (sample rate) of the picture, as many CRT monitors were capable of multiple resolutions without scaling (multi-sync). Set it just sharp enough.

High-end CRT TVs had Scan Velocity Modulation, where the scanning beam is slowed down around a vertical edge, as well as horizontal and vertical peaking filters and perhaps a horizontal transient improvement circuit. Sharpness may have controlled any or all of these.

Sharpening in general enhances edges by making the dark side of the edge darker, the bright side brighter, and the middle of the edge steeper. A typical peaking filter calculates a 2nd order differential, in digital processing e.g. (-1,2,-1). Add a small amount of this peaking to the input signal. If you clip off the overshoots then it reduces to "transient improvement".
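To make that concrete, here is a minimal 1-D sketch in Python. The function names, the `amount` parameter and the sample values are my own illustration, and clipping to the local minimum/maximum of the input is just one assumed way to "clip off the overshoots"; real TV hardware will differ in the details.

```python
def peak(signal, amount=0.5):
    """Add `amount` times the (-1, 2, -1) second difference to each sample.

    The first and last samples are left untouched (no neighbour on one side).
    """
    out = list(signal)
    for i in range(1, len(signal) - 1):
        detail = -signal[i - 1] + 2 * signal[i] - signal[i + 1]
        out[i] = signal[i] + amount * detail
    return out


def transient_improve(signal, amount=0.5):
    """Peaking, then clip each sample to the range of its input neighbourhood.

    Assumption: "clipping the overshoots" is modelled as limiting the peaked
    value to the min/max of the three input samples around it.
    """
    peaked = peak(signal, amount)
    out = list(peaked)
    for i in range(1, len(signal) - 1):
        lo = min(signal[i - 1 : i + 2])
        hi = max(signal[i - 1 : i + 2])
        out[i] = min(max(peaked[i], lo), hi)
    return out


# A soft edge from dark (10) to bright (30).
soft_edge = [10, 10, 12, 20, 28, 30, 30]
peaked_edge = peak(soft_edge)                 # undershoots 10 and overshoots 30
improved_edge = transient_improve(soft_edge)  # steeper edge, no overshoot
print(peaked_edge)
print(improved_edge)
```

With these numbers the peaked edge dips to 9 on the dark side and rises to 31 on the bright side, while the clipped version goes straight from 10 to 20 to 30: a steeper edge without the halo.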

On some digital devices, the sharpness of a scaler may be controlled, e.g. in my digital satellite TV receivers. This sets the bandwidth of the polyphase filters of the scaler, which converts from the source resolution to the display resolution. Scaling cannot be perfect; it is always a compromise between artefacts and sharpness. Set it too sharp and annoying contouring and aliasing become visible.
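As a rough illustration of what a scaler sharpness control does, the following Python sketch upscales one line of pixels with a Hann-windowed sinc kernel whose cutoff is set by a `bandwidth` parameter. Everything here (the names `scale_1d`, `bandwidth`, `taps`, the choice of window) is an assumption for illustration, not the design of any particular receiver or monitor, and it only covers upscaling.

```python
import math


def sinc(x):
    """Normalized sinc: sin(pi x) / (pi x), with sinc(0) = 1."""
    return 1.0 if x == 0 else math.sin(math.pi * x) / (math.pi * x)


def hann(x):
    """Hann window on (-1, 1), zero outside."""
    return 0.5 * (1.0 + math.cos(math.pi * x)) if abs(x) < 1 else 0.0


def scale_1d(signal, out_len, bandwidth=1.0, taps=4):
    """Upscale `signal` to `out_len` samples (out_len >= len(signal)).

    bandwidth: relative cutoff in (0, 1]; 1.0 is the sharpest setting,
               lower values give a softer picture.
    taps:      kernel half-width in source samples.
    The weights are recomputed for every output position, which is what a
    polyphase filter bank would precompute per phase.
    """
    n = len(signal)
    step = n / out_len
    out = []
    for j in range(out_len):
        center = (j + 0.5) * step - 0.5      # output pixel centre in source coordinates
        first = math.floor(center) - taps + 1
        acc = 0.0
        weight_sum = 0.0
        for k in range(first, first + 2 * taps):
            d = k - center
            w = sinc(bandwidth * d) * hann(d / taps)
            idx = min(max(k, 0), n - 1)      # repeat edge pixels at the borders
            acc += w * signal[idx]
            weight_sum += w
        out.append(acc / weight_sum)         # normalize so flat areas stay flat
    return out


line = [0, 0, 0, 0, 100, 100, 100, 100]
sharp = scale_1d(line, 12, bandwidth=1.0)
soft = scale_1d(line, 12, bandwidth=0.6)
```

Comparing `sharp` and `soft` around the step shows the trade-off: the higher bandwidth keeps the edge steeper but rings more, the lower one is smoother but blurrier.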

This may be the most plausible answer to your question, but only if the monitor is scaling. It would do nothing for an unscaled 1:1 mode.

Source: 31 years of experience in signal processing for TV.

Funny, last time I saw sharpness was on a CRT. Back then it let you control the timing between red, green, and blue pulses to line them up appropriately. If it was slightly too fast or too slow, the three phosphors would be kind of smeared. If you lined them up just right, the image was as sharp as possible. Obviously that doesn’t apply at all to modern displays. – Todd Wilcox – 2018-01-05T04:31:06.530

You're commenting on a lot of answers that they don't answer your question; it might help if you said why they don't. It's worth noting that the monitor is not required by law to display what the software asks it to. It could, for example, change every third pixel to green, or invert them. So the reason the monitor can "fuzzify" images is that enough customers wanted it. It could just as easily stretch the image, but no one wanted that, so it doesn't. So there are only 2 types of answer: how is this technically done, or why do people want this? – Richard Tingle – 2018-01-05T10:25:14.783

You have two questions: "What sense does it make...?" and "Where exactly does the degree of freedom...". I think that is causing confusion. – Christian Palmer – 2018-01-05T10:31:17.170

@RichardTingle: I suppose the answer to "why do people want this" could potentially also answer my question, yeah. Though I'm more trying to understand what sense this makes from a technical perspective. To give you an example, it actually makes sense to me to have a sharpness parameter for displaying RAW photos, because the mapping from the sensor data to pixels is inherently an under-determined problem (i.e. it doesn't have a unique solution) with degrees of freedom like sharpness, noise level, etc. But bitmaps are pixels, so where is the room for adjusting things like "sharpness"? – user541686 – 2018-01-05T10:32:49.273

@ChristianPalmer: Possibly? They're the same question to me though. See here as to how/why. – user541686 – 2018-01-05T10:35:43.357

@ToddWilcox: For what it's worth, at least HP EliteDisplay monitors (LCD) have a "Sharpness" setting, and it works even with a digital input (i.e. not VGA). – sleske – 2018-01-05T11:35:42.700

I might add that I have had to adjust "Sharpness" to compensate for users who have eyeglasses or contacts in some cases. – PhasedOut – 2018-01-05T19:13:40.500

Digital cameras also let you adjust the "sharpness" of the image. Assuming the camera is capable of taking an in-focus image, why would this be needed? User preference... – JPhi1618 – 2018-01-05T20:42:34.197

Most modern displays with analog inputs can adjust the clock and phase automatically to ensure that the image is sharp, and I assume this is what you're referring to. If it's not an analog input, then it's almost certainly some form of post-processing. – bwDraco – 2018-01-06T18:52:09.283

Another topic that should not be forgotten here is accessibility. You might not care about being able to set sharpness/brightness/contrast but there are certainly people who require being able to set it to make sure that they can see the image correctly. – Bobby – 2018-01-07T10:02:38.493

Too much sharpness can be tiring to the eyes, especially if you have sensitive eyes. Personally, I soften the appearance most of the time; maybe because I have pretty sensitive eyes (able to see light flicker at frequencies invisible to most other people), and softening might be a method to reduce sensory load. – phresnel – 2018-01-08T10:34:47.703

One-word answer: legacy. Longer: CRTs needed the setting, now it's a matter of aesthetics. – Chuck Adams – 2018-01-09T23:28:38.623