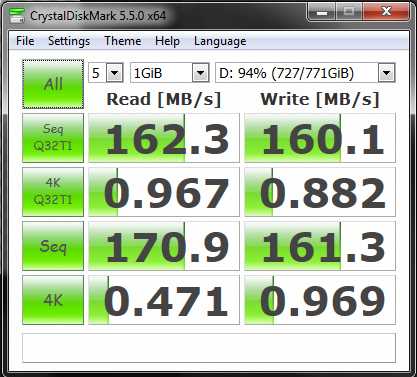

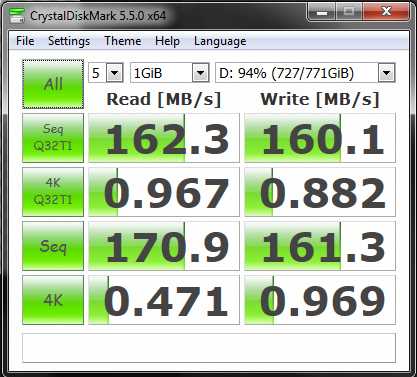

What is wrong with my speed at 4K? Why is it so slow? Or is it supposed to be like that?

Is that speed okay? Why do I have such low speed at 4K?

What you are running into is typical of mechanical HDDs, and one of the major benefits of SSDs: HDDs have terrible random access performance.

In CrystalDiskMark, "Seq" means sequential access while "4K" means random access (in chunks of 4kB at a time, because single bytes would be far too slow and unrealistic1).

There are, broadly, two different ways you might access a file.

Sequential access means you read or write the file more or less one byte after another. For example, if you're watching a video, you would load the video from beginning to end. If you're downloading a file, it gets downloaded and written to disk from beginning to end.

From the disk's perspective, it's seeing commands like "read block #1, read block #2, read block #3, read block #4"1.

Random access means there's no obvious pattern to the reads or writes. This doesn't have to mean truly random; it really means "not sequential". For example, if you're starting lots of programs at once they'll need to read lots of files scattered around your drive.

From the drive's perspective, it's seeing commands like "read block #56, read block #5463, read block #14, read block #5".

I've mentioned blocks a couple of times. Because computers deal with such large sizes (1 MB ≈ 1,000,000 B), even sequential access would be inefficient if you had to ask the drive for each individual byte; there's too much chatter. In practice, the operating system requests data from the disk in blocks.

A block is just a range of bytes; for example, block #1 might be bytes #1-#512, block #2 might be bytes #513-#1024, etc. These blocks are either 512 bytes or 4096 bytes in size, depending on the drive. But even when dealing with blocks rather than individual bytes, sequential block access is faster than random block access.
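A minimal sketch of that byte-to-block mapping, using the same 1-based numbering as the example above (real LBA numbering starts at 0). The names `block_of` and `byte_range` are illustrative only, not part of any real API.

```python
def block_of(byte_number: int, block_size: int = 512) -> int:
    """Return the 1-based block number that holds a 1-based byte number."""
    if byte_number < 1:
        raise ValueError("byte numbers start at 1 in this example")
    return (byte_number - 1) // block_size + 1


def byte_range(block_number: int, block_size: int = 512) -> tuple[int, int]:
    """Return the first and last 1-based byte numbers covered by a block."""
    first = (block_number - 1) * block_size + 1
    return first, first + block_size - 1


print(byte_range(1))         # (1, 512)
print(byte_range(2))         # (513, 1024)
print(block_of(513))         # 2
print(block_of(5000, 4096))  # 2
```

With a 4096-byte block size the same arithmetic applies; only the divisor changes.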

Sequential access is generally faster than random access. This is because sequential access lets the operating system and the drive predict what will be needed next, and load up a large chunk in advance. If you've requested blocks "1, 2, 3, 4", the OS can guess you'll want "5, 6, 7, 8" next, so it tells the drive to read "1, 2, 3, 4, 5, 6, 7, 8" in one go. Similarly, the drive can read off the physical storage in one go, rather than "seek to 1, read 1,2,3,4, seek to 5, read 5,6,7,8".
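A minimal sketch of why the sequential case is cheaper: the hypothetical helper `coalesce` merges requested block numbers into contiguous runs. Under the simplifying assumption that each run costs one seek, the sequential pattern needs a single seek while a scattered pattern needs one per block. Real operating systems and drive firmware do this differently and far more cleverly.

```python
def coalesce(blocks: list[int]) -> list[tuple[int, int]]:
    """Merge requested block numbers (in request order) into (start, length) runs.

    A new run starts whenever the next block is not directly after the previous one.
    """
    runs: list[tuple[int, int]] = []
    for block in blocks:
        if runs and block == runs[-1][0] + runs[-1][1]:
            start, length = runs[-1]
            runs[-1] = (start, length + 1)  # extend the current run
        else:
            runs.append((block, 1))  # gap: this would need a new seek
    return runs


sequential = [1, 2, 3, 4, 5, 6, 7, 8]
scattered = [8, 34, 76, 996, 112, 644, 888, 341]

print(coalesce(sequential))       # [(1, 8)]: one seek, one long read
print(len(coalesce(scattered)))   # 8: a seek for every block
```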

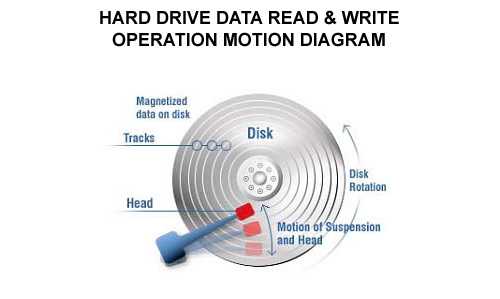

Oh, I mentioned seeking to something. Mechanical HDDs have a very slow seek time because of how they're physically laid out: they consist of a number of heavy metallised platters spinning around, with physical arms moving back and forth to read them. Here is a video of an open HDD where you can see the spinning disks and moving arms.

Image from http://www.realtechs.net/data%20recovery/process2.html

This means that at any one time, only the bit of data under the head at the end of the arm can be read. The drive needs to wait for two things: it needs to wait for the arm to move to the right ring ("track") of the disk, and also needs to wait for the disk to spin around so the needed data is under the reading head. This is known as seeking2. Both the spinning and the moving arms take physical time to move, and they can't be sped up by much without risking damage.

This typically takes a very, very long time, far longer than the actual reading. We're talking >5 ms just to get to where the requested data lives, while the actual reading averages out to about 0.00000625 ms per sequentially read byte (or 0.0032 ms per 512 B block).

Random access, on the other hand, doesn't have that benefit of predictability. So if you want to read 8 random blocks, maybe blocks "8, 34, 76, 996, 112, 644, 888, 341", the drive needs to go "seek to 8, read 8, seek to 34, read 34, seek to 76, read 76, ...". Notice how it needs to seek again for every single block? Instead of an average of 0.0032 ms per sequential 512 B block, it's now an average of (5 ms seek + 0.0032 ms read) = 5.0032 ms per block. That's many, many times slower: well over a thousand times slower, in fact.
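The seek-versus-read arithmetic can be checked with a few lines of Python. The 5 ms seek and 6.25 ns per byte (about 160 MB/s) are the same illustrative figures used in the text, not measurements of any particular drive.

```python
SEEK_MS = 5.0               # assumed average seek + rotational wait per random access
READ_MS_PER_BYTE = 6.25e-6  # assumed transfer time per byte (~160 MB/s)
BLOCK = 512                 # bytes per block


def sequential_ms_per_block() -> float:
    # Sequential: the head is already in place, so only transfer time counts.
    return BLOCK * READ_MS_PER_BYTE


def random_ms_per_block() -> float:
    # Random: every block pays a full seek before it can be transferred.
    return SEEK_MS + BLOCK * READ_MS_PER_BYTE


seq = sequential_ms_per_block()
rnd = random_ms_per_block()
print(f"sequential: {seq:.4f} ms/block")  # sequential: 0.0032 ms/block
print(f"random:     {rnd:.4f} ms/block")  # random:     5.0032 ms/block
print(f"ratio:      {rnd / seq:.1f}x")    # ratio:      1563.5x
```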

Fortunately, we have a solution now: SSDs.

An SSD, a solid state drive, is, as its name implies, solid state. That means it has no moving parts. Moreover, the way an SSD is laid out means there is (effectively3) no need to look up the location of a byte; it already knows. That's why an SSD has much less of a performance gap between sequential and random access.

There still is a gap, but that can be largely attributed to not being able to predict what comes next and preloading that data before it's asked for.

1 More accurately, with LBA, drives are addressed in blocks of 512 bytes (512n/512e) or 4 KB (4Kn) for efficiency reasons. Also, real programs almost never need just a single byte at a time.

2 Technically, seek only refers to the arm travel. The waiting for the data to rotate under the head is rotational latency on top of the seek time.

3 Technically, they do have lookup tables and remap for other reasons, e.g. wear levelling, but these are completely negligible compared to an HDD...

@KamilMaciorowski I'm actually rethinking that simplification now, because it does throw off my seek+read time calculation. Oh well. It's not too important for the concepts. – Bob – 2017-12-11T05:58:24.407

You should correct the random part: "Notice how it needs to look for every single byte?": replace byte with block (and change the example accordingly). The drive seeks to the 4K part (which could further be dispersed into 512-byte chunks, but no lower than this). It does not seek between every byte! It seeks between blocks if the next block is not right behind (which happens a lot on fragmented disks). And seeking (moving the head around the platter, and waiting for the block to pass underneath it) is what takes very long (a few milliseconds). – Olivier Dulac – 2017-12-11T09:34:52.883

A small side note on 4 KiB/512 B: 4 KiB is also the page size on, well, almost everything, so the OS cache is likely to read ahead a full 4 KiB block even if LBA drives read in 512 B chunks. Also, I don't think the problem is that an HDD needs to 'find' any byte any more than an SSD does, but rather that it needs to physically rotate to the correct position. If you access a block again, you need to seek to it again, as the HDD is spinning continuously. Any block remapping is likely to be a secondary effect (and a remapped block is usually just after the damaged one anyway, I believe, to minimize seeking). – Maciej Piechotka – 2017-12-11T09:35:10.077

(Possibly a complete side note: I'm not sure about NAND/NOR, but at least DDR addressing is also not completely random as the name would indicate, but works in 'bursts' of addresses. In most cases this is 64 B, due to it being the size of a cache line on most CPUs, but it can be much larger for other applications.) – Maciej Piechotka – 2017-12-11T09:37:50.547

@OlivierDulac I'm aware it's not completely accurate, but I thought dealing with bytes would be easier for the OP to understand. That's why the footnote's there. I might still revise it to blocks when I have a bit of time, but then I'd need to properly explain the what and the why :\ – Bob – 2017-12-11T09:42:00.023

@Bob maybe just s/byte/block/ in the whole answer could be enough? The byte 67 becomes block (number) 67, and it works too? – Olivier Dulac – 2017-12-11T09:49:25.377

@OlivierDulac I still think introducing blocks is potentially confusing, but I've tried to explain it. Answer updated. – Bob – 2017-12-11T09:58:21.167

@MaciejPiechotka I was using "find" in the layman's sense - of course the drive internally knows which platter, track and sector it's on, but it still needs to physically 'find' it. I've updated the answer to use the more technical seek. Not sure if that'll be easier or harder to understand - again, from the perspective of someone who knows nothing about drives' internals at all. – Bob – 2017-12-11T10:00:18.887

Rotating disk random access performance is not "terrible" in the grand scheme of things. It certainly is poor compared to solid-state memories, but 5-10 milliseconds is still a lot better than seek times on the media that rotating disks replaced. With tapes, seek times were frequently measured in minutes. Now that's terrible for random access (and still better than sneaker-net to the library). – Ben Voigt – 2017-12-11T17:30:56.080

As already pointed out by other answers, "4K" almost certainly refers to random access in blocks of size 4 KiB.

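Every time a hard disk (not an SSD) is asked to read or write data, there are two significant delays involved:

- seek latency: the time it takes to move the read/write head to the right track; and
- rotational latency: the time spent waiting for the platter to spin the desired sector under the head.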

Both of these are of a relatively constant amount of time for any given drive. Seek latency is a function of how fast the head can be moved and how far it needs to be moved, and rotational latency is a function of how fast the platter is spinning. What's more, they haven't changed much over the last few decades. Manufacturers actually used to quote average seek times, e.g. in advertisements; they pretty much stopped doing that when there was little or no development in the area. No manufacturer, especially in a high-competition environment, wants their products to look no better than those of their competitors.

A typical desktop hard disk spins at 7200 rpm, whereas a typical laptop drive might spin at around 5000 rpm. This means that each second, it goes through a total of 120 revolutions (desktop drive) or about 83 revolutions (laptop drive). Since on average the disk will need to spin half a revolution before the desired sector passes under the head, we can expect the disk to be able to service approximately twice that many I/O requests per second, assuming that little or no seeking is needed between requests.

So we should expect to be able to perform on the order of 200 I/O per second if the data it is being asked to access (for reading or writing) is relatively localized physically, resulting in rotational latency being the limiting factor. In the general case, we would expect the drive to be able to perform on the order of 100 I/O per second if the data is spread out across the platter or platters, requiring considerable seeking and causing the seek latency to be the limiting factor. In storage terms, this is the "IOPS performance" of the hard disk; this, not sequential I/O performance, is typically the limiting factor in real-world storage systems. (This is a big reason why SSDs are so much faster to use: they eliminate the rotational latency, and vastly reduce the seek latency, as the physical movement of the read/write head becomes a table lookup in the flash mapping layer tables, which are stored electronically.)
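The rotational arithmetic above fits in a small sketch. It assumes, as the text does, that an average request waits half a revolution and, for this upper bound, needs no significant seek; the function names are illustrative.

```python
def revolutions_per_second(rpm: float) -> float:
    return rpm / 60.0


def avg_rotational_latency_ms(rpm: float) -> float:
    # On average the wanted sector is half a revolution away from the head.
    return 0.5 * 1000.0 / revolutions_per_second(rpm)


def rotational_iops(rpm: float) -> float:
    # Upper bound when rotation, not seeking, is the only delay per request.
    return 1000.0 / avg_rotational_latency_ms(rpm)


for rpm in (7200, 5000):
    print(f"{rpm} rpm: {revolutions_per_second(rpm):.1f} rev/s, "
          f"{avg_rotational_latency_ms(rpm):.2f} ms avg wait, "
          f"~{rotational_iops(rpm):.0f} IOPS")
# 7200 rpm: 120.0 rev/s, 4.17 ms avg wait, ~240 IOPS
# 5000 rpm: 83.3 rev/s, 6.00 ms avg wait, ~167 IOPS
```

Adding an average seek time to the per-request latency is what pulls these figures down toward the ~100 IOPS of the seek-bound case.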

Writes are typically slower when there is a cache flush involved. Normally operating systems and hard disks try to reorder random writes to turn random I/O into sequential I/O where possible, to improve performance. If there is an explicit cache flush or write barrier, this optimization is eliminated for the purpose of ensuring that the state of the data in persistent storage is consistent with what software expects. Basically the same reasoning applies during reading when there is no disk cache involved, either because none exists (uncommon today on desktop-style systems) or because the software deliberately bypasses it (which is often done when measuring I/O performance). Both of those reduce the maximum potential IOPS performance to that of the more pessimistic case, or 120 IOPS for a 7200 rpm drive.
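To illustrate the reordering idea in the simplest possible way, the sketch below compares how far the head would travel (in blocks) when a queue of pending writes is issued in software order versus sorted by block number. This is a toy model that assumes travel distance is what matters; real I/O schedulers and drive firmware are considerably more sophisticated, and a cache flush or write barrier limits how much of the queue may be reordered.

```python
def head_travel(start: int, order: list[int]) -> int:
    """Total distance (in blocks) the head moves to visit blocks in the given order."""
    total, position = 0, start
    for block in order:
        total += abs(block - position)
        position = block
    return total


pending = [5463, 14, 5, 56, 5470, 20]  # writes in the order the software issued them
head_at = 0                            # assumed starting head position

print(head_travel(head_at, pending))          # 21836: issue order, back and forth
print(head_travel(head_at, sorted(pending)))  # 5470: reordered into one sweep
```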

This happens to match your numbers almost exactly. Random I/O with small block sizes is an absolute performance killer for rotational hard disks, which is also why it's a relevant metric.
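Benchmarks usually report the 4K result in MB/s rather than IOPS, but the two are related by the transfer size. A quick sketch, assuming 4 KiB (4096-byte) transfers and decimal megabytes (10^6 bytes); whether a given tool reports MB or MiB is an assumption worth checking.

```python
TRANSFER_BYTES = 4096  # assumed 4 KiB per random I/O


def mb_per_second(iops: float, transfer_bytes: int = TRANSFER_BYTES) -> float:
    # Throughput = operations per second x bytes per operation, in 10^6 bytes/s.
    return iops * transfer_bytes / 1e6


def iops_from_mb(mb_s: float, transfer_bytes: int = TRANSFER_BYTES) -> float:
    # Inverse: how many 4 KiB operations per second a MB/s figure implies.
    return mb_s * 1e6 / transfer_bytes


print(f"{mb_per_second(120):.2f} MB/s")  # 0.49 MB/s
print(f"{mb_per_second(240):.2f} MB/s")  # 0.98 MB/s
```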

As for purely sequential I/O, throughput in the range of 150 MB/s isn't at all unreasonable for modern rotational hard disks. But very little real-world I/O is strictly sequential, so in most situations, purely sequential I/O performance becomes more of an academic exercise than an indication of real-world performance.

This is a great answer, and reads so much better than mine :) Just a small note: at least Seagate still specs average seek latency in their datasheets. WD doesn't seem to. – Bob – 2017-12-13T05:58:40.527

@Bob Thank you. I actually meant in advertisements and similar; I have edited the answer to clarify that. I think it's safe to say that very few people read the datasheets, even though doing so probably would be a sobering experience for many... – a CVn – 2017-12-13T08:31:42.350

4K refers to random I/O. This means the disk is being asked to access small blocks (4 KB in size) at random points within the test file. This is a weakness of hard drives; the ability to access data across different regions of the disk is limited by the speed at which the disk is rotating and how quickly the read-write heads can move. Sequential I/O, where consecutive blocks are accessed, is much easier because the drive can simply read or write the blocks as the disk is spinning.

A solid-state drive (SSD) has no such problem with random I/O, as all it needs to do is look up where the data is stored in the underlying memory (typically NAND flash, though it can also be 3D XPoint or even DRAM) and read or write the data at the appropriate location. SSDs are entirely electronic and do not need to wait on a rotating disk or a moving read-write head to access data, which makes them much faster than hard drives in this regard. This is why upgrading to an SSD can dramatically increase system performance.
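To make the "look up where the data is stored" step concrete, here is a hypothetical, greatly simplified mapping table. It assumes each write goes to a fresh physical page and the table is updated (one simple form of the remapping flash controllers do, e.g. for wear levelling); real controller firmware is far more complex. The point is that a read is a single table lookup whether the access pattern is sequential or random.

```python
class ToyFTL:
    """Hypothetical, greatly simplified flash translation layer."""

    def __init__(self) -> None:
        self.mapping: dict[int, int] = {}  # logical page -> physical page
        self.flash: dict[int, bytes] = {}  # physical page -> stored data
        self.next_free = 0                 # next unwritten physical page

    def write(self, logical: int, data: bytes) -> None:
        # Place the data on a fresh physical page and point the table at it;
        # the old page (if any) is simply left behind in this toy model.
        physical = self.next_free
        self.next_free += 1
        self.flash[physical] = data
        self.mapping[logical] = physical

    def read(self, logical: int) -> bytes:
        # One table lookup, no moving parts: the cost does not depend on access order.
        return self.flash[self.mapping[logical]]


ftl = ToyFTL()
ftl.write(7, b"hello")
ftl.write(7, b"world")  # remapped to a new physical page
print(ftl.read(7))      # b'world'
print(ftl.mapping[7])   # 1
```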

Side note: sequential I/O performance on an SSD is often much higher than on a hard drive as well. A typical SSD has several NAND chips connected in parallel to the flash memory controller, and can access them simultaneously. By spreading data across these chips, a drive layout akin to RAID 0 is achieved, which greatly increases performance. (Note that many newer drives, especially cheaper ones, use a type of NAND called TLC NAND which tends to be slow when writing data. Drives with TLC NAND often use a small buffer of faster NAND to provide higher performance for smaller write operations but can slow down dramatically once that buffer is full.)
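A toy model of the RAID 0-like layout: consecutive logical pages are spread round-robin across several NAND chips, so a sequential run of pages keeps every chip busy at once. The chip count and the round-robin policy are assumptions for illustration; real controllers use their own, usually undocumented, mapping.

```python
NUM_CHIPS = 4  # assumed number of NAND chips the controller can access in parallel


def locate(logical_page: int, num_chips: int = NUM_CHIPS) -> tuple[int, int]:
    """Map a logical page to (chip, page-within-chip) using simple striping."""
    return logical_page % num_chips, logical_page // num_chips


def parallel_rounds(pages: list[int], num_chips: int = NUM_CHIPS) -> int:
    """Rounds needed if each chip can serve one page per round."""
    per_chip = [0] * num_chips
    for page in pages:
        chip, _ = locate(page, num_chips)
        per_chip[chip] += 1
    return max(per_chip)


print([locate(p) for p in range(6)])
# [(0, 0), (1, 0), (2, 0), (3, 0), (0, 1), (1, 1)]
print(parallel_rounds(list(range(8))))  # 2: eight pages, four chips working at once
```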

IIRC, some NVMe SSDs even use a DRAM cache. – timuzhti – 2017-12-11T15:11:24.887

Most do. DRAM-less SSDs are kinda on the low end. – Journeyman Geek – 2017-12-12T13:54:55.627

That's normal and expected. "4K" in this context means random read/write (in blocks of 4 kilobytes, hence the "4K"), which mechanical HDDs perform terribly on. That's where you'd want an SSD. See here for a more in-depth explanation. – Bob – 2017-12-11T04:55:08.347

4 KB is used because it's the typical size of a disk cluster, and on many modern HDDs, of the actual sector (the low-level structure on the disk itself). That is, it's the smallest amount of data likely to be transferred at a time in any read or write, even if the requested data is smaller. Interesting that NO answer on this page so far even mentions clusters or sectors. – thomasrutter – 2017-12-11T22:57:40.243

@thomasrutter Because it's not relevant to the answer. The important part is that this test involves random seeking. It's not relevant (to some extent) how much data is being transferred and whether or not that's a multiple of the sector size of the disk; the important part is that the test transfers a minimal amount of data in order to measure seek performance. – Micheal Johnson – 2017-12-12T08:50:49.213

Is this test on a partition, or on the entire disk? Partition-level tests can perform much worse for 4K accesses if you have a disk with 4K physical sectors but 1K logical sectors, and misalign the partition boundary to straddle sectors. – Toby Speight – 2017-12-12T08:52:56.673

Modern partitioning tools tend to ensure that partitions start and end on a sector boundary; even 1 MB granularity is common now. Gone are the days of the old "63 512-byte sectors" offset, which would cause problems for 4K-native sectors. – thomasrutter – 2017-12-12T11:13:23.813
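A quick way to see why the old 63-sector offset hurt drives with 4K physical sectors: check whether the partition's starting byte offset is a multiple of the physical sector size. The sketch assumes 512-byte logical sectors and 4096-byte physical sectors (the common "512e" arrangement); `is_aligned` is an illustrative name.

```python
LOGICAL_SECTOR = 512    # assumed logical sector size in bytes (512e drive)
PHYSICAL_SECTOR = 4096  # assumed physical sector size in bytes


def is_aligned(start_lba: int) -> bool:
    """True if a partition starting at this logical sector begins on a physical-sector boundary."""
    return (start_lba * LOGICAL_SECTOR) % PHYSICAL_SECTOR == 0


print(is_aligned(63))    # False: 63 * 512 = 32256 bytes, not a multiple of 4096
print(is_aligned(2048))  # True: 2048 * 512 = 1 MiB
```

When the start is misaligned, every 4 KiB access straddles two physical sectors, so the drive has to touch both.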

What brand/model is this HDD? – i486 – 2017-12-13T12:18:58.970