Performance in games tends to be determined by single core speed,

In the past (DOS era games): Correct.

These days, it is no longer true. Many modern games are threaded and benefit from multiple cores. Some games are already quite happy with 4 cores and that number seems to rise over time.

whereas applications like video editing are determined by number of cores.

Sort of true.

Number of cores * times speed of the core * efficiency.

If you compare a single identical core to a set of identical cores, then you are mostly correct.

In terms of what is available on the market - all the CPUs seem to have

roughly the same speed with the main differences being more threads or

more cores. For example:

Intel Core i5 7600k, Base Freq 3.80 GHz, 4 Cores

Intel Core i7 7700k, Base Freq 4.20 GHz, 4 Cores, 8 Threads

AMD Ryzen 1600x, Base Freq 3.60 GHz, 6 Cores, 12 Threads

AMD Ryzen 1800x, Base Freq 3.60 GHz, 8 Cores, 16 Threads

Comparing different architectures is dangerous, but ok...

So why do we see this pattern of increasing cores with all cores having

the same clock speed?

Partially because we ran into a barrier. Increasing clock speed further means more power needed and more heat generated. More heat meant even more power needed. We have tried that way, the result was the horrible pentium 4. Hot and power hungry. Hard to cool. And not even faster than the smartly designed Pentium-M (A P4 at 3.0GHz was roughly as fast as a P-mob at 1.7GHz).

Since then, we mostly gave up on pushing clock speed and instead we build smarter solutions. Part of that was to use multiple cores over raw clock speed.

E.g. a single 4GHz core might draw as much power and generate as much heat as three 2GHz cores. If your software can use multiple cores, it will be much faster.

Not all software could do that, but modern software typically can.

Which partially answers why we have chips with multiple cores, and why we sell chips with different numbers of cores.

As to clock speed, I think I can identify three points:

- Low power CPUs makes sense for quite a few cases which raw speed is not needed. E.g. Domain controllers, NAS setups, ... For these, we do have lower frequency CPUs. Sometimes even with more cores (e.g. 8x low speed CPU make sense for a web server).

- For the rest, we usually are near the maximum frequency which we can do without our current design getting too hot. (say 3 to 4GHz with current designs).

- And on top of that, we do binning. Not all CPU are generated equally. Some CPU score badly or score badly in part of their chips, have those parts disabled and are sold as a different product.

The classic example of this was a 4 core AMD chip. If one core was broken, it was disabled and sold as a 3 core chip. When demand for these 3 cores was high, even some 4 cores were sold as the 3 core version, and with the right software hack, you could re-enable the 4th core.

And this is not only done with the number of cores, it also affects speed. Some chips run hotter than others. Too hot and sell it as a lower speed CPU (where lower frequency also means less heat generated).

And then there is production and marketing and that messes it up even further.

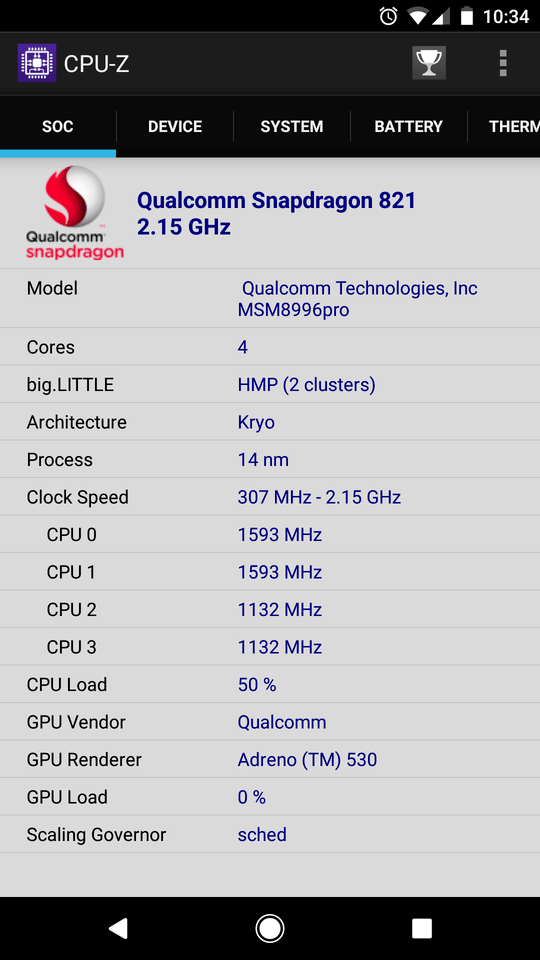

Why do we not have variants with differing clock speeds? ie. 2 'big' cores

and lots of small cores.

We do. In places where it makes sense (e.g. mobile phones), we often have a SoC with a slow core CPU (low power), and a few faster cores. However, in the typical desktop PC, this is not done. It would make the setup much more complex, more expensive, and there is no battery to drain.

15There are many mobiles with fast and slow cores, and on nearly all modern multi core servers the CPU core speeds clock independent depending on the load, some even switch off cores when not used. On a general purpose computer where you do not design for saving energy however having only two types of cores (CPU and GPU) just makes the platform more flexible. – eckes – 2017-06-24T13:29:30.547

5Before the thread scheduler could make an intelligent choice about which core to use it would have to determine if a process can take advantage of multiple cores. Doing that reliably would be highly problematic and prone to error. Particularly when this can change dynamically according to the needs of the application. In many cases the scheduler would have to make a sub optimal choice when the best core was in use. Identical cores makes things simpler, provides maximum flexibility, and generally has the best performance. – LMiller7 – 2017-06-24T15:34:49.447

33Clock speeds cannot reasonably be said to be additive in the manner you described. Having four cores running at 4 Ghz does not mean you have a "total" of 16 GHz, nor does it mean that this 16 Ghz could be partitioned up into 8 processors running at 2 Ghz or 16 processors running at 1 GHz. – Bob Jarvis - Reinstate Monica – 2017-06-24T23:43:05.780

1

In a similar manner - consider how dreadnought battleships, which had a uniform main battery, replaced the pre-dreadnought battleships which had a main battery of the largest guns, an intermediate battery that was smaller, and an anti-torpedo-boat battery which was smaller still.

– Bob Jarvis - Reinstate Monica – 2017-06-24T23:54:55.7274 cores@4GHz doesn't mean that it's running at 16GHz. Parallel processing doesn't work that way. And AFAIK AMD has supported different clock speeds for different cores for a very long time – phuclv – 2017-06-25T03:06:41.840

16The premise of the question is simply wrong. Modern CPUs are perfectly capable of running cores at different speeds – phuclv – 2017-06-25T03:17:05.373

1Voted to reopen. Also, big.LITTLE designs in ARM SoCs are common, where the smaller cores are an entirely different design (sometimes different architecture), lower clocked and much more power efficient, while the big ones are used while the screen is on for apps in the foreground. – allquixotic – 2017-06-25T03:19:08.783

4Multi-core CPU: can I say I have a 3x2.1GHz=6.3GHz CPU?, How do I calculate clock speed in multi-core processors?, – phuclv – 2017-06-25T03:21:52.477

2

see the discussions here big.LITTLE x86: Why not?, Intel and the big.LITTLE concept

– phuclv – 2017-06-25T03:46:10.747@LưuVĩnhPhúc Of course the calculation doesn't work like that - if it did the question would be comparing to equals, it is literally the entire point of the question. The example is simply for means of comparison. CPUs being capable of running different cores at different speeds would apply to any combination of cores.- The Thanks for the links nonetheless. – Jamie – 2017-06-25T13:56:16.200

1Another point to make is that most modern CPUs from Intel and AMD can dynamically scale clock speed based on the task they're doing. My 4790K usually sits at around 2GHz when I'm just browsing the web, but then kicks up to 4GHz+ when I'm gaming. – SGR – 2017-06-26T09:21:19.230

@LưuVĩnhPhúc intel have also been able to run cores at different clock speeds for a long time as well. – Baldrickk – 2017-06-26T10:19:31.413

@Baldrickk AMD are more blatant, especially with FX and very especially with unlocked "latent" cores, these were locked for a reason and generally need to be hobbled. – mckenzm – 2017-06-29T02:37:47.587

@BobJarvis: 16 GHz can't exactly be partitioned up into 8 processors of 2 GHz, of course, but can't it come pretty close? in contrast with the opposite direction? – user541686 – 2017-06-29T04:37:10.493

These days, people have such problems interpreting what

Intel Core i5-7600K, base frequency 3.80 GHz, 4 cores, 4 threadsmeans, can you imagine if you had a list of tech jargon about each individual core in the package? It would be marketing insanity, and everyone except for True Nerds would be confused. Intel has spent 30 years trying to make its chip designations accessible to consumers, which is why they (somewhat) recently moved to the i3/i5/i7 labeling, because otherwise people had no idea if a particular process was "fast" or "slow". – Christopher Schultz – 2017-06-30T13:32:46.237