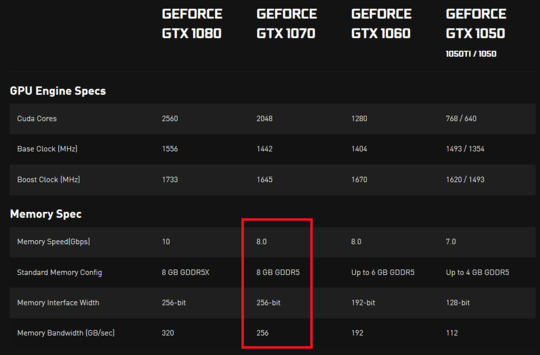

I was looking at the specs of Nvidia's 10-series graphics cards and noticed that they list both memory speed and memory bandwidth. Memory speed is given in Gbps and memory bandwidth in GB/sec. To me, it looks like memory speed divided by 8 should equal memory bandwidth, since 8 bits make up one byte and all the other units are the same, but that is not the case.

I was wondering if someone could explain what actually indicates the real data transfer rate. If there were two GPUs, one with higher memory speed (Gbps) and the other with higher memory bandwidth (GB/sec), which one could transfer more data in a fixed timeframe? Or is that impossible because the two figures are linked in some way?

Am I missing something here? I can't seem to find a good answer anywhere. What is actually important here? And why are both measurements expressed in almost the same units (since a byte is 8 bits, one measurement should equal the other if you convert both to bits or to bytes)?

Evidence here and here (click "VIEW FULL SPECS" in the SPECS section).

Thank you for answering. Good, easily understandable explanation with important details. This helped me a lot :) – BassGuitarPanda – 2017-03-07T16:04:34.883

@BassGuitarPanda you are very welcome. I admit I was a little baffled to begin with as well. They had two seemingly contradictory values for memory bandwidth which only made sense once I realised that one was a bandwidth-per-data-line figure. I learnt something myself as well, so thank you for a clear and well asked question. – Mokubai – 2017-03-07T16:23:24.640
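
To make the per-data-line point concrete, here is a minimal sketch of the arithmetic, assuming the Gbps figure is the rate of a single data line and that the bus width (which the question does not quote) multiplies it. The function name is hypothetical, and the 256-bit and 192-bit widths are the commonly published values for the GTX 1080 and GTX 1060, so treat them as assumptions rather than figures taken from this thread.

```python
def memory_bandwidth_gb_per_s(speed_gbps_per_line: float, bus_width_bits: int) -> float:
    """Total memory bandwidth in GB/s.

    speed_gbps_per_line: data rate of ONE data line, in gigabits per second
    bus_width_bits: number of data lines on the memory bus (assumed value)
    """
    total_gbps = speed_gbps_per_line * bus_width_bits  # all lines together, in Gbit/s
    return total_gbps / 8  # 8 bits per byte


# Assumed GTX 1080 figures: 10 Gbps per line on a 256-bit bus
print(memory_bandwidth_gb_per_s(10, 256))  # 320.0

# Assumed GTX 1060 figures: 8 Gbps per line on a 192-bit bus
print(memory_bandwidth_gb_per_s(8, 192))  # 192.0
```

Under that assumption, dividing the Gbps figure by 8 only gives the bandwidth of a single line, which is why it does not match the GB/sec figure. A card with a lower per-line speed can still have the higher total bandwidth if its bus is wider.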