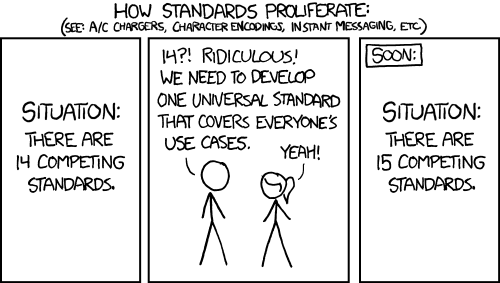

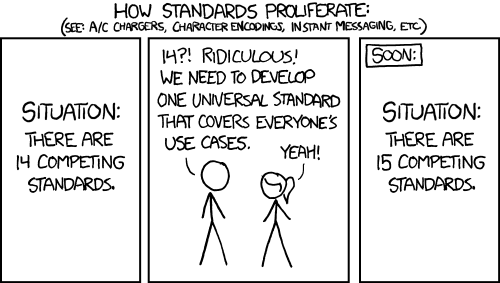

I am missing two things in the answers here, so I am adding yet another one.

Though this reminds me of adding yet another standard answer...

There are two problems here:

I have a directory containing around 280,000 files.

Most tools do not scale all that well with this number of files. Not just most Linux tools or Windows tools, but quite a lot of programs. And that might include your filesystem. The long-term solution would be 'well, do not do that then'. If you have different kinds of files, put them in different directories. If not, expect to keep running into problems in the future.
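As a rough illustration of splitting a directory up, here is a minimal sketch that sorts files into subdirectories named after their extension. Grouping by extension is only an assumption made for the example (the question does not say how the files differ), and the directory and file names are throwaway ones created with mktemp.

```shell
# Throwaway directory standing in for the overfull one (hypothetical data)
big=$(mktemp -d)
: > "$big/a.txt"
: > "$big/b.txt"
: > "$big/c.log"

# Move every regular file that has an extension into a subdirectory
# named after that extension (txt/, log/, ...)
for f in "$big"/*.*; do
    [ -f "$f" ] || continue      # skip anything that is not a regular file
    ext=${f##*.}                 # text after the last dot
    mkdir -p "$big/$ext"
    mv "$f" "$big/$ext/"
done

txt_count=$(ls "$big/txt" | wc -l)
log_count=$(ls "$big/log" | wc -l)
echo "txt: $txt_count, log: $log_count"
```

Any other grouping key (date, first letter of the name, a hash prefix) would work the same way; the point is only that no single directory ends up holding everything.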

Having said that, let's move to your actual problem:

If I use cp or mv then I get an error 'argument list too long'

This is caused by expansion of * by the shell. The expanded file names are passed to the command as arguments, the system limits the total size of an argument list, and 280,000 names exceed that limit. This means any external command with an * expanded by the shell to this many names will run into the same problem. You will either need to expand fewer names at the same time, or use a different command.
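To make the limit concrete, here is a minimal sketch, assuming a POSIX system where getconf is available. It prints the limit and then shows one way of expanding fewer names at a time: a shell loop that starts mv once per file. The directories are throwaway ones created with mktemp and stand in for the real ones.

```shell
# Upper bound, in bytes, on the arguments (plus environment) handed to a new program
arg_max=$(getconf ARG_MAX)
echo "ARG_MAX: $arg_max bytes"

# Throwaway directories with a handful of files (hypothetical stand-ins)
src=$(mktemp -d)
dest=$(mktemp -d)
for i in 1 2 3 4 5; do
    : > "$src/file$i.txt"
done

# The loop still expands *, but the list stays inside the shell.
# mv is started once per file, so no single argument list grows large.
for f in "$src"/*.txt; do
    mv "$f" "$dest"/
done

moved=$(ls "$dest" | wc -l)
echo "moved: $moved files"
```

This avoids the error but starts one mv process per file, so it has the same cost as the one-by-one form of find.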

One alternative command often used when you run into this problem is find. There are already several answers showing how to use it, so I am not going to repeat all that. I am however going to point out the difference between \; and +, since this can make a huge performance difference and hooks nicely into the previous expansion explanation.

find /path/to/search -name "*.txt" -exec command {} \;

This finds every file matching *.txt under /path/to/search and runs command on it. Notice the quotes around the *: they pass the pattern to find unexpanded. If we did not quote or escape it, the shell would try to expand it first and we could get the same error.

Lastly, I want to mention something about {}. These braces get replaced by the path of each file found by find. If you end the command with a semicolon ; (which you need to escape from the shell, hence the \; in the examples) then the results are passed one by one. This means that you will execute 280,000 mv commands, one for each file. This might be slow.

Alternatively you can end with +. This will pass as many arguments as possible at the same time. If the system can handle 2000 arguments per command, then find /path -name "*filetype" -exec some_move {} + will call the some_move command about 140 times, each time with 2000 arguments. Note the space between {} and +. That is more efficient (read: faster).
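The difference between the two endings can be seen on a small scale. This is a minimal sketch with 50 throwaway files in directories created by mktemp; the sh -c wrappers are only there to count runs and to place the destination after the file names, and are my illustration rather than something from the answers here.

```shell
# Throwaway directories with 50 empty files (hypothetical stand-ins)
src=$(mktemp -d)
dest=$(mktemp -d)
i=1
while [ "$i" -le 50 ]; do
    : > "$src/file$i.txt"
    i=$((i + 1))
done

# Ending with \; runs the command once per file: one output line per run
runs_each=$(find "$src" -name "*.txt" -exec sh -c 'echo run' sh {} \; | wc -l)

# Ending with + batches the names: 50 short names fit into a single run
runs_batch=$(find "$src" -name "*.txt" -exec sh -c 'echo run' sh {} + | wc -l)

echo "per-file mode: $runs_each runs"
echo "batched mode:  $runs_batch runs"

# Batched move: {} must come right before +, so a small sh wrapper
# puts the destination ($0) after the file names ("$@").
find "$src" -name "*.txt" -exec sh -c 'mv "$@" "$0"' "$dest" {} +
moved=$(ls "$dest" | wc -l)
echo "moved: $moved files"
```

With 280,000 files the batched form would need more than one run, but still far fewer than one per file.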

What is the total size of all files? Maybe first tar these files? – None – 2010-02-10T14:16:02.843

See this question. – Nick Presta – 2010-02-10T14:20:52.670