Short Answer

The "out of memory" pop-up says you're running up against the limit on private committed memory, a type of virtual memory, not that you're running out of RAM (physical memory). It doesn't matter how much available RAM you have; having lots of available RAM does not allow you to exceed the commit limit. The commit limit is the sum of your total RAM (whether in use or not!) plus your current pagefile size.

Conversely, what "uses up" commit limit (which is mostly the creation of process-private virtual address space) does not necessarily use any RAM! But the OS won't allow its creation unless it knows there is some place to store it if it ever needs to. So you can run into the commit limit without using all of your RAM, or even most of your RAM.

This is why you should not run without a pagefile. Note that the pagefile might not actually ever be written to! But it will still let you avoid the "low on memory" and "out of memory" errors.

Intermediate Answer

Windows does not actually have an error message for running out of RAM. What you're running out of is "commit limit".

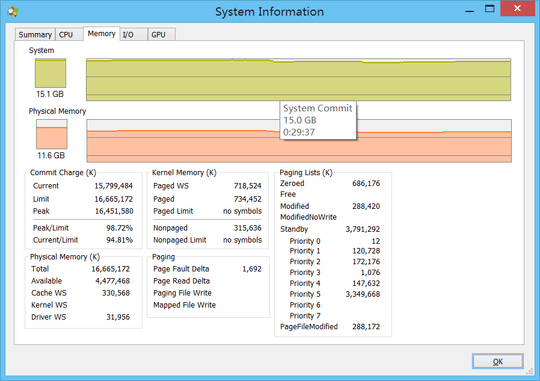

The "System" graph in that version of Process Explorer is poorly named. It should be labeled "commit charge". (In the version I have it's called "System commit". Better, but still not completely consistent.) In any case the "current" height of the graph there is what shows lower down in the text section as "Commit Charge" - "Current", and the max height of the graph represents "Commit Charge" - "Limit".

"Commit charge" refers to virtual address space that is backed by the pagefile (if you have one) - in other words, if it can't all fit in RAM, the remainder goes in the pagefile. (There are other types of v.a.s. that are either backed by other files - that's called "mapped" v.a.s. - or that must stay in RAM all the time; the latter is called "nonpageable".) The "commit limit" is the maximum that the "commit charge" can be. It is equal to your RAM size plus the pagefile size.

You apparently have no pagefile (I can tell because your commit limit equals your RAM size), so the commit limit is simply the RAM size.

Apparently various programs + the OS have used nearly all of the maximum possible commit.

This has nothing directly to do with how much RAM is free or available. Yes, you have about 4.5 GB RAM available. That doesn't mean you can exceed the commit limit. Committed memory does not necessarily use RAM and is not limited by the amount of available RAM.

You need to either re-enable the pagefile - using this much committed, I would suggest a 16 GB pagefile, because you don't want to force the OS to keep so much of that stuff in RAM, and the pagefile works best if it has a lot of free room - or else add more RAM. A LOT more. For good performance you need to have plenty of room in RAM for code and other stuff that isn't backed by the pagefile (but can be paged out to other files).

Very Long Answer

(but still a lot shorter than the memory management chapter of Windows Internals...)

Suppose a program allocates 100 MB of process-private virtual memory. This is done with a VirtualAlloc call with the "commit" option. This will result in a 100 MB increase in the "Commit charge". But this "allocation" does not actually use any RAM! RAM is only used when some of that newly-committed virtual address space is accessed for the first time.

How the RAM eventually gets used

(if it ever does)

The first-time access to the newly committed space would nearly always be a memory write (reading newly-allocated private v.a.s. before writing it is nearly always a programming error, since its initial contents are, strictly speaking, undefined). But read or write, the result, the first time you touch a page of newly-allocated v.a.s., is a page fault. Although the word "fault" sounds bad, page faults are a completely expected and even required event in a virtual memory OS.

In response to this particular type of page fault, the pager (part of the OS's memory manager, which I'll sometimes abbrev. as "Mm") will:

- allocate a physical page of RAM (ideally from the zero page list, but in any case, it comes from what Windows calls "available": The zero, free, or standby page list, in that order of preference);

- fill in a page table entry to associate the physical page with the virtual page; and finally

- dismiss the page fault exception.

After which the code that did the memory reference will re-execute the instruction that raised the page fault, and this time the reference will succeed.

We say that the page has been "faulted into" the process working set, and into RAM. In Task Manager this will appear as a one-page (4 KB) increase in the "private working set" of the process. And a one-page reduction in Available physical memory. (The latter may be tough to notice on a busy machine.)
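The three steps above can be sketched as a toy model. This is only an illustration of the bookkeeping, not how the Windows memory manager is implemented; the class and attribute names are made up for the example, and it ignores what happens when no pages are available.

```python
PAGE_SIZE = 4096  # bytes per page, as in the text


class ToyProcess:
    """Hypothetical model of demand-zero page faults in committed space."""

    def __init__(self, available_pages):
        self.available_pages = available_pages  # zero + free + standby pages
        self.page_table = {}                    # virtual page -> physical page
        self.next_physical = 0                  # stand-in for choosing a page
        self.fault_count = 0

    def touch(self, virtual_address):
        """Reference an address inside already-committed address space."""
        vpn = virtual_address // PAGE_SIZE
        if vpn not in self.page_table:
            # First touch of this page: a page fault.
            self.fault_count += 1
            self.available_pages -= 1                   # step 1: take a page from "available"
            self.page_table[vpn] = self.next_physical   # step 2: fill in the page table entry
            self.next_physical += 1
            # Step 3: the fault is dismissed and the instruction is retried.
        return self.page_table[vpn]

    @property
    def private_working_set_bytes(self):
        return len(self.page_table) * PAGE_SIZE


proc = ToyProcess(available_pages=1_000_000)
proc.touch(0x10000)  # first touch: one fault, one page taken from "available"
proc.touch(0x10008)  # same page again: no fault
proc.touch(0x11000)  # next page: a second fault
print(proc.fault_count, proc.private_working_set_bytes)  # 2 8192
```

Only the first reference to each page costs a page of RAM; later references to the same page find the page table entry already filled in.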

Note 1: This page fault did not involve anything read from disk. A never-before-accessed page of committed virtual memory does not begin life on disk; it has no place on disk to read it from. It is simply "materialized" in a previously-Available page of RAM. Statistically, in fact, most page faults are resolved in RAM, either to shared pages that are already in RAM for other processes, or to the page caches - the standby or modified lists, or as "demand zero" pages like this one.

Note 2: This takes just one page, 4096 bytes, from "Available". Never-touched-before committed address space is normally realized (faulted in) just one page at a time, as each page is "touched" for the first time. There would be no improvement, no advantage, in doing more at a time; it would just take n times as long. By contrast, when pages have to be read from disk, some amount of "readahead" is attempted because the vast majority of the time in a disk read is in per-operation overhead, not the actual data transfer. The amount "committed" stays at 100 MB; the fact that one or more pages have been faulted in doesn't reduce the commit charge.

Note 3: Let's suppose that we have 4 GB "available" RAM. That means that we could reference already-allocated but never-before-referenced committed memory about a million more times (4 GB / 4096) before we'd be out of RAM. At which point, if we have a pagefile as David Cutler and Lou Perazzoli intended, some of the longest-ago-referenced pages in RAM would be saved on disk and then made Available for use in resolving these more recent page faults. (Actually the OS would initiate RAM reclamation methods like "working set trimming" rather before that, and the actual writes to the pagefile are cached and batched on the modified page list for efficiency, and and... ) None of that would affect the "committed" count. It is relevant, though, to the "commit limit". If there isn't room for all of "committed" memory in RAM, the excess can be kept in the pagefile. Thus the size of the pagefile contributes to the "commit limit".
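The "about a million" figure in Note 3 is just the available RAM divided by the page size. A quick check, assuming 4 GB means 4 × 2^30 bytes:

```python
PAGE_SIZE = 4096               # bytes per page
available_bytes = 4 * 1024**3  # 4 GB of "available" RAM

# Each first-time touch of a committed page consumes one available page.
first_touches_left = available_bytes // PAGE_SIZE
print(first_touches_left)  # 1048576
```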

And it keeps happening...

But let's suppose we haven't done those million more references and there are still about 4GB worth of pages "available". Now let's suppose the same process - or another, doesn't matter - does another VirtualAlloc, this time of say 200 MB committed. Again, this 200 MB gets added to the commit charge, and it does not remove any RAM from available. Simply VirtualAlloc'ating address space does not use up a corresponding amount of RAM, and having low "available" RAM does not limit the amount of address space you can VirtualAlloc (nor does having high available RAM increase it).

(Well, ok... there is a tiny bit of overhead, amounting to one (pageable!) page that's used for a page table for every 2 MB (4 MB if you're on an x86, non-PAE system) of virtual address space allocated, and there is a "virtual address descriptor" of a few tens of bytes for each virtually contiguous allocated range.)
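The 2 MB and 4 MB figures follow from the page table entry size: a 4 KB page table holds 512 eight-byte entries (x64 or PAE) or 1024 four-byte entries (x86 non-PAE), and each entry maps one 4 KB page. The entry sizes are my addition, not stated above. A rough sketch of the overhead for the 200 MB allocation, assuming the range is aligned to a page-table boundary and ignoring the upper levels of the page table hierarchy:

```python
PAGE_SIZE = 4096
MB = 1024 * 1024


def page_table_pages(allocation_bytes, pte_size):
    """Lowest-level page-table pages needed to map an aligned allocation."""
    ptes_per_table = PAGE_SIZE // pte_size
    bytes_mapped_per_table = ptes_per_table * PAGE_SIZE
    # Integer ceiling division: a partly used table still costs a whole page.
    return -(-allocation_bytes // bytes_mapped_per_table)


print(page_table_pages(200 * MB, pte_size=8))  # 100 (2 MB mapped per table)
print(page_table_pages(200 * MB, pte_size=4))  # 50  (4 MB mapped per table)
```

So mapping 200 MB costs at most about 400 KB of pageable page tables on x64, and even those are only needed as the pages are actually touched.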

In this way it is possible - and common! - to use up a lot of "commit charge" while only using small amounts of RAM.

So, if "committing" virtual address space doesn't use up RAM, why does there have to be a limit?

Because the "commit charge" does represent potential future use of storage space. "Commit limit" represents the total amount of storage (RAM + pagefile space) available to hold such allocations, should they ever actually be referenced and thence need to be stored someplace.

When the Mm approves a VirtualAlloc request, it is promising - "making a commitment" - that all subsequent memory accesses to the allocated area will succeed; they may result in page faults but the faults will all be able to be resolved, because there IS adequate storage to keep the contents of all of those pages, whether in RAM or in the pagefile. The Mm knows this because it knows how much storage space there is (the commit limit) and how much has already been "committed" (the current commit charge).

(But not all of those pages have necessarily been accessed yet, so there is not necessarily an actual amount of storage in use to match the amount committed, at any given time.)

So... What about "system is out of memory"?

If you try to VirtualAlloc and the current commit charge plus the requested allocation size would take you over the commit limit, AND the OS cannot expand the pagefile so as to increase the commit limit... you get the "out of memory" pop-up, and the process sees the VirtualAlloc call FAIL. Most programs will just throw up their hands and die at that point. Some will blindly press on, assuming that the call succeeded, and fail later when they try to reference the region they thought they allocated.

Again (sorry for the repetition): it does not matter how much Available RAM you have. The OS has promised that the RAM or pagefile space will be available when it's needed, but that promise doesn't subtract from "Available". Available RAM is only used up by committed v.m. when it is referenced for the first time, which is what causes it to be "faulted in"... i.e. realized in physical memory. And simply committing (= allocating) virtual memory doesn't do that. It only takes free virtual address space and makes usable virtual address space out of it.

But in the "out of memory" case there's been an allocation request for committed memory, and the OS has added the current commit charge to the size of this new request... and found that the total is more than the commit limit. So if the OS approved this new one, and all that space was referenced after that, there would not be any real places (RAM + pagefile) to store it all.

The OS will not allow this. It will not allow more v.a.s. to be allocated than it has space to keep it in the worst case - even if all of it gets "faulted in." That is the purpose of the "commit limit".
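The accounting rule can be sketched as a toy model. It is not the real memory manager: the class and method names are hypothetical, `commit` stands in for a VirtualAlloc call with the "commit" option, and the sizes are made up. The point it illustrates is that the check compares commit charge to commit limit and never looks at available RAM.

```python
MB = 1024 * 1024
PAGE_SIZE = 4096


class ToyMemoryManager:
    """Hypothetical model of commit charge versus commit limit."""

    def __init__(self, ram_bytes, pagefile_bytes):
        self.commit_limit = ram_bytes + pagefile_bytes
        self.commit_charge = 0
        self.available_ram = ram_bytes

    def commit(self, size):
        """Return True if the allocation is approved, False if it fails."""
        if self.commit_charge + size > self.commit_limit:
            return False           # over the limit: the call fails
        self.commit_charge += size  # a promise of storage, no RAM used yet
        return True

    def touch_pages(self, count):
        """First-time references are what actually consume RAM."""
        self.available_ram -= count * PAGE_SIZE


# 8 GB of RAM, no pagefile: the commit limit is just the RAM size.
no_pagefile = ToyMemoryManager(ram_bytes=8192 * MB, pagefile_bytes=0)
first = no_pagefile.commit(7900 * MB)   # True
no_pagefile.touch_pages(1000)           # only about 4 MB of RAM is really used
second = no_pagefile.commit(600 * MB)   # False: 7900 + 600 > 8192

# Same requests with a 16 GB pagefile: both fit under the limit.
with_pagefile = ToyMemoryManager(ram_bytes=8192 * MB, pagefile_bytes=16384 * MB)
both = with_pagefile.commit(7900 * MB) and with_pagefile.commit(600 * MB)  # True
```

In the first case the second request fails even though nearly all 8 GB of RAM is still available.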

I tell you three times I tell you three times I tell you three times: The amount of "Available" RAM does not matter. That the committed virtual space is not actually using all that storage space yet, does not matter. Windows cannot "commit" to the virtual allocation unless it can all be faulted in in the future.

Note there is another type of v.a.s. called "mapped", primarily used for code and for access to large data files, but it is not charged to "commit charge" and is not limited by the "commit limit". This is because it comes with its own storage area, the files that are "mapped" to it. The only limit on "mapped" v.a.s. is the amount of disk space you have for the mapped files, and the amount of free v.a.s. in your process to map them into.

But when I look at the system, I'm not quite at the commit limit yet?

That's basically a measurement and record-keeping problem. You're looking at the system after a VirtualAlloc call has already been tried and failed.

Suppose you had just 500 MB of commit limit left and some program had tried to VirtualAlloc 600 MB. The attempt fails. Then you look at the system and say "What? There's still 500 MB left!" In fact there might be a heck of a lot more left by then, because the process in question is likely gone completely by that point, so ALL of its previously-allocated committed memory has been released.

The trouble is that you can't look back in time and see what the commit charge was at the moment the alloc attempt was made. And you also don't know how much space the attempt was for. So you can't definitively see why the attempt failed, or how much more "commit limit" would have been needed to allow it to work.

I've seen "system is running low on memory". What's that?

If in the above case the OS CAN expand the pagefile (i.e. you leave it at the default "system managed" setting, or you manage it but you set the maximum to larger than the initial, AND there is enough free disk space), and such expansion increases the commit limit sufficiently to let the VirtualAlloc call succeed, then... the Mm expands the pagefile, and the VirtualAlloc call succeeds.

And that's when you see "system is running LOW on memory". That is an early warning that if things continue without mitigation you will likely soon see an "out of memory" warning. Time to close down some apps. I'd start with your browser windows.
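The three possible outcomes of an allocation request can be sketched as follows. This is a simplified model with hypothetical names: it assumes the pagefile grows by exactly the amount needed and that disk space is not a constraint, neither of which is claimed to match real Windows behavior. All sizes are in MB.

```python
def try_commit(charge, request, ram, pagefile_current, pagefile_max):
    """Return (outcome, new_charge, new_pagefile_size) for one request."""
    if charge + request <= ram + pagefile_current:
        # Fits under the current commit limit: silent success.
        return ("ok", charge + request, pagefile_current)
    needed_pagefile = charge + request - ram
    if needed_pagefile <= pagefile_max:
        # Expansion raises the limit enough: success plus the early warning.
        return ("low", charge + request, needed_pagefile)
    # Even a fully expanded pagefile is not enough: the call fails.
    return ("out", charge, pagefile_current)


# 8 GB RAM, pagefile currently 2 GB and allowed to grow to 4 GB.
print(try_commit(9000, 500, 8192, 2048, 4096))   # ('ok', 9500, 2048)
print(try_commit(10000, 500, 8192, 2048, 4096))  # ('low', 10500, 2308)
print(try_commit(12000, 500, 8192, 2048, 4096))  # ('out', 12000, 2048)
```

Here "low" corresponds to the "running low on memory" pop-up and "out" to the "out of memory" pop-up.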

And you think that's a good thing? Pagefile expansion is evil!!!

No, it isn't. See, the OS doesn't really "expand" the existing file. It just allocates a new extent. The effect is much like any other non-contiguous file. The old pagefile contents stay right where they are; they don't have to be copied to a new place or anything like that. Since most pagefile IO is in relatively small chunks compared to the pagefile size, the chances that any given transfer will cross an extent boundary are really pretty rare, so the fragmentation doesn't hurt much unless it's really excessive.

Finally, once all processes that have "committed" space in the extension have quit (at OS shutdown if not sooner), the extents are silently freed and the pagefile will be back to its previous size and allocation - if it was contiguous before, it is so again.

Allowing pagefile expansion therefore acts as a completely free safety net: If you allow it but the system never needs it, the system will not "constantly expand and contract the pagefile" as is often claimed, so it will cost nothing. And if you do ever need it, it will save you from apps crashing with "out of virtual memory" errors.

But but but...

I've read on dozens of web sites that if you allow pagefile expansion Windows will constantly expand and contract the pagefile, and that this will result in fragmentation of the pagefile until you defrag it.

They're just wrong.

If you've never seen the "running low on memory" (or, in older versions, "running low on virtual memory") pop-up, the OS has never expanded your pagefile.

If you do see that pop-up, then that tells you your initial pagefile size is too small. (I like to set it to about 4x the maximum observed usage; i.e. the "%pagefile usage peak" perfmon counter should be under 25%. Reason: Pagefile space is managed like any other heap and it works best with a lot of free space to play in.)
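As a rough illustration of that rule of thumb (the function names and the example figure are hypothetical; the factor of 4 is the personal preference stated above, not an official recommendation):

```python
def suggested_initial_pagefile_mb(peak_usage_mb, headroom_factor=4):
    """Initial pagefile size that keeps peak usage near 1/headroom_factor."""
    return peak_usage_mb * headroom_factor


def peak_usage_percent(peak_usage_mb, pagefile_size_mb):
    """Peak usage as a percentage of the pagefile size."""
    return 100.0 * peak_usage_mb / pagefile_size_mb


peak = 1000  # MB, a made-up observed peak
size = suggested_initial_pagefile_mb(peak)
print(size, peak_usage_percent(peak, size))  # 4000 25.0
```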

But why don't they just...

One might argue that the OS should just let the allocation happen and then let the references fail if there's no RAM available to resolve the page faults. In other words, up above where we described how the initial page fault works, what if the "allocate an available physical page of RAM" (step 1) couldn't be done because there wasn't any available, and there was no place left to page anything out to make any available?

Then the pager would be unable to resolve the page fault. It would have to allow the exception (the page fault) to be reported back to the faulting thread, probably changed to some other exception code.

The design philosophy is that VirtualAlloc will return zero (technically a NULL pointer) instead of an address if you run out of commit limit, and it is entirely reasonable to expect the programmer to know that a VirtualAlloc call can fail. So programmers are expected to check for that case and do something reasonable in response (like give you a chance to save your work up to that point, and then end the program "gracefully"). (Programmers: You do check for a NULL pointer return from malloc, new, etc., yes? Then why wouldn't you from this?)

But programmers should not have to expect that a simple memory reference like

i = 0; // initialize loop counter

might fail - not if it's in a region of successfully committed address space. (Or mapped address space, for that matter.) But that's what could happen if the "allow the overcommitted allocate, let the memory reference fail" philosophy was followed.

Unfortunately, a memory reference like the one in the line of code above just does not have a convenient way of returning a bad status! They're just supposed to work, just like addition and subtraction. The only way to report such failures would be as exceptions. So to handle them the programmer would have to wrap the entire program in an exception handler. (try ... catch and all that.)

That can be done... But it would be difficult for the handler to know how to "do the right thing" in response to those exceptions, since there would be so many, many points in the code where they could arise. (Specifically, they could arise at every memory reference to VirtualAlloc'd memory, to memory allocated with malloc or new... and to all local variables as well, since the stack is VirtualAlloc'd too.)

In short, making the program fail gracefully in these cases would be very difficult.

It's pretty easy, on the other hand, to check for a NULL pointer return from VirtualAlloc (or malloc or new, for that matter, though they are not exactly the same thing) and then do something reasonable... like not try to go on and do whatever it was the program needed that virtual space for. And maybe ask the user if they want to save their work so far, if any. (Granted, far too many apps don't bother doing even that much.)

Other users of commit

Incidentally, the "commit limit" is not reduced by the OS's various allocations such as paged and nonpaged pool, the PFN list, etc.; these are just charged to commit charge as they happen. Nor is commit charge or commit limit affected by video RAM, or even video RAM "window" size, either.

Test it yourself

You can demo all of this with the testlimit tool from the SysInternals site. Option -m will allocate committed address space but will not "touch" it, so will not cause allocation of RAM. Whereas option -d will allocate and also reference the pages, causing both commit charge to increase and available RAM to decrease.

References

Windows Internals by Russinovich, Solomon, and Ionescu. There are even demonstrations allowing you to prove all of these points using the testlimit tool. However, be warned if you think this was long: the Mm chapter alone is 200 pages; the above is an EXTREMELY simplified version. (Please also glance at the "Acknowledgements" section in the Introduction.)

See also MSDN VirtualAlloc documentation

The picture he was trying to attach is here: http://tinypic.com/view.php?pic=npon5c&s=8#.Va3P3_lVhBc I approved an edit to add this URL, but that apparently isn't sufficient. – Jamie Hanrahan – 2015-07-21T05:01:49.227

@JamieHanrahan: Images should be uploaded using the Ctrl+G shortcut so that Stack Exchange can keep them from rotting over time. – Deltik – 2015-07-21T05:32:38.823

@Deltik It wasn't my image to upload. – Jamie Hanrahan – 2015-07-21T05:34:03.927

@JamieHanrahan - Once the question was submitted, the material was assigned a license; the image is the community's at this point. – Ramhound – 2015-07-22T15:43:50.003

Noted for future reference, thanks. I simply saw the link to the tinypic site in a proposed edit, and I approved that edit, but that had no effect. – Jamie Hanrahan – 2015-07-22T15:48:20.977