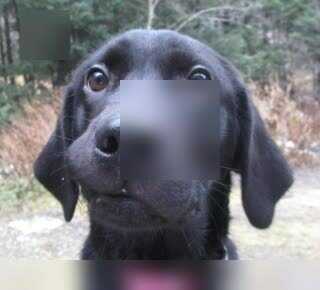

If you dislike the sharp edge of the blurred region, I made a script that layers several stages of blurring so that the edge fades out softly. It looks like this:

Instead of this:

It is a Python script:

```python
#!/usr/bin/env python3
# Writes blur.sh: an ffmpeg command that blurs a rectangle with a soft edge by
# stacking progressively smaller, progressively stronger boxblur layers.

import os
import stat


def blur_softly(matrix, video_in="video_to_be_blurred.mp4", video_out=""):
    if video_out == "":
        # e.g. "clip.mp4" -> "clip_blurred.mp4"
        root, ext = os.path.splitext(video_in)
        video_out = root + "_blurred" + ext

    s0 = "ffmpeg -i " + video_in + " -filter_complex \\\n\"[0:v]null[v_int0]; \\\n"
    s1 = ''
    a = 0  # layers generated so far, keeps the link labels unique across rows

    for m in matrix:
        blur = m[6]       # number of layers
        multiple = m[7]   # px each larger layer extends per side
        width = m[0] + blur * multiple * 2
        height = m[1] + blur * multiple * 2
        x_cord = m[2] - blur * multiple
        y_cord = m[3] - blur * multiple
        timein = m[4]
        timeout = m[5]
        step = m[8]       # boxblur radius increment per layer
        half = m[9] // 2  # margin: extra context cropped on each side
        enable = "enable='between(t," + str(timein) + "," + str(timeout) + ")'"

        for i in range(blur):
            ii = multiple * i
            # Crop the layer plus its margin from the input, blur it, then crop
            # the margin away again. The crops read [0:v] directly: an input
            # stream may be used many times, but a link label such as [v_int0]
            # can be consumed only once in a filtergraph.
            s0 += ("[0:v]crop=" + str(width - 2*ii + half*2) + ":" + str(height - 2*ii + half*2)
                   + ":" + str(x_cord + ii - half) + ":" + str(y_cord + ii - half)
                   + ",boxblur=" + str((i + 1) * step) + ":" + enable
                   + ",crop=" + str(width - 2*ii) + ":" + str(height - 2*ii)
                   + ":" + str(half) + ":" + str(half)
                   + "[blur_int" + str(i + 1 + a) + "]; \\\n")
            # Overlay the layers from the largest/weakest to the smallest/strongest.
            s1 += ("[v_int" + str(i + a) + "][blur_int" + str(i + a + 1) + "]overlay="
                   + str(x_cord + ii) + ":" + str(y_cord + ii) + ":" + enable
                   + "[v_int" + str(i + a + 1) + "]; \\\n")

        a += blur

    s = (s0 + s1 + "[v_int" + str(a) + "]null[with_subtitles]\" \\\n"
         + "-map \"[with_subtitles]\" -map 0:a -c:v libx264 -c:a copy -crf 17 -preset slow -y "
         + video_out + "\n")
    print(s)

    with open('blur.sh', 'w') as file_object:
        file_object.write(s)

    # chmod +x blur.sh
    st = os.stat('blur.sh')
    os.chmod('blur.sh', st.st_mode | stat.S_IXUSR | stat.S_IXGRP | stat.S_IXOTH)


# w,h,x,y,timein,timeout,blur,multiple,step,margin
matrix = [[729, 70, 599, 499, 14.96, 16.40, 25, 1, 1, 90], ]

blur_softly(matrix, video_in="your_video.mp4", video_out="output_video.mp4")
```

You can change the parameters in the last two lines. In the last line, the two quoted strings are the paths of your input video and of the output video (assuming they are in the working directory). In the penultimate line, whose fields are named in the comment above it:

- the first two numbers are the width and height of the area that gets the maximum blur,
- the next two are its x and y coordinates,
- the next two are the start and end times, in seconds, of the blurring,
- "25" means that 25 boxes are layered on top of each other,
- the first "1" means that each larger, less blurred box extends one pixel further on every side than the box it surrounds,
- the second "1" means that the blur radius increases by one from box to box, up to a maximum of 25,
- "90" is the margin: the extra border (half of it on each side) that is cropped around each box before blurring and removed again afterwards, so increasing it makes the blur take more of the surroundings into account. Increasing this value also fixes errors such as `Invalid chroma radius value 21, must be >= 0 and <= 20`, because boxblur rejects a radius larger than half the smaller side of the cropped picture (for the chroma radius, of the subsampled chroma planes), and a larger margin makes every crop bigger.

Running the script prints the generated command and also writes it to an executable file, `blur.sh`, so you can either run that file or copy and paste the printed command. Called with the default file names (`blur_softly(matrix)`), it produces:

```sh
ffmpeg -i video_to_be_blurred.mp4 -filter_complex \
"[0:v]null[v_int0]; \
[0:v]crop=869:210:529:429,boxblur=1:enable='between(t,14.96,16.4)',crop=779:120:45:45[blur_int1]; \
[0:v]crop=867:208:530:430,boxblur=2:enable='between(t,14.96,16.4)',crop=777:118:45:45[blur_int2]; \
[0:v]crop=865:206:531:431,boxblur=3:enable='between(t,14.96,16.4)',crop=775:116:45:45[blur_int3]; \
[0:v]crop=863:204:532:432,boxblur=4:enable='between(t,14.96,16.4)',crop=773:114:45:45[blur_int4]; \
[0:v]crop=861:202:533:433,boxblur=5:enable='between(t,14.96,16.4)',crop=771:112:45:45[blur_int5]; \
[0:v]crop=859:200:534:434,boxblur=6:enable='between(t,14.96,16.4)',crop=769:110:45:45[blur_int6]; \
[0:v]crop=857:198:535:435,boxblur=7:enable='between(t,14.96,16.4)',crop=767:108:45:45[blur_int7]; \
[0:v]crop=855:196:536:436,boxblur=8:enable='between(t,14.96,16.4)',crop=765:106:45:45[blur_int8]; \
[0:v]crop=853:194:537:437,boxblur=9:enable='between(t,14.96,16.4)',crop=763:104:45:45[blur_int9]; \
[0:v]crop=851:192:538:438,boxblur=10:enable='between(t,14.96,16.4)',crop=761:102:45:45[blur_int10]; \
[0:v]crop=849:190:539:439,boxblur=11:enable='between(t,14.96,16.4)',crop=759:100:45:45[blur_int11]; \
[0:v]crop=847:188:540:440,boxblur=12:enable='between(t,14.96,16.4)',crop=757:98:45:45[blur_int12]; \
[0:v]crop=845:186:541:441,boxblur=13:enable='between(t,14.96,16.4)',crop=755:96:45:45[blur_int13]; \
[0:v]crop=843:184:542:442,boxblur=14:enable='between(t,14.96,16.4)',crop=753:94:45:45[blur_int14]; \
[0:v]crop=841:182:543:443,boxblur=15:enable='between(t,14.96,16.4)',crop=751:92:45:45[blur_int15]; \
[0:v]crop=839:180:544:444,boxblur=16:enable='between(t,14.96,16.4)',crop=749:90:45:45[blur_int16]; \
[0:v]crop=837:178:545:445,boxblur=17:enable='between(t,14.96,16.4)',crop=747:88:45:45[blur_int17]; \
[0:v]crop=835:176:546:446,boxblur=18:enable='between(t,14.96,16.4)',crop=745:86:45:45[blur_int18]; \
[0:v]crop=833:174:547:447,boxblur=19:enable='between(t,14.96,16.4)',crop=743:84:45:45[blur_int19]; \
[0:v]crop=831:172:548:448,boxblur=20:enable='between(t,14.96,16.4)',crop=741:82:45:45[blur_int20]; \
[0:v]crop=829:170:549:449,boxblur=21:enable='between(t,14.96,16.4)',crop=739:80:45:45[blur_int21]; \
[0:v]crop=827:168:550:450,boxblur=22:enable='between(t,14.96,16.4)',crop=737:78:45:45[blur_int22]; \
[0:v]crop=825:166:551:451,boxblur=23:enable='between(t,14.96,16.4)',crop=735:76:45:45[blur_int23]; \
[0:v]crop=823:164:552:452,boxblur=24:enable='between(t,14.96,16.4)',crop=733:74:45:45[blur_int24]; \
[0:v]crop=821:162:553:453,boxblur=25:enable='between(t,14.96,16.4)',crop=731:72:45:45[blur_int25]; \
[v_int0][blur_int1]overlay=574:474:enable='between(t,14.96,16.4)'[v_int1]; \
[v_int1][blur_int2]overlay=575:475:enable='between(t,14.96,16.4)'[v_int2]; \
[v_int2][blur_int3]overlay=576:476:enable='between(t,14.96,16.4)'[v_int3]; \
[v_int3][blur_int4]overlay=577:477:enable='between(t,14.96,16.4)'[v_int4]; \
[v_int4][blur_int5]overlay=578:478:enable='between(t,14.96,16.4)'[v_int5]; \
[v_int5][blur_int6]overlay=579:479:enable='between(t,14.96,16.4)'[v_int6]; \
[v_int6][blur_int7]overlay=580:480:enable='between(t,14.96,16.4)'[v_int7]; \
[v_int7][blur_int8]overlay=581:481:enable='between(t,14.96,16.4)'[v_int8]; \
[v_int8][blur_int9]overlay=582:482:enable='between(t,14.96,16.4)'[v_int9]; \
[v_int9][blur_int10]overlay=583:483:enable='between(t,14.96,16.4)'[v_int10]; \
[v_int10][blur_int11]overlay=584:484:enable='between(t,14.96,16.4)'[v_int11]; \
[v_int11][blur_int12]overlay=585:485:enable='between(t,14.96,16.4)'[v_int12]; \
[v_int12][blur_int13]overlay=586:486:enable='between(t,14.96,16.4)'[v_int13]; \
[v_int13][blur_int14]overlay=587:487:enable='between(t,14.96,16.4)'[v_int14]; \
[v_int14][blur_int15]overlay=588:488:enable='between(t,14.96,16.4)'[v_int15]; \
[v_int15][blur_int16]overlay=589:489:enable='between(t,14.96,16.4)'[v_int16]; \
[v_int16][blur_int17]overlay=590:490:enable='between(t,14.96,16.4)'[v_int17]; \
[v_int17][blur_int18]overlay=591:491:enable='between(t,14.96,16.4)'[v_int18]; \
[v_int18][blur_int19]overlay=592:492:enable='between(t,14.96,16.4)'[v_int19]; \
[v_int19][blur_int20]overlay=593:493:enable='between(t,14.96,16.4)'[v_int20]; \
[v_int20][blur_int21]overlay=594:494:enable='between(t,14.96,16.4)'[v_int21]; \
[v_int21][blur_int22]overlay=595:495:enable='between(t,14.96,16.4)'[v_int22]; \
[v_int22][blur_int23]overlay=596:496:enable='between(t,14.96,16.4)'[v_int23]; \
[v_int23][blur_int24]overlay=597:497:enable='between(t,14.96,16.4)'[v_int24]; \
[v_int24][blur_int25]overlay=598:498:enable='between(t,14.96,16.4)'[v_int25]; \
[v_int25]null[with_subtitles]" \
-map "[with_subtitles]" -map 0:a -c:v libx264 -c:a copy -crf 17 -preset slow -y video_to_be_blurred_blurred.mp4
```
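The numbers in the generated command follow directly from the matrix row. The sketch below recomputes the crop and overlay values for one layer, and the largest radius boxblur accepts for a given crop size. The helper names `layer_geometry` and `max_boxblur_radius` are illustrative and not part of the script. It assumes yuv420p video, where the chroma planes are half the width and height of the luma plane, and that the chroma radius defaults to the luma radius. For example, without a margin the layer blurred with radius 21 is only 80 px tall, so its chroma planes are 40 px tall and the limit is 20. That matches the `Invalid chroma radius value 21` error quoted above. With the margin of 90, the same crop is 170 px tall and the limit rises to 42.

```python
def layer_geometry(w, h, x, y, blur, multiple, margin, i):
    """Return (outer_crop, inner_crop, overlay_pos) for layer i (0-based).

    Mirrors the arithmetic in blur_softly(): the outer crop includes the
    margin, the inner crop removes it again after boxblur, and the overlay
    position puts the layer back where it came from.
    """
    half = margin // 2
    width = w + 2 * blur * multiple     # size of the largest (outermost) layer
    height = h + 2 * blur * multiple
    x0 = x - blur * multiple
    y0 = y - blur * multiple
    ii = multiple * i                   # layer i is ii px smaller on each side
    outer = (width - 2 * ii + 2 * half, height - 2 * ii + 2 * half,
             x0 + ii - half, y0 + ii - half)
    inner = (width - 2 * ii, height - 2 * ii, half, half)
    overlay = (x0 + ii, y0 + ii)
    return outer, inner, overlay


def max_boxblur_radius(w, h, chroma_shift=1):
    """Largest radius boxblur should accept for a w x h input.

    Assumes the chroma radius equals the luma radius (boxblur's default) and
    that chroma planes are subsampled by 2**chroma_shift in both directions
    (chroma_shift=1 for yuv420p). A radius is rejected when it is more than
    half the smaller dimension of a plane.
    """
    cw = -(-w >> chroma_shift)  # ceil(w / 2**chroma_shift)
    ch = -(-h >> chroma_shift)
    return min(min(w, h) // 2, min(cw, ch) // 2)


# Layer 21 (i=20) of the example; the inner crop size equals the crop you
# would get with a margin of 0.
outer, inner, _ = layer_geometry(729, 70, 599, 499, 25, 1, 90, 20)
print(outer, max_boxblur_radius(*outer[:2]))  # (829, 170, 549, 449) 42
print(max_boxblur_radius(*inner[:2]))         # 20, so radius 21 is too big
```

This kind of check can tell you, before running ffmpeg, whether a given margin is large enough for the strongest layers.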

Thanks so much for your response. That all makes great sense.

As a side note, it also made the split filter make sense finally!

Also, could it be possible through arithmetic expressions to dynamically move the blurred box around the image, i.e. for the purpose of blurring someone's face as they move in a non-linear fashion? – occvtech – 2015-04-15T21:49:01.083

Thanks again!

I'll take a crack at it.

I know that a non-linear editor would be 1000 times easier here, but I'm hoping to batch process multiple files and don't want to wait through the import/key frame/export process.

Thanks again! – occvtech – 2015-04-16T15:11:37.730
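Regarding the question above about moving the blurred box, two features make this possible. `crop` evaluates its `x` and `y` expressions for every frame, and `overlay` does the same for its `x` and `y` (its default is `eval=frame`). Both can also use the timestamp `t`. A minimal sketch under those assumptions, written in Python like the script above, describes the path as (time, position) keyframes and turns them into a piecewise-linear expression built from `if()` and `lt()`. The helpers `piecewise_linear` and `moving_blur_graph` and the keyframe values are hypothetical. The box size is kept fixed, and the expressions are wrapped in single quotes so that their commas are not read as filter separators.

```python
def piecewise_linear(keyframes, var="t"):
    """Build an ffmpeg expression that linearly interpolates between keyframes.

    keyframes: list of (time_in_seconds, value) pairs with strictly
    increasing times. Before the first and after the last keyframe the
    value is held constant.
    """
    t_first, v_first = keyframes[0]
    expr = str(keyframes[-1][1])  # value after the last keyframe
    # Nest if(lt(t,t1), segment, rest), starting from the last segment.
    for (t0, v0), (t1, v1) in reversed(list(zip(keyframes, keyframes[1:]))):
        segment = f"{v0}+({var}-{t0})*({v1}-{v0})/({t1}-{t0})"
        expr = f"if(lt({var},{t1}),{segment},{expr})"
    return f"if(lt({var},{t_first}),{v_first},{expr})"


def moving_blur_graph(w, h, x_keys, y_keys, radius=10):
    """Filtergraph that blurs a w x h box whose top-left corner follows the keyframes."""
    x = piecewise_linear(x_keys)
    y = piecewise_linear(y_keys)
    # The same expressions position the crop (per frame) and the overlay
    # (per frame), so the blurred patch is pasted back where it was taken.
    return (f"[0:v]crop=w={w}:h={h}:x='{x}':y='{y}',boxblur={radius}[fg]; "
            f"[0:v][fg]overlay=x='{x}':y='{y}'[v]")
```

For example, `moving_blur_graph(200, 200, [(0, 60), (2, 300), (4, 200)], [(0, 30), (4, 30)])` returns a graph that could be passed to `-filter_complex` together with `-map "[v]"`, as in the single-box commands in this thread. A face that moves irregularly needs many keyframes, so the nested `if()` chain can get long.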

Does FFMPEG offer other shapes besides boxes, such as circles? – Sun – 2015-09-23T16:09:09.850

@LordNeckbeard I'm using cmd and I want to use Example 1, but when I execute the code I get this error:

Unrecognized option 'filter_complex[0:v]crop=200:200:60:30,boxblur=10[fg];[0:v][fg]overlay=60:30[v]-map [v] -map 0:a -c:v libx264 -c:a copy -movflags +faststart output.mp4'. Error splitting the argument list: Option not found – Jim – 2017-05-03T18:10:33.480

@LordNeckbeard Sorry for such a stupid question, I'm new to all of this. How do I type these 4 lines of command as 1 line? I removed the \ and this is the result https://pastebin.com/ajWHzzsj, and I left the \ in and this is the result (the error from my last message) https://pastebin.com/6nwNi7Y0 – Jim – 2017-05-03T19:40:40.933

@Jim I noticed that my example command was missing a quote. Your command should look something like this:

ffmpeg -i input.mp4 -filter_complex "[0:v]crop=200:200:60:30,boxblur=10[fg]; [0:v][fg]overlay=60:30[v]" -map "[v]" -map 0:a -c:v libx264 -c:a copy -movflags +faststart output.mp4

– llogan – 2017-05-03T19:51:03.553

@LordNeckbeard Is it possible to use a mask image and get the blurred crop from another area in the same frame? I get this error: "Input frame sizes do not match (246x60 vs 1280x720)", and of course if I make the input match it will take the same area and it will be like a normal mask https://pastebin.com/Mpde3Rx3 – Jim – 2017-05-07T10:51:48.157

@Jim I don't quite understand what you're trying to do, but try switching the alphamerge and crop positions (and note that alphamerge takes two inputs: the image to apply the transparency to and the mask that provides it):

[0:v][1:v]alphamerge,crop=246:60:998:98,boxblur=1[alf]

A link to an image example may be helpful to see what you want to achieve. – llogan – 2017-05-07T17:48:14.793

@LordNeckbeard Let me show you this example: I'm trying to hide the second sun in this frame http://i.imgur.com/iSyFdh5.png by taking part of the sky and blurring it. BTW I need to use a mask http://i.imgur.com/6erW3HS.png because I have different shapes in different videos. I can do the same with this code – Jim – 2017-05-09T10:30:25.223

ffmpeg -i input.mp4 -filter_complex "[0:v]crop=245:62:999:101,boxblur=10[fg]; [0:v][fg]overlay=main_w-overlay_w-38:39[v]" -map "[v]" -map 0:a -c:v libx264 -c:a copy -movflags +faststart output.mp4

But it's rectangular or square, so I can't do shapes with it.

@LordNeckbeard Can you please answer the similar question? – Zain Ali – 2018-07-22T17:14:45.590

@ZainAli Looks like I was too slow. – llogan – 2018-07-22T22:46:06.477

@LordNeckbeard I have observed in the case above (using a mask image to apply the blur effect) that as you increase the boxblur value, the blur effect first increases (from 1 to 16) and after that starts decreasing, no matter how high a value you choose. Do you have any idea why this could happen, or any better suggestion for using masks to blur with the correct blur intensity? – Zain Ali – 2018-08-02T01:41:13.017
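On the cmd trouble earlier in the thread: the four-line form of the command relies on bash's `\` line continuation, which Windows cmd does not understand (cmd's continuation character is `^`). One way to avoid shell quoting entirely is to build the argument list in Python and pass it to `subprocess.run`, which starts ffmpeg without going through a shell. This is an assumption rather than something from the answers above. `blur_region_cmd` and `run` are hypothetical helpers, and the arguments mirror llogan's single-line command.

```python
import shlex
import subprocess


def blur_region_cmd(src, dst, w, h, x, y, radius=10):
    """Argument list for the crop + boxblur + overlay command from the thread.

    Each list element reaches ffmpeg as exactly one argument, so no shell
    quoting or line-continuation characters are needed.
    """
    graph = (f"[0:v]crop={w}:{h}:{x}:{y},boxblur={radius}[fg]; "
             f"[0:v][fg]overlay={x}:{y}[v]")
    return ["ffmpeg", "-i", src, "-filter_complex", graph,
            "-map", "[v]", "-map", "0:a",
            "-c:v", "libx264", "-c:a", "copy",
            "-movflags", "+faststart", dst]


def run(cmd):
    """Run the command directly (no shell involved); raises if ffmpeg fails."""
    subprocess.run(cmd, check=True)
```

`cmd = blur_region_cmd("input.mp4", "output.mp4", 200, 200, 60, 30)` followed by `run(cmd)` performs the blur. `print(shlex.join(cmd))` (Python 3.8+) shows an equivalent POSIX-shell command line with each argument quoted as needed.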