I recently saw a comparison for 2005 vs. 2014 microSD card: in 2005 there was only max 128 MByte microSD cards, and in 2014, 128 GByte.

My question: I'm not 100% sure. Is 128 GByte 1000× bigger than 128 MByte or 1024× bigger?

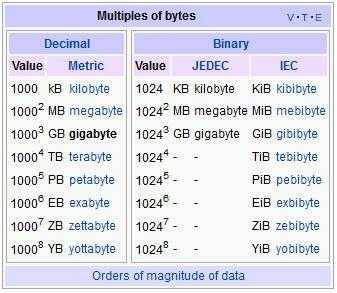

Kilobyte, megabyte and gigabyte mean different things depending on whether the international standard that one uses is based on powers of 2 (binary) or of 10 (decimal).

There are three standards involved:

International System of Units (SI)

The modern form of the metric system and the world's most widely used system of measurement, used in both everyday commerce and science.

JEDEC

The specifications for semiconductor memory circuits and similar storage devices promulgated by the Joint Electron Device Engineering Council (JEDEC) Solid State Technology Association, a semiconductor trade and engineering standardization organization.

International Electrotechnical Commission (IEC)

International standards organization that prepares and publishes International Standards for all electrical, electronic and related technologies.

Depending on which industry you are in, and whether you are using Microsoft software, the definitions may vary. For example, gigabyte "mostly" stands for 10⁹ bytes (GB). Many computer people use this term for 1024³ bytes, others use the term gibibyte (GiB) for that, and still others write GiB but call it a gigabyte.

The confusion is even greater for kilobyte, which may stand for both 1000 and 1024!

Some would say that a megabyte is 1000² bytes and that 1024² bytes should be called a mebibyte; others would disagree.

The Wikipedia article Gigabyte describes how these terms were introduced into the international standards:

In 1998 the International Electrotechnical Commission (IEC) published standards for binary prefixes, requiring the use of gigabyte to strictly denote 1000³ bytes and gibibyte to denote 1024³ bytes. By the end of 2007, the IEC Standard had been adopted by the IEEE, EU, and NIST, and in 2009 they were incorporated in the International System of Quantities.

In everyday life, programmers usually use megabyte and gigabyte in the binary (base 2) sense, and so does Microsoft Windows. Disk makers and companies other than Microsoft usually use decimal (base 10). This is why Windows reports the capacity of a new disk as smaller than the number printed on the box.
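To make the Windows discrepancy concrete, here is a small Python sketch. It assumes a hypothetical card holding exactly 128 × 10⁹ bytes (real cards rarely hold exactly the labeled amount) and ignores file system overhead; it only shows what happens when the same byte count is divided by 1024³ instead of 1000³.

```python
# Hypothetical card labeled "128 GB" using the decimal definition (1 GB = 1000**3 bytes).
advertised_gb = 128
size_bytes = advertised_gb * 1000**3   # 128,000,000,000 bytes

# Software that counts in binary units divides by 1024**3 (one GiB) instead.
shown_binary = size_bytes / 1024**3

print(f"{size_bytes:,} bytes = {shown_binary:.2f} GiB")
# prints: 128,000,000,000 bytes = 119.21 GiB
```

Windows performs the binary division but still labels the result "GB", so the same card appears to have shrunk by about 7%.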

Conclusion: a gigabyte is both 1000 times and 1024 times larger than a megabyte, depending on which international standard you choose to use at the moment. Strictly speaking, the notation that makes the units clearer is:

GB = 1000 x MB

GiB = 1024 x MiB

(but not everyone would agree.)
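A short Python sketch of the two notations above, applied to the 128 MB and 128 GB cards from the question. The constant names are just illustrative.

```python
# Size of each unit in bytes under the two conventions.
MB, GB = 1000**2, 1000**3      # decimal prefixes (SI): megabyte, gigabyte
MiB, GiB = 1024**2, 1024**3    # binary prefixes (IEC): mebibyte, gibibyte

# Ratio of a 128 GB card to a 128 MB card under each convention.
decimal_ratio = (128 * GB) // (128 * MB)
binary_ratio = (128 * GiB) // (128 * MiB)

print(decimal_ratio, binary_ratio)  # prints: 1000 1024
```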

References:

Wikipedia: Binary prefix

International System of Units (SI) - Prefixes for binary multiples

units(7) - Linux manual page

Western Digital Settles Capacity Suit (this confusion even caused a lawsuit!)

Note that JEDEC does not actually recommend using the 1024-based units. They "are included only to reflect common usage". http://www.jedec.org/standards-documents/dictionary/terms/mega-m-prefix-units-semiconductor-storage-capacity

– endolith – 2015-08-05T11:37:39.823

I have seen some BitTorrent clients (Deluge, qBittorrent, Transmission) use the IEC byte units by default. It's funny how they get this "right", since speeds should be presented in bits. – Mikuz – 2014-05-13T13:56:53.940

The GiB, MiB, KiB notation is used widely in the software that comprises my Linux installations. Your "used nowhere" claim needs to be amended. – kreemoweet – 2014-05-13T16:08:49.523

@kreemoweet: I deleted it (too Windows-centric). – harrymc – 2014-05-13T16:31:00.630

When describing JEDEC and IEC, you could have added IEEE there. And since you wrote a paragraph for each, you could have mentioned what each requires. The SI standard is no doubt quite old, and said kilo is 1000 before anybody knew what a byte was. Very early computer scientists used kilo = 1000. Programmers and computer scientists use kilobyte = 1024. It looks like the IEC proposed the idea of kibibyte = 1024 bytes and kilobyte = 1000 bytes in 1996 and published a standard in 1998. The IEEE supports them but with some lenience. JEDEC (a semiconductor industry standards body) publishes kilobyte as 1024 bytes. And Windows uses 1024, as you say. – barlop – 2014-05-14T00:56:08.717

@barlop: IEEE adopted the SI metric standard for units (source), stating that the IEC standard is largely ignored by the industry.

– harrymc – 2014-05-14T05:01:15.667

@harrymc The IEEE apparently supports the IEC standard (as mentioned), which is from 1998/1999. The IEEE supported them while they were being developed (http://physics.nist.gov/cuu/Units/binary.html) and made a standard. The IEC standard makes an allowance for the so-called binary prefixes / usage of the prefixes, and the JEDEC standard (2002) uses/references that lenience in the IEC. On 19 March 2005, the IEEE standard IEEE 1541-2002 "Prefixes for Binary Multiples" (Wikipedia) came out using the IEC one.

– barlop – 2014-05-14T07:24:35.360

@harrymc Your words "largely ignored by the industry" don't appear in the article, but the article does have the phrase "largely ignored" with "industry" near it. It says "The plaintiffs acknowledged that the IEC and IEEE standards define a MB as one million bytes but stated that the industry has largely ignored the IEC standards". The defendants in the case were, interestingly, flash memory manufacturers (I say interestingly as I'd have thought they might argue they were following JEDEC; perhaps they did). So it's Wikipedia saying the plaintiffs agree. And the term "industry" is not accurate. – barlop – 2014-05-14T07:33:09.873

So makers of spinning disk drives and telecoms (as early as the Feb 02 revision of the article), and it looks like even flash memory makers too, use the decimal prefixes. It also helps them with advertising, as the capacities sound bigger, though they can get sued for their drives being too small. That Wikipedia link mentions that "According to one HP brochure, '[t]o reduce confusion, vendors are pursuing one of two remedies: they are changing SI prefixes to the new binary prefixes, or they are recalculating the numbers as powers of ten.'" But even as early as 2001 (as that 2001 version of the article mentions), hard drive manufacturers were using decimal prefixes. – barlop – 2014-05-14T07:42:37.843

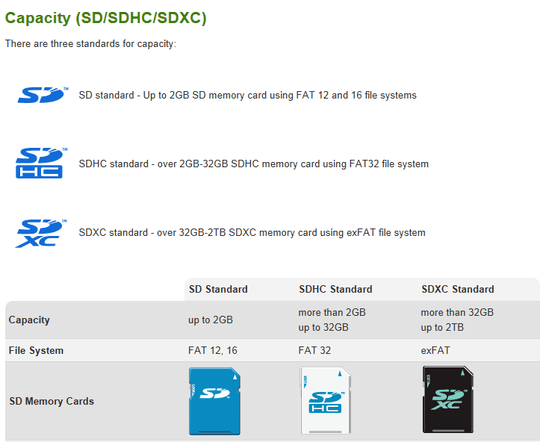

SD card specs have been governed by the SD Association (SDA) since August 1999, which means SDA standards were in place for both time periods in your question.

SDA capacity standards dictate which file system to use when determining capacity, speed, class, etc. (among other things, such as physical size specs).

Assuming we're talking about the microSD SDHC standard, capacities are determined on FAT32 file systems. (Side note: SDHC cards top out at 32 GB of capacity; FAT32 itself limits individual files to 4 GiB minus one byte.) File system sizes are computed in base 2, so binary sizes would properly be written as MiB and GiB, not MB and GB.

The SDA's documentation uses GB (decimal) rather than GiB (binary), which indicates that, per the SDA's specs, capacities are given in decimal rather than binary. The difference between decimal and binary can be seen in this table, which shows:

1 MB = 1000² bytes

1 GB = 1000³ bytes

128 MB = 128 × 1000² bytes, and

128 GB = 128 × 1000³ bytes.

You can see 128 × 1000² × 1000 = 128 × 1000³.

128 GB is 1000 times larger than 128 MB.

It's likely the SDA adopted decimal capacity standards based on average consumer understanding.

It is 1000 times bigger. For verification you can use a unit-based calculator like Frink to perform the calculation.

Google, however, disagrees and returns 1024.

Since the two sources disagree, we can drop down to the math.

According to Google, 1 GByte is 1073741824 bytes and 1 megabyte is 1048576 bytes, which is why it replies with 1024.

Frink takes a different approach with 1000000000 and 1000000 respectively.
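A quick Python check, using only the byte counts quoted above, that Google's figures are powers of 2 and Frink's are powers of 10, and that this alone produces the two different answers:

```python
# Byte counts as quoted in this answer.
google = {"GB": 1073741824, "MB": 1048576}
frink = {"GB": 1000000000, "MB": 1000000}

# Google uses binary multiples, Frink uses decimal (SI) multiples.
assert google["GB"] == 2**30 and google["MB"] == 2**20
assert frink["GB"] == 10**9 and frink["MB"] == 10**6

print(google["GB"] // google["MB"], frink["GB"] // frink["MB"])  # prints: 1024 1000
```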

For a discussion of the history of the split between 1000 (10^3) and 1024 (2^10), see Wikipedia, which states:

In 1998 the International Electrotechnical Commission (IEC) enacted standards for binary prefixes, specifying the use of kilobyte to strictly denote 1000 bytes and kibibyte to denote 1024 bytes. By 2007, the IEC Standard had been adopted by the IEEE, EU, and NIST and is now part of the International System of Quantities. Nevertheless, the term kilobyte continues to be widely used with both of the following two meanings:

At the start of this answer I said it was 1000 times bigger. I did so for a practical reason: it is the more conservative of the two figures and less likely to turn out wrong. For instance, if you can store X files on the smaller microSD card under every possible interpretation, you should safely be able to store 1000 times X files on the larger microSD card.

-1 This answer makes no sense at the beginning, adds nothing in the middle, and is a fake answer at the end. To begin with, you say with great confidence that it is 1000× bigger because "frink" (a program somebody wrote) says so. Then you say Google disagrees. So you decide to "drop down to the math", as if Google and "frink" don't do math. You then state how we get the two values, 1024 vs. 1000 (but nobody asked how we get them), and then, even if the answer really is 1024, you stand by your answer of 1000 because it won't get their expectations up. Terrible "answer". – barlop – 2014-05-13T18:25:17.373

There are two common definitions in use, as you can see from the answers. So you always have to figure out who is using the term.

Hard disk makers almost always use 1000x, so their gigabytes are 1000 megabytes (see the famous lawsuits).

Memory makers always use 1024x. You can't buy a computer with 65.5 GB of RAM, but you can buy one with 64 GB (see JEDEC conventions).

Network speeds use 1000x, so Gbit/s Ethernet is 1000 Mbit/s. (See also IEC specifications)

SI does not have a standard for bytes, so their definition is somewhat irrelevant to this discussion.

Now, SD cards are a bit of a special case. They appear to the OS as hard disks, but they are physically made from chips (NAND flash, to be precise). For that reason the makers follow memory conventions: 128 GByte is 128 × 1024 MByte.

"For that reason the makers follow memory conventions." No they don't. – endolith – 2015-08-05T11:43:26.980

To put it bluntly, it all depends on the actual cards and how the manufacturers decided to name their capacity. So it can be 1000x bigger, 1024x bigger, or a value in between. Most likely they are 1000x bigger, due to convention. – Doktoro Reichard – 2014-05-04T18:33:35.647

Not sure the downvotes are fair here. This is actually a complex answer due to the varying versions of the measurement MB/GB. Theoretically, if both cards were marketed exactly as the math for their densities advertised, it is 1000 times bigger... But I highly doubt that is the case. And even if both used the common x10 method, there is a discrepancy and the difference is not exactly x1000. – Austin T French – 2014-05-04T18:36:46.503

@AthomSfere Might be because this one has been asked many times. I'd dig up the duplicates, but I'm on mobile right now. – Bob – 2014-05-04T18:52:47.173

@Bob Fair enough, but it would be nice for someone to note that much and not just downvote. – Austin T French – 2014-05-04T18:56:30.033

These questions are relevant: What is the origin of K = 1024? and Is it true that 1 MB can mean either 1000000 bytes, 1024000 bytes, or 1048576 bytes?

– Garrulinae – 2014-05-05T00:33:19.553

Also What is the difference between a kibibyte, a kilobit, and a kilobyte? and Why does 1KB contain 1024 bytes when 1KB = 1000 bytes?

– Garrulinae – 2014-05-05T00:40:28.373

@evachristine Can you provide a link to the comparison you alluded to? – gm2 – 2014-05-10T20:35:21.963

Nowadays every storage device uses 1 GB = 1000 MB rather than 1 GB = 1024 MB. The only reason is that it makes most calculations easier on a computer. – Hunter – 2014-05-11T16:11:38.200

@Hunter most likely source with the information provided.

– Raystafarian – 2014-05-13T14:17:48.317