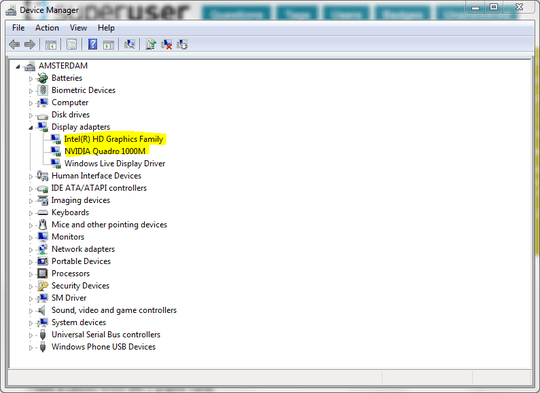

I have a Lenovo W520 laptop with two graphics cards:

I think Windows 7 (64-bit) is using my Intel graphics card, which I believe is the integrated one, because I get a low graphics rating in the Windows Experience Index. Also, the Intel card has 750 MB of RAM while the NVIDIA card has 2 GB.

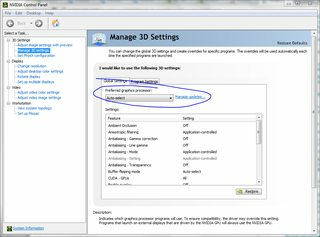

- How can I tell for certain which card Windows 7 is actually using?

- How do I change it?

- Since this is a laptop and the display is built in, how would changing the graphics card affect the built-in display?
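As a first check, a minimal sketch (assuming Python 3.7+ on the Windows machine) can list the display adapters Windows reports through the WMI `Win32_VideoController` class, using the `wmic` command-line tool that ships with Windows 7. This only shows which adapters are installed and the memory WMI reports for each; with switchable graphics both adapters may appear, so it does not by itself reveal which GPU renders a given program. The helper names `parse_wmic_list` and `describe` are hypothetical, and the `AdapterRAM` value may not match the advertised figure for an integrated card that uses shared system memory.

```python
import subprocess
import sys


def parse_wmic_list(text):
    """Parse `wmic ... /format:list` output into a list of dicts.

    Each record is a run of Key=Value lines. A new record starts when a key
    repeats, so the extra blank lines wmic tends to emit are harmless.
    """
    records = []
    current = {}
    for raw in text.splitlines():
        line = raw.strip()
        if "=" not in line:
            continue  # skip blank or noise lines
        key, _, value = line.partition("=")
        if key in current:  # key seen again: previous record is complete
            records.append(current)
            current = {}
        current[key] = value
    if current:
        records.append(current)
    return records


def describe(records):
    """Format each adapter as 'Name: N MB' (or 'unknown' if RAM is not reported)."""
    lines = []
    for rec in records:
        name = rec.get("Name", "?")
        ram = rec.get("AdapterRAM", "")
        mb = f"{int(ram) // (1024 * 1024)} MB" if ram.isdigit() else "unknown"
        lines.append(f"{name}: {mb}")
    return lines


# Only query WMI when actually running on Windows.
if __name__ == "__main__" and sys.platform == "win32":
    output = subprocess.run(
        ["wmic", "path", "win32_VideoController", "get", "Name,AdapterRAM", "/format:list"],
        capture_output=True,
        text=True,
        check=True,
    ).stdout
    for entry in describe(parse_wmic_list(output)):
        print(entry)
```

Splitting records on a repeated key, rather than on blank lines, is a deliberate choice: `wmic` output often contains doubled line endings, which would otherwise split or merge records unpredictably.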

I think it knows to switch cards based on demand? 3D games should use the NVIDIA card, and almost everything else should use the much lower-power built-in Intel video. – geoffc – 2011-09-01T02:24:08.840

I think you're on to something there, geoffc. @jstawski, is there any Lenovo-brand software running in the system tray, particularly one that manages power or other advanced features? – Hand-E-Food – 2011-09-01T02:55:45.497

You can also disable Nvidia Optimus in the BIOS. :) – Johnny – 2012-08-21T21:12:29.800