I'm going to add some extra information about DisplayPort, especially since AMD's Eyefinity puts this technology front and center.

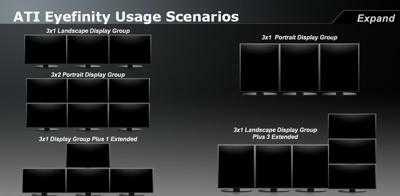

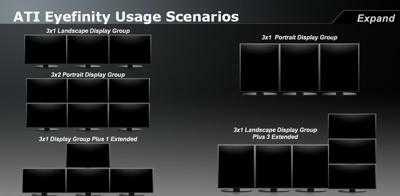

Eyefinity

It basically takes one desktop and scales it across multiple screens. So if the 3x1 landscape layout on the top left is three 1920x1200 monitors, Windows would show a single desktop with a resolution of 5760x1200.
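The arithmetic behind that number can be sketched in a few lines of Python. This is only an illustration: the function name `eyefinity_desktop` is made up for this post, and it assumes identical monitors arranged in a simple grid with nothing added for the bezels.

```python
def eyefinity_desktop(cols, rows, width, height):
    """Return (width, height) of one desktop spanning a cols x rows grid
    of identical monitors. Hypothetical helper, not an AMD API."""
    return cols * width, rows * height


# 3x1 landscape group of 1920x1200 monitors
print(eyefinity_desktop(3, 1, 1920, 1200))  # (5760, 1200)
```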

What makes DisplayPort different from DVI/VGA/HDMI

Now to achieve this, you'll need to use DisplayPort. DisplayPort is a very different technology from DVI/VGA/HDMI.

For one, DisplayPort has more theoretical bandwidth. It uses a packet architecture not unlike USB or Ethernet. HDMI, DVI and VGA are more like speaker cables or parallel IDE ports. In HDMI/DVI, the three color signal wire pairs run in parallel, with a clock on the fourth pair. DisplayPort's architecture theoretically allows for far greater distances.
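To put rough numbers on "more bandwidth", here is a back-of-the-envelope sketch. The link figures are commonly quoted ones that I am assuming, not measurements: single-link DVI at a 165 MHz pixel clock with 24 bits per pixel, and DisplayPort 1.1 with 4 lanes at 2.7 Gbit/s and 8b/10b encoding. The pixel rate ignores blanking intervals, so real requirements are somewhat higher.

```python
def pixel_rate_gbps(width, height, refresh_hz=60, bits_per_pixel=24):
    """Raw pixel data rate in Gbit/s, ignoring blanking intervals."""
    return width * height * refresh_hz * bits_per_pixel / 1e9


# Assumed payload capacities (commonly quoted figures, not from this post).
SINGLE_LINK_DVI_GBPS = 165e6 * 24 / 1e9   # 165 MHz pixel clock, 24 bpp
DISPLAYPORT_1_1_GBPS = 4 * 2.7 * 8 / 10   # 4 lanes, 8b/10b overhead removed

one_monitor = pixel_rate_gbps(1920, 1200)
print(f"1920x1200@60 needs roughly {one_monitor:.2f} Gbit/s")
print(f"single-link DVI carries about {SINGLE_LINK_DVI_GBPS:.2f} Gbit/s")
print(f"DisplayPort 1.1 carries about {DISPLAYPORT_1_1_GBPS:.2f} Gbit/s")
```

Under these assumptions one 1920x1200 monitor fits on either link, but DisplayPort has roughly twice the headroom of single-link DVI.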

DisplayPort components also cost less and require less voltage (3V vs 5V), which theoretically allows DisplayPort-only displays to be thinner than displays with HDMI.

The most exciting feature of DisplayPort is the ability to combine multiple video/audio streams (thus allowing displays to be daisy-chained) along with USB and Ethernet.

Active and Passive DisplayPort Dongles

By far the most confusing part of DisplayPort is the difference between active and passive DisplayPort dongles. This was most noticeable when Eyefinity first came out. To use Eyefinity, you need at least one display using DisplayPort . . .

Well, if you hook up a dongle to the DisplayPort on the AMD card, then two monitors on the DVI ports, you should get Eyefinity, right?

That depends.

There are two ways for a video card to push an HDMI/DVI signal over DisplayPort. The first uses what is called an active converter. The video card takes the HDMI/DVI signal and encapsulates it using DisplayPort. When the active dongle receives the signal, it then sends the HDMI/DVI signal on to the monitor.

- Pros

- You can run a DisplayPort monitor AND an HDMI signal off that same dongle, along with audio and USB (theoretically).

- Cons

- More expensive than passive (not as big a deal since the introduction of single-link active dongles)

The second method is to use a passive dongle. There is no chip here. The graphics card detects the dongle and outputs an HDMI/DVI signal over the DisplayPort connector. The passive dongle then just shifts the signal levels up to the HDMI/DVI specs.

The problem here is that the video cards capable of Eyefinity only have two "pipelines" that can push out the older HDMI/DVI signals. Thus if you use a passive dongle, you are really trying to share those two pipelines among three monitors. For Eyefinity to work, it needs more bandwidth (remember, three 1920-wide monitors is 5760 across!). That is why one of the three monitors has to be driven by the DisplayPort "pipeline" (the quotes are because I have no idea what the real name is or what exactly they do).
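The rule of thumb above can be written down as a small check. This is just a sketch of the reasoning in this post, not anything from AMD's drivers: the connection names and the `eyefinity_ok` function are made up, and the limit of two legacy "pipelines" is the assumption described above.

```python
# Assumption from this post: only two "pipelines" can generate
# legacy HDMI/DVI signals on an Eyefinity-capable card.
LEGACY_PIPELINES = 2

# Illustrative connection names (hypothetical, not driver terminology).
LEGACY = {"dvi", "hdmi", "passive_dongle"}     # each uses a legacy pipeline
NATIVE_DP = {"displayport", "active_dongle"}   # fed by a DisplayPort signal


def eyefinity_ok(connections):
    """Return True if all listed monitors can be driven at the same time."""
    unknown = set(connections) - LEGACY - NATIVE_DP
    if unknown:
        raise ValueError(f"unknown connection type(s): {sorted(unknown)}")
    legacy_used = sum(1 for c in connections if c in LEGACY)
    return legacy_used <= LEGACY_PIPELINES


print(eyefinity_ok(["dvi", "dvi", "passive_dongle"]))  # False
print(eyefinity_ok(["dvi", "dvi", "active_dongle"]))   # True
print(eyefinity_ok(["dvi", "dvi", "displayport"]))     # True
```

So two DVI monitors plus a passive dongle fails, while swapping in an active dongle or a native DisplayPort monitor works.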

Note also that active dongles may require a USB port for power. A common issue is plugging into a USB port with unstable power, or the computer outright putting the USB port to sleep! Zzzzzz

More DisplayPort fun:

http://www.elitebastards.com/index.php?option=com_content&view=article&id=796&catid=16&Itemid=29

http://pcper.com/reviews/Editorial/Eyefinity-and-Me-Idiots-Guide-AMDs-Multi-Monitor-Technology

http://en.community.dell.com/dell-blogs/direct2dell/b/direct2dell/archive/2008/02/19/46464.aspx

http://www.edn.com/article/472107-Bridging_the_new_DisplayPort_standard.php

http://www.bluejeanscable.com/articles/whats-the-matter-with-hdmi.htm

As stated, this question has no meaning : 1280x1024 will display just fine with any/all of the above. Theoretical considerations apart, the real video performance practically depends on so many components in the computer that there is no general answer except "go with the latest technology" even if there will be no discernible difference. The quality of the video card one uses to generate the display and that of the monitor are much more important than the format of the output, converted between analog/digital or not. – harrymc – 2011-12-30T16:08:26.520

I guess I'm the only one who was looking for Display port info. . . – surfasb – 2012-01-05T08:13:04.563