362

218

How can I download all pages from a website?

Any platform is fine.

362

218

How can I download all pages from a website?

Any platform is fine.

347

HTTRACK works like a champ for copying the contents of an entire site. This tool can even grab the pieces needed to make a website with active code content work offline. I am amazed at the stuff it can replicate offline.

This program will do all you require of it.

Happy hunting!

what if i try to copy wiki en? – Timothy – 2014-11-04T03:03:11.190

A nice tutorial for basic use - http://www.makeuseof.com/tag/save-and-backup-websites-with-httrack/

– Eran Morad – 2015-02-16T05:52:36.9874Would this copy the actual ASP code that runs on the server though? – Taptronic – 2010-03-19T13:02:03.703

8@Optimal Solutions: No, that's not possible. You'd need access to the servers or the source code for that. – Sasha Chedygov – 2010-03-31T07:08:58.700

I would like to download for example the large images from the listings on ebay (the ones that are being shown in each listing) by using the link of the search result. is it possible for someone to tell me the settings for HTTrack that I can use to do that? – und3rd06012 – 2015-09-01T15:40:49.763

Is this supporting downloading paged contents like mywebsite.com/games?page=1, mywebsite.com/games?page=2 because seems like it keeps overriding of previously created pages and shows only last page. Please advice. – Teoman shipahi – 2016-02-29T16:27:25.523

Does it support cookies (sessions)? – jayarjo – 2017-03-26T17:23:29.827

8Been using this for years - highly recommended. – Umber Ferrule – 2009-08-09T20:38:39.413

This is a terrible program. It has great trouble downloading single web-pages, poor control over following links and to what depth, and an absurd download speed limit from last decade. Just use wget, save yourself the pain. – aaa90210 – 2018-12-30T21:04:05.730

For macOS, use brew install httrack and then run it with httrack. It has a great menu after that. Easy peezie, lemon squeezie! – Joshua Pinter – 2019-11-21T17:31:04.697

You can also limit the speed of download so you don't use too much bandwidth to the detriment of everyone else. – Umber Ferrule – 2009-08-21T22:18:38.660

Finally this one is bit better than others ;) – joe – 2009-09-23T13:33:45.170

2After trying both httrack and wget for sites with authorization, I have to lean in favor of wget. Could not get httrack to work in those cases. – Leo – 2012-05-18T11:55:48.900

1Whats the option for authentication? – vincent mathew – 2013-05-28T18:03:49.750

282

Wget is a classic command-line tool for this kind of task. It comes with most Unix/Linux systems, and you can get it for Windows too. On a Mac, Homebrew is the easiest way to install it (brew install wget).

You'd do something like:

wget -r --no-parent http://site.com/songs/

For more details, see Wget Manual and its examples, or e.g. these:

2

Homebrew shows how to install it right on their homepage http://brew.sh/

– Eric Brotto – 2014-07-14T10:43:17.6432As I also asked for httrack.com - would this cmd line tool get the ASP code or would it just get the rendering of the HTML? I have to try this. This could be a bit worrisome for developers if it does... – Taptronic – 2010-03-19T13:04:11.440

6@optimal, the HTML output of course - it would get the code only if the server was badly misconfigured – Jonik – 2010-03-19T15:17:33.313

Httrack vs Wget? Which one should I use on a Mac? – 6754534367 – 2016-09-12T14:01:01.680

@gorn That might be doable with a chroot, though I've never tried it. – wjandrea – 2017-09-24T07:31:28.717

Any chance of running this in parallel? – Luís de Sousa – 2018-12-22T10:29:24.320

doesn't work with the links, images, and others, so it is useless. The other answer with command wget -m -p -E -k www.example.com did all the jobs and display a website locally with proper links, images, format, etc, in so easy way. – BMW – 2020-01-29T06:27:28.057

14There's no better answer than this - wget can do anything :3 – Phoshi – 2009-09-16T22:30:53.010

2unfortunately it does not work for me - there is a problem with links to css files, they are not changed to relative i.e., you can see something like this in files: <link rel="stylesheet" type="text/css" href="/static/css/reset.css" media="screen" /> which does not work locally well, unless there is a waz to trick firefox to think that certain dir is a root. – gorn – 2012-07-27T00:42:18.040

7+1 for including the --no-parent. definitely use --mirror instead of -r. and you might want to include -L/--relative to not follow links to other servers. – quack quixote – 2009-10-09T12:43:56.317

1I don't think I've used --mirror myself so I didn't put it the answer. (And it's not really fully "self explanatory" like Paul's answer says...) If you want to elaborate on why it's better than -r I'd appreciate it! – Jonik – 2009-10-09T13:06:02.867

156

Use wget:

wget -m -p -E -k www.example.com

The options explained:

-m, --mirror Turns on recursion and time-stamping, sets infinite

recursion depth, and keeps FTP directory listings.

-p, --page-requisites Get all images, etc. needed to display HTML page.

-E, --adjust-extension Save HTML/CSS files with .html/.css extensions.

-k, --convert-links Make links in downloaded HTML point to local files.

5I tried using your wget --mirror -p --html-extension --convert-links www.example.com and it just downloaded the index. I think you need the -r to download the entire site. – Eric Brotto – 2014-07-14T10:49:20.500

5for those concerned about killing a site due to traffic / too many requests, use the -w seconds (to wait a number of secconds between the requests, or the --limit-rate=amount, to specify the maximum bandwidth to use while downloading – vlad-ardelean – 2014-07-14T18:33:56.900

1@EricBrotto you shouldn't need both --mirror and -r. From the wget man page: "[--mirror] is currently equivalent to -r". – evanrmurphy – 2015-04-16T23:50:30.763

2-p is short for --page-requisites, for anyone else wondering. – evanrmurphy – 2015-04-16T23:59:28.897

2Using wget like this will not get files referenced in Javascript. For example, in <img onmouseout="this.src = 'mouseout.png';" onmouseover="this.src = 'mouseover.png';" src="image.png"> the images for mouseout and mouseover will be missed. – starfry – 2015-05-07T13:40:02.767

1

Does anybody know how can I use wget to get the large images of the listings on ebay? For example I want to get the large images from this link: http://www.ebay.com/sch/i.html?_from=R40&_trksid=p2051542.m570.l1313.TR0.TRC0.H0.X1974+stamps.TRS0&_nkw=1974+stamps&_sacat=0. To be more specific I want the images that are being displayed when you hover the mouse over the image.

– und3rd06012 – 2015-09-12T12:01:43.5201For me it doesn't work as expected, it no recreate all links inside the page in order to be browseable offline – chaim – 2015-11-11T08:54:04.710

1If you only want to download www.example.com/foo, use the --no-parent option. – Cinnam – 2016-09-10T16:43:20.727

This seems to get confused with some hyperlinks: /contact was converted to index.html?p=11 (which it also copied into the top level directory, even though contact/index.html was downloaded too) – hayd – 2017-01-26T04:45:01.480

Ah, these are apparently redirects in wordpress but not resolved by wget, and strangely used in the downloaded html when resolved in the original html (no p= link in the a tag). – hayd – 2017-01-26T04:54:01.990

If you want to output a site running locally, use localhost:4000 (or whatever your port is) and not 127.0.0.1:<your-port> or it won't work—at least it didn't for me. For some reason, it would only get the index.html files for each page in my site and not all the assets, css, etc. – Evan R – 2018-06-09T12:10:11.413

1wget --no-parent --recursive --level=inf --span-hosts --page-requisites --convert-links --adjust-extension --no-remove-listing https://……/…… --directory-prefix=……. There must be someone needs this. – Константин Ван – 2019-02-03T12:35:12.183

I would like to point out that "--span-host" (not --span-hosts) is an important tag to me that I spent some time finding! Without this, links and images that are not in the domain specified will not be backup-ed! – Student – 2019-05-16T02:20:08.973

2If you don’t want to download everything into a folder with the name of the domain you want to mirror, create your own folder and use the -nH option (which skips the host part). – Rafael Bugajewski – 2012-01-03T15:33:11.413

9+1 for providing the explanations for the suggested options. (Although I don't think --mirror is very self-explanatory. Here's from the man page: "This option turns on recursion and time-stamping, sets infinite recursion depth and keeps FTP directory listings. It is currently equivalent to -r -N -l inf --no-remove-listing") – Ilari Kajaste – 2009-09-23T11:04:07.650

2What about if the Auth is required? – Val – 2013-05-13T16:04:36.723

8

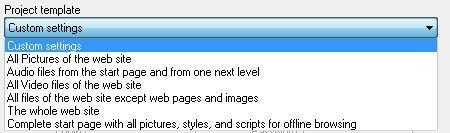

Internet Download Manager has a Site Grabber utility with a lot of options - which lets you completely download any website you want, the way you want it.

You can set the limit on the size of the pages/files to download

You can set the number of branch sites to visit

You can change the way scripts/popups/duplicates behave

You can specify a domain, only under that domain all the pages/files meeting the required settings will be downloaded

The links can be converted to offline links for browsing

You have templates which let you choose the above settings for you

The software is not free however - see if it suits your needs, use the evaluation version.

8

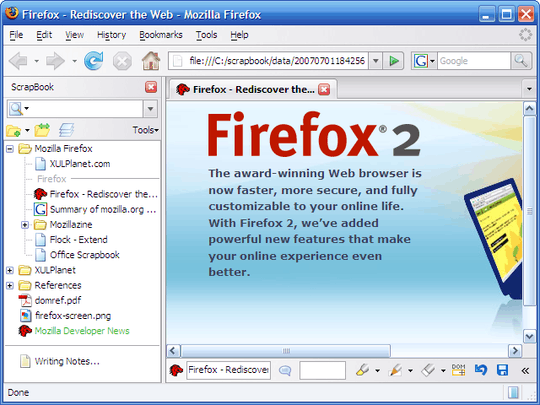

You should take a look at ScrapBook, a Firefox extension. It has an in-depth capture mode.

5No longer compatible with Firefox after version 57 (Quantum). – Yay295 – 2018-04-16T22:31:53.887

7

itsucks - that's the name of the program!

5

I'll address the online buffering that browsers use...

Typically most browsers use a browsing cache to keep the files you download from a website around for a bit so that you do not have to download static images and content over and over again. This can speed up things quite a bit under some circumstances. Generally speaking, most browser caches are limited to a fixed size and when it hits that limit, it will delete the oldest files in the cache.

ISPs tend to have caching servers that keep copies of commonly accessed websites like ESPN and CNN. This saves them the trouble of hitting these sites every time someone on their network goes there. This can amount to a significant savings in the amount of duplicated requests to external sites to the ISP.

5

I like Offline Explorer.

It's a shareware, but it's very good and easy to use.

4

WebZip is a good product as well.

4

I have not done this in many years, but there are still a few utilities out there. You might want to try Web Snake. I believe I used it years ago. I remembered the name right away when I read your question.

I agree with Stecy. Please do not hammer their site. Very Bad.

Nice! I searched for Snake for over 20 years and simply couldn't find it.Though I remember it as free. I remember (or want to believe) it was much much better than what was later on the major download programs suggested on forums or by web search engines.- Only comparable some years later was the early free version of what would later on be available as the the above mentioned OfflineExplorer. The free versions stopped working properly somehow after that. (But I fully understand the programmer.) – John – 2019-11-27T01:22:25.777

3

DownThemAll is a Firefox add-on that will download all the content (audio or video files, for example) for a particular web page in a single click. This doesn't download the entire site, but this may be sort of thing the question was looking for.

It's only capable of downloading links (HTML) and media (images). – Ain – 2017-09-26T17:07:14.843

3

Try BackStreet Browser.

It is a free, powerful offline browser. A high-speed, multi-threading website download and viewing program. By making multiple simultaneous server requests, BackStreet Browser can quickly download entire website or part of a site including HTML, graphics, Java Applets, sound and other user definable files, and saves all the files in your hard drive, either in their native format, or as a compressed ZIP file and view offline.

3

Teleport Pro is another free solution that will copy down any and all files from whatever your target is (also has a paid version which will allow you to pull more pages of content).

3

For Linux and OS X: I wrote grab-site for archiving entire websites to WARC files. These WARC files can be browsed or extracted. grab-site lets you control which URLs to skip using regular expressions, and these can be changed when the crawl is running. It also comes with an extensive set of defaults for ignoring junk URLs.

There is a web dashboard for monitoring crawls, as well as additional options for skipping video content or responses over a certain size.

1

While wget was already mentioned this resource and command line was so seamless I thought it deserved mention:

wget -P /path/to/destination/directory/ -mpck --user-agent="" -e robots=off --wait 1 -E https://www.example.com/

0

Excellent extension for both Chrome and Firefox that downloads most/all of a web page's content and stores it directly into .html file.

I noticed that on a picture gallery page I tried it on, it saved the thumbnails but not the full images. Or maybe just not the JavaScript to open the full pictures of the thumbnails.

But, it worked better than wget, PDF, etc. Great simple solution for most people's needs.

0

You can use below free online tools which will make a zip file of all contents included in that url

0

The venerable FreeDownloadManager.org has this feature too.

Free Download Manager has it in two forms in two forms: Site Explorer and Site Spider:

Site Explorer

Site Explorer lets you view the folders structure of a web site and easily download necessary files or folders.

HTML Spider

You can download whole web pages or even whole web sites with HTML Spider. The tool can be adjusted to download files with specified extensions only.

I find Site Explorer is useful to see which folders to include/exclude before you attempt attempt to download the whole site - especially when there is an entire forum hiding in the site that you don't want to download for example.

-1

download HTTracker it will download websites very easy steps to follows.

download link:http://www.httrack.com/page/2/

video that help may help you :https://www.youtube.com/watch?v=7IHIGf6lcL4

-1 duplicate of top answer – wjandrea – 2017-09-24T07:28:19.210

6Wrong! The question asks how to save an entire web site. Firefox cannot do that. – None – 2016-07-26T06:24:40.040

2Your method works only if it's a one-page site, but if the site has 699 pages? Would be very tiring... – Quidam – 2016-12-15T07:03:15.133

-4

I believe google chrome can do this on desktop devices, just go to the browser menu and click save webpage.

Also note that services like pocket may not actually save the website, and are thus susceptible to link rot.

Lastly note that copying the contents of a website may infringe on copyright, if it applies.

3A web page in your browser is just one out of many of a web site. – Arjan – 2015-05-16T20:05:00.607

@Arjan I guess that makes my option labor intensive. I believe it is more common for people to just want to save one page, so this answer may be better for those people who come here for that. – jiggunjer – 2015-05-17T10:10:51.137

@MenelaosVergis browse-offline.com is gone – user5389726598465 – 2017-07-17T17:58:27.063

Yes, I don't even have the code for that! – Menelaos Vergis – 2017-07-18T04:16:33.443

just FYI please scam!!! do not download from https://websitedownloader.io/ it will ask small amount, which will look convincing but downloads just a webpage, does not even work for plain websites.

– Anil Bhaskar – 2017-12-21T14:11:45.657Try Cyotek best web page scraper for offline viewing.

– Sajjad Hossain Sagor – 2019-02-16T17:34:38.8432

Check out http://serverfault.com/questions/45096/website-backup-and-download on Server Fault.

– Marko Carter – 2009-07-28T13:55:56.160@tnorthcutt, I'm surprised too. If I don't recall awfully wrong, my Wget answer used to be the accepted one, and this looked like a settled thing. I'm not complaining though — all of a sudden the renewed attention gave me more than the bounty's worth of rep. :P – Jonik – 2009-09-17T06:05:59.870

did you try IDM? http://superuser.com/questions/14403/how-can-i-download-an-entire-website/42379#42379 my post is buried down. What did you find missing in IDM?

– Lazer – 2009-09-21T10:30:22.5235@joe: Might help if you'd give details about what the missing features are... – Ilari Kajaste – 2009-09-23T11:06:28.813

browse-offline.com can download the complete tree of the web-site so you can ... browse it offline – Menelaos Vergis – 2014-03-05T13:11:42.160