Singularity

A singularity, as most commonly used, is a point at which expected rules break down. The term comes from mathematics, where a singularity is a point at which an otherwise well-behaved function is undefined or takes an infinite value.

The term has extended into other fields; the most notable use is in astrophysics, where a singularity is a point (usually, but perhaps not exclusively, at the center of a black hole) where the curvature of spacetime approaches infinity.

This article, however, is not about the mathematical or physics uses of the term, but rather the borrowing of it by various futurists. They define a technological singularity as the point beyond which we can know nothing about the world. So, of course, they then write at length on the world after that time. Conveniently, this premise not only allows but justifies anything including large inconsistencies with known principles of science.

Technological singularity

"It's intelligent design for the IQ 140 people. This proposition that we're heading to this point at which everything is going to be just unimaginably different: it's fundamentally, in my view, driven by a religious impulse. And all of the frantic arm-waving can't obscure that fact for me, no matter what numbers he marshals in favor of it. He's very good at having a lot of curves that point up to the right."
(Mitch Kapor on Ray Kurzweil)

In transhumanist and singularitarian belief, the "technological singularity" refers to a hypothetical point beyond which human technology and civilization are no longer comprehensible to the current human mind. The theory states that at some point humans will invent a machine that, through the use of artificial intelligence (AI), will be smarter than any human could ever be. This machine will in turn be capable of inventing new technologies that are smarter still. This event will trigger an exponential explosion of technological advances, whose outcome and effect on humankind are heavily debated by transhumanists and singularitarians.

Some proponents of the theory believe that the machines eventually will see no use for humans on Earth and simply wipe us (useless eaters) out — their intelligence being far superior to humans, there would be probably nothing we could do about it. They also fear that the use of extremely intelligent machines to solve complex mathematical problems may lead to our extinction. The machine may theoretically respond to our question by turning all matter in our Solar System or our galaxy into a giant calculator, thus destroying all of humankind.

Critics, however, believe that humans will never be able to invent a machine that will match human intelligence, let alone exceed it. They also attack the methodology used to "prove" the theory, suggesting that Moore's Law may be subject to the law of diminishing returns, or that other metrics used by proponents to measure progress are totally subjective and meaningless. Theorists like Theodore Modis argue that progress measured in metrics such as CPU clock speeds is slowing, which undercuts extrapolations from Moore's Law.[1] (As of 2015, not only is Moore's Law beginning to stall, Dennard scaling

Adherents see a chance of the technological singularity arriving on Earth within the 21st century. Some of the wishful thinking may simply express a desire to avoid death, since the singularity is supposed to bring the technology to reverse human aging or to upload human minds into computers. However, recent research, supported by singularitarian organizations including MIRI and the Future of Humanity Institute, does not support the hypothesis that near-term predictions of the singularity are motivated by a desire to avoid death. Instead, it provides some evidence that many optimistic predictions about the timing of a singularity are motivated by a desire to "gain credit for working on something that will be of relevance, but without any possibility that their prediction could be shown to be false within their current career".[2][3]

Don't bother quoting Ray Kurzweil to anyone who knows a damn thing about human cognition or, indeed, biology. He's a computer science genius who has difficulty in perceiving when he's well out of his area of expertise.[4]

Three major singularity schools

Eliezer Yudkowsky identifies three major schools of thinking when it comes to the singularity.[5] While all share common ground in advancing intelligence and rapidly developing technology, they differ in how the singularity will occur and the evidence to support the position.

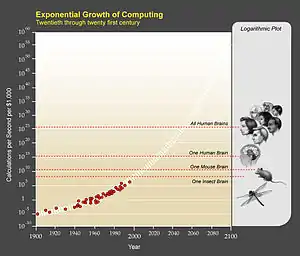

Accelerating change

Under this school of thought, it is assumed that change and development of technology and human (or AI assisted) intelligence will accelerate at an exponential rate. So change a decade ago was much faster than change a century ago, which was faster than a millennium ago. While thinking in exponential terms can lead to predictions about the future and the developments that will occur, it does mean that past events are an unreliable source of evidence for making these predictions.

Event horizon

The "event horizon" school posits that the post-singularity world would be unpredictable. Here, the creation of a super-human artificial intelligence will change the world so dramatically that it would bear no resemblance to the current world, or even the wildest science fiction. Indeed, our modern civilization is simply unimaginable for those living, say, in ancient Greece. This school of thought sees the singularity most like a single point event rather than a process — indeed, it is this thesis that spawned the term "singularity." However, this view of the singularity does treat transhuman intelligence as some kind of magic.

Intelligence explosion

This posits that the singularity is driven by a feedback cycle between intelligence enhancing technology and intelligence itself. As Yudkowsky (who endorses this view) said, "What would humans with brain-computer interfaces do with their augmented intelligence? One good bet is that they’d design the next generation of brain-computer interfaces." When this feedback loop of technology and intelligence begins to increase rapidly, the singularity is upon us.

Techno-faith is still faith

"A singularity is a sign that your model doesn't apply past a certain point, not infinity arriving in real life."
(Forum comment[6])

There is also a fourth singularity school: that it's all a load of bollocks![7] This position is not popular with high-tech billionaires.[8]

It may be worth taking a look at a similar precursor concept developed by the Jesuit priest Pierre Teilhard de Chardin.

Is the Singularity going to happen?

This is largely dependent on your definition of "singularity".

The intelligence explosion singularity is by far the most unlikely. According to present calculations, a hypothetical future supercomputer may well not be able to replicate a human brain in real time, as we presently don't even understand how intelligence works. Proponents of the singularity theory, however, don't usually maintain that advanced AI will consist of human brain replicas; they hold instead that it will develop its own form of intelligence mostly independently.

A more daunting problem is that there is no evidence that intelligence can be made self-iterative in this manner. In fact, it is quite possible that improvements in intelligence become more difficult the smarter you are, so that each successive improvement is harder to execute than the last. Indeed, how much smarter than a human being something can be is an open question.

Energy requirements are another issue: humans can run off of Doritos and Mountain Dew (or Dr. Pepper), while supercomputers require vast amounts of energy to function. Singularity proponents usually address the energy issue by positing that the smarter the AI, the more energy it will be able to extract (with hypothetical structures such as the Dyson sphere often being proposed), but this once again runs into the lack of evidence that intelligence can self-iterate.
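The worry that each improvement in intelligence may be harder than the last can be made concrete with a toy growth model. This is only a sketch: the symbols I (some scalar measure of "intelligence"), k (a rate constant) and α (a "returns" exponent) are assumptions introduced here for illustration and do not come from any of the cited sources.

```latex
% Toy model of self-improvement; I, k and \alpha are illustrative assumptions.
\[
  \frac{dI}{dt} = k\,I^{\alpha}, \qquad I(0) = I_0 > 0, \quad k > 0 .
\]
% Separating variables for \alpha \neq 1 gives
\[
  I(t)^{\,1-\alpha} = I_0^{\,1-\alpha} + (1-\alpha)\,k\,t .
\]
% Three regimes follow:
\[
  I(t) =
  \begin{cases}
    \bigl(I_0^{\,1-\alpha} + (1-\alpha)\,k\,t\bigr)^{\frac{1}{1-\alpha}}
      & \alpha < 1 \quad \text{(diminishing returns: polynomial growth)} \\[6pt]
    I_0\,e^{kt}
      & \alpha = 1 \quad \text{(exponential: finite for every finite } t\text{)} \\[6pt]
    \bigl(I_0^{\,1-\alpha} - (\alpha-1)\,k\,t\bigr)^{-\frac{1}{\alpha-1}}
      & \alpha > 1 \quad \text{(diverges at } t^{*} = \tfrac{I_0^{\,1-\alpha}}{(\alpha-1)\,k}\text{)}
  \end{cases}
\]
```

Under these assumptions, only increasing returns (α > 1) yield a literal finite-time blow-up. If improvements get harder as intelligence rises (α < 1), the same feedback loop produces merely polynomial growth, which is the scenario the paragraph above considers at least as plausible.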

Another major issue arises from the nature of intellectual development: if an artificial intelligence needs to be raised and trained, a long time may pass between generations of artificial intelligences before further improvements appear. More intelligent animals generally seem to take longer to mature, which may put another limit on any such "explosion".

Singularitarians usually respond to this problem by pointing to the trend of accelerating change, but it is questionable whether this trend even exists. For example, the rate of patents per capita actually peaked in the 20th century and has declined slightly since, even though human beings have gotten more intelligent and acquired superior tools. As noted above, Moore's Law is nearing its end, and outside the realm of computers the rate of improvement has not been exponential: airplanes and cars continue to improve, but not at the ridiculous rate of computers. It is likely that once computers hit the physical limits of transistor density, their rate of improvement will fall off dramatically. Already today, computers that are "good enough" continue to operate for many years, something unheard of in the 1990s, when old computers were rapidly and obviously made obsolete by new ones.

Someone like Kurzweil may say that all of this indicates an ongoing paradigm shift, in which the development of old technology (such as cars and planes) stalls while the development of newer, more influential technology (such as AI or quantum computing) speeds up, but claims like that can be seen as a classic example of the No True Scotsman fallacy. Additional claims that attempt to rebut the No True Scotsman interpretation, such as the claim that exponential growth has persisted for millions of years and that slowing growth in some existing technology is therefore more likely to indicate a paradigm shift than a global change in the trend, have not been verified in a systematic, unbiased manner.

François Chollet points out that, despite ever-increasing amounts of money and time spent on scientific research, scientific progress is still roughly linear.[10][11] Despite exponentially increasing numbers of software programmers writing code for exponentially more powerful computers, software still sucks. (See also Wirth's law.) The easy problems have already been solved, and continuing progress requires solving harder and harder problems, which require larger and larger teams of people. "Exponential progress meets exponential friction."

Anyway, in the real world, exponential growth never continues forever, since it will always eventually encounter some type of resource depletion that slows it down, turning it into sigmoidal (or "logistic") growth; claims that this resource depletion will occur only after AI reaches superhuman intelligence have already been addressed. Besides, even when exponential growth does continue forever, it can by definition never reach infinity.
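The difference between exponential and logistic growth is easy to see numerically. The following is a minimal sketch, assuming made-up values for the starting level, growth rate and resource ceiling (`X0`, `RATE` and `CAPACITY` are hypothetical and chosen only for illustration). It shows that the two curves are nearly indistinguishable early on, which is why extrapolating from the early part of a curve says little about where it ends up.

```python
import math


def exponential(t, x0, r):
    """Unbounded exponential growth: x0 * e^(r * t)."""
    return x0 * math.exp(r * t)


def logistic(t, x0, r, capacity):
    """Logistic (sigmoidal) growth starting at x0 and saturating at `capacity`."""
    return capacity / (1.0 + (capacity / x0 - 1.0) * math.exp(-r * t))


# Hypothetical parameters, chosen only for illustration.
X0, RATE, CAPACITY = 1.0, 0.5, 1000.0

if __name__ == "__main__":
    for t in range(0, 41, 5):
        e = exponential(t, X0, RATE)
        s = logistic(t, X0, RATE, CAPACITY)
        print(f"t={t:2d}  exponential={e:14.1f}  logistic={s:8.1f}")
```

With these parameters the two curves agree to within about one percent at small t, after which the logistic curve flattens out just below the ceiling while the exponential keeps climbing. Even so, the exponential value is a finite number at every finite t.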

Problem with Intelligence Explosion

Intelligence explosion is usually thought of as an intelligent program that modifies its own code to become more intelligent, and so further accelerates the process of modification.[12] A few points need to be mentioned:

(a) For a program with a fixed size of n bits, there are only 2^n possible bit strings, so recursive self-improvement within that size cannot continue indefinitely.

(b) No type of recursively self-improving (RSI) software is known to exist, and the assumption that RSI software is even possible is unproven.

(c) It needs massive computing power. Even if RSI is possible, it would require extreme quantities of computing power. At every stage, the program would have to write multiple candidate programs and execute them to check whether they are more intelligent than itself. So either the starting AI is already a highly competent programmer, or an enormous number of programs must be checked. Executing every program takes time and/or power, making the process extremely long and/or power-intensive. Moreover, the AI would have to learn extensively to become competent as an agent in the wider world, and processing that information would require yet more computing power.

(d) It assumes that intelligence is one-dimensional. It is likely that there would be tradeoffs between capabilities in different fields. So program 1 would have to choose among various candidates for program 1.1, some of which are more intelligent than it. How will it do so?

(e) Researcher Yampolskiy has raised the concern that errors could accumulate during self-improvement, stopping the program from functioning at some stage.[13]
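Points (a), (c) and (d) above can be sketched in a few lines. This is an illustration under stated assumptions, not a model of any real system: the evaluation rate, the candidate names and their two-skill scores are all hypothetical. It counts the finite space of n-bit programs, estimates how long a naive generate-and-test search over that space would take, and shows that candidates scored on more than one axis need not have a single "most intelligent" member.

```python
# Illustrative sketch only: the evaluation rate and the candidate scores
# below are made-up assumptions, not measurements of any real system.

SECONDS_PER_YEAR = 31_557_600  # Julian year (365.25 days)


def count_programs(n_bits: int) -> int:
    """Number of distinct bit strings of exactly n_bits bits (point a)."""
    return 2 ** n_bits


def brute_force_years(n_bits: int, evals_per_second: int) -> int:
    """Whole years needed to run every n_bits-bit program once (point c).

    Integer arithmetic is used so that large n_bits cannot overflow a float.
    """
    return count_programs(n_bits) // (evals_per_second * SECONDS_PER_YEAR)


def dominates(a, b) -> bool:
    """True if score vector a is >= b on every axis and > b on at least one."""
    return all(x >= y for x, y in zip(a, b)) and any(x > y for x, y in zip(a, b))


def pareto_front(candidates: dict) -> list:
    """Names of the candidates that no other candidate dominates (point d)."""
    return sorted(
        name
        for name, score in candidates.items()
        if not any(
            dominates(other, score)
            for other_name, other in candidates.items()
            if other_name != name
        )
    )


# Hypothetical successors of "program 1", scored on two made-up skills
# (theorem proving, language use).
CANDIDATES = {"1.1a": (9, 4), "1.1b": (5, 8), "1.1c": (4, 3)}

if __name__ == "__main__":
    print(brute_force_years(64, 10**9))             # years for a 64-bit "program"
    print(len(str(brute_force_years(256, 10**9))))  # digits in the 256-bit figure
    print(pareto_front(CANDIDATES))
```

Even at an assumed billion evaluations per second, exhaustively testing a mere 64-bit search space takes centuries, so any workable process has to prune candidates cleverly rather than enumerate them. In the candidate example, "1.1c" is worse than both alternatives, but "1.1a" and "1.1b" each win on one skill, so picking between them requires some extra preference that a one-dimensional notion of intelligence does not supply.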

Extraterrestrial singularity

Extraterrestrial technological singularities might become evident from acts of stellar/cosmic engineering. One such possibility for example would be the construction of Dyson Spheres that would result in the altering of a star's electromagnetic spectrum in a way detectable from Earth. Both SETI and Fermilab have incorporated that possibility into their searches for alien life.[14][15]
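One way such a spectral change could show up is as excess infrared light: a shell that absorbs starlight must re-radiate the energy at its own, much lower, temperature. The sketch below uses Wien's displacement law to compare where the emission peaks. The shell temperature of 300 K is purely an assumption for illustration, and the function name is hypothetical.

```python
# Wien's displacement law: a black body at temperature T emits most strongly
# at wavelength b / T, where b is Wien's displacement constant.
WIEN_B = 2.897771955e-3  # metre-kelvin (CODATA value)


def peak_wavelength_m(temperature_k: float) -> float:
    """Peak emission wavelength in metres for a black body at temperature_k."""
    if temperature_k <= 0:
        raise ValueError("temperature must be positive")
    return WIEN_B / temperature_k


SUN_LIKE_STAR_K = 5772.0  # approximate effective temperature of the Sun
ASSUMED_SHELL_K = 300.0   # assumption: a shell near room temperature

if __name__ == "__main__":
    for label, temp in (("star", SUN_LIKE_STAR_K), ("shell", ASSUMED_SHELL_K)):
        print(f"{label:5s} T={temp:7.1f} K  peak={peak_wavelength_m(temp) * 1e6:.2f} micrometres")
```

Under these assumptions the star peaks in visible light (around half a micrometre) while the shell peaks near ten micrometres, well into the infrared, so an enclosed star would look like an unusually cool, infrared-bright object.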

A different view of the concept of singularity is explored in the science fiction book Dragon's Egg by Robert Lull Forward, in which an alien civilization on the surface of a neutron star,[note 1] observed by human space explorers, goes from the Stone Age to technological singularity in about an hour of human time. It leaves behind a large quantity of encrypted data for the human explorers, and humanity is expected to need over a million years to even develop the technology to decrypt it.

No definitive evidence of extraterrestrial civilizations has been found as of 2019.[16]

External links

- Science Saturday: The Great Singularity Debate, Eliezer Yudkowsky vs. Massimo Pigliucci, Blogging Heads

- One-Half of a Manifesto, Jaron Lanier, edge.org

- Michael E. Zimmerman. The Singularity: A Crucial Phase in Divine Self Actualization? Cosmos and History: The Journal of Natural and Social Philosophy, Vol 4, No 1-2 (2008)

- Biologist PZ Myers criticizes Kurzweil

- The Myth of a Superhuman AI

- Charles Stross, who recognizes the Singularity is science fiction, wrote Three Arguments Against the Singularity.

- Ramez Naam, another science fiction writer, argues against the Singularity in The Singularity Is Further Than It Appears and Top Five Reasons 'The Singularity' Is A Misnomer

- I Am the Very Model of a Singularitarian

Notes

- No ordinary matter, let alone living things, can exist given the gravitational field strength on the surface of a neutron star. And that's before we talk about highly magnetized neutron stars (magnetars), whose magnetic fields are the strongest in the known Universe, so strong in fact that one could wipe your credit card clean from roughly halfway to the Moon. Keep out!

References

- The Singularity Myth - Theodore Modis

- How We’re Predicting AI—or Failing To - Stuart Armstrong & Kaj Sotala

- Stuart Armstrong- How We're Predicting AI - The Singularity Summit 2012

- PZ Myers' rant on the subject

- Yudkowsky.net - Three Major Singularity Schools

- From the SomethingAwful thread on LessWrong.

- This Week’s Finds (Week 311) - "This week I’ll start an interview with Eliezer Yudkowsky, who works at an institute he helped found: the Singularity Institute of Artificial Intelligence."

- Singularity Schtick: Hi-tech moguls and The New York Times may buy it, but you shouldn't By John Horgan on June 23, 2010

- "God in the machine: my strange journey into transhumanism" The Guardian

- The impossibility of intelligence explosion François Chollet, Nov 27 2017

- The Singularity is not coming - François Chollet, 2012/08/10

- https://wiki.lesswrong.com/wiki/Recursive_self-improvement

- https://arxiv.org/ftp/arxiv/papers/1502/1502.06512.pdf

- Fermilab Dyson sphere searches updated February 26, 2016 D. Carrigan

- When Will We Find the Extraterrestrials? Seth Shostak 2009

- The weird star that totally isn’t aliens is dimming again: The mystery of Tabby’s Star kicks in again. by John Wenz (May 19, 2017) Astronomy Magazine.