No Free Lunch Theorems

No Free Lunch Theorems for Search[1] is the title of a 1995 paper of David H. Wolpert and William G. Macready, and No Free Lunch Theorems for Optimization[2] the title of a follow-up from 1997.

| Part of a convergent series on Mathematics |

| 1+1=11 |

v - t - e |

In these papers, Wolpert and Macready show that for any algorithm, any elevated performance over one class of problems is offset by performance over another class[2], i.e. that on average each algorithm works as well as a blind search.

Intelligent Designers love these No Free Lunch Theorems (often abbreviated to NFL), as they seem to indicate that - for instance - evolutionary algorithms have to get some additional information from somewhere to work.

Usually they neglect the fact that an algorithm has to work only on a certain class of functions, and not on all functions. Wolpert himself has repudiated William Dembski's interpretation of the theorems as lending support to intelligent design.

Mathematical formulation of the Sharpened No Free Lunch Theorem

While the original NFL theorems looked at the class of all functions, the Sharpened NFL theorem is formulated for smaller classes with a special property, i.e., being closed under permutation.

First, some definitions have to be introduced in the way as they seem popular in the literature when talking about the NFL and such. This set of definition can be found - for instance - in a paper[3] of Andrea Valsecchi and Leonardo Vanneschi:

are finite sets.

are finite sets. is a function

is a function- a trace of length m over

and

and  is a sequence of couples

is a sequence of couples  such that

such that  and

and

- a trace is called simple if an element of

appears at most once in it

appears at most once in it - T is the set of all possible traces over the sets

and

and

- a search operator is a function

- g is non-repeating if

(here,

(here,  for the trace

for the trace  , and

, and  )

) - if

is simple and

is simple and  is non-repeating, then also

is non-repeating, then also  is a simple trace. Here

is a simple trace. Here  is the concatenation operator

is the concatenation operator - a deterministic search algorithm

is an application

is an application  with

with  , where g is a search operator.

, where g is a search operator.  is called non-repeating if g is non-repeating

is called non-repeating if g is non-repeating- for convenience, we'll drop the g and speak of a search-algorithm A.

- a deterministic non-repeating search algorithm will be called algorithm for short

is the m-th iteration of A to trace t, defined by

is the m-th iteration of A to trace t, defined by  ,

,

, where

, where  is the empty trace.

is the empty trace.- A set of functions

is called closed under permutation (c.u.p) if

is called closed under permutation (c.u.p) if  and for each permutation

and for each permutation  of

of  (

( ), we have

), we have  .

.

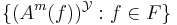

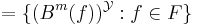

Now, at last, the Sharpened NFL:

Sharpened NFL: Let  be two deterministic non-repeating search algorithms and let

be two deterministic non-repeating search algorithms and let  be c.u.p. Then

be c.u.p. Then

References

- Wolpert, D.H., Macready, W.G. (1995), No Free Lunch Theorems for Search, Technical Report SFI-TR-95-02-010 (Santa Fe Institute).

- Wolpert, D.H., Macready, W.G. (1997), No Free Lunch Theorems for Optimization, IEEE Transactions on Evolutionary Computation 1, 67. http://ic.arc.nasa.gov/people/dhw/papers/78.pdf

- Andrea Valsecchi and Leonardo Vanneschi (2008), A study of Some Implications of the No Free Lunch Theorem, Applications of Evolutionary Computing, Volume 4974/2008, Springer Berlin/Heidelberg, pp. 634-635