28

4

The challenge is to write the fastest code possible for computing the permanent of a matrix.

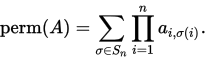

The permanent of an n-by-n matrix A = (ai,j) is defined as

Here S_n represents the set of all permutations of [1, n].

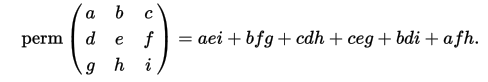

As an example (from the wiki):

In this question matrices are all square and will only have the values -1 and 1 in them.

Examples

Input:

[[ 1 -1 -1 1]

[-1 -1 -1 1]

[-1 1 -1 1]

[ 1 -1 -1 1]]

Permanent:

-4

Input:

[[-1 -1 -1 -1]

[-1 1 -1 -1]

[ 1 -1 -1 -1]

[ 1 -1 1 -1]]

Permanent:

0

Input:

[[ 1 -1 1 -1 -1 -1 -1 -1]

[-1 -1 1 1 -1 1 1 -1]

[ 1 -1 -1 -1 -1 1 1 1]

[-1 -1 -1 1 -1 1 1 1]

[ 1 -1 -1 1 1 1 1 -1]

[-1 1 -1 1 -1 1 1 -1]

[ 1 -1 1 -1 1 -1 1 -1]

[-1 -1 1 -1 1 1 1 1]]

Permanent:

192

Input:

[[1, -1, 1, -1, -1, 1, 1, 1, -1, -1, -1, -1, 1, 1, 1, 1, -1, 1, 1, -1],

[1, -1, 1, 1, 1, 1, 1, -1, 1, -1, -1, 1, 1, 1, -1, -1, 1, 1, 1, -1],

[-1, -1, 1, 1, 1, -1, -1, -1, -1, 1, -1, 1, 1, 1, -1, -1, -1, 1, -1, -1],

[-1, -1, -1, 1, 1, -1, 1, 1, 1, 1, 1, 1, -1, -1, -1, -1, -1, -1, 1, -1],

[-1, 1, 1, 1, -1, 1, 1, 1, -1, -1, -1, 1, -1, 1, -1, 1, 1, 1, 1, 1],

[1, -1, 1, 1, -1, -1, 1, -1, 1, 1, 1, 1, -1, 1, 1, -1, 1, -1, -1, -1],

[1, -1, -1, 1, -1, -1, -1, 1, -1, 1, 1, 1, 1, -1, -1, -1, 1, 1, 1, -1],

[1, -1, -1, 1, -1, 1, 1, -1, 1, 1, 1, -1, 1, -1, 1, 1, 1, -1, 1, 1],

[1, -1, -1, -1, -1, -1, 1, 1, 1, -1, -1, -1, -1, -1, 1, 1, -1, 1, 1, -1],

[-1, -1, 1, -1, 1, -1, 1, 1, -1, 1, -1, 1, 1, 1, 1, 1, 1, -1, 1, 1],

[-1, -1, -1, -1, -1, -1, -1, 1, -1, -1, -1, -1, 1, 1, 1, 1, -1, -1, -1, -1],

[1, 1, -1, -1, -1, 1, 1, -1, -1, 1, -1, 1, 1, -1, 1, 1, 1, 1, 1, 1],

[-1, 1, 1, -1, -1, -1, -1, -1, 1, 1, 1, 1, -1, -1, -1, -1, -1, 1, -1, 1],

[1, 1, -1, -1, -1, 1, -1, 1, -1, -1, -1, -1, 1, -1, 1, 1, -1, 1, -1, 1],

[1, 1, 1, 1, 1, -1, -1, -1, 1, 1, 1, -1, 1, -1, 1, 1, 1, -1, 1, 1],

[1, -1, -1, 1, -1, -1, -1, -1, 1, -1, -1, 1, 1, -1, 1, -1, -1, -1, -1, -1],

[-1, 1, 1, 1, -1, 1, 1, -1, -1, 1, 1, 1, -1, -1, 1, 1, -1, -1, 1, 1],

[1, 1, -1, -1, 1, 1, -1, 1, 1, -1, 1, 1, 1, -1, 1, 1, -1, 1, -1, 1],

[1, 1, 1, -1, -1, -1, 1, -1, -1, 1, 1, -1, -1, -1, 1, -1, -1, -1, -1, 1],

[-1, 1, 1, 1, -1, -1, -1, -1, -1, -1, -1, 1, 1, -1, 1, 1, -1, 1, -1, -1]]

Permanent:

1021509632

The task

You should write code that, given an n by n matrix, outputs its permanent.

As I will need to test your code it would be helpful if you could give a simple way for me to give a matrix as input to your code, for example by reading from standard in.

Be warned that the permanent can be large (the all 1s matrix is the extreme case).

Scores and ties

I will test your code on random +-1 matrices of increasing size and stop the first time your code takes more than 1 minute on my computer. The scoring matrices will be consistent for all submissions in order to ensure fairness.

If two people get the same score then the winner is the one which is fastest for that value of n. If those are within 1 second of each other then it is the one posted first.

Languages and libraries

You can use any available language and libraries you like but no pre-existing function to compute the permanent. Where feasible, it would be good to be able to run your code so please include a full explanation for how to run/compile your code in Linux if at all possible.`

Reference implementations

There is already a codegolf question question with lots of code in different languages for computing the permanent for small matrices. Mathematica and Maple also both have permanent implementations if you can access those.

My Machine The timings will be run on my 64-bit machine. This is a standard ubuntu install with 8GB RAM, AMD FX-8350 Eight-Core Processor and Radeon HD 4250. This also means I need to be able to run your code.

Low level information about my machine

cat /proc/cpuinfo/|grep flags gives

flags : fpu vme de pse tsc msr pae mce cx8 apic sep mtrr pge mca cmov pat pse36 clflush mmx fxsr sse sse2 ht syscall nx mmxext fxsr_opt pdpe1gb rdtscp lm constant_tsc rep_good nopl nonstop_tsc extd_apicid aperfmperf pni pclmulqdq monitor ssse3 fma cx16 sse4_1 sse4_2 popcnt aes xsave avx f16c lahf_lm cmp_legacy svm extapic cr8_legacy abm sse4a misalignsse 3dnowprefetch osvw ibs xop skinit wdt lwp fma4 tce nodeid_msr tbm topoext perfctr_core perfctr_nb cpb hw_pstate vmmcall bmi1 arat npt lbrv svm_lock nrip_save tsc_scale vmcb_clean flushbyasid decodeassists pausefilter pfthreshold

I will ask a closely related follow up multi-language question that doesn't suffer from the big Int problem so lovers of Scala, Nim, Julia, Rust, Bash can show off their languages too.

Leader board

- n = 33 (45 seconds. 64 seconds for n = 34). Ton Hospel in C++ with g++ 5.4.0.

- n = 32 (32 seconds). Dennis in C with gcc 5.4.0 using Ton Hospel's gcc flags.

- n = 31 (54 seconds). Christian Sievers in Haskell

- n = 31 (60 seconds). primo in rpython

- n = 30 (26 seconds). ezrast in Rust

- n = 28 (49 seconds). xnor with Python + pypy 5.4.1

- n = 22 (25 seconds). Shebang with Python + pypy 5.4.1

Note. In practice the timings for Dennis and Ton Hospel's vary a lot for mysterious reasons. For example they seem to be faster after I have loaded a web browser! The timings quoted are the fastest in all the tests I have done.

5I read the first sentence, thought 'Lembik', scrolled down, yep - Lembik. – orlp – 2016-10-22T08:40:08.793

@orlp :) It's been a long time. – None – 2016-10-22T08:40:42.103

One other thing I forgot to ask in sandbox, are submissions limited to using a single thread or are multiple threads allowed? Also, I just noticed you mentioned your GPU type, are you also expecting and allowing submissions to use OpenCL or something similar? Finally, what is the deadline to when you will test our submissions? – miles – 2016-10-22T08:55:31.183

@miles Your code can take advantage of anything my PC has to offer. That is it can use all the cores and anything you can get out of my unimpressive GPU. – None – 2016-10-22T09:11:22.817

Could you please include a bigger test case we can check? – xnor – 2016-10-22T09:49:04.800

@xnor I am away from a computer for 36 hours but hopefully Shebang will post his/her simple python code soon that can be used for testing. Otherwise I will post a bigger example then. – None – 2016-10-22T09:51:17.687

1@Lembik I added a large test case. I'll wait for someone to confirm it to be sure. – xnor – 2016-10-22T09:58:19.953

@Lembik What size of the matrix do you expect? Is it allowed to mix programming languages? – Martin Rosenau – 2016-10-22T10:21:41.273

@MartinRosenau You can mix in any way you like. Please do help me run your code though. It's hard to guess how fast people's code will be but anything more than n=25 is impressive. – None – 2016-10-22T10:25:14.500

Is Mathematica code allowed? – JungHwan Min – 2016-10-22T15:42:00.140

Can we rely on the fact that

In this question matrices are all square and will only have the values -1 and 1 in them.or should solutions be theoretically correct no matter what? – Socratic Phoenix – 2016-10-22T17:38:34.653What is on the

flagsline on your systems/proc/cpuinfo? – Ton Hospel – 2016-10-22T18:05:17.047@JHM Yes although it's not clear what score I can give. Maybe you could compare your code to the python reference code ? – None – 2016-10-22T18:51:36.317

@SocraticPhoenix Ideally your code should be correct for any real number inputs but I won't enforce it. – None – 2016-10-22T18:52:48.997

@TonHospel I will have to tell in 24 hours sorry. I am away from my computer. – None – 2016-10-22T18:53:56.767

2One of the answer prints an approximate result, since it uses double precision floats to store the permanent. Is that allowed? – Dennis – 2016-10-22T22:39:46.757

When you say "I will test your code on random +-1 matrices," I assume you mean matrices in which the entries are independent and identically distributed, from a distribution where -1 and +1 have equal probability? It would be good to be explicit about that, since the structure of the random matrices could conceivably affect the speed of the computation. – Nathaniel – 2016-10-23T03:06:48.800

@Nathaniel Yes that is right. – None – 2016-10-23T06:22:44.663

Dennis Yes the answer outputted should be the exact integer not an approximation. – None – 2016-10-23T06:25:56.673

@SocraticPhoenix Do you know a way to use the fact that matrix entries are restricted to 1 and -1? I searched and thought a lot and didn't find one. (Well, I now use that the entries are not very big...) – Christian Sievers – 2016-10-26T00:42:27.830

1@ChristianSievers I thought I might be able to do some magic with signs, but it didn't pan out... – Socratic Phoenix – 2016-10-26T10:33:02.123