11

2

As if this challenge could be any more Pythonesque in spirit... No prior knowledge of Markov chains or encryption techniques is required.

You are a spy who needs to obtain some crucial information from the British security service M1S. The agents of M1S are well aware that their Wi-Fi signals can be intercepted, their Android/iOS security vulnerabilities exploited etc., so all of them are using Nokia 3310’s to transmit text information which is typed using the T9 auto-completion.

You had previously hacked the phones to be delivered to the intelligence agency and had installed keyloggers under their glorious plastic keyboards, so now you receive sequences of numbers corresponding to the letters they typed, so “the eagle has left the nest alert the agents” becomes

84303245304270533808430637802537808430243687

But wait! Some T9 sequences are ambiguous (“6263” could be “name”, “mane” or “oboe”; the more obscure, the more suspicious it gets!), so what do you do? You know that the only entrance examination M1S uses is summarising Marcel Proust’s masterpiece “Remembrance of Things Past” in 15 seconds, so you want to pick the word that comes next after the previous one according to its frequency distribution in the entire chef-d’œuvre of Proust!

Can you crack the code and obtain what could be the original message?

The principle of T9

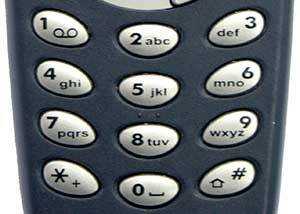

The T9 auto-completion mechanism can be described as follows. It maps alphabetic characters to numbers as shown on the picture above.

abc -> 2

def -> 3

ghi -> 4

jkl -> 5

mno -> 6

pqrs -> 7

tuv -> 8

wxyz -> 9

<space> -> 0

<other> -> <is deleted>

The T9 decryptor receives a sequence of digits and tries to guess the word that could be typed with those keypresses. It might use a standard frequency table, but we are going one step further and predicting the next word using a Markov chain!

Learning sample

The corpus is this heavily stripped version of Proust’s “Remembrance of Things Past” (s/-/ /g, s/['’]s //g and s/[^a-zA-Z ]//g — begone confusing possessive 's!) originally published on the University of Adelaide website (the text of this work is in the Public Domain in Australia).

The whole text must be analysed as one string, as one long sentence, as one long vector of words (whichever is more convenient for your language), stripped of linebreaks, and split into words at spaces. (I do not supply a single-paragraph file because such might be frowned upon by github tools.)

How do I read the whole text as one string / sentence? An example in R:

p_raw <- read.table("proust.txt", sep="\t") # Because there are no tabs

p_vec <- as.character(p_raw$V1) # Conversion to character vector

p_str <- paste(p_vec, collapse=" ") # One long string with spaces

p_spl <- strsplit(p_str, split=" ")[[1]] # Vector of 1360883 words

proust <- p_spl[p_spl!=""] # Remove empty entries — 1360797

Task

Given a sequence of digits as a number, return a possible text string that could be typed using the corresponding T9 keys using a probabilty chain to predict the next word X based on this training text treated as one long sentence.

If X is the first T9-word of the text and there are multiple guesses, pick one at random, otherwise pick the only one possible.

For all subsequent T9-words X(i) preceded by a word already deciphered w(i-1):

- If a T9-word X can be converted into a normal word x in one unique way, do it.

- If there are multiple conversion options available for X, say x1, x2, ..., look up the preceding guessed word w.

- If w is never followed by anything that maps to X in the original work of Proust, pick any of the possible x1, x2, ... at random.

- If w X always corresponds to w x1 in the original and there are no concurrent xi’s that could be mapped into X, pick x1.

- If w X can be converted to w x1, w x2, ... that can be found in the corpus, then count all possible xi’s that follow w and map to X in the corpus and pick xi with probability xi/(x1 + x2 + ...).

Example 2a. If the message is 76630489, where 489 could be guy or ivy (they occur in the corpus at least once), 7663 can be deciphered as some (a very probable first word). If some is never followed by anything that maps to 489 in the corpus, then pick guy or ivy at random with probability 0.5.

Example 2b. If the message is 766302277437, where 2277437 could be barrier or carrier, 7663 can be deciphered as some. If Proust always used some carrier and never some barrier, then pick some carrier.

Example 2c. Suppose that you want to decipher the sequence 536307663. 5363 was predicted as lend. 7663 could be any of these: pond, roof and some. You count the occurrences of the word following lend in the sample corpus. Suppose you get something like this (just to illustrate):

T9 Word following lend Occurrences

7663 some 7

7663 pond 2

7663 roof 1

So if 7663 is preceded by lend, there is 7/(7+2+1)=70% probability that 7663 stands for some, 20% pond and 10% roof. Your algorithm should produce lend some in 70% cases, lend pond in 20% cases etc.

You may safely assume that the agents use only a-z letters and spaces (no punctuation marks, no possessive 's, and no numbers).

You may also assume that the agents of M1S never use any words outside the scope of “Remembrance of Things Past” (which is a whopping vocabulary of 29,237 words!).

The T9 fuctionality was implemented in this challenge, so you might have a look at it.

If you need any help, probabilistic chains were gloriously tamed in this, that and the following challenges, but you do not even need to know the principle of such chains: everything is stated in the task.

Test cases

--Inputs--

20784250276960369

20784250276960369

84303245304270533808430637802537808430243687

94280343084306289072908608430262780482737

94280343084306289072908608430262780482737

--Possible outputs--

c quick brown fox

a stick crown fox

the eagle gas left the nest blest vie agents

what did the navy pay to the coast guards

what did the navy raz un the coast guards

Rules:

- Standard loopholes apply.

- You do not know the original message, all you get is a sequence of digits and the file proust.txt that you just need to load in the memory / workspace / whatever. There is no need to have anything self-contained; assume

proust.txtis always accessible. - Your algorithm must be able to produce different outputs with respective probabilities if more than one decryption option is probable according to the corpus (see Example 2c).

You need to stay as discreet as possible, so the shortest code wins!

P.S. The obvious benefit of this probabilistic algorithm is the fact that the probability you will get a true original string for an ambiguous deciphered string tends to one — just wait...

P.P.S. See also Prediction by Partial Matching.

Peter Taylor’s remarks from the sandbox were taken into account. Sadly, very few people responded during the week it had been posted there despite multiple updates, so any suggestions are welcome! BTW, this is my first challenge! – Andreï Kostyrka – 2016-09-07T00:49:35.573

I suspect that a big reason you didn't get many responses is because of the advanced knowledge needed to understand this problem. If you are wanting this challenge to appeal to a larger crowd, I'd recommend including some earlier examples that show the markov chain at work :) – Nathan Merrill – 2016-09-07T00:56:01.373

@NathanMerrill OK, I added 3 links to sample challenges. However, a user need not know Markov chains at all because the task is described in the question body as algorithmically as possible: if X, do Y with probabilities obtained by calculating Z in this learning sample. I tried to make it as self-sufficient as it gets... – Andreï Kostyrka – 2016-09-07T01:02:24.643

Oh, I understand. If you didn't explain it, I would have voted to close it. It just looks like it needs advanced knowledge :) – Nathan Merrill – 2016-09-07T01:03:14.227

@NathanMerrill Well, verbosity will kill me some day... – Andreï Kostyrka – 2016-09-07T01:05:10.667

1I like this challenge, but I haven't had time to sit down and create/golf a solution yet. Hopefully that will happen soon. – Mego – 2016-09-07T08:54:05.423

Reference that might be of interest for this challenge: Prediction by Partial Matching

– Arnauld – 2016-09-07T10:01:04.193IMHO, a dictionary of 29K words looks excessive for this format. It's already more than 100K once zipped and I don't feel like building a trie or a DAG on so many entries. I'd suggest to limit the training text to a few relevant paragraphs. – Arnauld – 2016-09-07T10:20:16.540

@Arnauld Referenced that PPM article. However, the dictionary must be so huge because of the rule 2c: if there are multiple decryption options possible and such sequences had occurred in the text before... There is very low probability that the exact two words go enought times one after another in the learning text (except such popular cases as “said to”). Proust never wrote “brown fox”. I wish I could make the learning sample larger just to estimate the probabilities of the real-world language and thought “RoTP” was big enough (in fact, it is not... where’s my “brown fox”, then?). – Andreï Kostyrka – 2016-09-07T10:33:16.377

Are we expected to encode the data from the Proust corpus into our solution? Given the size of the text file, that would seem to bias the solutions toward data compression rather than the word selection aspects of the task. On the other hand, it the text file is an assumed input, the solutions could focus on building and using a data structure to perform the steps as stated in the task. – RootTwo – 2016-09-09T18:09:50.080

@RootTwo No, no! It will suffice to provide a function that outputs the deciphered text on the fly. You are encouraged to read and find occurrences in

proust.txtonly as a necessary step for prediction... there is no need to output a data structure, a matrix of probabilities or a matrix of follow-ups and precedences between any two words. Your function may “forget” what it had calculated after outputting a string. Seems that I should post my own (non-competing) solution in order to encourage other people... – Andreï Kostyrka – 2016-09-09T19:28:11.737@Andrei My solution is a function of two parameters, the message to decode and the file name of the corpus ("proust.txt"). It reads the corpus and then decodes and prints the message. My question was whether that would be an acceptable answer. Can the solution read proust.txt or does the solution need to be self contained? – RootTwo – 2016-09-09T21:09:52.597

@RootTwo I should update the specification: the solution may read

proust.txt— and in fact it should. Therefore, you may treat the fileproust.txtas a pre-existing set of strings that you just need to load in the memory / workspace / whatever. There is no need to have anything self-contained; assumeproust.txtis always accessible. – Andreï Kostyrka – 2016-09-10T00:35:58.350