25

9

Domain name trading is big business. One of the most useful tools for domain name trading is an automatic appraisal tool, so that you can easily estimate how much a given domain is worth. Unfortunately, many automatic appraisal services require a membership/subscription to use. In this challenge, you will write a simple appraisal tool that can roughly estimate the values of .com domains.

Input / Output

As input, your program should take a list of domain names, one per line. Each domain name will match the regex ^[a-z0-9][a-z0-9-]*[a-z0-9]$, meaning that it is composed of lowercase letters, digits, and hyphens. Each domain is at least two characters long and neither begins nor ends with a hyphen. The .com is omitted from each domain, since it is implied.

As an alternative form of input, you can choose to accept a domain name as an array of integers, instead of a string of characters, as long as you specify your desired character-to-integer conversion.

Your program should output a list of integers, one per line, which gives the appraised prices of the corresponding domains.

Internet and Additional Files

Your program may have access to additional files, as long as you provide these files as part of your answer. Your program is also allowed to access a dictionary file (a list of valid words, which you don't have to provide).

(Edit) I have decided to expand this challenge to allow your program to access the internet. There are a couple restrictions, being that your program cannot look up the prices (or price histories) of any domains, and that it only uses pre-existing services (the latter to cover up some loopholes).

The only limit on total size is the answer size limit imposed by SE.

Example input

These are some recently-sold domains. Disclaimer: Although none of these sites seem malicious, I do not know who controls them and thus advise against visiting them.

6d3

buyspydrones

arcader

counselar

ubme

7483688

buy-bikes

learningmusicproduction

Example Output

These numbers are real.

635

31

2000

1

2001

5

160

1

Scoring

Scoring will be based on "difference of logarithms." For example, if a domain sold for $300 and your program appraised it at $500, your score for that domain is abs(ln(500)-ln(300)) = 0.5108. No domain will have a price less than $1. Your overall score is your average score for the set of domains, with lower scores better.

To get an idea what scores you should expect, simply guessing a constant 36 for the training data below results in a score of about 1.6883. A successful algorithm has a score less than this.

I chose to use logarithms because the values span several orders of magnitude, and the data will be filled with outliers. The use of absolute difference instead of difference squared will help reduce the effect of outliers in scoring. (Also, note that I am using the natural logarithm, not base 2 or base 10.)

Data Source

I have skimmed a list of over 1,400 recently sold .com domains from Flippa, a domain auction website. This data will make up the training data set. After the submission period is over, I will wait an additional month to create a test data set, with which the submissions will be scored. I might also choose to collect data from other sources to increase the size of the training/test sets.

The training data is available at the following gist. (Disclaimer: Although I have used some simple filtering to remove some blatantly NSFW domains, several might still be contained in this list. Also, I advise against visiting any domain you don't recognize.) The numbers on the right-hand side are the true prices. https://gist.github.com/PhiNotPi/46ca47247fe85f82767c82c820d730b5

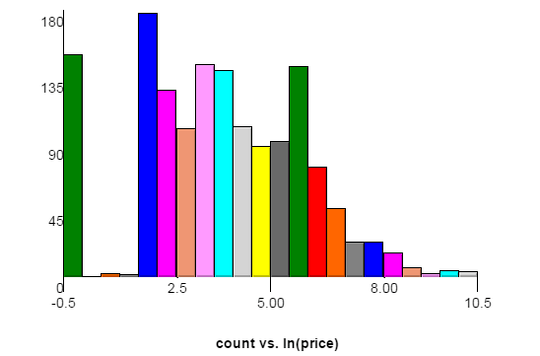

Here is a graph of the price distribution of the training data set. The x-axis the the natural log of price, with y-axis being count. Each bar has a width of 0.5. The spikes on the left correspond to $1 and $6 since the source website requires bids to increment at least $5. The test data may have a slightly different distribution.

Here is a link to the same graph with a bar width of 0.2. In that graph you can see spikes at $11 and $16.

Just a heads up, using the most common digraphs to score a domain works abysmally! R² ≅ 0 – None – 2016-07-05T17:29:00.370

2Someone obviously needs to do a neural network answer for this. – user48538 – 2016-07-06T17:31:05.533

1Can the program link to the internet (say, query google)? Explicitly not for the purpose of looking up prices of course, but for collecting data to use as a characteristic. – Joe – 2016-07-08T19:43:03.670

@Joe Sorry I haven't had a chance to respond, but I have decided to allow internet access. – PhiNotPi – 2016-07-11T17:52:28.643