73

13

In this code-challenge you will write a hash function in 140 bytes1 or less of source code. The hash function must take an ASCII string as input, and return a 24-bit unsigned integer ([0, 224-1]) as output.

Your hash function will be evaluated for every word in this large British English dictionary2. Your score is the amount of words that share a hash value with another word (a collision).

The lowest score wins, ties broken by first poster.

Test case

Before submitting, please test your scoring script on the following input:

duplicate

duplicate

duplicate

duplicate

If it gives any score other than 4, it is buggy.

Clarifying rules:

- Your hash function must run on a single string, not a whole array. Also, your hash function may not do any other I/O than the input string and output integer.

- Built-in hash functions or similar functionality (e.g. encryption to scramble bytes) is disallowed.

- Your hash function must be deterministic.

- Contrary to most other contests optimizing specifically for the scoring input is allowed.

1 I am aware Twitter limits characters instead of bytes, but for simplicity we will use bytes as a limit for this challenge.

2 Modified from Debian's wbritish-huge, removing any non-ASCII words.

Are seeded

randfunctions allowed, or are they considered "similar functionality"? – Doorknob – 2016-04-01T11:05:21.077@Doorknob I would consider them similar functionality. It is intended you scramble the input using your own code, and not through some side effect of other hashing algorithms, crypto, rng, compression, etc. – orlp – 2016-04-01T11:06:12.530

11

Llanfairpwllgwyngyllgogerychwyrndrobwllllantysiliogogogoch's? What the...? – Luis Mendo – 2016-04-01T11:39:40.6438

@DonMuesli https://en.wikipedia.org/wiki/Llanfairpwllgwyngyll (fun fact: that word is also in Jelly's built-in compression dictionary)

– Martin Ender – 2016-04-01T11:40:41.3001

Relevant: http://140byt.es/

– ASCII-only – 2016-04-01T12:29:05.957@orlp What domain does the hash function have to be defined on? Is it only required to work for words in the dictionary? Or does it have to work with inputs not in that dictionary as well? – kasperd – 2016-04-01T13:04:37.133

This is a lovely challenge! – None – 2016-04-01T14:58:48.133

8I think you should disallow built-in dictionaries. – Dennis – 2016-04-01T15:29:52.963

Per the spec in the question, a valid hash should cover the inclusive range

[0, 2**24-1], but your own submission uses%(2**24-1), which covers the range[0, 2**24-2], sincen%n(in this casenis2**24-1) returns0. Could you clarify the challenge's specifications more, please? – mbomb007 – 2016-04-01T16:22:37.6002I estimate that a random oracle would score around 6832 on this challenge. Anything better than that has to be optimized for the dictionary used for scoring. Any decent hash function will score around the same value. There will be some variation in score depending on the exact constants used in the hashing algorithm, thus it is possible to do a little better than 6832, but if you want to do significantly better than that, you have to an approach that is much more targeted towards the specific dictionary. – kasperd – 2016-04-01T16:43:36.710

4For reference: Taking the 24 MSB of SHA-512 would achieve a score of 6816. – Dennis – 2016-04-01T17:26:41.587

@Dennis I interpret built-in dictionaries as being disallowed by clarifying rule #1. I consider that to be extra I/O, as you're reading a nontrivial amount of extra information outside of the input string. – orlp – 2016-04-01T22:39:41.390

3

@kasperd That's correct, this challenge however is not about finding a generic hash function, it's about finding a perfect hash function.

– orlp – 2016-04-01T22:40:20.173@mbomb007 That's a mistake, my submission had a bug. The specs are still authoritative. – orlp – 2016-04-01T22:41:19.367

@kasperd It's not required that the hash function works on elements not inside the dictionary, although I don't see why that would matter for any valid implementation (dictionary lookups are considered extra I/O, even when the dictionary is a builtin in your language). – orlp – 2016-04-01T22:43:59.273

@orlp If the function is only required to work for words in the dictionary, then the modulus operation in your answer is redundant. – kasperd – 2016-04-01T22:57:01.377

1Not going to post as an answer because it definitely should be disallowed (the hash function should not use mutable global state, or something like that), but I had some fun writing this:

H=lambda s,i=[1],d={}:d[s] if s in d else (i[0],d.__setitem__(s,i[0]),i.__setitem__(0,i[0]+1))[0]- score is 0. – Random832 – 2016-04-02T07:25:56.830@Random832 Global state is considered extra I/O. – orlp – 2016-04-02T08:27:18.650

2@orlp That's an unreasonable definition of "I/O". You're not reading it, you're maintaining it in memory. The text of the clarification isn't sufficient to conclude that "I/O" is meant to include things that are not I/O. The text of the clarification is clearly targeted at hash functions that open the file again themselves. – Random832 – 2016-04-02T08:40:34.297

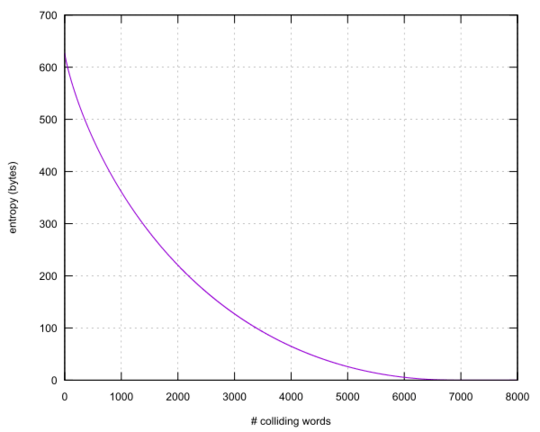

10Some back-of-the-envelope calculations: With

D=340275words andR=2^24hash outputs, a random hash has an expectedD^2/(2*R) = 3450colliding pairs, some of which overlap. There are an expectedD^3/(6*R^2) = 23colliding triples and a negligible number of larger collisions, which means these triples are likely disjoint. This gives an expected6829words that share a hash value, ~70in triples and the rest in pairs. The standard deviation is estimated at118, so getting<6200with a random hash is roughly a 5 sigma event. – xnor – 2016-04-02T17:57:13.487@Random832 A hash function is supposed to produce the same output each time it is given the same input. That needs to be true even across invocations of the program. So after the program has been stopped and started again, your hash function still needs to reproduce the same hash output given the same input. That means the global state cannot help you, because you are required to give the same result, even when given a new clean version of the global state. – kasperd – 2016-04-02T20:03:32.103

@orlp The point of my calculations was to reason about what was needed for an entry to be competitive. Those calculations lead me to the conclusion, that a generic hash function cannot be competitive, not even if you tweak the parameters. So if I wanted a better score, I would have to do something else (which I did). And the calculation also shows that any answer with a generic hash function and a score less than 6000 is essentially guaranteed to be flawed. – kasperd – 2016-04-02T20:06:47.667

@xnor Those numbers appear to be consistent with my rough estimate in an earlier comment. Nice to know the standard deviation as well. With those numbers I can be even more confident when I point out answers with an unrealistic score. – kasperd – 2016-04-02T20:10:24.110

3 of spades! Yay! – CalculatorFeline – 2016-04-02T21:10:17.860

@kasperd You're misinterpreting the question. You can do significantly better than the random oracle, and that's the point of this question. Please read up on perfect hash functions. This question is not about generic hash functions.

– orlp – 2016-04-02T22:28:38.2002@orlp You are totally not getting the point. There have been numerous answers providing a generic hash function with no targeting for the specific dictionary which still claimed a score in the ballpark around 3600. As long as the answer is a generic hash function where you have perhaps tweaked some of the parameters you will get a score within a few standard deviations of the expected value, there is no way such an entry can score less than 6000. – kasperd – 2016-04-02T22:34:09.577

@kasperd All right, I haven't seen those answers, so I was unaware of that. – orlp – 2016-04-02T22:35:05.880

@orlp That's probably because each time it happens there is somebody around to point it out in comments, and the answer gets fixed or deleted. It has happened frequently enough that the question even got edited to warn about it. Not that it helped, I have seen at least 3 of them after the warning was added to the question. – kasperd – 2016-04-02T22:39:23.423

Oh cool, my generic hash function comes to 6825, so slightly better than expected. But given the other contenders, I’m not bothering to reimplememnt it in a short language (I used the shell implementation for easiness of testing). – mirabilos – 2016-04-04T08:05:06.973

@mirabilos If you feel your code can be golfed to within the limit, you may submit a link with the same for someone else to do so. – ghosts_in_the_code – 2016-04-04T13:48:30.620